Why Vanar Chain Treats Data Latency as an Economic Problem, Not a Technical One

When I first looked at Vanar Chain, I expected the usual conversation about speed. Faster blocks. Lower latency. Bigger throughput charts. What caught me off guard was that latency barely showed up as a bragging point. Instead, it kept reappearing as something quieter, almost uncomfortable. A cost. An economic leak. A pressure point that compounds over time.

Most blockchains still talk about latency as a technical inconvenience. Something engineers smooth out with better hardware or tighter consensus loops. That framing made sense when chains mostly moved tokens between people. But the moment you look at systems that operate continuously, especially AI-driven ones, latency stops being a delay and starts becoming friction you pay for again and again.

Think about what latency really is underneath. It is waiting. Not just for confirmation, but for information to settle before the next action can happen. On the surface, that might look like 400 milliseconds versus 1.2 seconds. In isolation, that difference feels small. But when actions depend on previous state, and decisions chain together, those milliseconds stack into real economic drag.
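A minimal Python sketch of that stacking, assuming each decision must wait for the previous one to settle so per-step latencies simply add up. The 400 ms and 1.2 s figures come from the text; the step counts and the `chained_wait_ms` helper are illustrative, not anything Vanar exposes.

```python
def chained_wait_ms(step_latency_ms: int, steps: int) -> int:
    """Total waiting time when each action must wait for the previous one to settle.

    Assumption: steps are strictly sequential and every step pays the same latency.
    """
    return step_latency_ms * steps


# Compare the two latency figures from the text over longer and longer decision chains.
for latency in (400, 1200):
    for steps in (1, 10, 100):
        total_s = chained_wait_ms(latency, steps) / 1000
        print(f"{latency} ms x {steps} steps = {total_s:.1f} s")
```

Under these assumptions the per-step gap of 0.8 seconds becomes 80 seconds over 100 dependent decisions.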

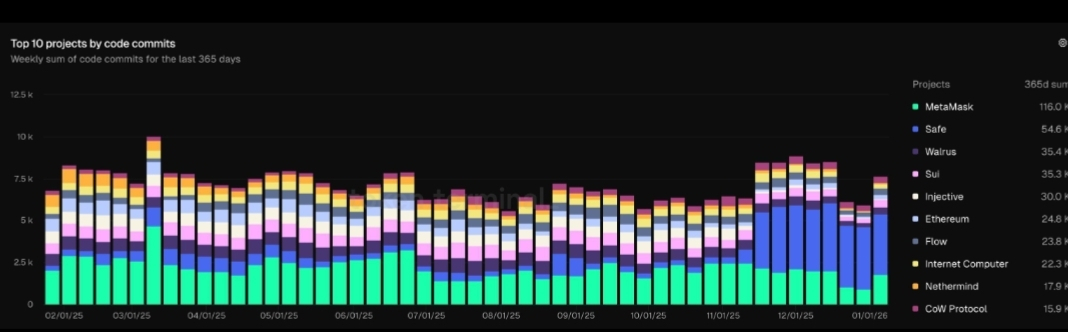

Early signs across the market already show this.

Automated trading systems on-chain routinely lose edge not because strategies are bad, but because execution lags state changes. If a system recalculates risk every second and each update arrives late, capital allocation drifts off target. A few basis points here and there turn into measurable losses across thousands of cycles.
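A back-of-envelope sketch of how small slippage accumulates. The amount traded per cycle, the 2 bps lag cost, the cycle count, and the `cumulative_lag_cost` helper are all hypothetical, and the model deliberately ignores compounding and market impact.

```python
def cumulative_lag_cost(traded_per_cycle: float, lag_cost_bps: float, cycles: int) -> float:
    """Dollar cost of executing against stale state, summed over many cycles.

    Assumptions (hypothetical): every cycle trades the same amount, and each
    trade loses the same fixed number of basis points to execution lag.
    1 basis point = 0.01% = 1/10_000.
    """
    per_cycle = traded_per_cycle * lag_cost_bps / 10_000
    return per_cycle * cycles


# Hypothetical: $50,000 rebalanced per cycle, 2 bps lost to lag each time.
print(f"per cycle: ${cumulative_lag_cost(50_000, 2, 1):,.2f}")
print(f"over 5,000 cycles: ${cumulative_lag_cost(50_000, 2, 5_000):,.2f}")
```

With these made-up numbers, $10 lost per cycle turns into $50,000 over 5,000 cycles.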

Vanar seems to start from that uncomfortable math. Latency is not something you tune away later. It shapes incentives from the beginning. If your infrastructure forces delays, participants either slow down or overcompensate. Both cost money.

On the surface, Vanar still processes transactions. Blocks still finalize. Validators still do their job. But underneath, the design treats state continuity as an asset. Data is not just written and forgotten. It remains close to where decisions are made. That proximity changes how fast systems can react, but more importantly, it changes what kinds of systems are economically viable.

Take AI agents as an example, because they make the tradeoff visible. An AI system that updates its internal state every 500 milliseconds behaves very differently from one that updates every 3 seconds. At 500 milliseconds, the system can adapt smoothly. At 3 seconds, it starts buffering decisions, batching actions, or simplifying logic. That simplification is not free. It reduces precision.
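To see why update frequency matters, here is a toy simulation, assuming the agent can only refresh its view of a moving target at fixed intervals and holds its last reading in between. The sine-wave "market", the 60-second horizon, and the `mean_tracking_error` helper are illustrative assumptions; the point is only the relative gap between 500 ms and 3 s updates.

```python
import math


def mean_tracking_error(update_interval_s: float, horizon_s: float = 60.0, dt: float = 0.1) -> float:
    """Mean absolute gap between a moving target and an agent that only refreshes
    its view every `update_interval_s` seconds, holding the last value in between.

    Assumption: the target is a smooth sine wave with a 20-second period; this is
    an illustration, not a model of any real chain or market.
    """
    steps = int(round(horizon_s / dt))
    last_seen = 0.0
    next_update = 0.0
    total_error = 0.0
    for i in range(steps):
        t = i * dt
        target = math.sin(2 * math.pi * t / 20.0)
        # Small tolerance so floating-point time steps don't skip a scheduled refresh.
        if t >= next_update - 1e-9:
            last_seen = target  # the agent refreshes its internal state
            next_update += update_interval_s
        total_error += abs(target - last_seen)
    return total_error / steps


for interval in (0.5, 3.0):
    print(f"update every {interval} s -> mean error {mean_tracking_error(interval):.3f}")
```

The slower agent is not wrong, just consistently behind, which is exactly the imprecision that forces it to buffer or simplify.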

Precision has a price. So does imprecision.

What struck me is how Vanar seems to acknowledge this without overselling it. Instead of advertising raw TPS numbers, the architecture keeps pointing back to memory, reasoning, and persistence. Those words sound abstract until you map them to cost curves.

Imagine an automated treasury system managing $10 million in stable assets. If latency forces conservative buffers, it might keep 5 percent idle to avoid timing risk. That is $500,000 doing nothing. If lower latency and tighter state continuity let that buffer shrink to 2 percent, only $200,000 sits idle, and $300,000 suddenly becomes productive capital. No new yield strategy required. Just better timing.
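The treasury arithmetic, written out as a small sketch. The `idle_capital` helper is hypothetical; the 5 and 2 percent buffers are the example's own figures, and the code says nothing about which latency level justifies which buffer.

```python
def idle_capital(treasury: float, buffer_pct: float) -> float:
    """Capital held back as a timing buffer (hypothetical helper)."""
    return treasury * buffer_pct / 100


treasury = 10_000_000
before = idle_capital(treasury, 5)  # 500,000 idle under a 5% buffer
after = idle_capital(treasury, 2)   # 200,000 idle under a 2% buffer
freed = before - after              # 300,000 freed for productive use

print(f"idle before: ${before:,.0f}, idle after: ${after:,.0f}, freed: ${freed:,.0f}")
```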

Now scale that logic across dozens of systems, each making small concessions to delay. The economic effect becomes structural.

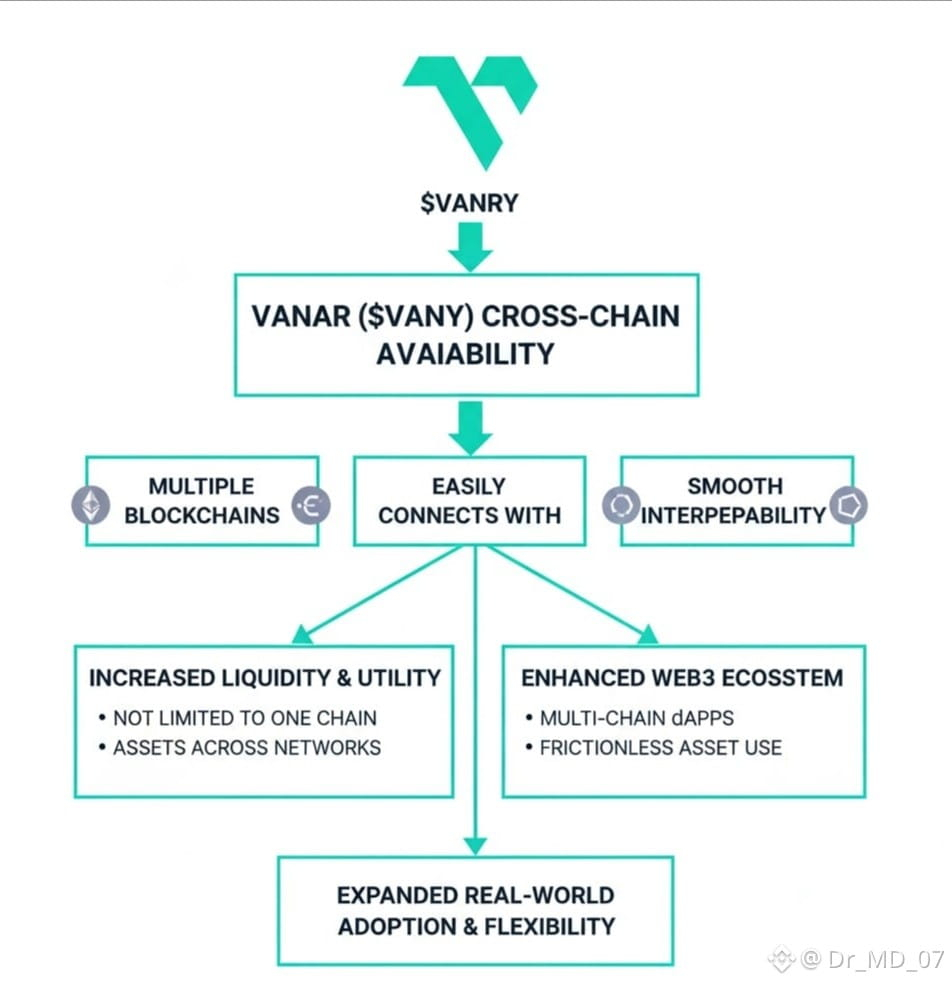

This is where Vanar’s approach starts to diverge from chains that bolt AI narratives on later. Many existing networks rely on stateless execution models. Each transaction arrives, executes, and exits. The chain forgets context unless it is explicitly reloaded. That design keeps things clean, but it pushes complexity upward.

Developers rebuild memory off-chain. AI agents rely on external databases. Latency sneaks back in through side doors.

Vanar seems to pull some of that complexity back into the foundation. Not by storing everything forever, but by acknowledging that decision-making systems need continuity. That continuity reduces round trips. Fewer round trips mean fewer delays. Fewer delays mean tighter economic loops.
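A toy model of the round-trip argument, assuming a stateless design must reload context from an off-chain store once per action while a design with on-chain continuity skips that step. `LatencyModel`, the helper functions, and the 400 ms / 150 ms figures are hypothetical, not a description of how Vanar actually stores or serves state.

```python
from dataclasses import dataclass


@dataclass
class LatencyModel:
    """Hypothetical per-action costs, in milliseconds."""
    settle_ms: int  # time for the action itself to execute and settle
    fetch_ms: int   # one round trip to an external store to reload context


def round_trips(actions: int, context_on_chain: bool) -> int:
    """Extra context-reload round trips needed for a run of actions.

    Assumption: without on-chain continuity, every action first reloads its
    context from an off-chain database (one round trip each).
    """
    return 0 if context_on_chain else actions


def total_latency_ms(model: LatencyModel, actions: int, context_on_chain: bool) -> int:
    reloads = round_trips(actions, context_on_chain)
    return actions * model.settle_ms + reloads * model.fetch_ms


model = LatencyModel(settle_ms=400, fetch_ms=150)
for on_chain in (False, True):
    ms = total_latency_ms(model, actions=100, context_on_chain=on_chain)
    print(f"context on chain: {on_chain} -> {ms / 1000:.1f} s for 100 actions")
```

Here 100 actions take 55 seconds with per-action reloads and 40 seconds without them; the difference is pure waiting.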

Of course, there are risks here. Persistent state increases surface area. It can complicate upgrades. It raises questions about validator load and long-term storage cost. If the approach holds up, Vanar will need careful governance around pruning, incentives, and scaling. Treating latency as an economic variable does not magically eliminate tradeoffs. It just makes them explicit.

And that explicitness matters, especially now. The market is shifting away from speculative throughput races. In the last cycle, chains advertised peak TPS numbers that rarely materialized under real load. Meanwhile, real-world applications quietly struggled with timing mismatches. Bridges stalled. Oracles lagged. Bots exploited gaps measured in seconds.

Right now, capital is more cautious. Liquidity looks for systems that leak less value in day-to-day operation. That changes what matters. A chain that saves users 0.2 seconds per transaction is nice. A chain that saves systems from structural inefficiency is something else.

Another way to see this is through fees, even when fees are low. If a network charges near-zero transaction costs but forces developers to run heavy off-chain infrastructure to compensate for latency, the cost does not disappear. It moves. Servers, monitoring, redundancy. Someone pays.
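A sketch of the "cost moves, it does not disappear" point, assuming monthly operating cost is simply on-chain fees plus off-chain infrastructure spent compensating for latency. All figures and the `monthly_cost` helper are hypothetical.

```python
def monthly_cost(tx_count: int, fee_per_tx: float, offchain_infra: float) -> float:
    """Total monthly cost of running a system.

    `offchain_infra` bundles servers, monitoring, and redundancy run to work
    around latency (hypothetical parameter, in dollars per month).
    """
    return tx_count * fee_per_tx + offchain_infra


# Hypothetical setups processing one million transactions a month.
cheap_fees = monthly_cost(1_000_000, fee_per_tx=0.001, offchain_infra=4_000)
less_scaffolding = monthly_cost(1_000_000, fee_per_tx=0.003, offchain_infra=1_000)
print(f"near-zero fees, heavy off-chain infra: ${cheap_fees:,.0f}")
print(f"higher fees, light off-chain infra:    ${less_scaffolding:,.0f}")
```

In this made-up comparison, the setup with three times the per-transaction fee is still cheaper overall because it needs less off-chain scaffolding.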

Vanar’s framing suggests those costs should be accounted for at the protocol level. Not hidden in developer overhead. Not externalized to users. That does not guarantee success, but it aligns incentives more honestly.

Meanwhile, the broader pattern becomes clearer. Blockchains are slowly shifting from being record keepers to being coordination layers for autonomous systems. Coordination is sensitive to time. Humans tolerate delays. Machines exploit them.

If AI agents become more common participants, latency arbitrage becomes a dominant force. Systems with slower state propagation will bleed value to faster ones. Not dramatically at first. Quietly. Steadily.

That quiet erosion is easy to ignore until it compounds.
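A sketch of how a steady leak adds up, assuming a system loses a fixed fraction of its value each period to faster counterparties. The 0.1 percent daily leak and the `value_after_leak` helper are hypothetical.

```python
def value_after_leak(initial: float, leak_per_period: float, periods: int) -> float:
    """Value remaining after losing a fixed fraction each period (compounded).

    Assumption: the leak rate is constant and applies to whatever value remains.
    """
    return initial * (1 - leak_per_period) ** periods


# Hypothetical: 0.1% lost per day to latency arbitrage, over one year.
remaining = value_after_leak(100.0, 0.001, 365)
print(f"after 365 days: {remaining:.1f}% of starting value remains")
```

A 0.1 percent daily leak, invisible on any single day, leaves roughly 69 percent of the starting value after a year.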

What Vanar is really betting on is that future value creation depends less on peak performance and more on sustained responsiveness. Not speed for marketing slides, but speed that holds under continuous decision-making.

Whether that bet actually pays off is still an open question. But the early signals feel real. The people paying attention are not just chasing yield dashboards or short-term metrics; they are builders thinking about what has to work day after day. That said, none of this matters if the system cannot hold up under pressure. Ideas only survive when the chain stays steady, secure, and cheap enough to run without constant compromises.

But the shift in perspective itself feels earned.

Latency is no longer just an engineering inconvenience. It is a tax on intelligence.

And in a world where machines increasingly make decisions, the chains that understand that early may quietly set the terms for everything built on top of them.