Data has become the primary competitive resource of the digital era, yet most blockchain applications still treat storage as an inconvenient afterthought. Computation is transparent and verifiable, but the files that actually matter for modern products remain scattered across brittle infrastructure. Media assets, model checkpoints, training corpora, analytics logs, user-generated content, and application archives are the real weight of Web3 and AI systems, and they are rarely handled with the same integrity guarantees as onchain state.

This is the exact gap @walrusprotocol aims to close. Walrus is designed as a decentralized storage and data availability network optimized for large unstructured files. Instead of pretending that every byte belongs directly inside a smart contract, Walrus treats storage as a first-class primitive with cryptographic accountability, economic incentives, and retrieval performance that can support serious applications. The result is a storage layer that is not merely an accessory, but a composable backbone for builders who want to ship products that remain durable under stress.

Walrus is best understood as an engineering answer to a market reality: users want verifiable ownership and censorship resistance, but they also want rich experiences built on heavy data. If the storage layer cannot keep up, everything else becomes fragile, including the business model.

Why storage becomes the bottleneck in modern crypto

Blockchains excel at consensus and state transitions, not bulk storage. The moment a product needs images, audio, video, datasets, documents, or long term archives, teams face a familiar dilemma:

1. Keep data offchain and trust external infrastructure, sacrificing resilience and neutrality

2. Force data onchain and accept severe cost and performance constraints

3. Use decentralized storage primitives that struggle with availability, verification, or economic efficiency

The core issue is not simply where data lives. It is whether the network can prove that data is truly stored, recover it quickly when nodes disappear, and price it in a way that stays predictable for applications.

Walrus positions itself around these pressure points. It is designed for storing large blob-style objects with strong availability and a verification mechanism that does not collapse into wasteful full replication.

The architectural idea that makes Walrus distinct

Most storage networks historically leaned on replication: store multiple complete copies across different operators and hope enough remain reachable. Replication is intuitive, but it becomes expensive at scale. Walrus leans into erasure coding, a technique that splits data into fragments and distributes them so the original can be reconstructed even if many fragments are missing.
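To make the idea concrete, here is a minimal sketch of an erasure code in Python. It is a toy Reed-Solomon-style scheme over a small prime field, chosen only for illustration; it is not Walrus's actual encoding, and the names `encode`, `decode`, and the field size `P` are assumptions made for this example. Each byte is treated as a point on a polynomial, extra points on the same polynomial become parity fragments, and any `k` surviving fragments are enough to rebuild the original `k` bytes.

```python
P = 257  # small prime field (illustrative); every byte value 0..255 fits


def _interpolate(points, x):
    """Evaluate at x the unique polynomial through `points`, modulo P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, -1, P) is the modular inverse (Python 3.8+)
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data: bytes, n: int):
    """Return n fragments (x, value); the first len(data) carry the data itself."""
    k = len(data)
    if not 0 < k <= n < P:
        raise ValueError("need 0 < len(data) <= n < P")
    points = [(x + 1, b) for x, b in enumerate(data)]
    parity = [(x, _interpolate(points, x)) for x in range(k + 1, n + 1)]
    return points + parity


def decode(fragments, k: int) -> bytes:
    """Rebuild the k original bytes from any k surviving fragments."""
    if len(fragments) < k:
        raise ValueError("not enough fragments to reconstruct")
    pts = fragments[:k]
    return bytes(_interpolate(pts, x) for x in range(1, k + 1))


data = b"walrus"
fragments = encode(data, n=10)
# Simulate losing four of the ten fragments, including some data fragments.
survivors = [f for i, f in enumerate(fragments) if i not in (0, 2, 3, 5)]
assert decode(survivors, k=len(data)) == data
```

In this toy run, 10 fragments protect 6 bytes, roughly 1.67 times the original size, and any 4 fragments can be lost. Tolerating the same 4 losses with plain replication would take 5 complete copies, or 5 times the original size.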

This matters because a storage network is not judged by its best day. It is judged by its worst day: sudden node churn, network partitions, malicious operators, and inconsistent connectivity. The winning design is the one that can absorb loss without ballooning costs or degrading into chaotic recovery.

Walrus incorporates an erasure coding approach that aims to reduce overhead while keeping recovery fast and reliable. In practical terms, it seeks to deliver three properties at once:

1. Lower storage overhead than full replication

2. Faster recovery that scales with what was lost, not with the size of the entire file

3. Continuous verifiability so the network can challenge storage providers and detect dishonesty

That last element is crucial. Many networks can store data, but fewer can enforce storage honesty without turning the system into a heavy surveillance machine or an inefficient bandwidth sink. Walrus is built around the idea that storage must be provable and enforceable, not merely promised.
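One generic way to make storage provable is a challenge against a Merkle commitment: the network keeps only a short root hash, asks a provider for a randomly chosen chunk, and checks the chunk against the root using a short proof. The sketch below illustrates that general technique in Python. It is an assumption-laden example, not a description of Walrus's actual challenge protocol, and the function names `commit`, `prove`, and `verify` are hypothetical.

```python
import hashlib


def _leaf(chunk: bytes) -> bytes:
    # Prefix bytes separate leaf hashes from inner-node hashes.
    return hashlib.sha256(b"\x00" + chunk).digest()


def _node(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()


def _levels(chunks):
    """Build every level of the tree, leaves first, root last."""
    level = [_leaf(c) for c in chunks]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:  # duplicate the last hash on odd-sized levels
            level = level + [level[-1]]
            levels[-1] = level
        level = [_node(level[i], level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def commit(chunks) -> bytes:
    """Short commitment (Merkle root) that the verifier keeps."""
    return _levels(chunks)[-1][0]


def prove(chunks, index: int):
    """Provider side: sibling hashes on the path from chunk `index` to the root."""
    proof = []
    for level in _levels(chunks)[:-1]:
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        index //= 2
    return proof


def verify(root: bytes, chunk: bytes, proof) -> bool:
    """Verifier side: recompute the root from the returned chunk and proof."""
    node = _leaf(chunk)
    for sibling, sibling_is_left in proof:
        node = _node(sibling, node) if sibling_is_left else _node(node, sibling)
    return node == root


chunks = [b"part-0", b"part-1", b"part-2", b"part-3", b"part-4"]
root = commit(chunks)
challenge = 3  # in practice the index would be chosen unpredictably
assert verify(root, chunks[challenge], prove(chunks, challenge))
assert not verify(root, b"forged data", prove(chunks, challenge))
```

The verifier never needs the full file, only the root and a proof whose length grows with the logarithm of the number of chunks, which is what keeps this kind of check cheap in bandwidth.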

Data availability, not just cold storage

A common misunderstanding is to treat decentralized storage as nothing more than a long-term archive. For modern applications, stored data must also be actively available: the network has to serve the required fragments reliably and quickly enough to keep up with application flow.

This is especially relevant for:

AI agent frameworks that need to fetch context or tools

Onchain media products that require immediate asset loading

Analytics and indexers that need consistent access to historical data

Games that stream assets dynamically instead of bundling everything upfront

Proof systems that rely on accessible external data commitments

Walrus emphasizes data availability as a practical property, not a theoretical comfort. This focus changes how developers architect products. Instead of building a brittle bridge from a contract to an external server, they can design data pipelines where storage is composable, verifiable, and economically aligned with network participants.
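A rough way to reason about availability is to ask how likely it is that enough fragments are reachable at the moment of a read. The sketch below assumes a simplified model in which each of `n` fragments sits on a separate node, each node is online independently with probability `p`, and any `k` fragments suffice to rebuild the data. The model, the function name `availability`, and the example parameters are illustrative assumptions, not measurements or parameters of the Walrus network.

```python
from math import comb


def availability(n: int, k: int, p: float) -> float:
    """Probability that at least k of n independent nodes are online."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Three full replicas: the data is readable if any one copy is online.
replicated = availability(n=3, k=1, p=0.9)

# Erasure coded: 15 fragments, any 10 of which rebuild the data.
erasure_coded = availability(n=15, k=10, p=0.9)

print(f"3 replicas (3.0x storage):      {replicated:.6f}")
print(f"10-of-15 coding (1.5x storage): {erasure_coded:.6f}")
```

Real networks see correlated failures, so these figures are only a first approximation, but the comparison shows why availability has to be weighed against storage overhead rather than judged alone.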

A more mature economic model for storage

Storage is not only a technical concern; it is also a pricing problem. If storage costs swing unpredictably, applications cannot sustainably plan. If incentives are weak, operators vanish. If enforcement is soft, dishonest providers can extract fees without delivering service.

Walrus approaches this by integrating a native token model that can coordinate:

Payment for storing data over specified durations

Incentives for storage operators to remain reliable

Staking-based security and accountability

Governance for parameters that influence network performance and pricing

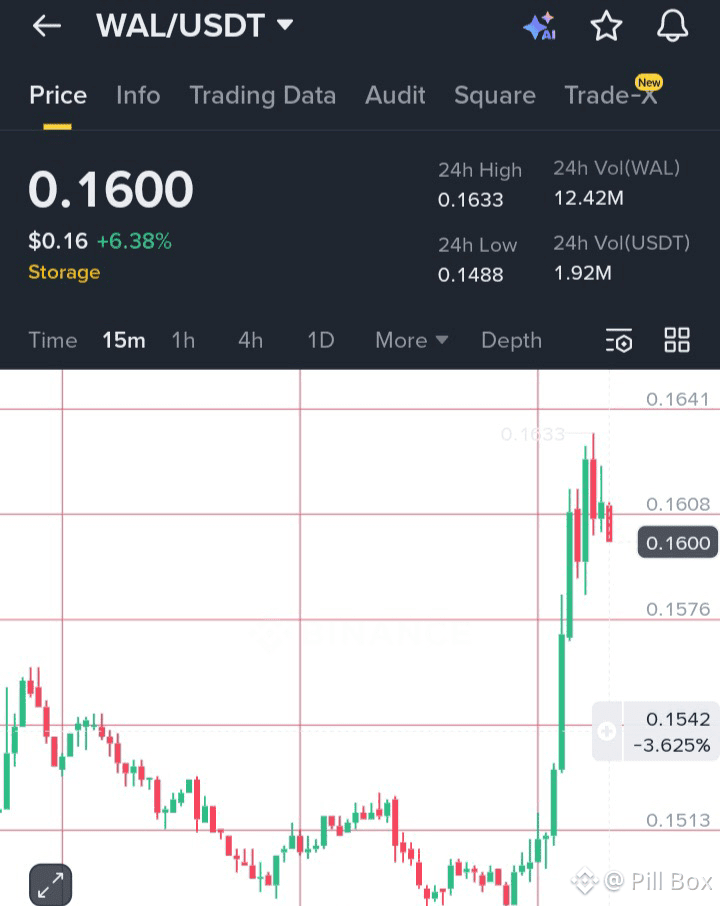

In this model, the token is not ornamental. It is the mechanism for aligning incentives across users, builders, and operators. The $WAL token serves as the economic interface for this storage layer, and its long-term relevance will depend on how well the network sustains real usage while preserving predictable economics.

What builders can do with Walrus that feels difficult elsewhere

Walrus enables a class of applications that need both composability and heavy data. Several categories stand out.

Decentralized websites and front ends

Projects can host fully decentralized experiences where the application assets live on a verifiable storage network instead of centralized hosting. This reduces the surface area for censorship and outages while improving the credibility of “unstoppable” product claims.

AI-native dApps with verifiable data pipelines

Many AI products depend on data integrity. If training sets, embeddings, or tool outputs can be tampered with, the application becomes untrustworthy. Walrus supports a workflow where large inputs and outputs can be stored with verifiable commitments, creating cleaner provenance and stronger auditability.
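As a minimal sketch of what a verifiable commitment can look like, the Python example below hashes a large input in streaming fashion and later checks a retrieved copy against the recorded digest. It uses plain SHA-256 from the standard library as a stand-in; how Walrus itself derives blob identifiers or commitments may differ, and the helper names `commitment` and `matches` are hypothetical.

```python
import hashlib
from typing import Iterable


def commitment(chunks: Iterable[bytes]) -> str:
    """Hash a large object piece by piece and return its hex digest."""
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()


def matches(chunks: Iterable[bytes], expected: str) -> bool:
    """Check that retrieved data still corresponds to the recorded commitment."""
    return commitment(chunks) == expected


# Record the commitment when a dataset is published...
dataset = [b"row-1\n", b"row-2\n", b"row-3\n"]
recorded = commitment(dataset)

# ...and verify it whenever the data is fetched again.
assert matches(dataset, recorded)
assert not matches([b"row-1\n", b"row-2 (edited)\n", b"row-3\n"], recorded)
```

Recording the digest somewhere tamper-evident, for example in onchain state, lets anyone detect whether a training set or tool output changed after the fact.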

Creator infrastructure and media permanence

Media is heavy, expensive, and often the first thing to break when budgets tighten. A storage layer designed for large files opens the door for creator economies that do not collapse into link rot, missing assets, or broken collections.

Onchain archives and historical continuity

Apps that rely on historical state often face an awkward truth: the chain stores the results, but not always the raw artifacts. Walrus supports the idea that archives, snapshots, and historical datasets should remain accessible, not just referenced.

Why this matters in the next market phase

The market is moving toward applications that feel complete. Users increasingly judge products by reliability, speed, and continuity, not by ideology. Infrastructure must therefore become quieter and more dependable. The storage layer is a major part of that maturation.

Walrus fits this trajectory because it focuses on a hard engineering constraint rather than a narrative trend. Storage is unavoidable, and a credible decentralized storage layer increases the ceiling for what can be built without returning to centralized dependencies.

The more sophisticated insight is that storage does not simply support apps. It shapes business models. When data becomes verifiably ownable and transferable, new forms of coordination become possible: data marketplaces, usage-based licensing, composable datasets, and agent economies that can transact over information rather than just tokens.

The network effects of a data layer

A strong storage layer creates compounding benefits:

Builders gain a standard way to persist large assets

Tooling improves around predictable primitives

Operators compete on reliability and cost

Applications integrate storage natively rather than bolting it on later

New markets form around data portability and provenance

This is how infrastructure becomes a gravitational center. Not through marketing, but through repeated developer decisions that choose the same primitive because it works.

A practical lens for evaluating Walrus going forward

If you want to track progress without getting distracted by noise, focus on observable signals:

Growth in stored data volume that reflects real use

Diversity of applications using storage as a core dependency

Retrieval performance and uptime under stress conditions

Pricing stability across different demand cycles

Operator participation and resilience against churn

These metrics reveal whether the network is becoming essential rather than merely interesting.

Closing perspective

Walrus is positioning itself as an infrastructural answer to one of the least glamorous but most decisive problems in crypto: making data persistent, verifiable, and usable at scale. The deeper value is not only cheaper storage. It is the ability to build applications that remain coherent over time, where assets do not vanish, integrity does not depend on trust, and developers can architect without fear of invisible centralized failure points.

If decentralized applications are going to compete with mainstream products, they will need more than execution environments. They will need durable data. Walrus is an attempt to make that durability programmable, economical, and genuinely composable.