A couple of years back, I was knee-deep in managing a portfolio of DeFi positions when I ran into an unexpectedly dull problem: archiving historical trading data. Centralized cloud providers were quietly eating into margins, and every minor outage was a reminder of how brittle the setup really was. Nothing catastrophic happened, but the dependency felt wrong. One service hiccup, one policy change, and access to years of records could vanish. That low-grade friction was enough to push me toward decentralized storage, where the real problem turned out to be more structural than I first assumed.

Large, unstructured data is becoming unavoidable. Videos, datasets, logs, model weights, and other blobs keep growing, while most blockchain systems are still optimized for small, hot state. Putting this kind of data directly onchain is expensive and inefficient. Offloading it usually means trusting centralized servers or semi-decentralized gateways, which reintroduces single points of failure. It is not a flashy issue, but it sits underneath everything. If storage fails quietly, the rest of the stack becomes unreliable no matter how good execution logic looks.

A useful mental model is a library that does not store whole books in one place. Each book is split into pages, redundant pages are added, and all of those pages are distributed across different rooms. If one room is lost, the book can still be reconstructed from what remains. This is the basic idea behind erasure coding, similar to how RAID systems protect against disk failures by combining data and parity.
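To make the library analogy concrete, here is a minimal sketch of the simplest form of erasure coding: a single XOR parity shard, in the spirit of RAID. It is a toy, not the scheme Walrus uses, and the function names (`encode`, `recover`, `decode`) are my own illustrative choices.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal-size shards and append one XOR parity shard."""
    shard_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(shard_len * k, b"\0")
    shards = [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]
    parity = reduce(xor_bytes, shards)
    return shards + [parity]


def recover(shards: List[Optional[bytes]]) -> List[bytes]:
    """Rebuild at most one missing shard (marked None) by XOR-ing the others."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("a single parity shard can only repair one loss")
    repaired = list(shards)
    if missing:
        present = [s for s in shards if s is not None]
        repaired[missing[0]] = reduce(xor_bytes, present)
    return repaired


def decode(shards: List[Optional[bytes]], original_len: int) -> bytes:
    """Repair if needed, drop the parity shard, and strip the padding."""
    full = recover(shards)
    return b"".join(full[:-1])[:original_len]


book = b"historical trading data"
rooms = encode(book, k=4)   # 4 data shards + 1 parity shard
rooms[2] = None             # one room is lost
restored = decode(rooms, len(book))
```

One parity shard survives exactly one loss. Tolerating several simultaneous losses needs a stronger code, which is where Reed–Solomon comes in.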

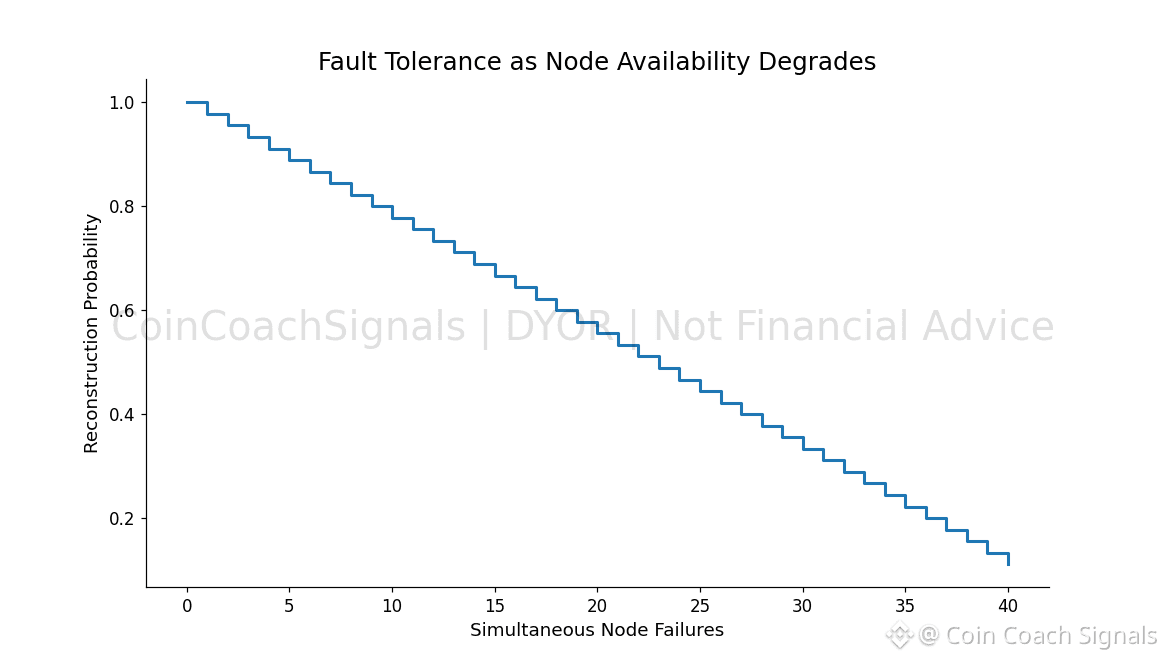

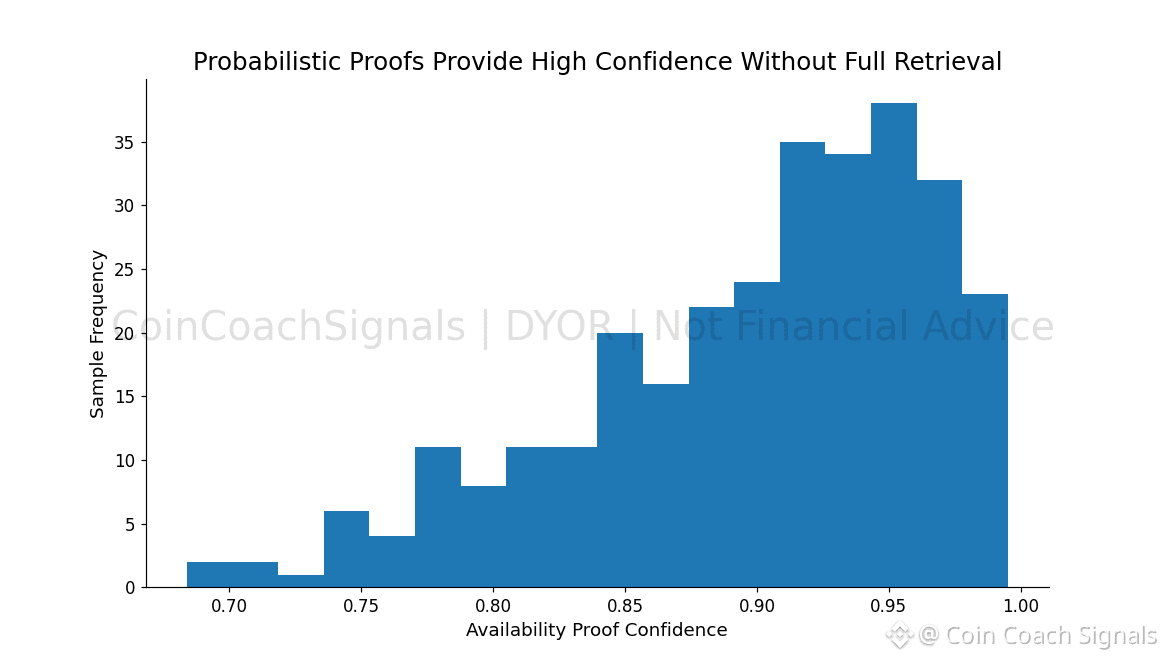

That principle is what Walrus applies to decentralized blob storage. Data is encoded using Reed–Solomon schemes, split into shards with built-in redundancy, and distributed across independent storage nodes. As a simple example, a blob might be broken into 30 shards with enough redundancy to tolerate the loss of a third of them. Those shards are held by nodes selected through delegated proof-of-stake, so storage responsibility is tied to economic commitment rather than goodwill.
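The arithmetic behind that 30-shard example is easy to check. The sketch below assumes an idealized Reed–Solomon code in which any `k` of `n` shards are enough to reconstruct the blob. The class name and the 30/10 figures are illustrative, taken from the example above rather than from Walrus's actual parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ErasureParams:
    total_shards: int       # n: shards written to the network
    tolerated_losses: int   # n - k: shards that may disappear

    @property
    def required_shards(self) -> int:
        """k: the minimum number of shards needed to rebuild the blob."""
        return self.total_shards - self.tolerated_losses

    @property
    def storage_overhead(self) -> float:
        """Bytes stored across the network per byte of original data."""
        return self.total_shards / self.required_shards

    def shard_size(self, blob_bytes: int) -> int:
        """Approximate size of each shard, rounding up."""
        return -(-blob_bytes // self.required_shards)

    def recoverable(self, lost: int) -> bool:
        """True if the blob can still be rebuilt after `lost` shards vanish."""
        return lost <= self.tolerated_losses


params = ErasureParams(total_shards=30, tolerated_losses=10)
print(params.required_shards)    # 20
print(params.storage_overhead)   # 1.5
print(params.recoverable(10))    # True
print(params.recoverable(11))    # False
```

Under these assumptions the network stores 1.5 bytes per byte of data. Full replication would need 11 copies, or 11x, to survive the same 10 losses.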

Coordination and verification happen on Sui. Proofs of availability, metadata, and lifecycle management are handled onchain, while the heavy data lives off the execution layer. Stored blobs become programmable objects, which allows applications to reference, extend, or reason about data availability directly, without relying on offchain assurances. The flow is deliberately plain: upload, encode, distribute, verify.
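The split between heavy data offchain and small commitments on the coordination layer can be illustrated with plain hashing. This is a hypothetical sketch, not Walrus's actual proof-of-availability protocol or any Sui API. `BlobRecord`, `register_blob`, and `verify_shard` are names I made up for the example.

```python
import hashlib
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class BlobRecord:
    """Small metadata a coordination layer could hold; the blob stays elsewhere."""
    blob_id: str
    shard_digests: List[str]
    size_bytes: int


def digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def register_blob(shards: List[bytes], size_bytes: int) -> BlobRecord:
    """Commit to every shard by hash and derive a blob id from those hashes."""
    shard_digests = [digest(s) for s in shards]
    blob_id = digest("".join(shard_digests).encode())
    return BlobRecord(blob_id, shard_digests, size_bytes)


def verify_shard(record: BlobRecord, index: int, shard: bytes) -> bool:
    """Check that a shard served by a storage node matches the commitment."""
    return digest(shard) == record.shard_digests[index]


shards = [b"page-1", b"page-2", b"parity"]
record = register_blob(shards, size_bytes=12)
ok = verify_shard(record, 1, b"page-2")        # honest node
tampered = verify_shard(record, 1, b"page-X")  # corrupted shard
```

The record is a few hundred bytes regardless of blob size, which is why this kind of metadata can live next to execution state while the data itself does not.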

The token model stays functional. WAL is used to pay for storage based on size and duration, to stake nodes that provide storage, and to participate in governance decisions like parameter tuning. There is no attempt to dress this up as yield engineering. The goal is incentive alignment so data stays where it is supposed to stay.

From a market perspective, the project sits in a middle tier rather than at the extremes. It raised roughly $140 million from firms including Andreessen Horowitz and Standard Crypto, and its token market cap sits around the low hundreds of millions with about 1.6 billion tokens in circulation. That gives enough resources to build without implying guaranteed dominance.

Short-term trading around infrastructure like this tends to be noisy. Prices move with ecosystem announcements and broader sentiment, and it is easy to get chopped up trying to time those swings. The longer-term question is whether usage compounds. If applications, rollups, or AI systems actually depend on this layer, value accrues through steady fees rather than attention cycles. That is the only angle where the bet really makes sense.

Risks remain. Competition from Filecoin and Arweave is real, with larger networks and longer histories. Correlated node failures are another concern. If too many storage providers go offline at once, reconstruction thresholds could be breached, undermining confidence. There is also open uncertainty around whether programmable storage truly becomes essential for AI-heavy applications, or whether that demand stays more centralized.
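A rough way to see why correlation matters more than raw failure rates is a simple binomial model. Everything here is an assumption for illustration: the 30/10 shard split, the 5% per-node failure rate, and the 1% chance of a shared outage that takes out 11 nodes at once are invented numbers, not measurements of any network.

```python
from math import comb


def tail(n: int, threshold: int, p: float) -> float:
    """P(X > threshold) for X ~ Binomial(n, p)."""
    start = max(threshold + 1, 0)
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(start, n + 1))


def breach_probability(
    n: int,
    tolerated: int,
    p: float,
    shared_outage_prob: float = 0.0,
    shared_group: int = 0,
) -> float:
    """Chance that more than `tolerated` of n shard holders are down.

    With probability `shared_outage_prob`, a common-mode event removes
    `shared_group` nodes together; all other failures are independent.
    """
    independent = tail(n, tolerated, p)
    correlated = tail(n - shared_group, tolerated - shared_group, p)
    return (1 - shared_outage_prob) * independent + shared_outage_prob * correlated


independent_only = breach_probability(n=30, tolerated=10, p=0.05)
with_shared_outage = breach_probability(
    n=30, tolerated=10, p=0.05, shared_outage_prob=0.01, shared_group=11
)
print(f"independent failures only: {independent_only:.2e}")
print(f"with a 1% shared outage:   {with_shared_outage:.2e}")
```

In this toy model, independent failures almost never breach the threshold, while the shared outage dominates the risk entirely. That is the practical argument for spreading shards across operators, regions, and hosting providers.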

Infrastructure like this does not prove itself quickly. Adoption arrives quietly, integration by integration, long after launch narratives fade. Whether Walrus becomes a durable part of the stack depends less on theory and more on whether these guarantees keep holding up when nobody is paying close attention.
