Everyone in crypto assumes erasure coding with Reed-Solomon recovery just works at scale. It doesn't. The moment you add real validator churn to the picture, traditional RS-recovery becomes a bottleneck that grinds the network to a halt. Walrus solves this by rethinking how recovery actually works.

The RS-Recovery Problem Nobody Discusses

Here's what the theory says: use Reed-Solomon codes to shard your data across n nodes, and you need any k of them to reconstruct. Simple, elegant, mathematically proven. Then reality hits.

In a decentralized network, validators aren't permanent. They join. They leave. They go offline. They get slashed. This epoch churn means the nodes holding your erasure-coded shards are constantly changing. Every time a shard holder leaves, the network needs to reconstruct data and re-shard it across new validators.

With traditional RS-recovery, reconstruction is computationally expensive. You need to gather k shards, do polynomial interpolation, recover the original data, then re-encode across the new validator set. Do this constantly and you're burning massive computational resources just keeping the lights on.
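The traditional path can be made concrete with a toy Reed-Solomon sketch in Python. It assumes a prime field (modulus 2^31 - 1) and systematic encoding, where the first k shards are the data symbols themselves and the remaining shards are evaluations of the interpolating polynomial. `lagrange_eval`, `encode`, and `traditional_recover` are hypothetical helper names, not any library's API. Recovery follows the steps in the paragraph above: take k shards, interpolate to get the data back, then re-encode every shard.

```python
# Toy Reed-Solomon over the prime field GF(P); illustrative parameters only.
P = 2**31 - 1  # Mersenne prime; all arithmetic is done mod P


def lagrange_eval(points, x):
    """Evaluate the unique polynomial of degree < len(points) through `points` at x."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, P - 2, P) is the modular inverse of den (Fermat's little theorem)
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total


def encode(data, n):
    """Systematic encoding: shard x holds p(x), where p(x) = data[x] for x < k."""
    k = len(data)
    base = list(enumerate(data))
    return {x: (data[x] if x < k else lagrange_eval(base, x)) for x in range(n)}


def traditional_recover(shards, k, n):
    """Gather k shards, decode the original data, then re-encode all n shards."""
    sample = list(shards.items())[:k]
    if len(sample) < k:
        raise ValueError("need at least k shards to recover")
    data = [lagrange_eval(sample, x) for x in range(k)]
    return data, encode(data, n)


data = [11, 22, 33, 44]                  # k = 4 data symbols (each must be < P)
shards = encode(data, n=7)               # 7 shards; any 4 suffice
survivors = {x: v for x, v in shards.items() if x not in (0, 5, 6)}
recovered, reencoded = traditional_recover(survivors, k=4, n=7)
assert recovered == data and reencoded == shards
```

Note that every recovery pays for a full decode plus a full re-encode, no matter how many shards were actually lost.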

Scale this to thousands of validators churning in and out every epoch, and traditional RS-recovery becomes a computational nightmare.

Why Epoch Churn Breaks the Math

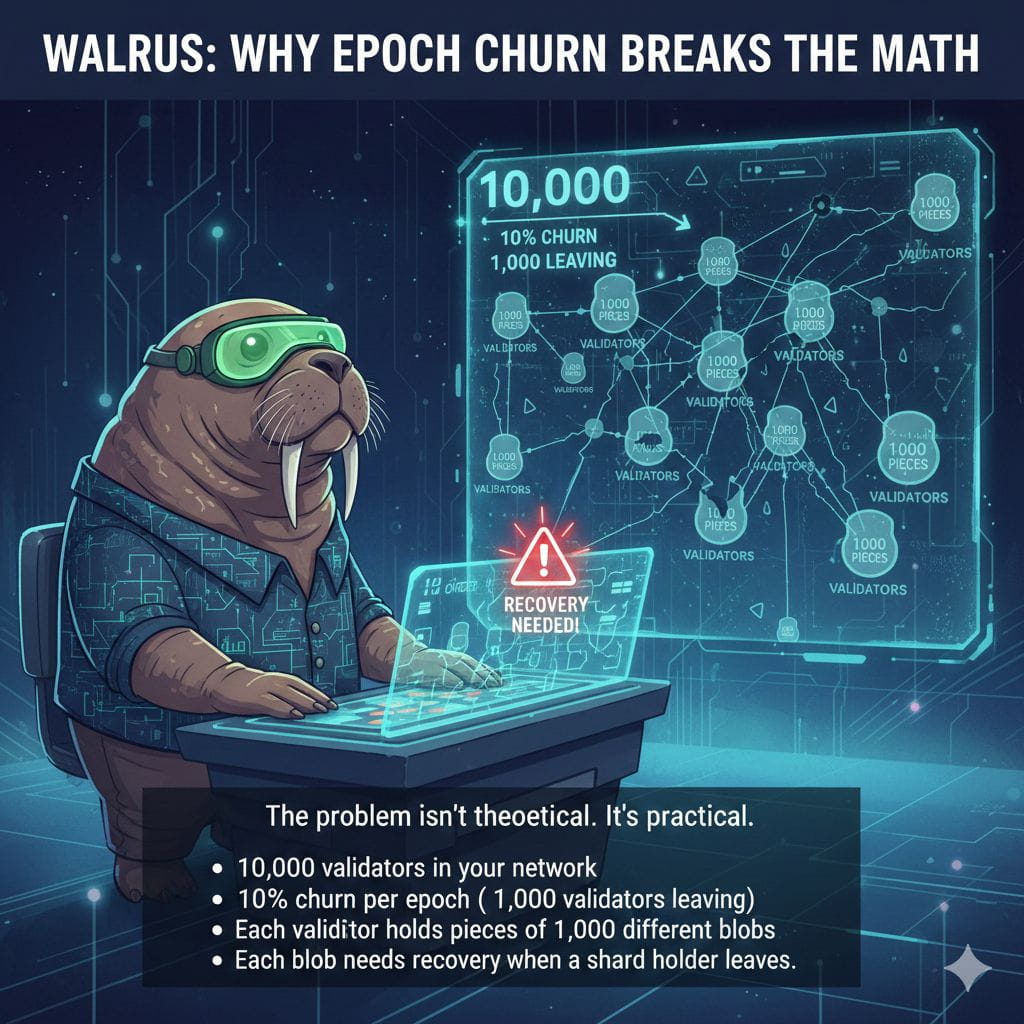

The problem isn't theoretical. It's practical arithmetic. Assume:

10,000 validators in your network

10% churn per epoch (1,000 validators leaving)

Each validator holds pieces of 1,000 different blobs

Each blob needs recovery when a shard holder leaves

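Suddenly you're doing 1,000,000 recovery operations per epoch. Each recovery requires gathering shards, computing the interpolation, and re-encoding. Even at an optimistic one millisecond per operation, that is about 1,000 seconds (roughly 17 minutes) of pure compute per epoch, and a real recovery that must download k shards and decode the blob typically takes much longer than a millisecond.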
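The arithmetic can be checked directly. This sketch uses only the assumptions listed above, plus the optimistic 1 ms per operation; integers are used to avoid floating-point rounding.

```python
# Back-of-envelope recovery load using the assumptions listed above.
validators = 10_000
churn_percent = 10            # 10% of validators leave each epoch
blobs_per_validator = 1_000   # each departing validator held a shard of 1,000 blobs
ms_per_op = 1                 # optimistic: 1 ms of compute per recovery

leaving = validators * churn_percent // 100
recovery_ops = leaving * blobs_per_validator      # one recovery per lost shard
compute_seconds = recovery_ops * ms_per_op / 1000

print(f"{leaving:,} validators leave -> {recovery_ops:,} recoveries per epoch")
print(f"compute at {ms_per_op} ms each: {compute_seconds:,.0f} s (~{compute_seconds / 60:.0f} min)")
```

This prints 1,000,000 recoveries and about 1,000 seconds of compute, before any network transfer is counted.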

Add in network bandwidth for gathering shards and redistributing recovered data, and the system collapses under its own recovery load.
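The bandwidth side can be estimated the same way. With plain Reed-Solomon, rebuilding even one lost shard means downloading k shards, which is the size of the whole blob. The blob size (10 MiB), k = 10, and the choice to ship only the one rebuilt shard to its new holder are hypothetical numbers picked for illustration, not Walrus parameters.

```python
# Hypothetical bandwidth estimate for traditional RS recovery.
MIB = 1024**2
TIB = 1024**4

blob_bytes = 10 * MIB          # assumed blob size
k = 10                         # assumed number of shards needed to decode
shard_bytes = blob_bytes // k  # 1 MiB per shard
recovery_ops = 1_000_000       # from the churn arithmetic above

gather = k * shard_bytes       # download k shards to decode the blob
redistribute = shard_bytes     # send the rebuilt shard to its new holder
per_recovery = gather + redistribute
epoch_bytes = per_recovery * recovery_ops

print(f"per recovery: {per_recovery / MIB:.1f} MiB moved to replace {shard_bytes / MIB:.1f} MiB")
print(f"per epoch: {epoch_bytes / TIB:.1f} TiB")
```

Under these assumptions, replacing 1 MiB of lost data moves 11 MiB, which adds up to roughly 10.5 TiB of traffic per epoch.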

How Traditional Systems Cope (Badly)

Projects using traditional RS-recovery mostly handle churn by pretending it's rare. They overprovision the network. They use shorter epochs to reduce churn pressure. They limit the number of blobs. They accept that performance degrades during high-churn periods.

None of this solves the fundamental problem. They're just reducing how often you hit the wall.

Some projects use full replication specifically to avoid RS-recovery overhead. That trades computational complexity for storage waste, which is fine until you care about scale.
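The storage cost of that trade is easy to quantify. To survive f simultaneous losses, full replication needs f + 1 complete copies, while a Reed-Solomon code with k data shards needs only k + f shards, an overhead of (k + f) / k. The values f = 4 and k = 10 below are hypothetical.

```python
def replication_overhead(f):
    """Copies of the full blob needed to survive f lost copies."""
    return f + 1


def rs_overhead(k, f):
    """Storage relative to blob size for an RS code with k data shards and f parity shards."""
    return (k + f) / k


f = 4  # hypothetical: tolerate four simultaneous losses
print(f"replication: {replication_overhead(f)}x")    # replication: 5x
print(f"RS with k=10: {rs_overhead(10, f):.1f}x")    # RS with k=10: 1.4x
```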

Walrus's Different Approach: Strategic Recovery

Walrus doesn't pretend epoch churn is a minor issue. It designs around it by changing when and how recovery happens.

Instead of recovering immediately when shards go missing, Walrus uses a lazy repair strategy. Missing shards are marked and queued. The network prioritizes recovery based on how critical the data is and how many shards are already missing. Non-critical blobs wait longer. Critical blobs get fixed immediately.
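A lazy-repair queue along these lines can be sketched with a priority heap. This is a hypothetical illustration, not Walrus's implementation. Urgency is modeled as a blob's slack, the number of further shard losses it can survive (n minus missing minus k), with a `critical` flag that breaks ties and forces immediate repair. `RepairQueue`, `mark_missing`, `next_batch`, and `urgent_slack` are invented names.

```python
import heapq
from dataclasses import dataclass, field


@dataclass
class RepairQueue:
    k: int                       # shards needed to reconstruct a blob
    n: int                       # shards stored per blob
    urgent_slack: int = 1        # repair immediately at or below this slack
    missing: dict = field(default_factory=dict)   # blob_id -> set of lost shard indices
    critical: set = field(default_factory=set)    # blob_ids flagged as critical
    heap: list = field(default_factory=list)      # (slack, rank, blob_id) entries

    def slack(self, blob_id):
        """How many more shard losses this blob can survive."""
        return self.n - len(self.missing[blob_id]) - self.k

    def mark_missing(self, blob_id, shard, critical=False):
        """Queue a lost shard; return True if the blob should be repaired now."""
        self.missing.setdefault(blob_id, set()).add(shard)
        if critical:
            self.critical.add(blob_id)
        s = self.slack(blob_id)
        rank = 0 if blob_id in self.critical else 1   # critical blobs sort first on ties
        heapq.heappush(self.heap, (s, rank, blob_id))
        return blob_id in self.critical or s <= self.urgent_slack

    def next_batch(self, size):
        """Pop up to `size` distinct blobs, least slack first, with all their lost shards."""
        batch = []
        while self.heap and len(batch) < size:
            s, _, blob_id = heapq.heappop(self.heap)
            # skip stale entries left over from earlier pushes for the same blob
            if blob_id in self.missing and s == self.slack(blob_id):
                batch.append((blob_id, sorted(self.missing.pop(blob_id))))
        return batch


q = RepairQueue(k=4, n=7)
flags = [
    q.mark_missing("a", 0),                  # slack 2: queued, can wait
    q.mark_missing("b", 1),                  # slack 2
    q.mark_missing("b", 2),                  # slack 1: urgent
    q.mark_missing("c", 5, critical=True),   # critical: urgent regardless of slack
]
batch = q.next_batch(2)
```

Blob "b" comes out first because it is one loss away from the danger threshold; blob "a" waits for a later batch.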

This batching dramatically reduces recovery load. Instead of 1,000,000 individual operations, you're doing batch recovery across clusters of related shards. Computational cost drops. Network bandwidth usage becomes manageable.
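Batching pays off when several departures hit the same blob: one decode can rebuild all of that blob's missing shards at once. The scenario below is hypothetical: three departing validators each held one shard of the same 1,000 blobs. If every lost shard belonged to a different blob, grouping would not reduce the number of decodes.

```python
from collections import defaultdict


def batch_by_blob(lost):
    """Group (blob_id, shard_index) loss events so each blob is repaired once."""
    jobs = defaultdict(set)
    for blob_id, shard in lost:
        jobs[blob_id].add(shard)
    return {blob: sorted(shards) for blob, shards in jobs.items()}


# Hypothetical: validator v held shard v of each of 1,000 blobs, and v = 0, 1, 2 leave.
lost = [(blob, validator) for validator in range(3) for blob in range(1_000)]
jobs = batch_by_blob(lost)
print(len(lost), "shard losses ->", len(jobs), "repair jobs")  # 3000 shard losses -> 1000 repair jobs
```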

The Static Committee Advantage

Walrus's static committees reduce churn pressure on data directly. Within a storage epoch, the committee holding your data is fixed. Validators can join or leave the global set mid-epoch without touching the committee responsible for your data; those changes take effect at the epoch boundary.

This means recovery happens less frequently. When it does happen, it's organized and batched, not scattered across the network like random fires.
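The effect of a static committee can be modeled as buffered membership: join and leave requests made mid-epoch are queued and applied together at the boundary, so repair work is triggered once per epoch rather than on every membership event. `EpochCommittee`, `request_join`, `request_leave`, and `advance_epoch` are hypothetical names. This is a simplified model, not Walrus's actual epoch-change protocol.

```python
from dataclasses import dataclass, field


@dataclass
class EpochCommittee:
    members: set
    pending_joins: set = field(default_factory=set)
    pending_leaves: set = field(default_factory=set)
    epoch: int = 0

    def request_join(self, node):
        self.pending_joins.add(node)    # takes effect next epoch

    def request_leave(self, node):
        self.pending_leaves.add(node)   # takes effect next epoch

    def advance_epoch(self):
        """Apply all buffered changes at once; return the members that left."""
        departed = self.members & self.pending_leaves
        self.members = (self.members | self.pending_joins) - self.pending_leaves
        self.pending_joins, self.pending_leaves = set(), set()
        self.epoch += 1
        return departed   # repair for these shards can be planned as one batch


c = EpochCommittee(members={"n1", "n2", "n3", "n4"})
c.request_leave("n2")
c.request_join("n5")
assert c.members == {"n1", "n2", "n3", "n4"}   # committee unchanged mid-epoch
departed = c.advance_epoch()
```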

Dynamic Repair Without Full Reconstruction

The other insight: you don't always need full data reconstruction. If 8 of 10 shards are healthy, you only need to compute the 2 missing ones. Traditional RS-recovery reconstructs everything, then re-encodes. Walrus computes the replacements directly.

This cuts the computation per recovery operation roughly in proportion to how few shards are actually missing, instead of paying for a full decode and re-encode every time.
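The compute difference can be illustrated with the same toy Reed-Solomon setup (prime field, systematic encoding, hypothetical helper names). Instead of decoding the data and re-encoding all shards, the interpolating polynomial is evaluated only at the missing positions. This sketch uses plain RS, where repair still reads k shards, so it shows only the compute saving, not Walrus's actual encoding or its bandwidth behavior.

```python
P = 2**31 - 1  # prime modulus for the toy field


def lagrange_eval(points, x):
    """Evaluate the polynomial of degree < len(points) through `points` at x, mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total


def repair_missing(shards, missing, k):
    """Compute only the missing shards from any k healthy ones."""
    sample = list(shards.items())[:k]
    return {x: lagrange_eval(sample, x) for x in missing}


# Toy blob: k = 4 data symbols spread over n = 10 shards (systematic: shard x = data[x] for x < 4).
k, n = 4, 10
data = [5, 9, 2, 7]
base = list(enumerate(data))
all_shards = {x: lagrange_eval(base, x) for x in range(n)}

missing = [3, 8]                         # 8 of 10 shards still healthy
healthy = {x: v for x, v in all_shards.items() if x not in missing}
repaired = repair_missing(healthy, missing, k)

assert repaired == {x: all_shards[x] for x in missing}
# decode (k evaluations) + re-encode parity (n - k evaluations) = n evaluations
print("interpolant evaluations, direct:", len(missing), "vs decode + re-encode:", n)
```

Here direct repair needs 2 polynomial evaluations against 10 for the decode-then-re-encode path, a fivefold saving for this particular 8-of-10 case.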

Real-World Numbers

Traditional RS-recovery at scale: dozens of recovery operations per second during normal churn periods. Hundreds during high churn. Computational load measured in cores per validator.

Walrus recovery: a few recovery operations per second during normal churn. Batched and optimized. Computational load measured in percentages of validator capacity.

The difference is categorical.

Why This Matters for Production Scale

Validators choosing infrastructure want to know: how much computational resource will I need to dedicate to recovery overhead? Traditional RS-recovery? Assume 20-50% of your capacity during active churn periods. Walrus? Assume 5-10%.

That's the difference between viable validator economics and unsustainable ones.

@Walrus 🦭/acc shows why traditional RS-recovery breaks under real validator churn. The math works in theory. Implementation fails in practice. Walrus solves this through lazy repair, static committees, and direct computation. You get erasure coding's efficiency without the recovery overhead that kills traditional systems. For infrastructure that needs to handle real validator dynamics at scale, this is foundational. Walrus handles it. Traditional RS-recovery doesn't.