When blockchains talk about AI integration, the language often sounds reassuring. Tooling. SDKs. Middleware. External computation layers. On paper, this approach looks flexible. In practice, it introduces a quiet form of technical debt that compounds over time.

Vanar Chain avoided that debt by refusing a popular shortcut: building a conventional blockchain first and “adding AI later.”

That decision is less about ideology and more about operational reality.

Retrofitting AI onto legacy infrastructure creates a split brain. Core execution lives on-chain, while intelligence lives elsewhere. Decisions are computed off-chain, then passed back for settlement. Over time, the blockchain stops being the system of record for why something happened and becomes only the place where outcomes are finalized.
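To make the "system of record" point concrete, here is a minimal Python sketch. It is an illustration only, not Vanar's actual design: `SettlementRecord`, `digest_rationale`, and `can_explain` are hypothetical names, and a SHA-256 digest is just one assumed way of committing to the context behind a decision.

```python
from dataclasses import dataclass
from hashlib import sha256
from typing import Optional


@dataclass(frozen=True)
class SettlementRecord:
    """Hypothetical record of what a chain stores when it settles an action."""
    action: str
    amount: int
    # Commitment to the decision context (model version + inputs).
    # None models the retrofitted case: only the outcome reaches the chain.
    rationale_digest: Optional[str] = None


def digest_rationale(model_version: str, inputs: str) -> str:
    """Hash the decision context so it can be checked against the record later."""
    return sha256(f"{model_version}|{inputs}".encode()).hexdigest()


def can_explain(record: SettlementRecord, model_version: str, inputs: str) -> bool:
    """True only if the record itself commits to the claimed decision context."""
    if record.rationale_digest is None:
        return False
    return record.rationale_digest == digest_rationale(model_version, inputs)


# Retrofitted: the decision was made elsewhere and only the outcome is settled.
retrofitted = SettlementRecord(action="transfer", amount=100)

# Integrated (assumed): the record also commits to why the action was taken.
integrated = SettlementRecord(
    action="transfer",
    amount=100,
    rationale_digest=digest_rationale("model-v1", "balance=500"),
)
```

With the first record, any claim about why the transfer happened has to be taken on trust from whoever ran the off-chain service. With the second, the claim can at least be checked against what was settled.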

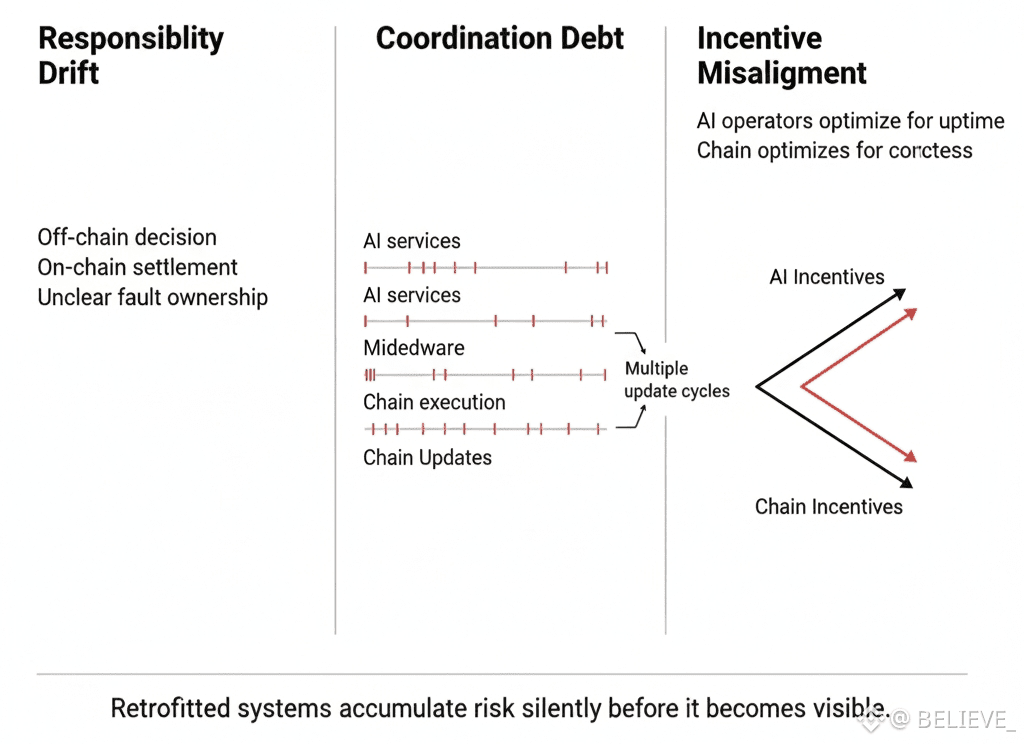

This separation introduces three problems that don’t show up immediately.

The first is responsibility drift. When logic executes off-chain, accountability becomes ambiguous. If an AI agent makes a decision that causes loss, where does fault reside — in the model, the middleware, the operator, or the chain that settled the transaction? Retrofitted systems struggle to answer this clearly, which becomes a critical issue in regulated or consumer-facing environments.

The second problem is coordination debt. Each off-chain component brings its own assumptions, update cycles, and failure modes. Over time, maintaining alignment between AI services and on-chain execution becomes harder than maintaining the chain itself. What starts as modular flexibility turns into a fragile dependency graph.
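One way to picture that dependency graph is a small Python sketch, under stated assumptions: the component names, integer versions, and the `expects` mapping are all hypothetical, and real systems track compatibility in far messier ways. Each component records the versions of its neighbors that it was built against, and the check reports every assumption that has gone stale.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    """Hypothetical on-chain or off-chain component in a retrofitted stack."""
    name: str
    version: int
    # Dependency name -> version this component was built and tested against.
    expects: dict = field(default_factory=dict)


def stale_assumptions(components):
    """Return (component, dependency, expected, deployed) for each mismatch."""
    deployed = {c.name: c.version for c in components}
    mismatches = []
    for c in components:
        for dep, expected in c.expects.items():
            actual = deployed.get(dep)  # None if the dependency is missing
            if actual != expected:
                mismatches.append((c.name, dep, expected, actual))
    return mismatches


stack = [
    Component("chain", version=2),
    # The model service was built against chain v1, but the chain has moved on.
    Component("model_service", version=5, expects={"chain": 1}),
    # The middleware tracks the chain correctly but lags the model service.
    Component("middleware", version=3, expects={"chain": 2, "model_service": 4}),
]

for name, dep, expected, actual in stale_assumptions(stack):
    print(f"{name} expects {dep} v{expected}, but v{actual} is deployed")
```

Even with three components, two of the three declared assumptions are already out of date. Every additional off-chain service adds more edges to check.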

The third problem is incentive misalignment. When AI logic sits outside the protocol, its operators are often economically detached from the network’s health. They optimize for uptime, not correctness. For speed, not explainability. For convenience, not long-term integrity.

Vanar’s choice to avoid retrofitting addresses these issues at the root rather than treating symptoms later.

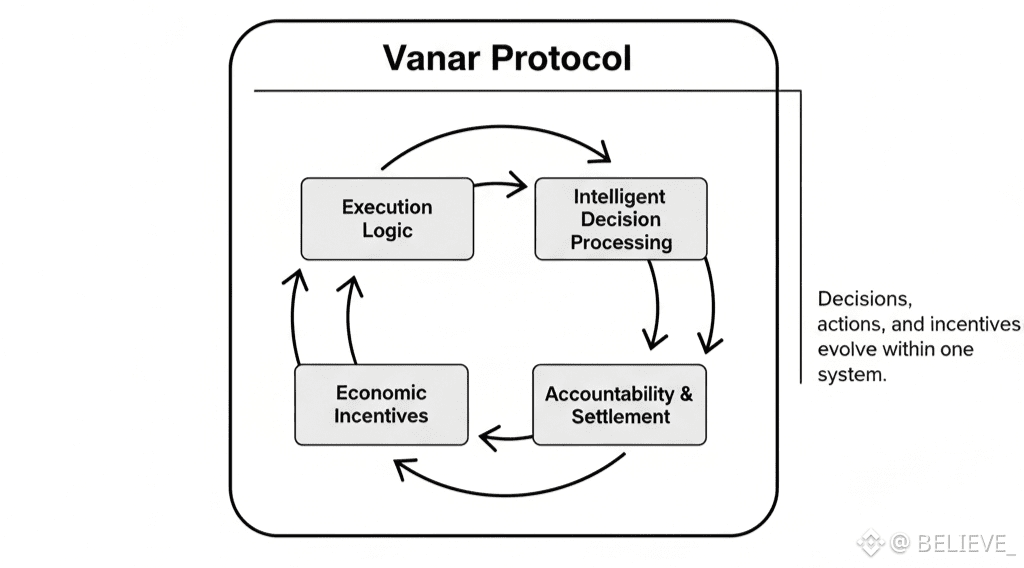

By treating intelligence as a core participant from the outset, Vanar aligns execution, incentives, and accountability within the same system boundary. Decisions, actions, and settlement remain tightly coupled. That coupling is often criticized as restrictive, but in systems that must behave reliably, restriction is a form of safety.

Another overlooked consequence of retrofitting is lifecycle mismatch. AI systems evolve quickly. Blockchains are designed to change slowly. When the two are loosely connected, upgrades become negotiation points. A model update might require changes in execution assumptions. A chain upgrade might break AI dependencies. Coordination becomes political instead of technical.

Vanar’s architecture reduces that friction by narrowing the gap between intelligence and execution. Instead of negotiating across layers, the system evolves with a shared understanding of what “intelligent behavior” means on-chain. This doesn’t eliminate complexity, but it localizes it — which is essential for long-term maintainability.

There is also a strategic consideration many projects underestimate: perception by serious builders. Teams building real products evaluate infrastructure differently than speculators do. They look for predictability, debuggability, and long-term support. A chain that depends heavily on external AI layers raises questions about durability.

Who maintains those layers five years from now?

Who audits them?

Who absorbs failure when they break?

Vanar’s refusal to treat AI as an attachment rather than a component makes those questions easier to answer. Responsibility stays closer to the protocol. That clarity matters to enterprises, brands, and platforms that cannot afford ambiguous infrastructure risk.

This approach also influences how innovation happens on the network. Retrofitted systems tend to innovate at the edges — new tools, new plugins, new services layered on top. Vanar’s model encourages innovation inward: improving how intelligence and execution coexist, rather than proliferating dependencies.

That inward focus is slower. It doesn’t generate flashy announcements every week. But it creates a foundation where new capabilities don’t require renegotiating trust boundaries each time they are introduced.

Critically, this does not mean Vanar rejects external tooling or interoperability. It means the core assumptions about intelligent behavior are enforced at the protocol level, not outsourced. Extensions enhance the system rather than define it.

From an economic perspective, this decision reinforces coherence. When intelligent execution happens within the network, activity translates more directly into protocol usage. There are fewer leakage points where value accrues off-chain while the chain bears the risk.

This alignment between function and economics is subtle but powerful. It ensures that as intelligent systems grow in sophistication and volume, the infrastructure that supports them remains relevant — not just as a settlement layer, but as the environment in which behavior unfolds.

Retrofitting AI is tempting because it promises speed.

Avoiding it requires patience.

Vanar chose patience.

Not because it guarantees success, but because it avoids a class of failures that are nearly impossible to fix once systems are live. In infrastructure, some mistakes can be patched. Others become permanent constraints.

Vanar’s refusal to add AI later suggests an understanding of that difference — and a willingness to build for the long horizon where intelligent systems stop being experimental and start being expected.

That is not a fashionable choice.

It is a foundational one.