The metaverse promises real-time digital worlds where millions of users move, trade, create, and socialize at the same time. That sounds exciting, but it also creates a technical reality most blockchains were never built for. As someone who has watched blockchain infrastructure evolve since the early days of Bitcoin, I’ve seen the same pattern repeat: new use cases arrive, and old systems start to show their limits.

The metaverse is not just about tokens and NFTs. It is about persistent environments. These are worlds that need to stay alive every second, process constant updates, store large files, and react instantly to user actions. In 2025, global metaverse activity crossed an estimated 400 million monthly active users across gaming, virtual worlds, and enterprise simulations. Real-time platforms now generate terabytes of interaction data daily. That scale changes everything.

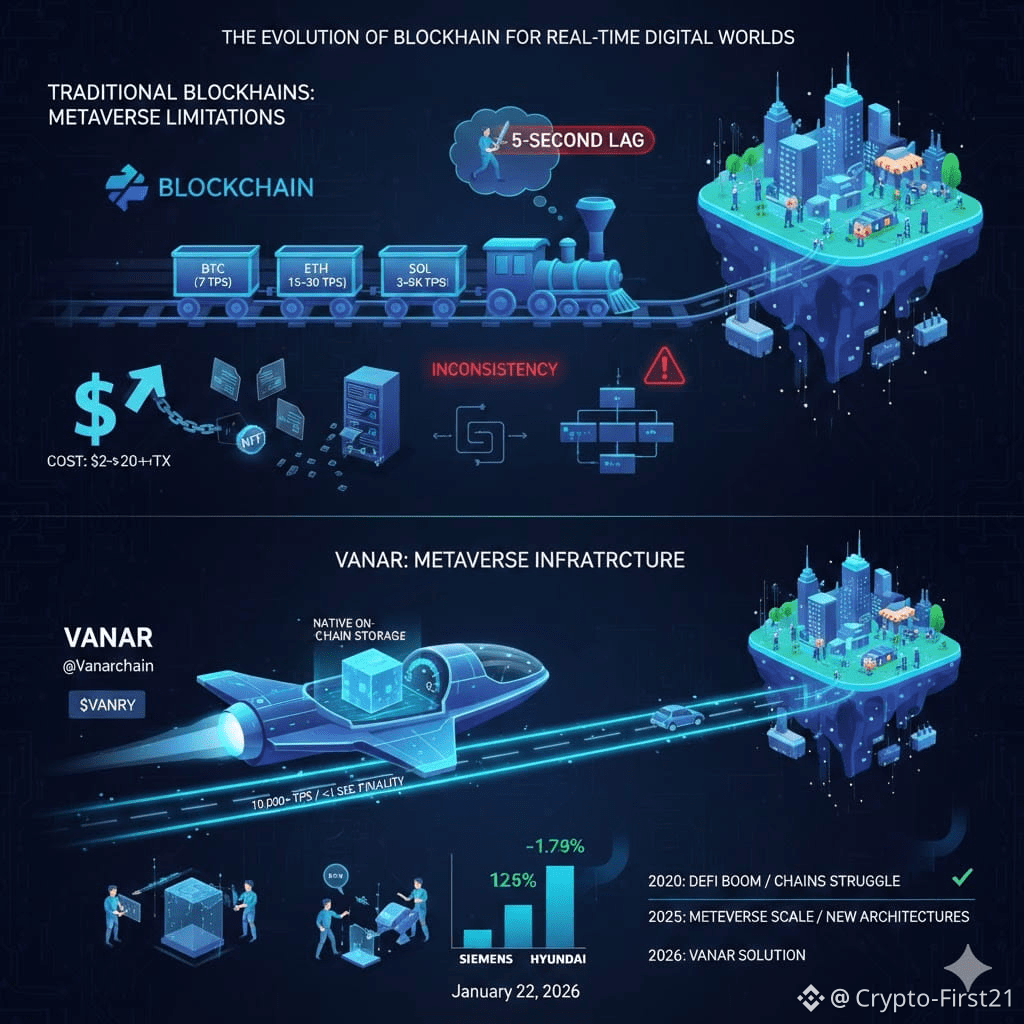

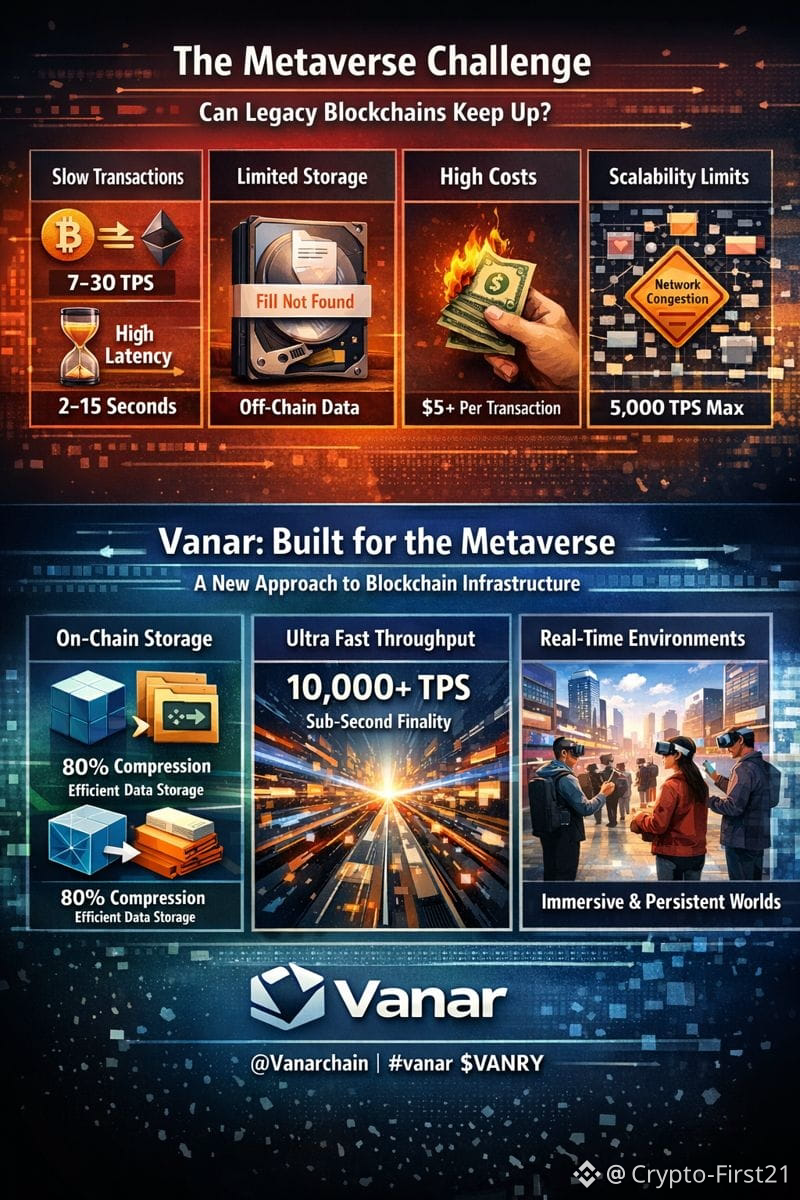

Traditional blockchains were designed to record financial transactions, not to manage living digital environments. Bitcoin processes around 7 transactions per second. Ethereum, after years of upgrades, averages between 15 and 30. Even fast chains like Solana typically handle between 3,000 and 5,000 in real-world conditions. In contrast, a single popular metaverse game can generate tens of thousands of micro-events per second, from avatar movements to asset interactions and environmental updates.
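Here is a minimal Python sketch that puts those figures side by side. The chain numbers come from the paragraph above, taking the upper end of each range. The 20,000 events per second is a hypothetical stand-in for "tens of thousands". `capacity_multiple` is an illustrative helper, not any chain's API.

```python
# Real-world throughput figures quoted in the text (transactions per second).
CHAIN_TPS = {
    "Bitcoin": 7,
    "Ethereum": 30,   # upper end of the 15-30 range
    "Solana": 5_000,  # upper end of the 3,000-5,000 range
}

# Hypothetical load: "tens of thousands" of micro-events per second, taken as 20,000.
GAME_EVENTS_PER_SEC = 20_000


def capacity_multiple(events_per_sec: float, chain_tps: float) -> float:
    """How many times more events the game produces than the chain can process."""
    return events_per_sec / chain_tps


for chain, tps in CHAIN_TPS.items():
    ratio = capacity_multiple(GAME_EVENTS_PER_SEC, tps)
    print(f"{chain}: the game emits {ratio:,.0f}x what the chain can process")
```

Even with Solana's optimistic figure, a single game of this kind would need about four times the chain's real-world capacity.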

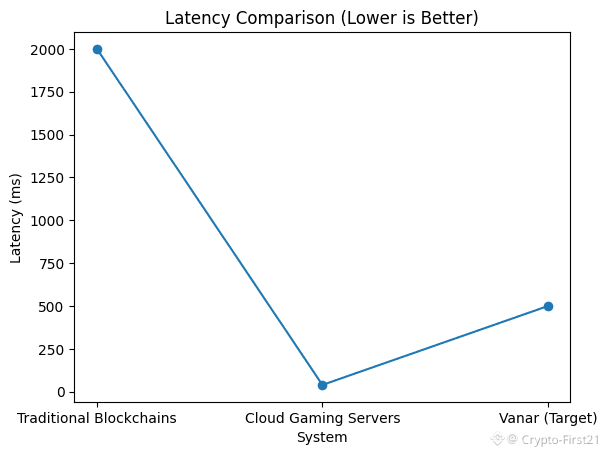

Latency becomes the first major barrier. In gaming or immersive environments, anything above 100 milliseconds feels sluggish. Many blockchains finalize transactions in seconds, sometimes minutes during congestion. That delay might be acceptable for payments, but it breaks immersion. Imagine swinging a sword, opening a door, or placing an object and waiting five seconds for confirmation. Users simply won’t tolerate it.
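The cost of that five-second wait is easier to feel when counted in rendered frames. The sketch below assumes a 60 fps render loop, a hypothetical but common target, and uses the roughly 100 ms threshold mentioned above. Both helper functions are illustrative.

```python
# Interactive budget from the text: anything above ~100 ms feels sluggish.
LATENCY_BUDGET_MS = 100
FRAME_RATE_FPS = 60  # hypothetical: a common frame-rate target for games


def frames_elapsed(wait_ms: float, fps: int = FRAME_RATE_FPS) -> float:
    """Number of frames rendered while the player waits for confirmation."""
    return wait_ms / 1000 * fps


def feels_responsive(wait_ms: float, budget_ms: float = LATENCY_BUDGET_MS) -> bool:
    """True if the wait stays within the interactive budget."""
    return wait_ms <= budget_ms


# The sword swing from the text: five seconds waiting on confirmation.
wait = 5_000
print(frames_elapsed(wait), feels_responsive(wait))  # 300.0 False
```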

Then comes data storage. Metaverse environments rely on massive volumes of dynamic data: 3D assets, environment states, identity records, social interactions, and ownership proofs. Most blockchains were never built to store large files. They record transactions and small pieces of metadata. The actual content usually lives off-chain, either on centralized servers or in decentralized systems such as IPFS. That leaves a fragile link between on-chain ownership and the asset itself. If a hosting node goes down or a link breaks, the asset still “exists” on the blockchain but can no longer be accessed in any functional way.
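A common way to tie on-chain ownership to off-chain content is to store a cryptographic digest of the file on-chain and check the file against it after fetching. The sketch below assumes a SHA-256 digest. It uses a plain dictionary as a hypothetical stand-in for a server or IPFS gateway, and the record layout and `resolve_asset` helper are illustrative. The digest protects integrity, but it does nothing for availability.

```python
import hashlib


def content_hash(data: bytes) -> str:
    """SHA-256 digest of the asset bytes, as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical on-chain record: only the owner and a digest of the file live on-chain.
asset_bytes = b"<3D model bytes>"
onchain_record = {"owner": "alice", "sha256": content_hash(asset_bytes)}

# Hypothetical off-chain store keyed by digest (stands in for a server or IPFS gateway).
offchain_store = {onchain_record["sha256"]: asset_bytes}


def resolve_asset(record: dict, store: dict):
    """Return the asset if it is reachable and matches the on-chain digest, else None."""
    data = store.get(record["sha256"])
    if data is None:
        return None  # ownership is still on-chain, but the content is unreachable
    if content_hash(data) != record["sha256"]:
        raise ValueError("content does not match the on-chain digest")
    return data


print(resolve_asset(onchain_record, offchain_store) is not None)  # True
offchain_store.clear()  # simulate the hosting node going offline
print(resolve_asset(onchain_record, offchain_store))  # None
```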

Cost is another limitation. During 2024 peaks, average transaction fees on Ethereum ranged from $2 to $20 and occasionally spiked much higher. Multiply that by the thousands of in-game interactions a single user can generate, and the in-game economy stops being viable. Even the cheapest blockchains run into trouble when transaction volume surges unpredictably.
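A back-of-the-envelope calculation makes the point concrete. The $2 and $20 fees are the range quoted above. The 1,000 interactions per session is a hypothetical figure chosen for illustration. The sketch also assumes every interaction is its own on-chain transaction.

```python
def session_fee_usd(fee_per_tx: float, interactions: int) -> float:
    """Total fees if every in-game interaction is a separate on-chain transaction."""
    return fee_per_tx * interactions


# Fee range from the text; the interaction count is hypothetical.
INTERACTIONS_PER_SESSION = 1_000

low = session_fee_usd(2, INTERACTIONS_PER_SESSION)
high = session_fee_usd(20, INTERACTIONS_PER_SESSION)
print(f"${low:,.0f} to ${high:,.0f} per session")  # $2,000 to $20,000 per session
```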

Security and consistency also become harder. Metaverse worlds require constant state updates. When thousands of transactions arrive simultaneously, block reordering, mempool congestion, and temporary forks can introduce inconsistencies. That may not matter much for token transfers, but in persistent worlds, inconsistent states can break game logic, ownership history, and environmental continuity.
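The sketch below shows why ordering matters for persistent state. It uses a hypothetical two-transaction scenario: Alice hands a sword to Bob, and then Bob sells it to Carol. Each transfer goes through a toy ownership check. Applying the same transactions in a different order produces a different world state. Nodes face roughly this situation when they temporarily disagree during reordering or a fork.

```python
from copy import deepcopy

# Hypothetical world state: who owns each item.
initial_state = {"sword": "alice"}


def apply_tx(state: dict, tx: tuple) -> bool:
    """Apply a transfer only if the sender currently owns the item."""
    item, sender, receiver = tx
    if state.get(item) != sender:
        return False  # rejected: the sender does not own the item in this ordering
    state[item] = receiver
    return True


tx_a = ("sword", "alice", "bob")  # Alice gives the sword to Bob
tx_b = ("sword", "bob", "carol")  # Bob sells the sword to Carol


def run(order: list):
    """Apply transactions in the given order to a fresh copy of the state."""
    state = deepcopy(initial_state)
    results = [apply_tx(state, tx) for tx in order]
    return state, results


print(run([tx_a, tx_b]))  # ({'sword': 'carol'}, [True, True])
print(run([tx_b, tx_a]))  # ({'sword': 'bob'}, [False, True])
```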

Classical blockchains have always been optimized for correctness and decentralization, not for speed, storage, or state maintenance. They excel at financial settlement but struggle with virtual worlds.

This gap is exactly why new infrastructure designs are emerging. One interesting response is Vanar, which started from the assumption that future digital environments would require constant interaction, large-scale storage, and real-time processing.

Instead of treating data as something that must live off-chain, Vanar integrates native on-chain storage optimized for compressed files. At launch, internal benchmarks showed up to an 80 percent reduction in file size for typical game and media assets while preserving verification capability. Instead of storing pointers, the chain itself becomes the data layer.
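The text does not describe Vanar's compression scheme, so the sketch below uses Python's standard `zlib` as a generic stand-in. It shows one way to compress an asset while keeping a digest of the original, so the stored data stays verifiable. The `pack_asset` and `unpack_asset` names are hypothetical. The savings zlib achieves on this synthetic, highly repetitive input say nothing about the 80 percent benchmark figure.

```python
import hashlib
import zlib


def pack_asset(raw: bytes) -> dict:
    """Compress an asset and keep a digest of the original for later verification."""
    return {
        "payload": zlib.compress(raw, level=9),
        "sha256": hashlib.sha256(raw).hexdigest(),
    }


def unpack_asset(record: dict) -> bytes:
    """Decompress the payload and check it against the stored digest."""
    raw = zlib.decompress(record["payload"])
    if hashlib.sha256(raw).hexdigest() != record["sha256"]:
        raise ValueError("decompressed asset does not match its digest")
    return raw


def reduction(raw: bytes, record: dict) -> float:
    """Fraction of space saved by compression."""
    return 1 - len(record["payload"]) / len(raw)


# Synthetic, highly repetitive data (like a texture with uniform regions) compresses well.
texture = bytes(range(16)) * 4096
rec = pack_asset(texture)
print(unpack_asset(rec) == texture, f"saved {reduction(texture, rec):.0%}")
```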

Vanar addresses latency with a high-throughput Layer 1 architecture designed for parallel processing. While exact production figures fluctuate, Vanar’s architecture targets sub-second finality and sustained throughput above 10,000 transactions per second. That moves blockchain responsiveness closer to traditional cloud gaming servers, which typically respond within 20 to 60 milliseconds.
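Throughput translates directly into how many players a chain can serve at once. The following is a minimal sketch. It assumes every on-chain action is one transaction and uses a hypothetical rate of five actions per active player per second.

```python
def concurrent_users(chain_tps: float, actions_per_user_per_sec: float) -> float:
    """Players a chain can serve if every on-chain action is one transaction."""
    return chain_tps / actions_per_user_per_sec


TARGET_TPS = 10_000   # Vanar's stated throughput target
ACTIONS_PER_USER = 5  # hypothetical on-chain actions per active player per second

print(concurrent_users(TARGET_TPS, ACTIONS_PER_USER))  # 2000.0
```

At Ethereum's 30 transactions per second, the same formula gives six concurrent players.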

To compare the approaches directly: a traditional blockchain handles around 30 transactions per second with 2 to 15 seconds of confirmation time. It stores transaction metadata on-chain and relies on external storage for files. A high-performance chain like Solana can process thousands of transactions per second with sub-second latency, but it still offloads most data storage. Vanar’s design pushes toward high throughput combined with native data compression and persistent storage, so the chain can manage digital environments directly instead of outsourcing them.
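The comparison comes down to three properties: throughput, confirmation time, and whether the chain stores asset data itself. The sketch below encodes the figures as the text characterizes them. It represents "sub-second" as a 1-second upper bound. Vanar's values are its stated targets rather than measurements, and the requirement thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChainProfile:
    name: str
    tps: int               # transactions per second
    max_finality_s: float  # worst-case confirmation time, in seconds
    native_storage: bool   # can the chain hold asset data itself?


# Figures as characterized in the text; Vanar's are design targets, not measurements.
PROFILES = [
    ChainProfile("traditional chain", 30, 15.0, False),
    ChainProfile("Solana-style chain", 3_000, 1.0, False),  # "sub-second", bounded at 1 s
    ChainProfile("Vanar (targets)", 10_000, 1.0, True),     # "sub-second finality" target
]


def meets(p: ChainProfile, min_tps: int, max_finality_s: float, need_storage: bool) -> bool:
    """True if a chain profile satisfies a (hypothetical) workload requirement."""
    return (
        p.tps >= min_tps
        and p.max_finality_s <= max_finality_s
        and (p.native_storage or not need_storage)
    )


print([p.name for p in PROFILES if meets(p, 1_000, 1.0, False)])  # ['Solana-style chain', 'Vanar (targets)']
print([p.name for p in PROFILES if meets(p, 1_000, 1.0, True)])   # ['Vanar (targets)']
```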

This matters because the metaverse is growing more complex, not simpler. Enterprise metaverse applications in training, manufacturing, and healthcare simulation continued to grow significantly in 2025. Siemens and Hyundai reported double-digit productivity gains from immersive simulations, but they also cited data consistency and latency as limitations of their current infrastructure. These use cases demand digital continuity, not just fast payments.

Why is this trend accelerating now? Part of the answer lies in hardware maturity. Affordable VR headsets, AI-driven content creation, and real-time rendering engines have finally reached consumer and enterprise readiness. Once users can enter immersive spaces easily, expectations change. Lag, missing assets, and broken state transitions become unacceptable.

From a trader’s point of view, this reminds me of the early DeFi boom of 2020. Chains struggled, fees exploded, and networks froze. Over time, new architectures emerged that matched the new workloads. The metaverse is entering a similar phase: infrastructure is being stress-tested by real users, not just speculative activity.

One thing I’ve learned over the years is that narratives fade, but engineering problems persist. The core challenge here is not hype. It is math, physics, and bandwidth. Moving massive amounts of state data in real time is simply hard. Chains that adapt at the architectural level stand a better chance of surviving long-term.

Vanar’s response focuses less on marketing promises and more on solving structural constraints: storage, latency, data persistence, and execution efficiency. Whether this approach becomes dominant remains to be seen, but the direction aligns closely with where workloads are clearly heading.
