A few months back, I was putting together a small AI experiment. Nothing fancy. Just training a lightweight model on scraped market data for a trading bot side project. Once I started logging images, intermediate outputs, and raw files, storage ballooned fast into the hundreds of gigabytes. Centralized cloud storage worked, but the costs stacked up quickly for data I only needed occasionally, and I never liked the feeling of being locked into one provider’s rules. On the decentralized side, older storage networks came with their own headaches: slow uploads, uneven retrieval, and pricing that made large, unstructured files feel like an afterthought. Nothing broke outright, but it left that familiar frustration. In a space built around resilience, why does storing big, boring blobs still feel fragile or overpriced?

That question points to a deeper issue with decentralized storage. Most systems treat all data the same, whether it’s a few bytes of metadata or massive datasets full of images and logs. To avoid loss, they lean heavily on replication, copying data many times over. That keeps things safe in theory, but it drives costs up and slows the network down. Nodes end up holding inefficient copies, and when churn happens, recovery can get messy. For users, this means paying extra for redundancy that doesn’t always hold under real stress. For developers, it means avoiding storage-heavy apps altogether, because retrieval can lag or fail when it matters most. AI pipelines, media platforms, and analytics workloads all suffer when access to large files becomes unpredictable.

I usually think of it like how large libraries handle archives. You don’t copy every rare book to every branch. That would be wasteful. Instead, you distribute collections intelligently, with enough overlap that if one vault goes offline, the material can still be reconstructed elsewhere. The goal isn’t maximal duplication. It’s efficient durability.
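To put rough numbers on "efficient durability", here is a minimal sketch comparing plain replication with a generic (k, n) erasure code at the same storage cost. The parameters (5 copies, 20-of-100 fragments) are my own illustrative picks, not Walrus settings, and the model assumes each copy or fragment sits on a different node.

```python
def replication(copies: int) -> dict:
    """Full copies: any single surviving copy is enough to read the file."""
    if copies < 1:
        raise ValueError("need at least one copy")
    return {"overhead": float(copies), "tolerated_losses": copies - 1}


def erasure_code(k: int, n: int) -> dict:
    """Generic (k, n) erasure code: any k of the n fragments rebuild the file."""
    if not 0 < k <= n:
        raise ValueError("need 0 < k <= n")
    return {"overhead": n / k, "tolerated_losses": n - k}


# Illustrative parameters only (assumptions, not protocol values).
print(replication(5))         # 5x storage, survives losing 4 holders
print(erasure_code(20, 100))  # 5x storage, survives losing 80 holders
```

Same storage bill, very different failure tolerance. That gap is the whole argument for coding over copying.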

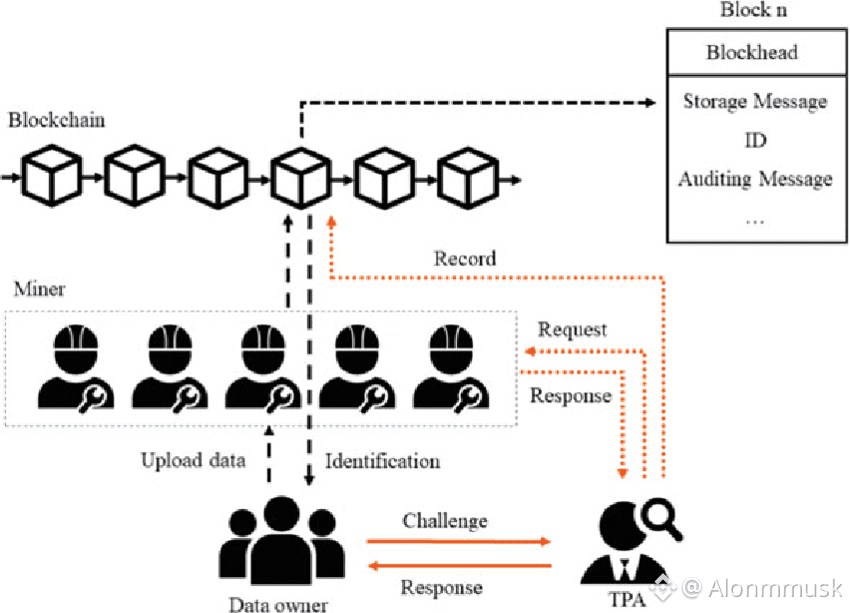

That’s the design lane Walrus Protocol sticks to. It doesn’t try to be a full filesystem or a general compute layer. It focuses narrowly on blob storage. The control plane lives on Sui, handling coordination, proofs, and payments, while the actual data lives off-chain on specialized storage nodes. Files are erasure-coded into slivers and spread across a committee, with automatic repair when pieces go missing. The system avoids anything beyond store-and-retrieve on purpose. No complex querying. No computation on the data itself. That restraint keeps operations lean and predictable. In practice, apps can upload data once, register it on-chain, and rely on fast retrieval without dragging execution logic into the mix. Since mainnet, blobs are represented as Sui objects, which makes it easy for contracts to manage ownership, lifetimes, or transfers without touching the underlying data.

Under the hood, one of the more interesting pieces is the Red Stuff encoding. It uses a two-dimensional erasure scheme with fountain codes, targeting roughly five times redundancy, meaning about five bytes stored across the network for every byte of original data. That’s enough to survive node churn without blowing up storage costs. The encoding is designed for asynchronous networks, where delays happen, so challenges don’t assume instant responses. Nodes are periodically tested to prove they still hold their fragments. Another important detail is how epochs transition. Committees rotate every few weeks based on stake, but handoffs overlap so data isn’t dropped during the switch. With roughly 125 active nodes today, handling about 538 terabytes of utilized capacity out of more than 4,000 terabytes available, that overlap matters.
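The "two-dimensional" part is easier to see with a toy. The sketch below is not Red Stuff. It swaps in simple XOR parity over a small grid, purely to show why encoding along both rows and columns gives two independent ways to rebuild a lost piece. All function names are hypothetical.

```python
from functools import reduce
from operator import xor


def encode(grid):
    """Compute one XOR parity value per row and one per column."""
    row_parity = [reduce(xor, row) for row in grid]
    col_parity = [reduce(xor, col) for col in zip(*grid)]
    return row_parity, col_parity


def recover_from_row(grid, row_parity, i, j):
    """Rebuild cell (i, j) from the rest of row i plus that row's parity."""
    others = (v for c, v in enumerate(grid[i]) if c != j)
    return reduce(xor, others, row_parity[i])


def recover_from_col(grid, col_parity, i, j):
    """Rebuild cell (i, j) from the rest of column j plus that column's parity."""
    others = (row[j] for r, row in enumerate(grid) if r != i)
    return reduce(xor, others, col_parity[j])


grid = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
row_parity, col_parity = encode(grid)
grid[1][1] = None  # simulate a lost fragment; the recover functions never read it

print(recover_from_row(grid, row_parity, 1, 1))  # 5
print(recover_from_col(grid, col_parity, 1, 1))  # 5
```

A real scheme tolerates far more than one missing piece per row or column, but the intuition carries over: a node repairing its fragment only needs help along one dimension, not a full copy of the file.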

The WAL token plays a very utilitarian role. It’s used to pay for storage, with fees denominated in FROST subunits to cover encoding and on-chain attestation. Token holders can delegate stake to storage nodes. Those with enough backing join the committee and earn rewards from an end-of-epoch pool tied to how much data they serve. Governance flows through the same mechanism, with staked tokens influencing protocol changes, like the upcoming SEAL access control expansion planned for Q2 2026. Settlement happens through Sui contracts, with a portion of fees burned to manage inflation. There’s no yield theater here. WAL exists to keep nodes online and data available.
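For a feel of the accounting, here is a rough sketch of fee math in integer subunits and a pro-rata split of an end-of-epoch pool. Two assumptions to flag: I'm taking 1 WAL = 1,000,000,000 FROST, and the split rule (proportional to a "served" figure per node) is a simplification I made up for illustration, not the protocol's actual reward formula. Node names and amounts are hypothetical.

```python
from decimal import Decimal

FROST_PER_WAL = 1_000_000_000  # assumption: 1 WAL = 1e9 FROST


def wal_to_frost(wal: str) -> int:
    """Convert a decimal WAL amount (as a string) to integer FROST."""
    return int(Decimal(wal) * FROST_PER_WAL)


def split_pool(pool_frost: int, served: dict[str, int]) -> dict[str, int]:
    """Split a reward pool pro rata by units served (hypothetical rule).

    Floor division keeps everything in integers; any leftover dust
    simply stays unallocated in this sketch.
    """
    total = sum(served.values())
    if total == 0:
        return {node: 0 for node in served}
    return {node: pool_frost * units // total for node, units in served.items()}


pool = wal_to_frost("1.5")  # 1_500_000_000 FROST
print(split_pool(pool, {"node-a": 3, "node-b": 1}))
```

Working in integer subunits avoids floating-point rounding in payments, which is the usual reason tokens define a unit like FROST in the first place.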

Market-wise, the numbers are fairly straightforward. Capitalization sits around 200 million dollars, with circulating supply close to 1.5 billion tokens after last year’s unlocks. Daily trading volume of around 50 million dollars gives enough liquidity without turning it into a momentum playground.

Short-term price action tends to follow headlines. AI data narratives or integrations like the January 2026 Team Liquid deal, which involved migrating around 250 terabytes of esports footage, can spark quick moves. I’ve seen that pattern enough times to know how it usually ends: a spike, then a cool-off when attention shifts. Long-term, the story is slower. If daily uploads keep climbing from the current roughly 5,500 blobs, and utilization moves beyond the current 12.9 percent of total capacity, demand builds through real usage. That’s where value shows up, not in chart patterns but in apps quietly treating the network as default storage, like the realtbook NFT collection did for permanent artwork.
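A quick sanity check on the utilization figure, using only the numbers quoted in this post. The helper names are mine.

```python
def utilization_pct(used_tb: float, capacity_tb: float) -> float:
    """Share of capacity in use, as a percentage."""
    return 100 * used_tb / capacity_tb


def implied_capacity_tb(used_tb: float, pct: float) -> float:
    """Total capacity implied by a used amount and a utilization percentage."""
    return used_tb / (pct / 100)


# 538 TB against a flat 4,000 TB would be a bit over 13 percent...
print(round(utilization_pct(538, 4000), 2))
# ...so 12.9 percent implies capacity somewhat above 4,000 TB.
print(round(implied_capacity_tb(538, 12.9)))
```

So the 12.9 percent figure lines up with "more than 4,000" terabytes of capacity, somewhere near 4,170.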

There are real risks. Filecoin and Arweave already have deep ecosystems and mindshare. Even if Walrus undercuts costs dramatically for certain workloads, developers often stick with what they know. Sui’s ecosystem concentration is another variable. One scenario I keep in mind is correlated churn. If, during a single epoch, 30 percent of the 125 nodes (roughly 38 of them) drop at once due to shared infrastructure issues, self-healing could strain remaining bandwidth, delaying reconstruction and causing temporary unavailability. That kind of hiccup is survivable once, but damaging if repeated. And multichain expansion in 2026 is still an open question. Will bridges to other ecosystems bring meaningful volume, or just dilute focus?
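To size that churn scenario, here is a back-of-envelope sketch. It assumes stored data is spread evenly across nodes, that the 538 terabytes is what actually sits on disk, and that each surviving node can dedicate a fixed amount of bandwidth to repair. The 1 Gbps figure is a hypothetical placeholder, and real repair traffic would depend on the encoding, so treat the result as a loose lower bound.

```python
import math


def churn_scenario(nodes: int, drop_fraction: float,
                   stored_tb: float, per_node_gbps: float) -> dict:
    """Estimate how much data must be rebuilt and how long it might take."""
    dropped = math.ceil(nodes * drop_fraction)
    survivors = nodes - dropped
    # Assumption: data is spread evenly, so lost share = dropped share.
    lost_tb = stored_tb * dropped / nodes
    # Gbps -> GB/s (divide by 8) -> GB/h (times 3600) -> TB/h (divide by 1000)
    repair_tb_per_hour = survivors * per_node_gbps / 8 * 3600 / 1000
    return {
        "dropped": dropped,
        "survivors": survivors,
        "lost_tb": lost_tb,
        "repair_hours": lost_tb / repair_tb_per_hour,
    }


result = churn_scenario(nodes=125, drop_fraction=0.30,
                        stored_tb=538, per_node_gbps=1.0)
print(result["dropped"], result["survivors"])  # 38 87
print(round(result["repair_hours"], 1))
```

Even under generous assumptions the repair window runs to hours, not minutes, which is why repeated correlated outages worry me more than a single one.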

In the quieter stretches, storage infrastructure shows its value slowly. Fifteen million blobs stored so far points to traction, but the real signal is habit. When applications keep coming back for the same data, day after day, without thinking about it, durability turns into dependence. That’s when infrastructure stops being an experiment and starts becoming invisible, which is usually the goal.
