@Vanarchain always enters my thinking through the problem of volume. It tends to come up later, after the obvious debates have run their course and the usual metrics fail to explain why systems that look efficient on paper still bleed value in practice. I remember a discussion where one person blamed DeFi’s limits on throughput and another blamed regulation. Both arguments missed what sat underneath them: we keep accelerating data without improving our ability to understand it.

That’s where Vanar begins to make sense. Not as a pitch, but as a response to fatigue. Fatigue from protocols that record everything yet learn nothing. From governance processes flooded with proposals no one can meaningfully reason through. From capital that moves constantly but accumulates insight painfully slowly.

The understated premise behind Vanar is that on-chain data is treated backwards. We preserve too much and interpret too little. Storage has become cheap, so we hoard information indiscriminately. Understanding it, however, remains costly. That imbalance creates hidden friction. Traders react without context because historical data is too heavy to access in real time. DAOs repeat errors that are technically documented but functionally unreachable. Compliance risk doesn’t explode; it quietly compounds until it can no longer be ignored.

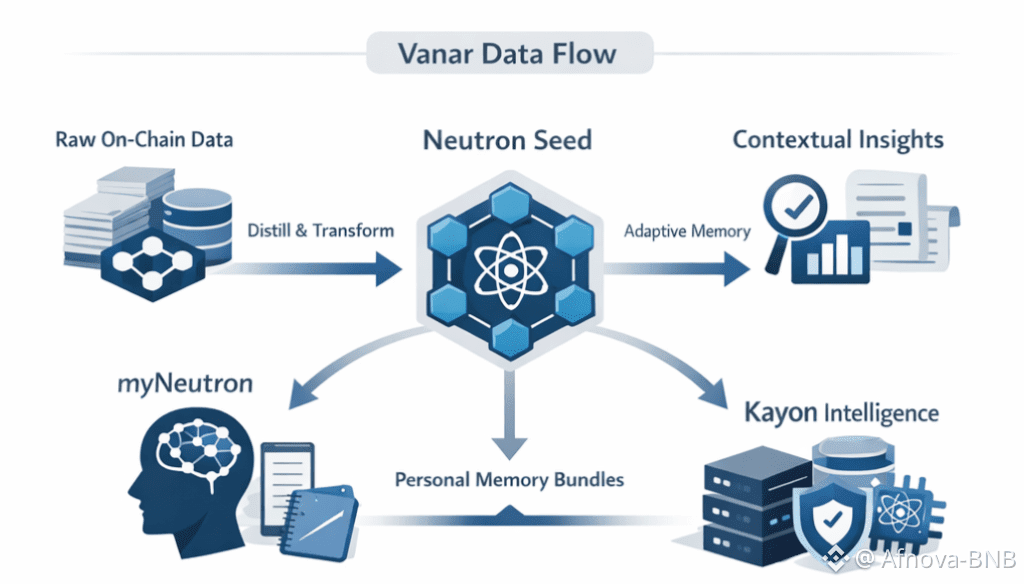

Neutron sits at the center of this shift, not as a compression trick, but as a change in philosophy. Traditional storage forces a binary choice: keep everything exactly as it was, or discard it entirely. Real decision-making doesn’t work like that. Humans don’t recall every detail, yet they retain what matters. Neutron mirrors that instinct. It doesn’t erase information; it reshapes it into something usable.

When raw data is distilled into a Neutron Seed, the meaningful change isn’t the dramatic reduction in size. It’s that memory becomes actionable again. A legal document isn’t just archived; it’s searchable, provable, and usable without dragging the full file through every interaction. An invoice stops being inert storage and becomes a live reference for systems that understand its meaning. This matters because DeFi’s real inefficiency isn’t gas costs; it’s cognition. Systems don’t fail from a lack of data; they fail because reasoning over that data is too expensive.
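To make the “searchable, provable, usable” claim concrete, here is a minimal Python sketch of the general pattern, under loud assumptions: `Seed`, `make_seed`, `search`, and `verify` are hypothetical names, the keyword and regex extraction stand in for whatever semantic distillation Neutron actually performs, and nothing here reflects Vanar’s real Seed format or API. It shows only the idea: keep a SHA-256 commitment to the full file plus a small distilled record, query the record, and check a document against the commitment when proof is needed.

```python
from __future__ import annotations

import hashlib
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Seed:
    # Hypothetical compact record for illustration only; NOT Neutron's real format.
    digest: str           # SHA-256 of the full original bytes (the "proof" anchor)
    size: int             # length of the original document in bytes
    keywords: frozenset   # distilled, searchable terms
    amount: float | None  # one example extracted field (e.g. an invoice total)


# Assumed toy stopword list; a real system would use semantic extraction instead.
STOPWORDS = {"the", "a", "an", "of", "and", "to", "for", "is", "in", "on"}


def make_seed(document: str) -> Seed:
    """Distill a document into a small record plus a hash commitment."""
    raw = document.encode("utf-8")
    words = re.findall(r"[a-z0-9]+", document.lower())
    keywords = frozenset(w for w in words if w not in STOPWORDS and len(w) > 2)
    # Pull out a single structured field so the seed is usable, not just searchable.
    match = re.search(r"total[:\s]+\$?([0-9]+(?:\.[0-9]+)?)", document, re.IGNORECASE)
    amount = float(match.group(1)) if match else None
    return Seed(hashlib.sha256(raw).hexdigest(), len(raw), keywords, amount)


def search(seeds: list[Seed], term: str) -> list[Seed]:
    """Query only the compact seeds; the full documents are never loaded."""
    return [s for s in seeds if term.lower() in s.keywords]


def verify(seed: Seed, document: str) -> bool:
    """Check that a candidate document is exactly the one the seed was made from."""
    return hashlib.sha256(document.encode("utf-8")).hexdigest() == seed.digest


if __name__ == "__main__":
    invoice = "Invoice 42. Total: $1250.00 due to Acme Corp."
    contract = "Lease agreement between Acme Corp and Beta LLC."
    seeds = [make_seed(invoice), make_seed(contract)]

    print(len(search(seeds, "acme")))   # both seeds mention Acme
    print(len(search(seeds, "lease")))  # only the contract
    print(seeds[0].amount)              # extracted invoice total
    print(verify(seeds[0], invoice))          # untouched original passes
    print(verify(seeds[0], invoice + " "))    # any edit breaks the commitment
```

The pairing is the point of the design: a hash alone proves integrity but says nothing about meaning, and a keyword set says something about meaning but proves nothing. Keeping both in one small record is the simplest way to get searchable and provable at once.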

I have watched capital get misallocated during volatility not due to recklessness, but because systems offered no way to contextualize risk in the moment. Everything was technically transparent, yet practically unreadable. By the time insight arrived, action was already forced. Neutron quietly pushes back against that dynamic by lowering the cost of remembering, and with it the need to act blindly.

The introduction of myNeutron adds another layer that feels intentionally understated. Most personal AI tools chase productivity theater. This feels closer to a research notebook that finally escaped isolation. Memory bundles aren’t exciting from a marketing perspective, but they’re deeply practical. Analysts don’t lose money because they lack tools; they lose it because their understanding is scattered across platforms that don’t talk to each other. A private, portable memory anchored on-chain isn’t about convenience; it’s about continuity, something DeFi has never handled well across cycles.

If Neutron is about remembering correctly, Kayon is about acting responsibly on what’s remembered. This is where discussions often become uncomfortable. Many protocols outsource intelligence to off-chain services and call it decentralization as long as responses arrive quickly. That shortcut has consequences. Latency creates blind spots. External AI oracles introduce trust assumptions that quietly re-centralize power. When failures happen, accountability lives somewhere else.

Kayon’s choice to embed intelligence directly into validator infrastructure feels less like novelty and more like course correction. Intelligence isn’t layered on top; it’s woven into consensus itself. That matters because real risk management doesn’t happen in governance calls; it happens in milliseconds. When a system can answer natural-language questions about flows, behavior, or exposure without leaving the chain, decisions change. Not louder decisions, but earlier ones.

People often focus on transactional examples, but the deeper value compounds over time. Governance becomes less ceremonial when delegate behavior can be surfaced without manual forensics. Token economies stop rewarding only the fastest exits when churn is understood before it accelerates. Compliance shifts from retroactive justification to a condition of execution. None of this is flashy. All of it is stabilizing.

What stands out most is that Vanar tackles problems many protocols prefer to avoid. Growth efforts fail not because markets are hostile, but because systems are built to optimize short-term signals. Data lives forever, but incentives reset constantly. Vanar doesn’t directly rewrite incentives; it repairs memory. And memory, quietly, shapes behavior.

After enough cycles, it becomes clear that durable systems aren’t created by clever token mechanics alone. They’re built by architectures that reduce panic, guesswork, and forced decisions. Vanar feels designed by people who have watched liquidity drain not from fear, but from exhaustion.

In the end, the protocols that matter are the ones that help participants think under pressure: not faster, but more clearly. Vanar doesn’t promise certainty. It provides structure. And in markets defined by uncertainty, structure is often the most valuable contribution of all.