@Vanarchain #vanar #VANRY $VANRY

There’s a moment when you first use a blockchain that sticks with you. You send something meaningful — pay a supplier, settle a stablecoin invoice, or move funds that matter for a bill — and then you wait. A few seconds stretch into minutes in your mind, and every refresh feels too slow. You’re impatient, not because you want excitement, but because real money and real commitments live on those rails. People like me watch that friction closely. It’s where a system either earns trust or it doesn’t.

Vanar Chain began with that kind of practical frustration in mind: a belief that if blockchain is going to host real-world value, it has to behave like real financial infrastructure. Not as a marketing slogan. Not as an academic theory. As something people can rely on every day with predictable speed, cost, and certainty. That’s why the team is focusing on scalability solutions that aren’t just about headline-grabbing numbers, but about how transactions flow and how data lives on-chain in ways that truly matter.

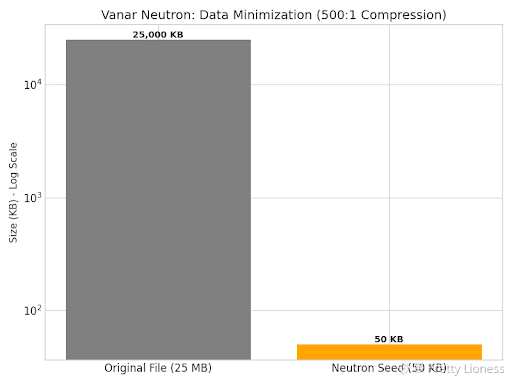

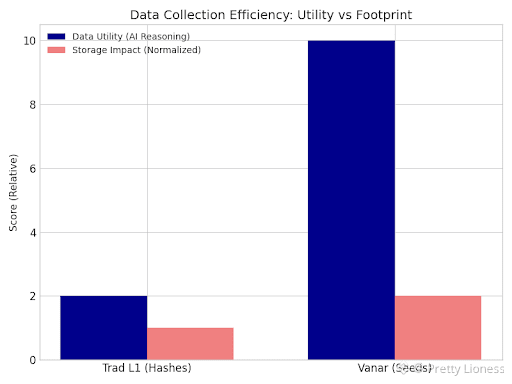

At its core, Vanar is a Layer 1 blockchain built to support intelligent, real-world finance. It embeds artificial intelligence into its architecture, aiming to turn raw data into actionable blockchain state rather than just storing opaque hash references to data held off-chain. The team made that choice because many blockchains today rely on centralized services for data storage, which introduces single points of failure, the very thing blockchain was supposed to eliminate. A major cloud outage that disrupted exchanges and made assets disappear underscored this risk in real terms. Vanar responded with an on-chain data storage system called Neutron that uses AI-powered compression to shrink large files by as much as 500:1 so they can live directly on the ledger instead of being hosted somewhere else. Neutron doesn’t just squeeze bits. It preserves semantics (the meaning inside the data) so applications can query and verify it without trusting a third party.
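To put the compression figure in perspective, here is a back-of-the-envelope sketch. The 500:1 ratio is the best case quoted above ("up to"), and the 25 MB file size and the function name are illustrative assumptions, not part of any Vanar or Neutron API.

```python
def compressed_size_bytes(original_bytes: int, ratio: float) -> float:
    """Size after compression at a given ratio (500 means 500:1)."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return original_bytes / ratio


# Hypothetical 25 MB document at the best-case 500:1 ratio cited in the text.
original = 25 * 1_000_000
best_case = compressed_size_bytes(original, 500)
print(f"{best_case / 1_000:.0f} KB")  # prints "50 KB"
```

Real files will compress less than the best case, so a ratio like this is better treated as a ceiling than as a planning number.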

If you’re thinking about scalability solutions in Vanar, the first pillar is that data layer. Traditional blockchains struggle with on-chain storage because every node must keep a full copy, and that becomes expensive and slow. Vanar’s choice was to integrate AI at the core so that every piece of data becomes small, verifiable, and queryable rather than a bulky external artifact. This decision is not about futuristic buzz. It’s about making storage practical for the real-world financial documents, contracts, and compliance records that businesses depend on.

Neutron alone would be novel, but scalability in Vanar isn’t just about compression. The team is also incorporating AI-driven validators (with Ankr as the first AI validator) to enhance the speed, accuracy, and security of transaction validation and smart contract execution. If a network can validate faster and with fewer bottlenecks, it can handle more transactions without choking. The integration of AI into validation processes aims to reduce friction in how transactions and data integrity checks operate across the network, again with reliability as the priority. Vanar plans to welcome additional AI validators to strengthen this capability.

They’ve also built the base chain with EVM compatibility and fixed, ultra-low transaction fees — often cited as $0.0005 per transaction — to make repeated, small-value transactions economically sensible. That matters if you’re talking about mainstream payments, microtransactions, or real-world asset tokenization. No one plans a business model around fees that spike unpredictably.
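A quick sketch shows why a fixed fee makes budgeting straightforward. The $0.0005 figure is the one cited above. The monthly transaction count and the function name are hypothetical, chosen only for illustration.

```python
from decimal import Decimal

# Per-transaction fee as cited in the text; treat it as an assumption here.
FIXED_FEE_USD = Decimal("0.0005")


def total_fee_usd(tx_count: int, fee_per_tx: Decimal = FIXED_FEE_USD) -> Decimal:
    """Total cost of tx_count transactions at a flat per-transaction fee."""
    if tx_count < 0:
        raise ValueError("tx_count must be non-negative")
    return fee_per_tx * tx_count


# Hypothetical business sending 100,000 small payments a month.
monthly = total_fee_usd(100_000)
print(f"${monthly:.2f}")  # prints "$50.00"
```

With a flat fee the cost scales linearly with volume, so the only unknown in the forecast is how many transactions you send.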

Metrics that matter in a system like Vanar are different from the headline TPS numbers that get hyped in conference rooms. What really matters is latency (how long before a transaction is irreversibly settled) and data accessibility (how quickly an application can verify a contract or document on-chain without a cloud dependency). These matter because they determine whether a merchant accepts a stablecoin payment without fear of reversal, whether a remittance arrives reliably, and whether a compliance system can perform a real-time audit. That’s where edge-case bugs and design trade-offs are most visible. Real-world workflows care about sub-second finality, predictable transaction cost, and verifiable data provenance. Vanar’s scalability choices aim at those exact metrics.
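One way to make the latency metric concrete is to look at percentiles of time-to-finality rather than an average. The sketch below is a minimal illustration: the sample timestamps are made up, the helper names are hypothetical, and it uses a simple nearest-rank percentile rather than anything specific to Vanar.

```python
import math


def finality_latencies_ms(samples):
    """Latency per transaction from (submitted_ms, finalized_ms) pairs."""
    return [finalized - submitted for submitted, finalized in samples]


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Made-up (submitted, finalized) timestamps in milliseconds.
samples = [(0, 400), (1000, 1350), (2000, 2900), (3000, 3420), (4000, 6100)]
latencies = finality_latencies_ms(samples)

print(percentile(latencies, 50))  # 420
print(percentile(latencies, 95))  # 2100
```

In this made-up sample the median is sub-second, but the slowest transaction takes over two seconds. That tail is what a merchant waiting on settlement actually experiences, and a headline TPS number does not show it.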

But it’s not perfect, and there are genuine risks. A lot of the innovation rests on novel AI-driven compression and reasoning layers. Any time you introduce complex components, you introduce new modes of failure. Semantic compression and AI logic engines must be audited, tested, and stress-tested under diverse conditions. If they get compression or reasoning wrong, the blockchain will propagate those errors. Integrating AI into validation and storage opens the door to subtle bugs that are harder to simulate than failures in traditional cryptographic operations. Moreover, the governance and validator model, a hybrid of Proof of Authority and Proof of Reputation, walks a fine line. Centralization can creep in if reputation is not widely distributed or if the initial validator set is too concentrated. Those are real governance and decentralization questions that matter for network resilience.

Another risk is ecosystem adoption. The team is working with middleware and compliance partners to make tokenization of real-world assets easier, and building tools that could help enterprises onboard. But bridging TradFi expectations with blockchain realities is hard. If the UX remains too complex or the developer experience doesn’t keep up with expectations, usage may stagnate. Success is about retention, the habit users form in coming back again and again because the system feels dependable, not just hype around throughput or major listings. Adoption metrics will tell the story over time, showing whether we’re seeing real usage rather than just promotional activity.

In terms of token economics, Vanar’s native token $VANRY plays a role beyond speculation. It is used to pay for transactions, power smart contracts, and enable actions within Neutron and other modules. There are initiatives to tie revenue directly into token dynamics, with buybacks and burns triggered by real service usage, not just market trading. That’s crucial because if token mechanics are tied to utility rather than price bets, the network can sustain itself in a way that aligns incentives between users and builders.

Ultimately, the future could look like this: a blockchain where data is truly on-chain and queryable, where financial documents live with the same permanence as money itself, where payments settle predictably, and where AI helps automate compliance and risk checks without taking you outside the ledger. That’s not a fantasy. It’s what the design choices in Vanar are trying to make practical. But it demands patient engineering, rigorous validation, and sober measurement of real adoption.

I’ve watched systems evolve from theory to operations, and what always matters is this: if the network feels reliable, people build workflows around it. That’s what leads to retention. If users come back because the system works in the real world, not because of a story, then we’re seeing a foundation for real usage. The rest follows from that.