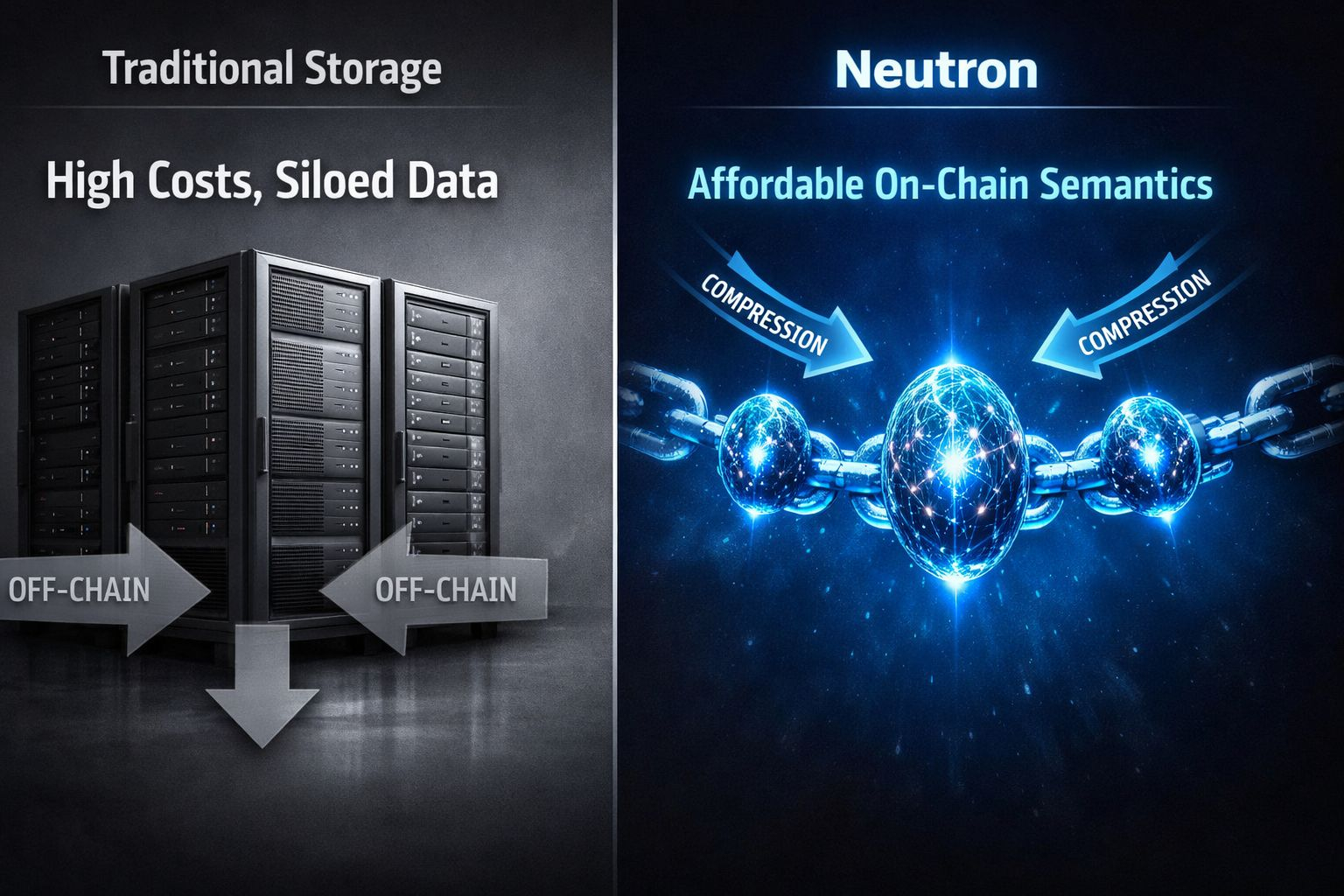

Think about it: we've all accepted that blockchains are great for ledgers but terrible for anything resembling actual data. They're too clunky and too costly, so the heavy lifting always gets shoved off to some centralized server, or hopes get pinned on decentralized but unreliable alternatives. Yet here we are, in a world where chains are starting to hold not just hashes but compressed essences of files, conversations, even logic, without choking on fees or sacrificing verifiability.

What Neutron does, at its heart, is rework how data lands on the Vanar Chain. Instead of dumping raw bytes into traditional storage (think bloated databases or off-chain buckets that demand constant upkeep and introduce points of failure), it layers in AI-driven compression. Semantic analysis picks apart meaning, heuristics trim redundancies, and algorithms pack the result into packets called Seeds: tiny, cryptographically signed, able to shrink a 25MB video down to 50KB or less, and embeddable directly in transactions. Builders shift from wrestling with storage costs that scale linearly with file size to handling data that's lightweight, queryable, and native to the chain. No more bridging to external systems; the network tilts toward self-containment, where gas fees for data ops drop because you're moving fragments, not wholes.
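To put those figures in perspective, here is the arithmetic as a quick sketch. The 25MB and 50KB numbers come from the paragraph above; the decimal unit convention and the `compression_ratio` helper are assumptions made for illustration and are not part of any Neutron API.

```python
# Back-of-the-envelope check of the compression figures quoted above.
# Assumption: decimal units (1 MB = 1,000,000 bytes, 1 KB = 1,000 bytes).

def compression_ratio(original_bytes: int, compressed_bytes: int) -> float:
    """Return how many times smaller the compressed form is."""
    if compressed_bytes <= 0:
        raise ValueError("compressed size must be positive")
    return original_bytes / compressed_bytes

original = 25 * 1_000_000  # the 25MB video from the text
seed = 50 * 1_000          # the 50KB Seed from the text

ratio = compression_ratio(original, seed)
fraction_kept = seed / original

print(f"ratio: {ratio:.0f}x")              # ratio: 500x
print(f"bytes kept: {fraction_kept:.2%}")  # bytes kept: 0.20%
```

A ratio of roughly 500x on video, which is usually already codec-compressed, suggests the Seed is a semantic representation rather than a byte-for-byte archive of the original.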

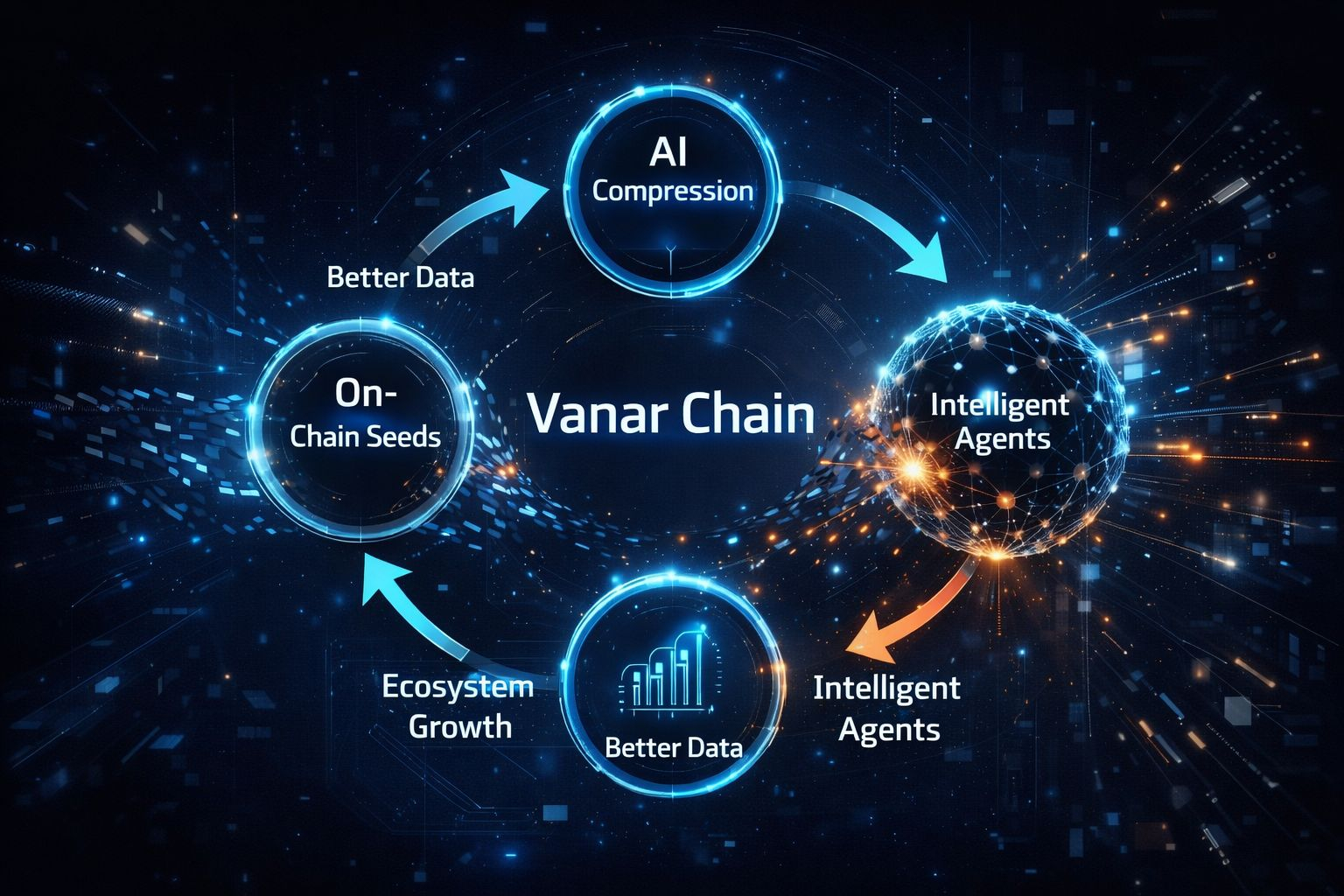

But the real shift hides in what follows. With data no longer inert—sitting there as dumb blobs—but restructured into semantic forms, agents and apps gain access to contextual memory. A financial document isn't just stored; it becomes a trigger for automated compliance checks or risk assessments, all on-chain. This quietly opens doors to ecosystems where AI doesn't pull from siloed clouds but draws from verifiable, shared ledgers. Persistent knowledge accumulates, turning isolated transactions into interconnected narratives that evolve with the network.

Still, let's not pretend this setup is flawless. Compression, by nature, risks losing nuance—fine for structured docs or videos, but what about highly variable data like raw sensor feeds where every bit counts? If the AI misinterprets semantics during packing, reconstruction could falter, leading to disputes or failed verifications. Adoption barriers loom large too: developers accustomed to familiar tools might balk at learning Neutron's APIs, especially if integration demands rethinking app architectures from scratch. And there's the assumption that on-chain everything is always better; in volatile markets, if Vanar's token economics wobble, those "affordable" fees could spike, undermining the whole pitch. These aren't minor quibbles—they could stall momentum if not addressed head-on.
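The verification risk can be made concrete with a minimal sketch. It assumes, purely for illustration, that a digest of the original bytes is recorded alongside the compressed form; the function names and sample data are hypothetical, and nothing here describes how Neutron actually verifies Seeds.

```python
import hashlib

def digest(data: bytes) -> str:
    """SHA-256 hex digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()

def verify_reconstruction(original_digest: str, reconstructed: bytes) -> bool:
    """True only if the reconstructed bytes match the original bit for bit."""
    return digest(reconstructed) == original_digest

# Hypothetical sensor reading where every digit matters.
original = b"sensor reading: 21.4873 C at t=1000"
recorded = digest(original)

exact = b"sensor reading: 21.4873 C at t=1000"
lossy = b"sensor reading: 21.49 C at t=1000"  # nuance lost during packing

print(verify_reconstruction(recorded, exact))  # True
print(verify_reconstruction(recorded, lossy))  # False
```

A bit-exact check like this fails for any lossy reconstruction, even a harmless one, which is why high-precision data is the hard case for semantic compression.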

From there, though, advantages start stacking. Lower storage hurdles mean more builders experiment with data-heavy apps, feeding the chain with diverse inputs. That density trains better AI models natively, sharpening semantic compression over time. Smarter compression pulls in even more complex use cases—think tokenized assets with embedded histories or games with persistent worlds—drawing users who value seamlessness. And as user activity climbs, network effects kick in: validators process richer data flows, token utility hardens, creating a loop where early efficiencies compound into defensible moats against competitors still chained to old models.

A trader who's seen cycles might push back: sure, but haven't we heard this before with IPFS or Arweave, promising cheap decentralized storage without the bloat? Fair point. Those handle permanence well, but they treat data as static artifacts: pin it, hash it, forget the rest. Neutron layers on intelligence: those Seeds aren't just archived; they're programmable, with embedded vectors for similarity searches or agent queries. It's the difference between a filing cabinet and a consultant who knows where everything is and why it matters. If you're building for AI, that semantic edge means your app reasons in real time, not after fetching and parsing off-chain.
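For readers unfamiliar with what "embedded vectors for similarity searches" means in practice, here is a minimal sketch using cosine similarity. The `Seed` class, its fields, and the three-dimensional toy embeddings are all hypothetical; real embeddings have hundreds of dimensions, and this is not Neutron's query interface.

```python
import math
from dataclasses import dataclass
from typing import List

@dataclass
class Seed:
    """Hypothetical stand-in for a Seed carrying an embedding vector."""
    seed_id: str
    embedding: List[float]

def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def most_similar(query: List[float], seeds: List[Seed], top_k: int = 1) -> List[Seed]:
    """Rank seeds by similarity to the query vector, best first."""
    ranked = sorted(
        seeds,
        key=lambda s: cosine_similarity(query, s.embedding),
        reverse=True,
    )
    return ranked[:top_k]

# Toy data: two finance-like documents and one unrelated item.
seeds = [
    Seed("invoice", [0.9, 0.1, 0.0]),
    Seed("game-save", [0.0, 0.2, 0.9]),
    Seed("loan-agreement", [0.8, 0.3, 0.1]),
]

query = [1.0, 0.2, 0.0]  # a "financial document" style query
best = most_similar(query, seeds, top_k=2)
print([s.seed_id for s in best])  # ['invoice', 'loan-agreement']
```

The point of the filing-cabinet comparison is visible here: a content hash can only answer "is this the same file?", while a vector can answer "what is this file like?".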

Picture a vast library condensed into a deck of cards. Each card holds not a summary, but the core logic of a book—enough to query, cross-reference, even infer new chapters without pulling the original volume. That's Neutron in miniature: turning blockchain storage from a warehouse of forgotten tomes into a dynamic index that thinks alongside you.

Timing sharpens the edge here. AI's surge has everyone scrambling for data sovereignty, but Web3 has been lagging, still treating chains as dumb pipes while models train on centralized hoards. Vanar's current phase, with post-launch integrations rolling out and Neutron APIs hitting developer kits amid a market rebound, aligns well with that demand. Regulatory pushes for verifiable AI add urgency; builders need tools that embed proofs from the start. Miss this window, and the narrative slips to whoever nails on-chain intelligence first.

Put simply: Vanar flips storage from a cost center into an intelligence engine, compressing data into on-chain Seeds that let the blockchain not just record, but understand and act.

If I were watching Vanar Chain closely from here, I would track the volume of Neutron Seeds created monthly as a proxy for real adoption; the correlation between $VANRY token velocity and on-chain data throughput to gauge economic health; and the number of third-party integrations announced, since those signal ecosystem buy-in. Within the next 9 months, expect the first mainstream dApp to leverage Neutron for persistent AI memory, potentially in PayFi, where compressed compliance docs automate cross-border settlements at scale.
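The second metric, the correlation between token velocity and data throughput, can be computed with a plain Pearson coefficient. The sketch below assumes one common definition of velocity (transfer volume divided by average circulating supply), and every number in it is a made-up placeholder, not real $VANRY or Vanar Chain data.

```python
import math
from typing import List

def token_velocity(transfer_volume: float, average_supply: float) -> float:
    """Assumed definition: tokens transferred per token of average supply."""
    return transfer_volume / average_supply

def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (spread_x * spread_y)

# PLACEHOLDER monthly figures, invented purely to exercise the functions.
monthly_transfer_volume = [120.0, 150.0, 140.0, 200.0, 260.0]
average_supply = 1000.0
monthly_data_throughput = [3.0, 4.5, 4.0, 6.5, 9.0]

velocity = [token_velocity(v, average_supply) for v in monthly_transfer_volume]
r = pearson(velocity, monthly_data_throughput)
print(f"correlation: {r:.2f}")
```

A coefficient near 1 would suggest token activity moves with data usage rather than with speculation alone, though a handful of monthly points is too few to conclude much.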