It took me a while to realize AI doesn’t care about TPS the way traders do.

For years, throughput was one of the loudest metrics in crypto. Transactions per second. Benchmarks. Stress tests. Leaderboards disguised as infrastructure updates. If a chain could process more activity faster, it was automatically framed as superior.

That framing made sense in a trading-heavy cycle. High-frequency activity, memecoin volatility, arbitrage bots: all of that lives and dies on speed.

But AI doesn’t think like a trader.

When I started looking more closely at AI-focused infrastructure, especially what Vanar is attempting, it forced me to rethink what “performance” even means.

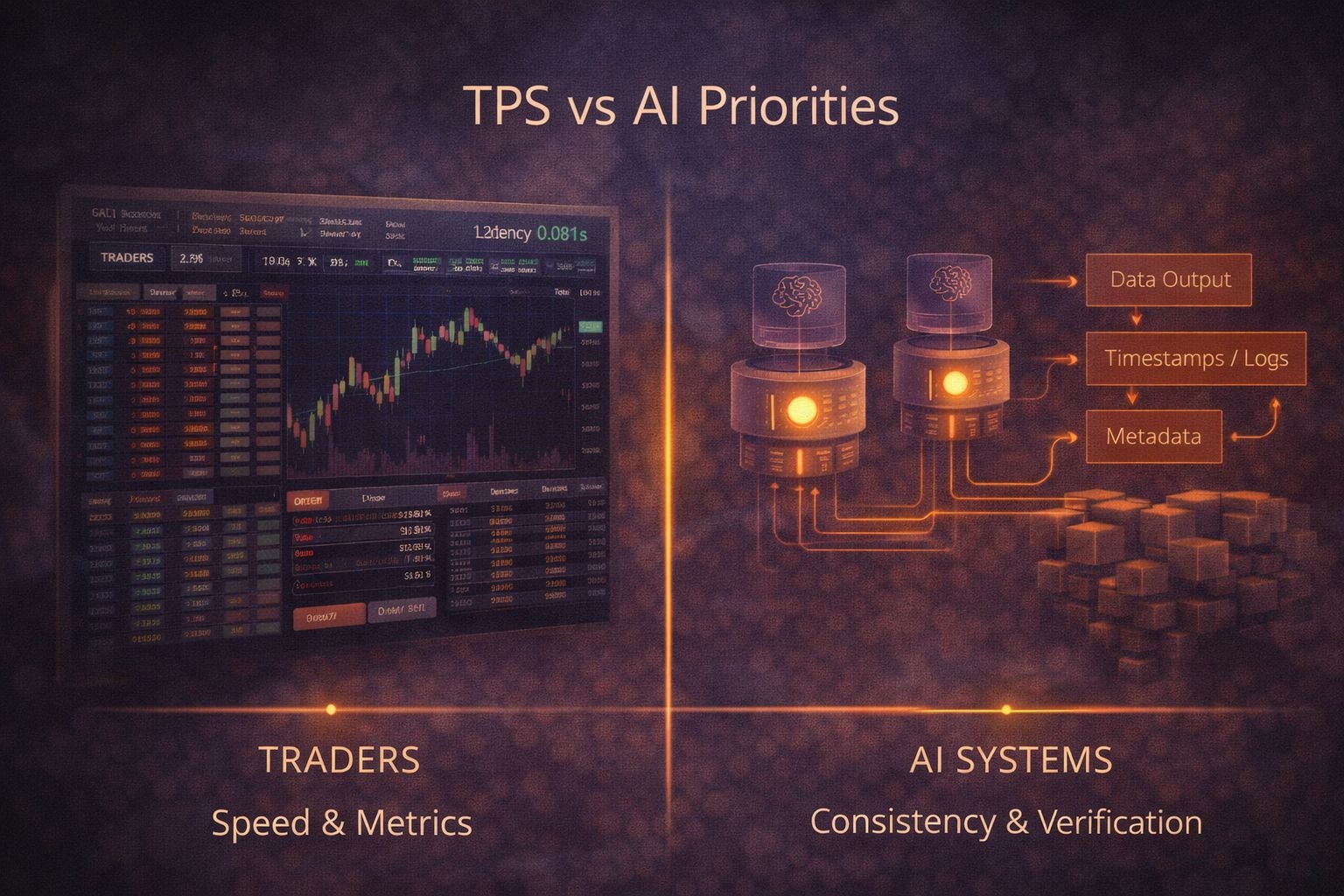

Traders care about TPS because every millisecond can affect price execution. AI systems care about something else entirely. They care about consistency, verification, traceability, and uninterrupted interaction. They care about whether outputs can be trusted, not whether a block was finalized two milliseconds faster.

That’s a different optimization problem.

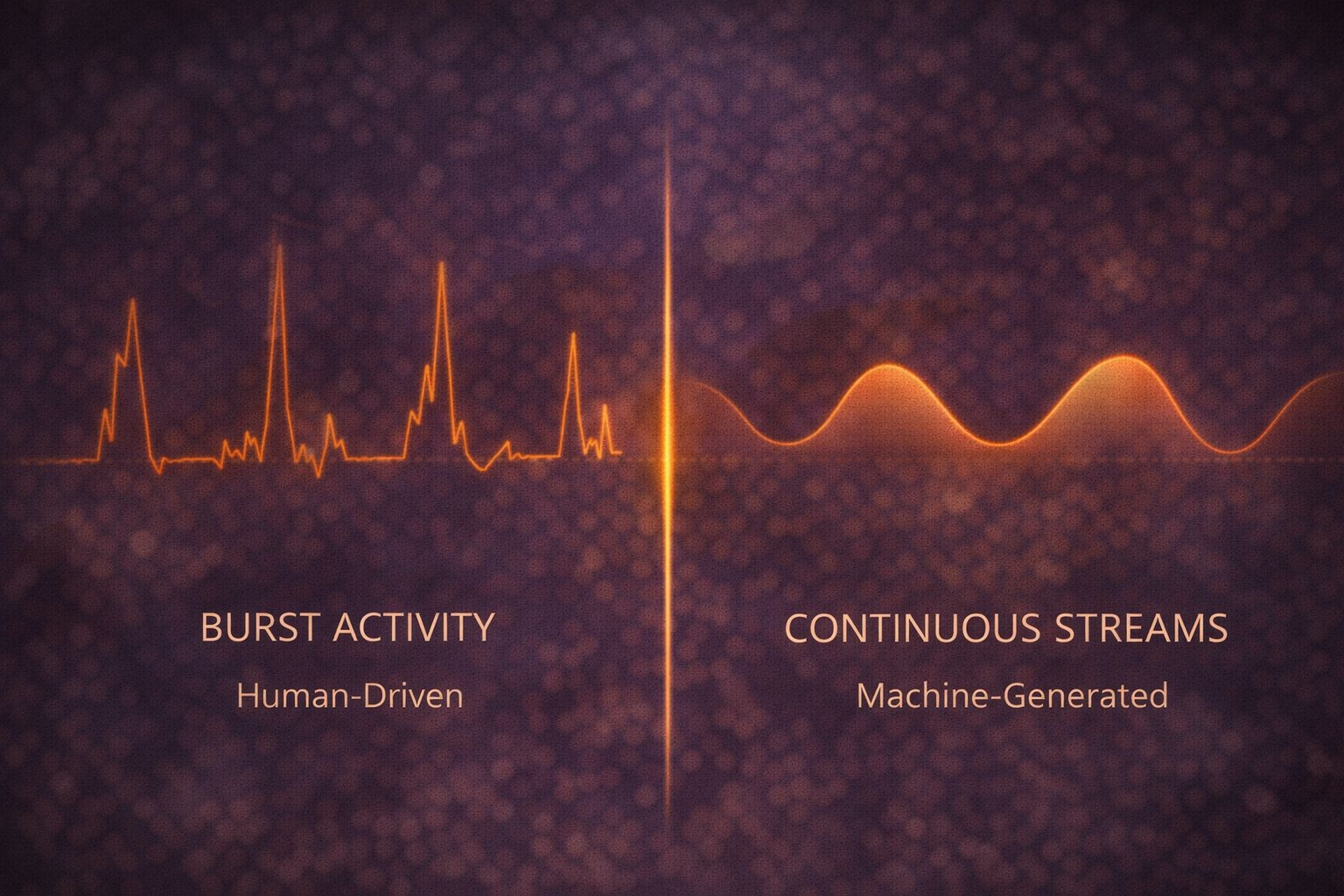

Most blockchains were designed around bursts of human activity. Users clicking, swapping, minting, voting. Even when bots are involved, they’re responding to price movements or incentives. The architecture evolved around episodic spikes.

AI systems operate differently. They generate continuously. They process streams of data. They produce outputs whether markets are volatile or calm. Their interaction model isn’t burst-driven; it’s persistent.

If infrastructure assumes sporadic, human-triggered activity, it starts to look incomplete in an AI-heavy environment.

That’s where the TPS obsession begins to feel narrow.

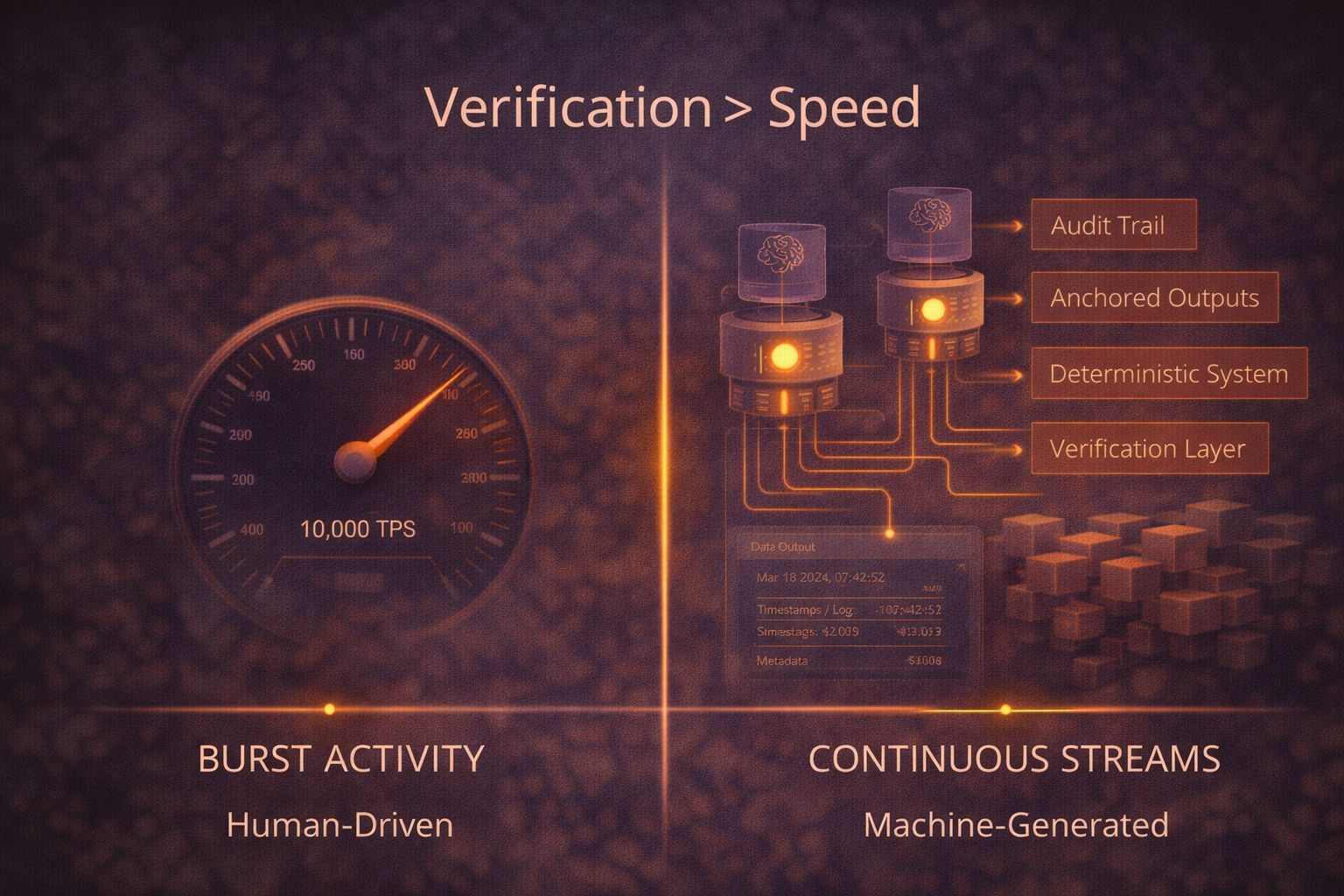

Throughput still matters, of course. No one wants congestion. But for AI systems, what matters more is whether the environment can reliably anchor outputs, log interactions, and provide verifiable records over time.

Imagine a system where AI is generating content tied to ownership, executing automated agreements, or influencing financial decisions. In that context, the ability to verify when and how something was produced becomes more important than shaving off a fraction of a second in confirmation time.
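To make "verify when and how something was produced" a bit more concrete, here is a minimal sketch of a hash-chained log of AI outputs. It is not how Vanar or any particular chain does it. The names `append_output`, `verify_log`, and `GENESIS`, and the record fields, are all hypothetical and chosen only for illustration. The idea is that each record commits to the one before it, so a later edit to any record becomes detectable.

```python
import hashlib
import json

# Hypothetical starting value for the chain (an assumption for this sketch).
GENESIS = "0" * 64


def record_hash(prev_hash: str, payload: dict) -> str:
    """Hash a record together with the hash of the record before it."""
    # Canonical JSON so the same payload always serializes the same way.
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_output(log: list, model_id: str, output: str, timestamp: int) -> dict:
    """Append one AI output to the log, linked to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    payload = {
        "model_id": model_id,
        # Store a digest of the output rather than the output itself.
        "output_digest": hashlib.sha256(output.encode("utf-8")).hexdigest(),
        "timestamp": timestamp,
    }
    entry = {
        "payload": payload,
        "prev_hash": prev_hash,
        "hash": record_hash(prev_hash, payload),
    }
    log.append(entry)
    return entry


def verify_log(log: list) -> bool:
    """Recompute every link; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if record_hash(prev_hash, entry["payload"]) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True
```

Nothing here depends on how fast entries are written. What matters is that the record can be checked later, which is the property this post argues AI systems need most.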

AI doesn’t care about bragging rights on a leaderboard.

It cares about operating without interruption and without ambiguity.

This is why the idea of AI-first infrastructure started to make more sense to me. Instead of building chains optimized primarily for speculative trading, the focus shifts to supporting machine-generated activity as a constant layer of interaction.

That requires different trade-offs.

You begin to focus more on sustained throughput under constant load and less on peak TPS. It becomes less about single-block finality races and more about long-term integrity of data. Less about mempool competition and more about deterministic behavior.
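The gap between peak and sustained throughput is easy to see with numbers. The sketch below assumes you have a list of per-second transaction counts. It defines "sustained" as the worst average over any window of consecutive seconds, which is my own working definition and not a standard benchmark. The function names are hypothetical too.

```python
def peak_tps(counts: list) -> int:
    """Highest single-second transaction count (the headline number)."""
    return max(counts)


def sustained_tps(counts: list, window: int) -> float:
    """Lowest average TPS over any `window` consecutive seconds."""
    if window <= 0 or window > len(counts):
        raise ValueError("window must be between 1 and len(counts)")
    # Sliding-window sum: add the newest second, drop the oldest.
    total = sum(counts[:window])
    worst = total
    for i in range(window, len(counts)):
        total += counts[i] - counts[i - window]
        worst = min(worst, total)
    return worst / window


# A bursty, trader-style trace next to a steady, machine-style trace.
bursty = [100, 100, 5000, 100, 100, 100]
steady = [900, 900, 900, 900, 900, 900]
```

With these made-up traces, the bursty chain wins on peak (5000 against 900), but over any two-second window it can drop to an average of 100, while the steady one holds 900. Which trace looks better depends entirely on which question you ask.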

It’s subtle, but it changes the design philosophy.

Another thing that becomes clear is how AI systems introduce new questions around accountability. If a model generates an output that triggers financial consequences, there needs to be a way to verify that interaction. If an automated agent executes logic on behalf of a user, there needs to be transparency around what happened.

High TPS doesn’t solve that.

Architecture does.

Vanar’s positioning around designing for AI rather than adding it later seems to revolve around this shift. The idea isn’t to win a throughput contest. It’s to anticipate a world where machine-generated activity becomes as normal as human-triggered transactions.

That world will stress infrastructure differently.

Instead of chaotic bursts of trading activity, you might see steady streams of AI-generated interactions. Instead of thousands of users competing for block space in a moment of volatility, you might have autonomous systems continuously logging outputs and verifying states.

That’s not as exciting to measure, but it might be more important to get right.

There’s also a cultural layer here.

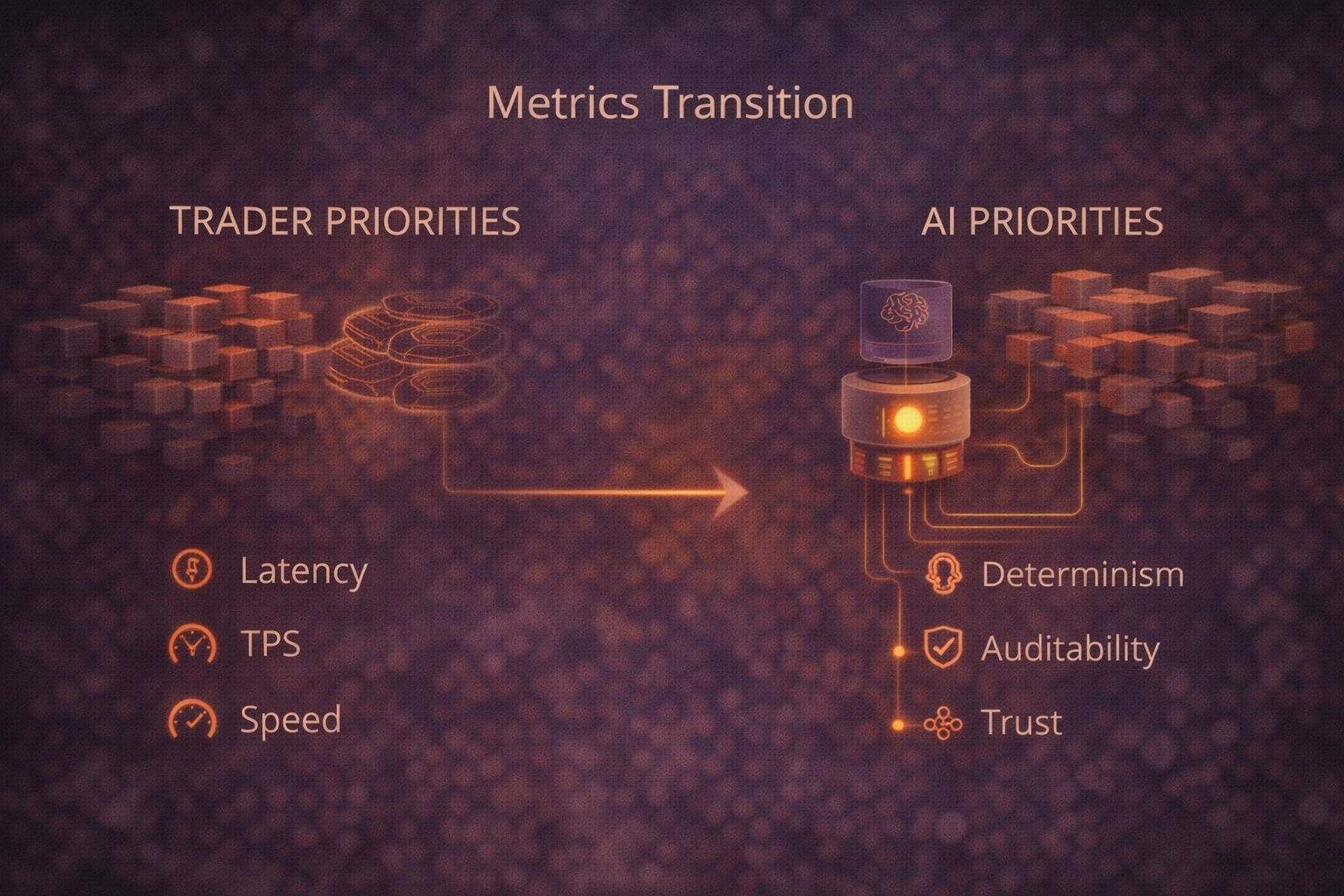

Crypto has been shaped heavily by traders. Metrics that matter to traders naturally dominate the conversation. Speed, liquidity, latency: those become shorthand for quality. It’s understandable.

But if AI becomes a meaningful participant in digital economies, the priorities shift.

Stability becomes more important than spectacle. Determinism becomes more important than peak performance. Auditability becomes more important than headline numbers.

That doesn’t mean TPS stops mattering. It just stops being the main character.

I’m still cautious about how quickly AI-first infrastructure will be needed at scale. It’s easy to project exponential growth and assume every system must adapt immediately. Adoption often moves slower than narratives suggest.

But I do think we’re at a point where optimizing purely for human traders feels incomplete.

AI doesn’t care if a chain can handle 100,000 transactions per second during a memecoin frenzy. It cares whether its outputs can be anchored reliably. Whether its interactions can be verified later. Whether the system behaves predictably over time.

Those aren’t flashy benchmarks. They’re structural requirements.

It took me a while to separate the needs of traders from the needs of machines.

Once I did, a lot of infrastructure debates started to look different.

TPS still matters.

But if AI becomes a constant participant in digital systems, it might not be the metric that defines which chains matter next.

And that’s a shift worth thinking about before it becomes obvious.