When I say my first execution on Vanar felt predictable, I’m not trying to romanticize it. I’m describing a moment where I didn’t have to brace myself.

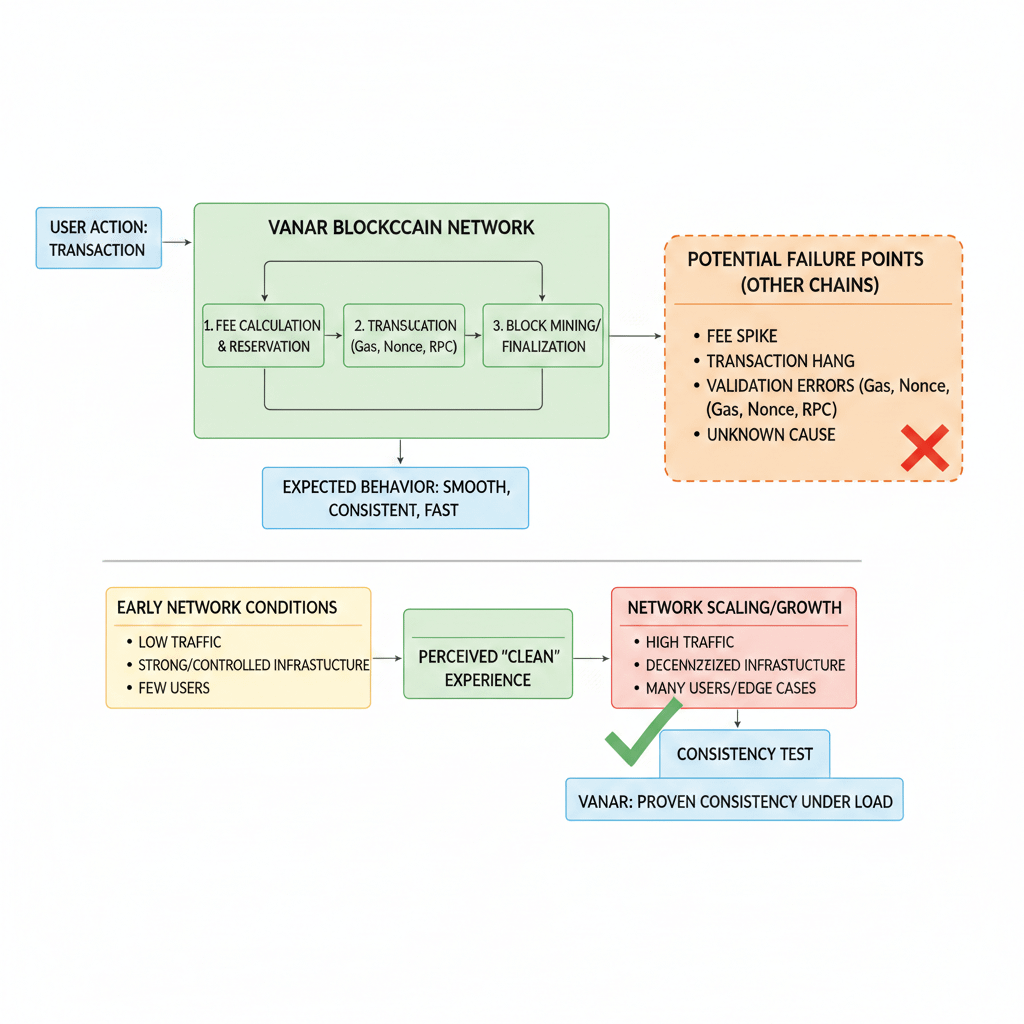

You know that feeling on a lot of chains: you click confirm and you’re already preparing for the usual mess. Maybe the fee jumps. Maybe the transaction hangs. Maybe it fails and you’re left guessing whether it was gas, the nonce, the RPC, or just “one of those days.” On Vanar, none of that happened. The transaction moved the way I expected it to. That sounds small, but for me it’s a big deal, because consistency is the first thing that breaks when a network is fragile.

At the same time, I’m careful not to give the chain credit too quickly. Smooth first use can be misleading. Sometimes things feel perfect simply because the network isn’t busy. Sometimes it’s because the infrastructure you’re routed through is strong, controlled, and well provisioned. Sometimes it’s because there aren’t enough users yet for the ugly edge cases to appear. In those early conditions, almost anything can feel clean.

So I try to pin down what “predictable” actually meant in my hands. Was it the fee being stable? Was it the confirmation time being steady? Was it the lack of random failures? Was it the fact that everything behaved like a normal EVM experience instead of a weird custom environment?
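One way to make those questions concrete is to log a handful of your own transactions and reduce them to a few numbers. The sketch below is a minimal illustration under assumed inputs, not a Vanar tool: `TxObservation` and `predictability_report` are hypothetical names, and the fields assume you have recorded the fee paid, the seconds until inclusion, and whether each transaction succeeded.

```python
from dataclasses import dataclass
from statistics import mean, median, pstdev, quantiles


@dataclass
class TxObservation:
    fee_gwei: float      # hypothetical field: effective fee paid, in gwei (assumed > 0)
    confirm_secs: float  # hypothetical field: seconds from submission to inclusion
    succeeded: bool      # False if the transaction reverted or was dropped


def predictability_report(obs: list[TxObservation]) -> dict[str, float]:
    """Summarize fee stability, confirmation tail, and failure rate.

    Needs at least two observations (statistics.quantiles requires it).
    """
    fees = [o.fee_gwei for o in obs]
    times = [o.confirm_secs for o in obs]

    # Coefficient of variation: fee spread relative to the mean fee.
    # 0.0 means every observed fee was identical.
    fee_cv = pstdev(fees) / mean(fees)

    # Tail ratio: 95th-percentile confirmation time over the median.
    # 1.0 means the slow case looks just like the typical case.
    p95 = quantiles(times, n=20, method="inclusive")[-1]
    tail_ratio = p95 / median(times)

    # Share of transactions that did not succeed.
    failure_rate = sum(not o.succeeded for o in obs) / len(obs)

    return {
        "fee_cv": fee_cv,
        "confirm_tail_ratio": tail_ratio,
        "failure_rate": failure_rate,
    }
```

A fee CV near zero, a tail ratio near 1, and a failure rate near zero describe the “didn’t have to brace myself” experience. The catch is the one raised above: a small sample taken on a quiet network will score well almost by default.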

Vanar being EVM-compatible and built as a fork of Geth matters more than most people admit. Not because “EVM” is cool, but because it reduces the number of surprises. The transaction lifecycle feels familiar. Wallet behavior feels familiar. The little things that cause stress (nonce weirdness, inconsistent gas estimation, odd RPC behavior) tend to show up less when the client foundation is mature.

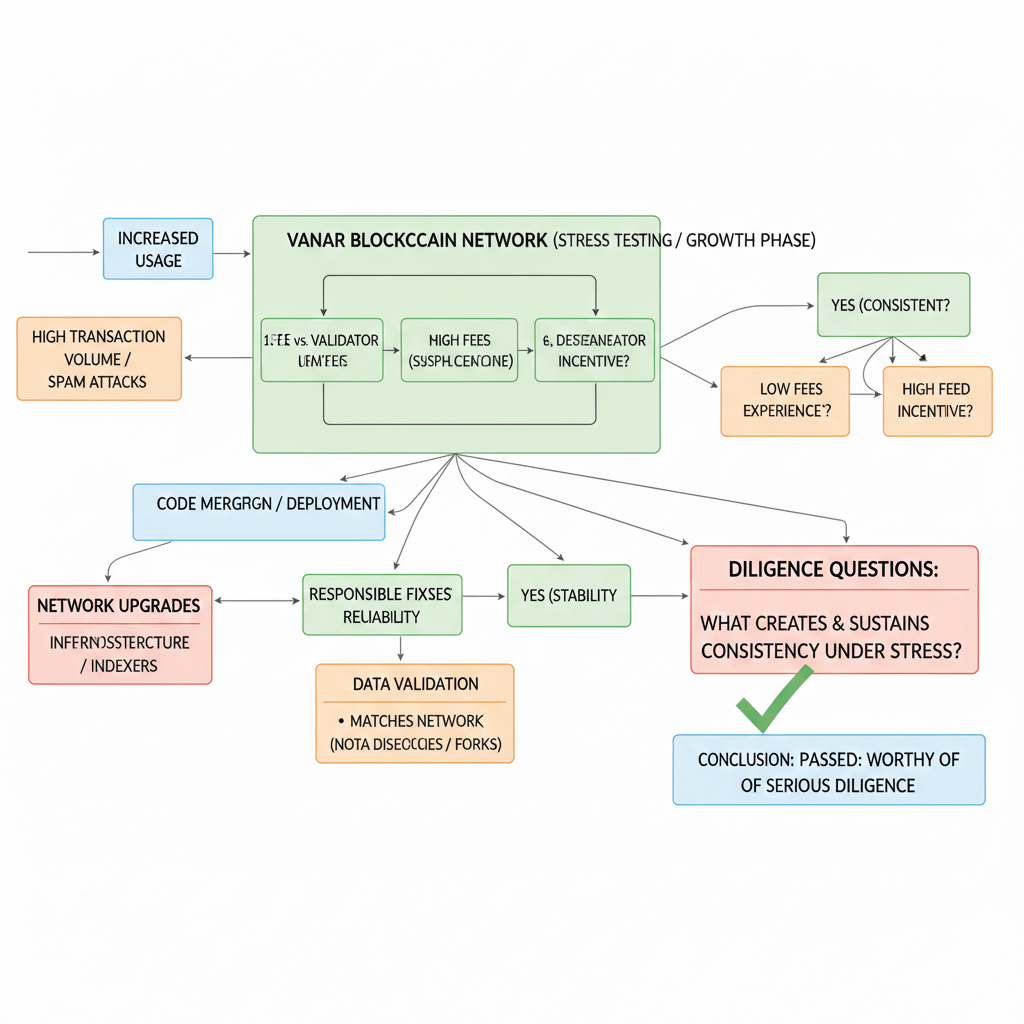

But that leads to a different kind of risk that I take seriously. A Geth fork is not a “set it and forget it” decision. It is a commitment to staying disciplined over time. Upstream Ethereum changes constantly: security fixes land, performance fixes land, behavior changes land. If Vanar diverges from upstream in meaningful ways, the team is constantly choosing between merging fast and risking regressions, or merging slowly and staying exposed to issues upstream has already fixed. That’s where predictability can quietly decay, not because the chain is bad, but because maintenance is hard.

That’s why I don’t let myself get carried away by one clean transaction. Instead, I treat it like a prompt to dig deeper. If I’m going to commit capital, I need to know whether the smoothness I felt is something the network can hold onto when it stops being quiet.

There’s also the fee angle. When a network feels predictable to a user, it’s often because the fee environment is stable enough that you don’t have to think. And I love that. But as an investor, I immediately ask the annoying follow-up: what is the system doing to keep it stable?

Low and steady fees can happen because there’s headroom and little congestion. They can happen because parameters are tuned aggressively. They can happen because block production and infrastructure are tightly controlled. They can even happen because the system is effectively paying part of the cost elsewhere, through token emissions, subsidies, or central coordination. None of those are automatically “bad,” but each one changes what I’m underwriting.

Then there’s the part of Vanar’s thesis that interests me more than “cheap EVM chain,” because that category is crowded. Vanar talks about deeper data handling and AI-adjacent layers like Neutron and Kayon. This is where I get both curious and strict, because these are the features that can either become real product leverage or become the reason predictability breaks later.

If Neutron is truly about compressing and restructuring data into compact on-chain forms, I need clarity on what that really means in practice. Is it storing something that can reconstruct the original data, or is it storing a representation that captures structure and meaning but not the full bytes? Or is it a hybrid where the chain stores a verifiable anchor and availability happens somewhere else? Those are three very different realities. They have different security models, different cost profiles, different limits, and different failure modes.
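To make the difference between those three realities concrete, here is a toy sketch in Python. It is not how Neutron works (the public description doesn’t settle that, which is the point); the function names are hypothetical, `zlib` stands in for whatever compression a real system might use, and a small JSON summary stands in for “a representation that captures structure but not the full bytes.”

```python
import hashlib
import json
import zlib


# Model 1 (hypothetical): compressed full bytes on-chain.
# Lossless: the original can be rebuilt from chain data alone.
def store_full(data: bytes) -> bytes:
    return zlib.compress(data, 9)


def reconstruct_full(onchain: bytes) -> bytes:
    return zlib.decompress(onchain)


# Model 2 (hypothetical): a structured, lossy representation.
# Captures some shape of the data, but the original bytes are gone.
def store_representation(data: bytes) -> bytes:
    lines = data.decode("utf-8").splitlines()
    summary = {
        "length": len(data),
        "line_count": len(lines),
        "first_line": lines[0] if lines else "",
    }
    return json.dumps(summary, sort_keys=True).encode("utf-8")


# Model 3 (hypothetical): anchor only. A 32-byte SHA-256 digest goes
# on-chain; the bytes themselves must be served from somewhere else.
def store_anchor(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def verify_offchain(anchor: bytes, fetched: bytes) -> bool:
    # The chain can prove integrity of whatever is fetched,
    # but it cannot guarantee the bytes are still available.
    return hashlib.sha256(fetched).digest() == anchor
```

Only the first model lets you get the original bytes back from the chain alone. The third keeps the on-chain cost fixed at 32 bytes but makes availability someone else’s problem, which is exactly where the security and failure-mode questions move.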

And this matters because data-heavy behavior is where networks start making uncomfortable choices. If developers begin pushing larger payload patterns, the chain has to deal with state growth, block propagation pressure, validator overhead, and spam risk. Predictability at the transaction layer can get sacrificed to keep the network alive. Or the network can stay “pleasant” for users while quietly centralizing. Either way, that’s where the real trade-offs live.

Kayon raises a different test in my mind. Anything described as a “reasoning layer” sounds useful, but usefulness alone doesn’t make it a moat. I want to know whether it changes how developers and enterprises operate in a sticky way, or whether it’s mostly a convenience wrapper around standard indexing and analytics. If it’s deep and trusted, great, but then I care about correctness guarantees, auditability, and conservative behavior under uncertainty. If it occasionally gives confident but wrong outputs, people won’t tolerate it for long. Trust in that kind of product doesn’t erode gradually; it breaks after one or two incidents.

All of this is why I keep coming back to that first “predictable” feeling. It can mean Vanar is building with a mindset I respect: fewer surprises, fewer random failure states, fewer moving parts that the user has to understand. That mindset can scale, if it’s real.

But I don’t assume it’s real until it survives real conditions.

I want to see what happens when usage ramps. I want to see what happens during upgrades. I want to see whether upstream fixes are merged responsibly. I want to see whether independent infra and indexers match what the network claims. I want to see how the system responds to spam, not in theory but in practice. And I want to see whether the “predictable” experience is still there when the chain has to choose between keeping fees low and keeping validators properly incentivized.
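The “do independent infra and indexers match what the network claims” check can start very simply: ask several sources for the hash of the same block and see who disagrees. The sketch below assumes you have already fetched those hashes (for example through the standard Ethereum JSON-RPC method `eth_getBlockByNumber`, which a Geth fork would normally expose); `find_disagreeing_sources` is a hypothetical helper, and the source names and shortened hash values in the usage line are made up.

```python
from collections import Counter
from typing import Optional


def find_disagreeing_sources(
    reported: dict[str, str],
) -> tuple[Optional[str], list[str]]:
    """Given {source_name: block_hash} for one block height, return the
    strict-majority hash and the sorted list of sources that differ from it."""
    if not reported:
        return None, []

    counts = Counter(reported.values())
    majority_hash, votes = counts.most_common(1)[0]

    # Without a strict majority there is no trustworthy reference,
    # so every source is flagged for a closer look.
    if votes * 2 <= len(reported):
        return None, sorted(reported)

    outliers = sorted(src for src, h in reported.items() if h != majority_hash)
    return majority_hash, outliers


# Usage with made-up sources and placeholder hashes:
# find_disagreeing_sources({"official_rpc": "0xaa", "indexer_a": "0xaa", "indexer_b": "0xbb"})
# -> ("0xaa", ["indexer_b"])
```

Majority voting is a deliberately crude choice here: it flags divergence cheaply, but if most sources share the same upstream infrastructure, agreement says less than it appears to.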

So my takeaway is simple: that first execution didn’t convince me to buy. It convinced me the project is worth serious diligence. It moved my question from “does it function?” to “what exactly is creating this consistency, and will it still be there when things get messy?”

That’s the point where an investor stops watching the surface and starts looking for the machinery underneath.