I’ll be honest: whenever a new Layer 1 shows up claiming it’s “fast,” my brain treats it the same way it treats a restaurant bragging about being “farm-to-table.” Sounds nice. Usually means nothing. Because speed isn’t a slogan. Speed is a system that has to survive bad internet routes, random packet loss, overloaded RPCs, validators spread across the planet, and the messy reality of what happens when a chain is under pressure.

That’s why Fogo (FOGO) caught my attention. Not because it says it’s fast, but because it’s trying to be fast in the specific way that actually matters for trading and DeFi: not just high throughput, but predictable confirmation. In markets, “average speed” is meaningless. What matters is whether the chain stays quick and consistent when everyone hits it at once.

Fogo’s own materials make a point that’s rare in crypto marketing: latency isn’t a small detail you polish later. It’s the base layer. Blockchains don’t run in a perfect lab. They run on a planet. And the planet has distance, traffic, weird network days, and “tail latency,” the slowest slice of requests, which dominates how the system feels.
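To make “tail latency” concrete, here is a minimal sketch comparing the average with the median and the 99th percentile. The latency numbers are invented for illustration and are not Fogo measurements; the percentile uses the standard nearest-rank definition.

```python
import math
import statistics

def percentile(samples, p):
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]

# Illustrative numbers only: 980 confirmations at 40 ms, 20 stuck behind a bad route at 900 ms.
latencies_ms = [40] * 980 + [900] * 20

print(statistics.mean(latencies_ms))   # 57.2: looks fine on a dashboard
print(percentile(latencies_ms, 50))    # 40
print(percentile(latencies_ms, 99))    # 900: the slowest 1% of confirmations take at least this long
```

The average barely moves, while the p99 jumps by more than an order of magnitude. That gap is what a trader actually experiences during a bad minute.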

That difference in framing matters. Fogo isn’t really selling “more TPS” as the main story. It’s selling the idea that on-chain trading should feel closer to real-time infrastructure, where execution is not only fast but reliably fast.

On the execution side, Fogo is basically borrowing a proven approach rather than reinventing everything. It stays compatible with Solana’s execution environment (the Solana Virtual Machine, or SVM), which is a practical move. Builders don’t love rewriting their code from scratch just to chase a new “faster” chain, and familiar tools plus compatibility reduce that friction. So the pitch isn’t “new VM, new world.” It’s “same vibe, tighter performance.”

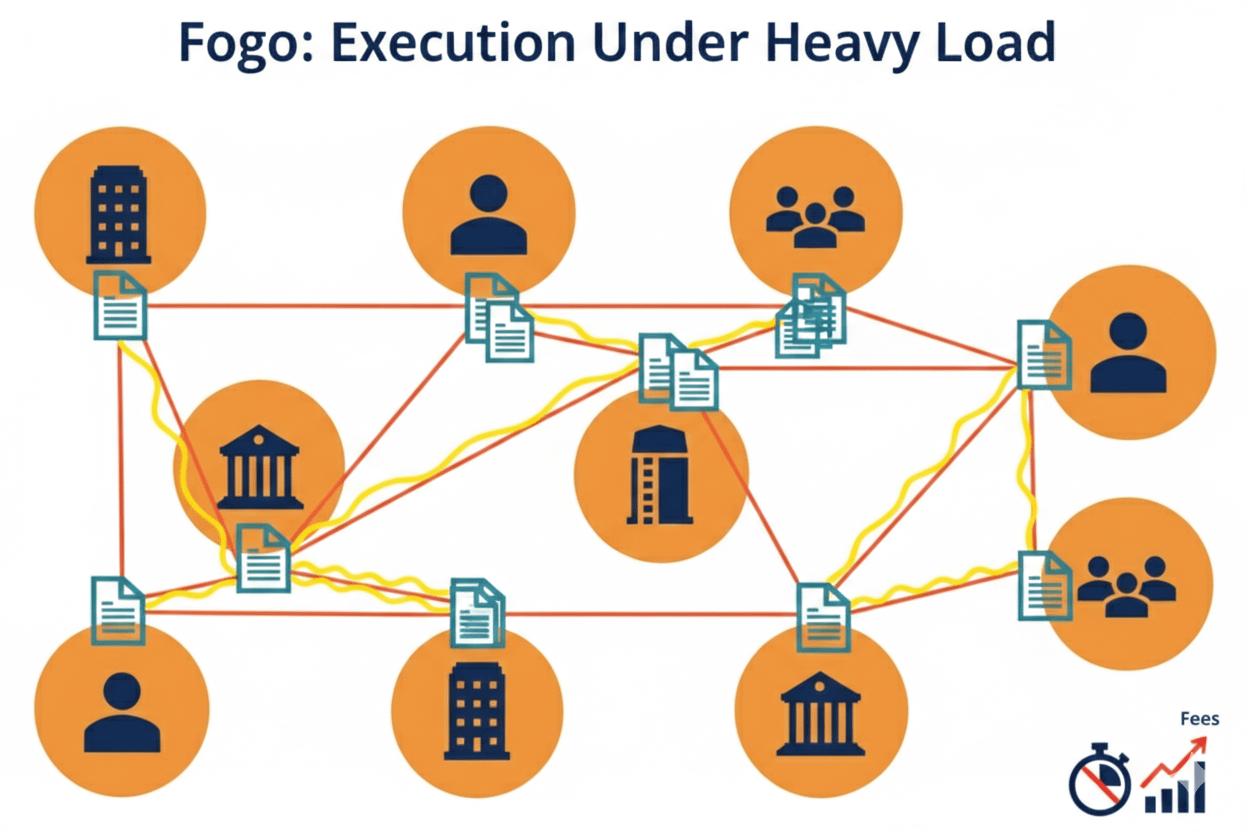

But here’s the thing: producing blocks quickly is the easy part to claim. The hard part is proving the chain stays fair and stable when blocks are packed, users are spamming transactions, network conditions are ugly, and infrastructure is strained. Fogo talks about very tight block times (around 40 ms) in test setups. Fine. The real question is whether those numbers still mean anything once real users show up with real chaos.

Where Fogo becomes genuinely distinctive is how it tries to beat physics. Instead of having the whole validator set spread out and participating equally all the time, it groups validators into “zones.” The idea is that validators inside a zone can be extremely close to each other, even in the same datacenter, which makes message round-trips faster. Then only one zone runs consensus in a given epoch, and the active zone rotates over time.
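Here is a toy model of “only one zone runs consensus per epoch, and the active zone rotates.” Everything in it is hypothetical: the zone names, the validators, and especially the simple round-robin rule, which is an assumption for illustration; Fogo’s real zone-selection mechanism may work differently.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Validator:
    name: str
    zone: str
    stake: int

def active_zone(zones, epoch):
    """Hypothetical round-robin rule: each epoch hands consensus to the next zone in a fixed order."""
    return zones[epoch % len(zones)]

def voting_set(validators, zones, epoch):
    """Only validators located in the active zone vote this epoch; the rest just follow along."""
    zone = active_zone(zones, epoch)
    return [v.name for v in validators if v.zone == zone]

def quorum_stake(validators, zones, epoch):
    """Stake needed for at least a two-thirds supermajority, counted over the active zone only."""
    zone = active_zone(zones, epoch)
    active = sum(v.stake for v in validators if v.zone == zone)
    return (2 * active + 2) // 3  # integer ceiling of 2/3 * active

# Made-up zones and validators for illustration.
zones = ["zone-a", "zone-b", "zone-c"]
validators = [
    Validator("v1", "zone-a", 100),
    Validator("v2", "zone-a", 200),
    Validator("v3", "zone-b", 150),
    Validator("v4", "zone-c", 50),
]

for epoch in range(4):
    print(epoch, active_zone(zones, epoch), voting_set(validators, zones, epoch),
          quorum_stake(validators, zones, epoch))
```

Note how the stake that counts toward a quorum changes every time the active zone changes. That detail matters later, when a transition goes wrong.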

Conceptually, it’s clever. If the validators that matter right now are physically close, you can squeeze latency down hard. It’s almost the opposite of what many people instinctively imagine decentralization looks like. The usual mental picture is “validators everywhere, all the time.” Fogo’s answer is more like “tight cluster now, rotate later.”
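Why co-location helps is mostly arithmetic. Light in optical fiber travels at roughly two-thirds the speed of light in a vacuum, about 200 km per millisecond, so distance alone sets a floor on every round trip. The sketch below uses illustrative distances, not measurements of any Fogo deployment, and ignores routing detours, switching, and queuing, all of which only make things slower.

```python
# Light in optical fiber travels at roughly two-thirds of c: about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0

def min_rtt_ms(distance_km):
    """Physical floor on one round trip over fiber; real routes are longer and add queuing."""
    return 2 * distance_km / FIBER_KM_PER_MS

# Illustrative distances only.
print(min_rtt_ms(5_500))  # validators on different continents: 55.0 ms
print(min_rtt_ms(0.1))    # two racks in the same datacenter: about 0.001 ms
```

At that floor, a single intercontinental round trip already takes longer than the roughly 40 ms block times Fogo cites, which is why shrinking the physical distance between the validators that matter right now is such a strong lever.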

But that comes with real trade-offs, and they’re not just theoretical. You get lower latency by tightening physical distance. You also create more operational complexity because the moment you switch zones becomes a critical event. And you naturally put pressure on validators to live in high-performance datacenters that can meet strict network requirements. None of that is automatically evil, but it does change what kind of network you’re building. If you push too far, you risk drifting into something that feels like “datacenter finance with tokens.”

And here’s where the story gets interesting in a way most projects avoid: Fogo has already had to deal with the dark side of going fast.

The most credibility-building thing in everything I read wasn’t a benchmark chart. It was an outage post-mortem. Fogo wrote up a testnet halt from August 13, 2025, and it wasn’t a cute “oops” incident. It was a long outage tied to networking instability during a zone transition, with further halts during later transitions.

The failure mode is exactly the kind of thing that makes performance designs scary. During a zone transition, if connectivity between zones degrades, blocks can arrive late across the boundary. Validators in the new zone may start building as if certain slots were skipped. Then late blocks show up and create competing histories. And because stake weight changes during the transition, the usual ways of resolving forks can get stuck.
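The failure mode can be sketched as a toy model. This is a deliberate simplification under stated assumptions, not Fogo’s actual fork-choice logic: block names, arrival times, the 50/50 stake split, and the two-thirds threshold rule below are all hypothetical, and the real post-mortem mechanics are more involved.

```python
SUPERMAJORITY = 2 / 3

def parent_for_new_leader(boundary_block, boundary_parent, arrived_ms, deadline_ms):
    """New zone's first leader: build on the boundary block only if it arrived before the deadline."""
    if arrived_ms <= deadline_ms:
        return boundary_block
    return boundary_parent  # boundary slot treated as skipped

def tips(parents):
    """Blocks that nothing builds on; more than one tip means competing histories."""
    referenced = set(parents.values())
    return sorted(block for block in parents if block not in referenced)

def confirmed_fork(fork_stake, total_stake):
    """Return the fork backed by a supermajority of stake, or None if neither side can win."""
    for fork, stake in fork_stake.items():
        if stake >= SUPERMAJORITY * total_stake:
            return fork
    return None

# Toy scenario: the old zone's last block "b10" crosses a degraded link and arrives late.
chain = {"b9": None, "b10": "b9"}  # the chain as seen once b10 finally shows up
chain["b11"] = parent_for_new_leader("b10", "b9", arrived_ms=120, deadline_ms=40)
print(tips(chain))  # ['b10', 'b11']: two histories both built on b9

# Stake is split across the boundary (hypothetical 50/50), so neither fork reaches 2/3.
print(confirmed_fork({"b10": 50, "b11": 50}, total_stake=100))  # None
```

Each step is locally reasonable: the new leader didn’t see the block in time, so it moved on. The trouble is that the pieces combine into a fork that no side has enough stake to settle.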

In slower systems, you sometimes have a little slack. In ultra-low-latency systems, you don’t. When you’re pushing the limits, the rare weird moments stop being “edge cases” and start becoming protocol-level events. The faster you go, the more your weakest links become everyone’s problem.
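Here is a small sketch of why shrinking the time budget erases slack. It uses one made-up latency distribution and measures how many messages miss the deadline as the deadline tightens. It then estimates how often at least one of several messages is late, assuming their delays are independent, which real networks often violate (bad network days tend to hit many links at once).

```python
def miss_rate(latencies_ms, deadline_ms):
    """Fraction of messages that arrive after the slot deadline."""
    late = sum(1 for latency in latencies_ms if latency > deadline_ms)
    return late / len(latencies_ms)

def prob_any_late(per_message_miss, fan_in):
    """Chance that at least one of fan_in messages is late, assuming independent delays."""
    return 1 - (1 - per_message_miss) ** fan_in

# Same made-up network in every case: mostly quick, with a small slow tail.
latencies_ms = [5] * 90 + [30] * 8 + [120] * 2

for deadline in (400, 40, 20):
    print(deadline, miss_rate(latencies_ms, deadline))  # 0.0, then 0.02, then 0.1

# If a step needs 10 such messages, a 2% per-message miss rate becomes about 18%.
print(prob_any_late(0.02, 10))
```

Nothing about the network changed between runs. Only the budget did, and the rare slow messages went from irrelevant to routine.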

To Fogo’s credit, putting out a detailed incident report is exactly what a serious team should do. It’s the difference between “trust us” and “here’s what broke, why it broke, and what we learned.” But it also proves something important: a latency advantage is often inseparable from infrastructure dependency. If your design is built around tight coordination, your network will feel every wobble.

Fogo makes another strong choice that’s common in speed-obsessed systems: it standardizes on one main validator client. The plan centers on Firedancer, starting with a hybrid approach and moving toward fuller adoption. This is a practical way to squeeze out performance, because supporting many clients often means the network can only go as fast as its slowest implementation.
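The “slowest implementation” argument fits in a few lines. This sketch assumes, for simplicity, that every transaction costs a fixed amount of execution time per client and that execution is the only bottleneck. The client names and costs are hypothetical, not measurements of Firedancer or any other client; the 40,000 µs budget mirrors the ~40 ms block time Fogo cites.

```python
def max_txs_per_slot(slot_budget_us, per_tx_cost_us):
    """With several clients, a slot can only hold what the slowest one executes in time."""
    return min(slot_budget_us // cost for cost in per_tx_cost_us.values())

# Hypothetical per-transaction costs in microseconds.
budget_us = 40_000
print(max_txs_per_slot(budget_us, {"client_a": 10, "client_b": 25}))  # 1600
print(max_txs_per_slot(budget_us, {"client_a": 10}))                  # 4000
```

Dropping the slower client raises the ceiling, which is the performance case for a single client. It also means there is no second implementation left to keep the network running if that one client has a bad day.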

But there’s a tension. One client can mean smoother performance. It can also mean monoculture risk: if a bug lands in the canonical client, everyone feels it. The best mitigation is serious security work and outside scrutiny. Firedancer has had third-party audits published, which doesn’t eliminate risk, but it does move the conversation toward something measurable rather than vibes.

So what’s the real takeaway?

If you ignore the branding and focus on the design philosophy, Fogo is basically saying: stop pretending latency is a minor detail. Treat it like the main constraint. Make on-chain execution feel closer to real trading infrastructure. Accept that physics is part of the protocol, and shape validator topology and software choices around predictable execution.

That’s a coherent thesis, and it targets a real pain point. On many general-purpose chains, latency spikes and congestion turn trading into a gamble. You don’t just lose speed; you lose confidence. And confidence is what traders pay for.

But the question Fogo still has to answer is the only question that matters: can it keep ultra-low latency while staying resilient when the world stops cooperating?

Because the network won’t be tested by glossy demos. It’ll be tested by the boring disasters: zone transitions that go sideways, cloud providers glitching, sudden surges of activity, broken routes, bad days on the internet, and unexpected edge cases nobody simulated.

If Fogo keeps doing the unglamorous stuff (publishing real incident reports, proving uptime over time, rolling out carefully, and keeping the validator stack under serious external scrutiny), it could end up as something valuable: a specialized execution layer built for latency-sensitive DeFi, not another chain trying to be everything for everyone.

I’m not crowning it. And I’m definitely not assuming that “40ms blocks” automatically means better outcomes. But I do respect the honesty of the direction: optimize for execution, admit the physics, and treat infrastructure as part of the protocol. If that mindset survives real users and real stress, Fogo’s speed story might grow into something much rarer in crypto than hype: reliability you can actually feel.