Let me start with a judgment I am increasingly certain of:

The real incremental users in the next wave of Web3 may not be people like us, but various "automated workers": intelligent agents, scripts, automated processes, and bots running inside enterprise systems. You may not like this statement, but reality is pushing in this direction. Transactions, reconciliation, risk control, content distribution, marketing spend, supply chain settlement: these tasks are inherently suited to automation.

The question then arises: if "automated workers" are to work on the blockchain, today's chains are quite unfriendly to them. Human users can put up with some friction: learning the tools, clicking confirm, swapping for gas tokens. But for intelligent agents, each of these is friction, sometimes fatal: they are not good at clicking around in a UI, nor should they spend much effort on "how to pay fees, how to maintain context, how to explain why this was done."

This is also why I find Vanar Chain interesting: it doesn't treat AI as an ornament like many projects do, but addresses the hard problem of 'where agents will get stuck on chain.' What it aims to do is not an overly flashy narrative but to dismantle those friction points that are most prone to failure in advance.

1) The most common failure points in 'AI on chain': can't remember, can't articulate, can't stabilize.

You've seen many so-called 'AI + blockchain' demos: they look cool, can converse, can give suggestions, can generate strategies, and can even initiate transactions. But once you really make it work for two weeks, the problems will pile up:

Can't remember: Context breaks. What was said today is forgotten tomorrow, and permissions, preferences, and policy constraints must all be restated.

Can't articulate: It gives you a result, but when you ask 'why,' it either fumbles or writes a plausible-sounding explanation that cannot be verified.

Can't stabilize: Automation can execute, but without guardrails it is prone to overstepping, violating risk controls, or escalating a small problem into an incident.

It is not that most chains lack the motivation; their underlying design simply was not built for this goal. Bolting modules on later is like continuously adding equipment to an old house: usable, but the more you add, the messier it becomes, and the higher the risk.

@Vanarchain's approach is more like treating the agent as the primary user from the very beginning. It therefore emphasizes that the chain itself must support the long-term working model of agents, rather than just one-off transactions.

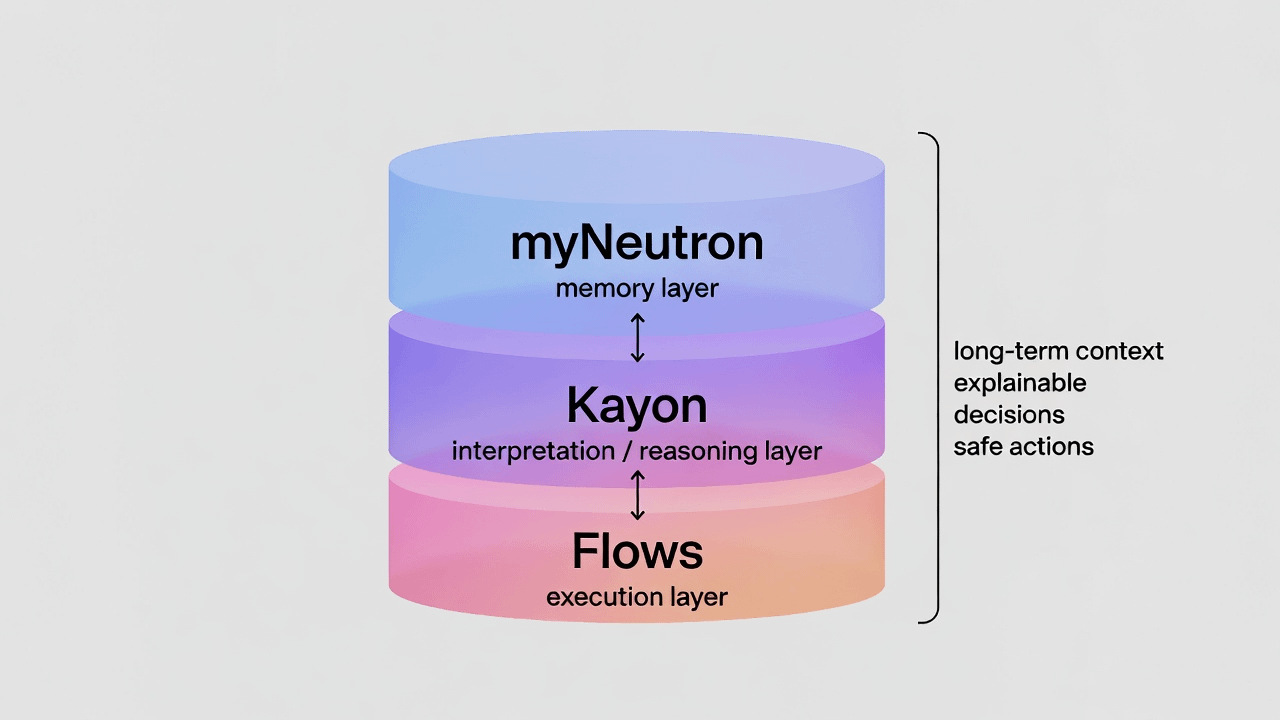

2) Why I place more importance on myNeutron: it addresses 'long-term use.'

I don't want to lean on vague buzzwords, so let's speak plainly:

For the agent to be useful, it must continuously work; to work continuously, it must have long-term context; to have long-term context, it needs 'memory' that is not temporary cache, but something that can be solidified, reused, and ideally verifiable.

The significance of the myNeutron line lies here: it attempts to prove that 'semantic memory' and 'persistent context' can live close to the infrastructure layer, rather than each app building its own incompatible database.

How should you picture it?

Like a combination of a 'work log + business knowledge base.' Each time the agent works, it doesn't start from scratch but can continue with history; and this history isn't just for its own use but can also become part of the system, reused by other processes.
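To make the 'work log + knowledge base' picture concrete, here is a minimal Python sketch of an append-only memory log in which every entry commits to the hash of the one before it. This is not myNeutron's API or design; `MemoryLog`, `append`, `recall`, and `verify` are hypothetical names chosen for illustration, and the only assumption is that 'verifiable' means any later tampering with history can be detected.

```python
import hashlib
import json

# Hypothetical starting value for the chain; not taken from any real system.
GENESIS = "0" * 64


def _digest(prev_hash: str, payload: dict) -> str:
    """Hash the previous entry's hash together with this entry's payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


class MemoryLog:
    """Append-only agent memory; each entry commits to the one before it."""

    def __init__(self):
        self.entries = []

    def append(self, payload: dict) -> str:
        """Record a new fact and return its hash."""
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        entry_hash = _digest(prev, payload)
        self.entries.append({"payload": payload, "prev": prev, "hash": entry_hash})
        return entry_hash

    def recall(self, key: str):
        """Return the most recent value recorded for key, or None."""
        for entry in reversed(self.entries):
            if key in entry["payload"]:
                return entry["payload"][key]
        return None

    def verify(self) -> bool:
        """Recompute every hash; False if any entry was altered or reordered."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["payload"]):
                return False
            prev = entry["hash"]
        return True


log = MemoryLog()
log.append({"daily_limit": 500})
log.append({"preferred_asset": "USDC"})
print(log.recall("daily_limit"), log.verify())
```

The agent picks up yesterday's constraints with `recall` instead of being told again, and any other process can run `verify` on the same history before trusting it.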

Many people will say, 'I can also do it off-chain.' Of course, you can.

But you know the issues with doing it off-chain: credibility, alignment, permissions, auditing, and data consistency. In the end it often becomes 'another centralized system,' with the chain reduced to a settlement button. That is fine for certain scenarios, but if you genuinely want the agent's behavior to be traceable infrastructure, a more foundational direction like myNeutron is unavoidable.

3) Kayon makes me feel it is 'seriously doing the work': in enterprise scenarios, explanation is not decoration, but the ticket of admission.

When you put the agent into transaction or asset-related processes, the most sensitive issue is not 'can it calculate,' but 'how do you explain it when something goes wrong.' Especially for enterprises: audits, risk controls, and legal departments won't let it go just because you say 'the model is smart.'

Thus, 'reasoning + interpretability' is not just a bonus in my view, but a threshold for whether it can transition from demo to production environment.

What Kayon needs to prove is that reasoning and explanation can be as native and transparent as possible: not a paragraph of explanation written after the fact, but a decision-making chain that can be brought to a more verifiable level.

The reason I like this direction is simple: it doesn't chase 'effects' like most AI narratives, but instead fills in the 'responsibility chain.' Only when the responsibility chain is complete can enterprises grant permissions, and only then can agents truly enter high-value scenarios.

4) Flows is the most easily overlooked yet most crucial link: the agent can execute, but must 'execute in a controllable manner.'

Many people still understand automation as 'can it automate tasks.'

But the real challenge is 'can it automate tasks without causing chaos.'

Once the agent can trigger on-chain actions, the risk is no longer linear. One misplaced prompt or one misunderstood rule can turn an asset action into an incident. Hence, enterprises will focus on these issues:

How should permissions be layered?

Can whitelists, limits, and conditional triggers be set?

Can the execution process be audited and reviewed?

Can losses be mitigated in a timely manner when problems occur?

@Vanarchain's Flows line aims to solve this by putting the agent's 'actions' within guardrails: allowing it to execute, but in a safe, controllable, and traceable manner.

You can understand it as taking the agent from being able to 'speak' to being able to 'do,' while putting a safety helmet on it at the same time.
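The four questions above (layered permissions, whitelists and limits, auditability, timely loss mitigation) can be sketched as a small policy check that sits between the agent and any on-chain action. This is only an illustration under stated assumptions, not how Flows is implemented: `Policy`, `Guardrail`, and every field name are hypothetical, and the daily rollover of `spent_today` is left out for brevity.

```python
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass
class Policy:
    """Hypothetical limits an enterprise might attach to one agent."""
    allowed_recipients: set
    per_tx_limit: Decimal
    daily_limit: Decimal
    paused: bool = False  # kill switch for stopping losses quickly


@dataclass
class Guardrail:
    policy: Policy
    spent_today: Decimal = Decimal("0")
    audit_log: list = field(default_factory=list)

    def check(self, recipient: str, amount: Decimal) -> tuple[bool, str]:
        """Approve or reject a proposed payment and record the decision."""
        if self.policy.paused:
            verdict = (False, "paused")
        elif recipient not in self.policy.allowed_recipients:
            verdict = (False, "recipient not whitelisted")
        elif amount > self.policy.per_tx_limit:
            verdict = (False, "over per-transaction limit")
        elif self.spent_today + amount > self.policy.daily_limit:
            verdict = (False, "over daily limit")
        else:
            self.spent_today += amount
            verdict = (True, "ok")
        # Every decision, approved or not, is kept for later review.
        self.audit_log.append(
            {"recipient": recipient, "amount": str(amount), "verdict": verdict[1]}
        )
        return verdict


guard = Guardrail(Policy({"0xSupplier"}, Decimal("100"), Decimal("150")))
print(guard.check("0xSupplier", Decimal("80")))
print(guard.check("0xUnknown", Decimal("1")))
```

The agent never signs anything directly; it proposes an action, and only an approved verdict would be passed on to whatever component actually submits the transaction.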

In reality, systems that have been successfully implemented often win in areas that 'look unsexy': they rely not on flashy techniques, but on reducing incidents, being controllable, and being stable.

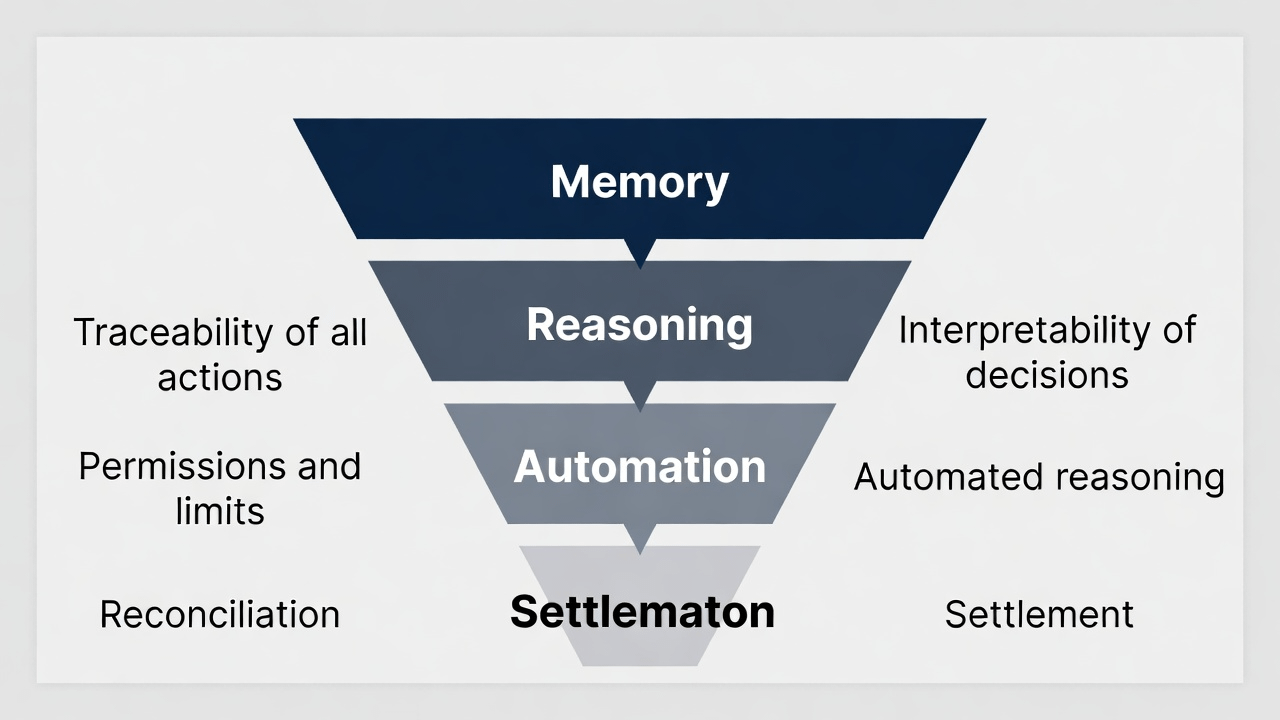

5) Why I believe 'payments/settlements' are the ultimate divide for AI infrastructure.

Here I want to say something quite straightforward:

Agent systems without settlement capabilities ultimately end up like toys.

Because the agent is not here to chat with you; it is here to 'do the work for you.' Doing the work necessarily involves money: payments, settlements, reconciliations, profit sharing, compliance.

Moreover, the agent will not use a wallet UI like a human. It won't open an app to confirm; what it needs are standardized, orchestrated settlement rails.

So @Vanarchain's view of payments as a core requirement for AI-readiness seems very reasonable to me: it forces the project to build 'usable infrastructure' rather than just a bunch of demos. Put simply: if you want real economic activity, you must handle settlement; otherwise, you will always remain at the 'looks strong' stage.

6) Cross-chain starts with Base: don't underestimate this decision; it is really about solving the 'distribution' problem.

There is another point that I think is very realistic: if AI-first infrastructure stays self-contained within one network, its value will be inherently compressed.

The agent ecosystem will not happen only on a single chain. Users, assets, applications, and toolchains are distributed across multiple chains. If you want real invocation volume, you must address the 'where to use it' issue.

The significance of cross-chain landing on Base is more like 'putting capabilities where there are more people.' It's not just a technical route; it's a distribution strategy: allowing more developers, more applications, and more users to encounter this set of capabilities. For $VANRY, this directly affects the potential surface area of usage: the more places it can be used, the more easily real demand can grow.

7) So I prefer to consider $VANRY as 'readiness' rather than 'story.'

Finally, back to $VANRY.

I don't like token analysis that leans too heavily on narratives, because market sentiment comes and goes quickly. What's more worth watching is whether this system dismantles the 'hard problems of getting agents on chain' one by one, and whether it keeps being invoked in real usage.

If Vanar's approach is viable, its value accumulation will not rely solely on hype, but will gradually build up based on usage frequency, invocation volume, and settlement activities.

Ultimately, infrastructure that can truly survive cycles is often quite 'boring': it does not rely on new stories every week, but rather on people using it every day.