Most people still see oracles as something very basic. A smart contract needs a price, the oracle gives a number, and the system moves on. But in real markets, data never behaves that nicely. Prices don’t match everywhere. Some sources update late. Some react too fast. And real-world information often changes after it’s already been used.

Watching APRO lately, I get the sense that the project understands this problem. It doesn’t treat data as clean or final. It treats data as usually messy, and assumes someone has to deal with that mess before money moves.

On the surface, APRO’s updates look normal. New chains. New integrations. Oracle services going live. But if you slow down and look at the direction, APRO doesn’t seem to be trying to be “the fastest oracle.” It’s trying to become something more boring and more useful: a base layer that other apps rely on when data actually matters.

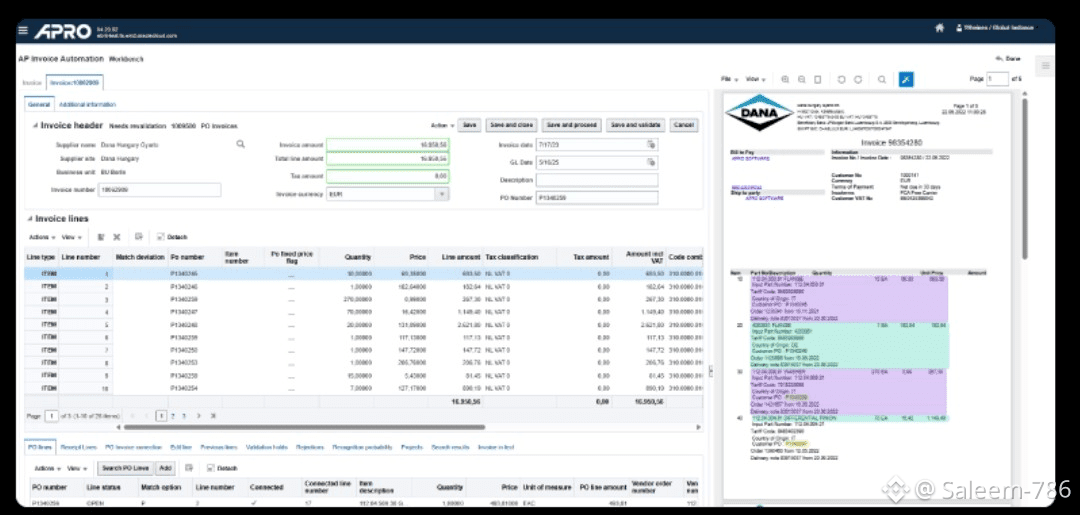

One important move was launching Oracle-as-a-Service on BNB Chain. That sounds technical, but the idea is simple. Developers don’t want to build trust systems again and again. They want something ready. Something that already assumes data can be wrong sometimes. APRO offering this as a service means apps can plug into a shared system instead of building fragile setups on their own.

Another thing people don’t talk about enough is that APRO isn’t focused only on prices anymore. Prices are easy compared to real-world data. Events, reports, documents, outcomes: these things get delayed, corrected, even disputed. If smart contracts are going to touch this kind of information, the oracle layer has to slow things down a bit and ask questions first. APRO’s focus on verification feels like an attempt to do that.

APRO’s growth also isn’t loud. It doesn’t show up clearly in price charts. It shows up in usage. In how often data is requested. In how many chains it’s active on. In being added alongside other oracles instead of replacing them. Usually, when teams add an oracle as an extra source rather than a replacement, it means they care about safety and redundancy, not marketing.

There’s also the backing side. APRO has support from institutions that deal with data problems in traditional finance. That doesn’t mean success is guaranteed. But it does mean the project isn’t being treated like a toy. These kinds of backers usually care about whether systems hold up when conditions aren’t perfect.

What really stands out to me is how APRO combines a few things that are usually separate. It works across many chains. It uses AI not just to move data faster, but to help verify information that isn’t clean. And it offers all of this in a way that developers can actually use without building everything from scratch. That combination quietly shapes its identity.

If this direction continues, APRO may stop being seen as just a data provider and start being treated as a trust layer. And a trust layer is different. When something goes wrong, people don’t just ask “what was the result?” They ask “why was this data trusted?”

Most oracle discussions still focus on speed. Who updates first. Who’s fastest. APRO seems to be betting on something else: who can handle more types of data in a way that’s defensible and easy to integrate.

So the real APRO updates probably won’t be flashy. They won’t be airdrops or hype posts. They’ll show up quietly, in how many apps depend on it, how data is verified, and how information moves across chains without breaking trust.

That kind of progress is easy to ignore.

Until you really need it.