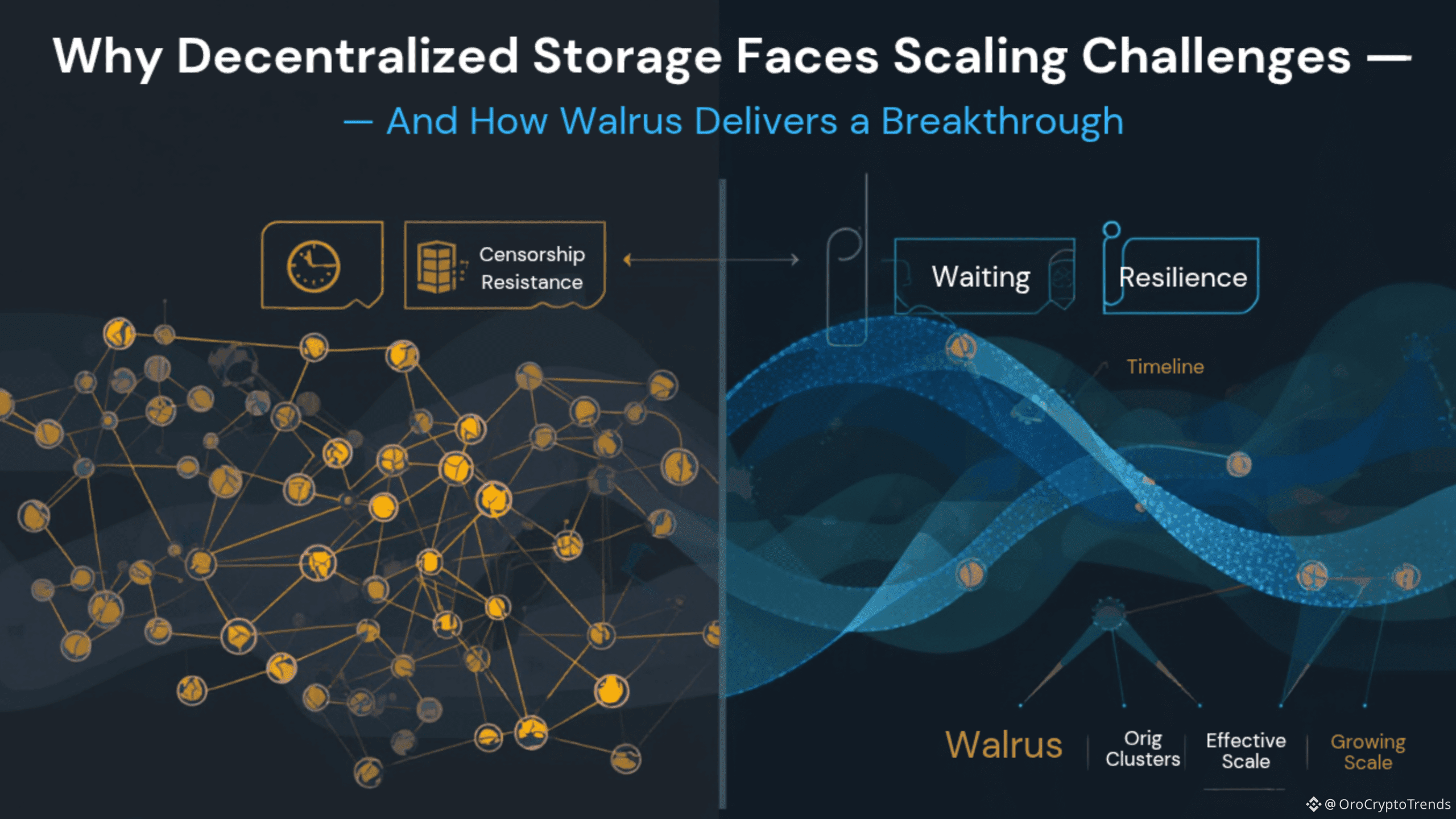

At first glance, decentralized storage appears to offer a simple solution: distribute data across many independent nodes so that no single point of failure can compromise access or integrity. This idea promises resilience, censorship resistance, and a future where your data isn’t held hostage by any one provider. But as these networks expand to accommodate real-world demands, fundamental issues begin to emerge—issues that traditional architectures simply aren’t equipped to solve at scale.

As Web3 projects mature and their user bases grow, the underlying decentralized storage networks often struggle to keep pace. Storage costs rise unexpectedly, data recovery in the event of node failures becomes sluggish, and the entire ecosystem can feel surprisingly brittle when stress-tested by outages or adversarial conditions. Under the hood, these problems stem from the way most decentralized storage systems approach redundancy and repair—a critical piece of the puzzle that’s all too often overlooked until things start to break.

The Two Main Approaches—and Their Pitfalls

Most current decentralized storage platforms rely on one of two basic strategies for data durability and availability.

The first is full replication. In this model, entire files are copied to multiple nodes, sometimes dozens or even hundreds, across the network. This guarantees that if one node disappears, the data is still available elsewhere. It’s conceptually simple and easy to reason about, but it comes at a steep price. Total storage equals the volume of data multiplied by the number of copies, so every new file costs the network many times its own size, making long-term sustainability a challenge.

The second approach is erasure coding, which slices files into smaller fragments, adds redundant fragments computed from them, and disperses the result across nodes. Only a subset of these fragments is needed to reconstruct the original data, so the system is more efficient than pure replication. However, when nodes fail or go offline, a common occurrence in decentralized networks, the recovery process can become cumbersome. With classic schemes such as Reed-Solomon, repairing even a single lost fragment means downloading enough surviving fragments to reconstruct the file, which amounts to roughly the full file size. This not only taxes network bandwidth but also increases latency, leading to slow recovery and higher operational costs.
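To put rough numbers on the two strategies, here is a minimal Python sketch. It assumes a classic (k, n) erasure code in which any k of n fragments rebuild the file and repairing one fragment requires fetching k others. The file size, copy count, and code parameters are hypothetical and not taken from any particular network.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scheme:
    name: str
    stored_bytes: int   # total bytes held across the network
    lost_bytes: int     # size of one lost copy or fragment
    repair_bytes: int   # bytes downloaded to rebuild that one loss


def replication(file_bytes: int, copies: int) -> Scheme:
    # Each copy is the whole file; repairing a copy means fetching one other copy.
    return Scheme("replication", file_bytes * copies, file_bytes, file_bytes)


def erasure_coded(file_bytes: int, k: int, n: int) -> Scheme:
    # Assumption: any k of n fragments rebuild the file, and repairing one
    # fragment requires fetching k surviving fragments.
    fragment = -(-file_bytes // k)  # ceiling division
    return Scheme("erasure coding", fragment * n, fragment, fragment * k)


# Hypothetical parameters: a 1 GB file, 25 full copies versus a (10, 30) code.
for scheme in (replication(1_000_000_000, 25), erasure_coded(1_000_000_000, 10, 30)):
    amplification = scheme.repair_bytes // scheme.lost_bytes
    print(scheme.name, scheme.stored_bytes, scheme.repair_bytes, amplification)
```

Under these assumptions the erasure-coded layout stores about 3 GB instead of 25 GB, but rebuilding a single 100 MB fragment still moves about 1 GB across the network, a tenfold repair amplification.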

In small or relatively static networks, these strategies can work adequately. But as node churn increases and the network grows more dynamic—a hallmark of any truly decentralized environment—the overhead required just to maintain data integrity starts to eclipse the benefits of decentralization itself. Instead of focusing resources on serving users, the network becomes preoccupied with continually patching itself up.

The Hidden Bottleneck: Recovery, Not Storage

It’s easy to assume that the main difficulty in decentralized storage is simply keeping data redundantly stored. But the real challenge lies in what happens when things go wrong. In live, distributed systems, node failures, intermittent connectivity, and malicious actors are the norm, not exceptions. The ability to quickly and efficiently repair lost data fragments is what separates robust, scalable systems from those that collapse under their own weight.

The unfortunate reality is that in many decentralized storage networks, recovery operations are disproportionately expensive. Each time a node loses data, the system may need to pull entire files from multiple locations just to restore a single missing piece. Over time, these repair cycles add up—sometimes consuming more bandwidth and resources than the original storage process itself. As a result, the cost and complexity of recovery can become the limiting factor that prevents decentralized storage from scaling to meet real-world needs.
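A back-of-the-envelope sketch shows how repair cycles can overtake the original upload. It again assumes a classic (k, n) code where each repair fetches k fragments. The function names and the loss count are illustrative assumptions, not measurements from a real network.

```python
def fragment_size(file_bytes: int, k: int) -> int:
    """Size of one fragment when the file is split into k data fragments."""
    return -(-file_bytes // k)  # ceiling division


def upload_bytes(file_bytes: int, k: int, n: int) -> int:
    """Bytes sent once to place all n fragments on the network."""
    return n * fragment_size(file_bytes, k)


def cumulative_repair_bytes(file_bytes: int, k: int, losses: int) -> int:
    """Bytes moved by `losses` single-fragment repairs, each fetching k fragments."""
    return losses * k * fragment_size(file_bytes, k)


# Hypothetical scenario: 1 GB file, (10, 30) code, and over the file's lifetime
# each of the 30 fragments is lost and repaired once.
upload = upload_bytes(1_000_000_000, k=10, n=30)
repair = cumulative_repair_bytes(1_000_000_000, k=10, losses=30)
print(upload, repair, repair / upload)
```

In this scenario the repairs move ten times as much data as the initial upload did, which is the kind of imbalance the paragraph above describes.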

Walrus: Rethinking Recovery from the Ground Up

Walrus introduces a paradigm shift with its Asynchronous Complete Data Storage (ACDS) architecture. Unlike conventional systems that treat recovery as a patchwork afterthought, Walrus is engineered from the very beginning with efficient, scalable repair in mind.

The core innovation lies in the use of two-dimensional erasure coding. Rather than simply slicing data in one direction, Walrus organizes fragments in a grid-like structure, distributing them both horizontally and vertically across the network. Each node is responsible for only a small slice of the overall data, and every fragment is cryptographically verifiable—ensuring integrity without the need to trust any individual operator.

This grid-based design unlocks powerful self-healing properties. When a node fails or a fragment is lost, the system doesn’t need to reconstruct the entire file or download massive amounts of data. Instead, it can rebuild missing pieces by exchanging tiny, targeted fragments with neighboring nodes. Even if several rows or columns in the grid go bad, the network can still recover the original data with minimal effort. This approach dramatically reduces the bandwidth and time required for repair, making recovery costs predictable and manageable even as the network scales.
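The intuition behind grid-based repair can be shown with a toy example. The sketch below uses simple XOR parity along rows and columns. This is a deliberately simplified stand-in, not the code Walrus uses, and it only tolerates one loss per row or column. All function names are hypothetical. The point is that a lost cell can be rebuilt from its own row (or column) alone, without touching the rest of the grid.

```python
from functools import reduce
from operator import xor


def xor_chunks(chunks):
    """XOR equal-length byte strings together, byte by byte."""
    return bytes(reduce(xor, column) for column in zip(*chunks))


def encode_grid(data, rows, cols, chunk_size):
    """Lay data out as rows x cols chunks, then add a parity column and a parity row."""
    padded = data.ljust(rows * cols * chunk_size, b"\0")
    grid = []
    for r in range(rows):
        start = r * cols * chunk_size
        row = [padded[start + c * chunk_size: start + (c + 1) * chunk_size]
               for c in range(cols)]
        row.append(xor_chunks(row))  # row parity
        grid.append(row)
    # Column parity, including the parity of the row-parity column.
    grid.append([xor_chunks([row[c] for row in grid]) for c in range(cols + 1)])
    return grid


def repair_from_row(grid, r, c):
    """Rebuild cell (r, c) using only the other cells in row r."""
    return xor_chunks([cell for j, cell in enumerate(grid[r]) if j != c])


def repair_from_column(grid, r, c):
    """Rebuild cell (r, c) using only the other cells in column c."""
    return xor_chunks([row[c] for i, row in enumerate(grid) if i != r])


data = b"decentralized storage!!!"          # 24 bytes of example data
grid = encode_grid(data, rows=3, cols=4, chunk_size=2)
lost = grid[1][2]
grid[1][2] = None                           # simulate a node losing its chunk
rebuilt = repair_from_row(grid, 1, 2)
bytes_read = sum(len(cell) for j, cell in enumerate(grid[1]) if j != 2)
print(rebuilt == lost, bytes_read, len(data))
```

Here the repair reads 8 bytes from one row rather than the full 24 bytes of data, and the same cell could equally be rebuilt from its column. A production scheme would use stronger codes in each dimension so that multiple losses per row or column remain recoverable.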

Beyond Storage: The Broader Impact of Predictable Recovery

Walrus’s approach has profound implications for the future of decentralized infrastructure. By decoupling recovery costs from overall network size and tying them instead to the actual volume of data stored, Walrus enables networks to grow organically without hitting a wall of diminishing returns. The system remains resilient and responsive, with robust defenses against both random failures and targeted attacks.

This reliability is crucial not just for massive, enterprise-scale deployments but for any use case where data longevity and accessibility are paramount—from NFT storage to decentralized social media, archival services, and beyond. Efficient recovery also helps keep costs transparent for users and operators alike, fostering a healthier, more sustainable ecosystem.

Key Questions and Considerations

When evaluating decentralized storage platforms, it’s tempting to focus on headline metrics: total capacity, node count, or token rewards. But these figures only tell part of the story. The real test of any storage network is how it handles adversity. Can it gracefully recover from node failures, outages, or malicious actors? Will recovery costs balloon as the network grows, or remain stable and predictable?

Walrus’s design philosophy puts these considerations front and center. With cryptographic proofs, users don’t have to place blind trust in unknown third parties, because every fragment can be independently verified. And because Walrus operates as a storage layer rather than a blockchain itself, it can integrate with existing blockchain projects, enhancing data availability without introducing unnecessary complexity.
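As a rough illustration of what "independently verified" can mean, the sketch below checks a fragment against a digest published when the data was stored. This article does not describe Walrus's actual proof scheme, so a plain list of SHA-256 digests is used here as an assumed stand-in, and the function names are hypothetical.

```python
import hashlib


def commit(fragments):
    """Compute one SHA-256 digest per fragment; the list is published at upload time."""
    return [hashlib.sha256(fragment).digest() for fragment in fragments]


def verify(index, fragment, digests):
    """Check a fragment returned by an untrusted node against the published digest."""
    return hashlib.sha256(fragment).digest() == digests[index]


fragments = [b"frag-0", b"frag-1", b"frag-2"]
digests = commit(fragments)

print(verify(1, b"frag-1", digests))     # honest node returns the stored fragment
print(verify(1, b"tampered", digests))   # altered fragment fails the check
```

The reader only needs the published digests, not any trust in the node that served the fragment. Real systems typically compress such a list into a single short commitment, for example a Merkle root.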

Conclusion: Building a Scalable Future for Decentralized Storage

The promise of decentralized storage isn’t just about distributing data—it’s about building systems that can thrive in unpredictable, adversarial environments. Most networks stumble not because the underlying idea is flawed, but because they underestimate the toll that recovery takes over time. Walrus demonstrates that, with the right architecture, it’s possible to overcome these hurdles and unlock truly scalable, resilient storage for the Web3 era.

So next time you assess a decentralized storage solution, dig deeper than surface-level metrics. Ask how the network recovers from inevitable failures. That’s where true scalability and long-term value are found.

FAQs

Q: Is efficient recovery only important for very large files or datasets?

No. Any persistent storage solution, regardless of file size, benefits enormously from efficient, predictable repair mechanisms. Over time, even small files can accumulate significant overhead if recovery is slow or bandwidth-intensive.

Q: Do users need to trust the nodes storing their data in Walrus?

No. Walrus employs cryptographic proofs of storage, so users can independently verify that their data is stored and intact, eliminating the need for trust in any specific node operator.

Q: Is Walrus a blockchain?

No. Walrus functions as a decentralized storage layer that works alongside blockchains, enabling robust data availability and integrity without being a blockchain itself.

Disclaimer: Not Financial Advice.