There is a quiet assumption embedded in many blockchain designs: that data and execution should live close together. Early systems reinforced this idea by necessity. Transactions were small, state was manageable, and the cost of storing everything directly on-chain felt acceptable. Over time, that assumption has become less a design choice and more a constraint.

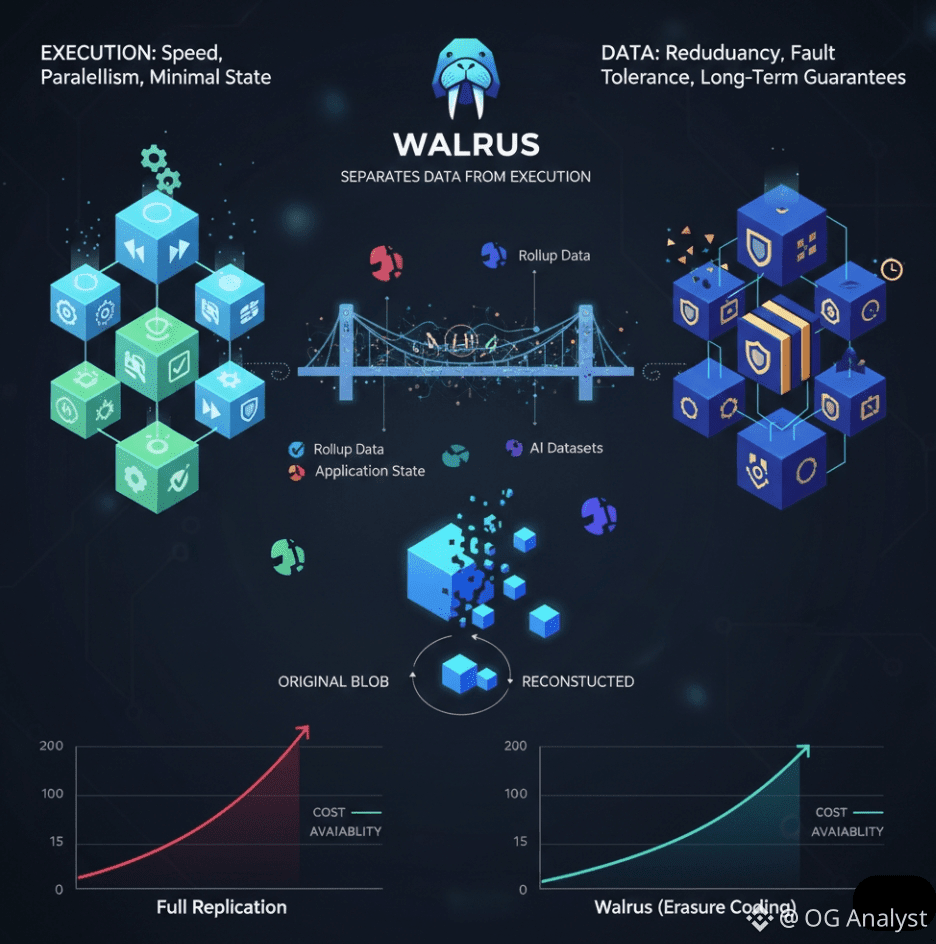

Walrus starts from a different premise. It treats data and execution as distinct responsibilities that should evolve independently. Execution is about validating state transitions. Data is about persistence, retrievability, and guarantees over time. Mixing the two creates friction, inefficiency, and unnecessary risk. Separating them creates room to scale.

This separation is not an abstraction for its own sake. It is a response to the reality that modern decentralized systems generate far more data than execution layers are designed to handle.

Execution Scales Differently Than Data

Execution benefits from speed, parallelism, and minimal state. Data benefits from redundancy, fault tolerance, and long-term guarantees. When both are forced into the same layer, trade-offs become unavoidable. Either execution slows down to accommodate data, or data becomes fragile to preserve execution performance.

Walrus removes that tension by letting execution layers, such as Sui smart contracts or rollups, focus on computation, while Walrus itself keeps data available and verifiable, with availability backed by economic penalties. The two interact through cryptographic commitments and on-chain coordination, not through raw data storage.

This design allows blockchains to remain lean without pushing data responsibility onto opaque off-chain systems.

The Blob-Centric View of Data

To understand Walrus, it helps to forget files, transactions, and blocks for a moment and think in terms of blobs. A blob in Walrus is a large, opaque unit of data that the protocol does not try to interpret. It does not matter whether the blob contains images, rollup data, AI datasets, or application state snapshots. Walrus treats all of it the same.

This blob-centric architecture simplifies the system in an important way. By refusing to specialize around data type, Walrus avoids assumptions that would limit future use cases. The protocol is concerned only with three questions:

Was the blob paid for?

Is it still available?

Can misbehavior be proven and penalized?

Everything else is left to higher layers.

Blobs are referenced on-chain through metadata objects, not stored directly. This keeps coordination transparent while keeping data weight off the execution layer.
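To make the idea of an on-chain reference concrete, here is a minimal sketch of what such a metadata record could look like. The field names (`blob_id`, `size_bytes`, `paid_until`) and the use of a plain SHA-256 digest as the identifier are assumptions made for illustration, not Walrus's actual object schema.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class BlobMetadata:
    """Hypothetical on-chain record: it references the blob, never contains it."""
    blob_id: str     # hex SHA-256 digest of the blob contents (assumed scheme)
    size_bytes: int  # length of the payload held off the execution layer
    paid_until: int  # last epoch covered by the storage payment (assumed field)


def register_blob(data: bytes, paid_until: int) -> BlobMetadata:
    """Build the small record that would live on the execution layer."""
    return BlobMetadata(hashlib.sha256(data).hexdigest(), len(data), paid_until)


def matches_commitment(meta: BlobMetadata, data: bytes) -> bool:
    """Check retrieved bytes against the recorded size and digest."""
    return (
        len(data) == meta.size_bytes
        and hashlib.sha256(data).hexdigest() == meta.blob_id
    )


blob = b"rollup batch #1" * 1000
meta = register_blob(blob, paid_until=42)
print(meta.size_bytes, len(meta.blob_id))      # payload size vs. 64 hex characters
print(matches_commitment(meta, blob))          # untouched data passes
print(matches_commitment(meta, blob[:-1] + b"?"))  # altered data fails
```

The record stays a few dozen bytes no matter how large the blob is, which is the property that keeps data weight off the execution layer.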

Why Erasure Coding Changes the Economics Entirely

Most people understand decentralized storage through replication. Copy the same data across many nodes, and availability improves. The problem is that cost scales linearly with redundancy: keeping n full copies costs n times the size of the data. At some point, decentralization becomes prohibitively expensive.

Walrus takes a different path by using erasure coding. Instead of storing full copies, the protocol encodes each blob into fragments and spreads them across nodes. Any sufficiently large subset of those fragments can reconstruct the original blob. The system no longer depends on every node behaving correctly, only on enough of them doing so.

This has two economic consequences that are easy to overlook:

First, storage providers do not need to store everything to contribute value. Partial responsibility is enough.

Second, users are no longer paying for full redundant copies; they are paying for a smaller coding overhead that still delivers an availability guarantee.

Cost efficiency emerges not from cutting corners, but from designing redundancy more intelligently.
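The reconstruction property described above can be shown with a toy k-of-n code. The sketch below treats every group of k bytes as points on a polynomial over the prime field of size 257 and hands out n evaluations of it, so any k fragments recover the data. This is a textbook polynomial code chosen for brevity, not Walrus's production encoding, and the function names are hypothetical.

```python
P = 257  # smallest prime above 255, so every byte value is a field element


def interpolate(points, x):
    """Evaluate at x the unique polynomial through `points` (Lagrange form, mod P)."""
    total = 0
    for xi, yi in points:
        num, den = 1, 1
        for xj, _ in points:
            if xj != xi:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data: bytes, k: int, n: int) -> list[list[int]]:
    """Split data into n fragments (requires k <= n <= 257); any k rebuild it."""
    padded = data + bytes(-len(data) % k)  # zero-pad to a multiple of k
    fragments = [[] for _ in range(n)]
    for start in range(0, len(padded), k):
        points = list(enumerate(padded[start:start + k]))
        for j in range(n):
            fragments[j].append(interpolate(points, j))
    return fragments


def decode(available: dict[int, list[int]], k: int, length: int) -> bytes:
    """Rebuild the original bytes from any k fragments, keyed by fragment index."""
    if len(available) < k:
        raise ValueError("not enough fragments to reconstruct the blob")
    chosen = sorted(available.items())[:k]
    out = bytearray()
    for row in range(len(chosen[0][1])):
        points = [(j, frag[row]) for j, frag in chosen]
        out.extend(interpolate(points, x) for x in range(k))
    return bytes(out[:length])


blob = b"opaque blob contents"
fragments = encode(blob, k=3, n=7)
survivors = {j: fragments[j] for j in (1, 4, 6)}  # four of seven fragments lost
print(decode(survivors, k=3, length=len(blob)) == blob)
```

Each fragment holds roughly one third of the blob, so the seven fragments together take about 7/3 of the original size while tolerating the loss of any four.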

Full Replication vs Walrus: A Practical Comparison

Full replication is simple to reason about but inefficient in practice. If ten nodes store the same file, nine of them are redundant in normal operation. That redundancy only becomes useful during failures, yet users pay for it continuously.

Walrus spreads risk instead of duplicating it. Because each node stores only a fragment, the system can tolerate multiple failures without incurring the constant overhead of full duplication. Storage costs drop, but reliability remains high.

More importantly, this approach aligns incentives more cleanly. Storage providers are accountable for their specific responsibility. Failure is measurable. Penalties are targeted. In fully replicated systems, accountability is often diluted because any single node’s failure rarely causes immediate harm.
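To put rough numbers on the comparison, the sketch below computes the storage overhead and the number of node failures tolerated under each scheme. The parameters are illustrative choices, not Walrus's actual configuration, and the model assumes an idealized k-of-n code in which each fragment is exactly 1/k of the blob.

```python
def replication_profile(copies: int) -> tuple[float, int]:
    """Return (storage overhead factor, node failures tolerated) for full copies."""
    if copies < 1:
        raise ValueError("need at least one copy")
    return float(copies), copies - 1


def erasure_profile(k: int, n: int) -> tuple[float, int]:
    """Same pair for an idealized k-of-n code with fragments of size 1/k."""
    if not 0 < k <= n:
        raise ValueError("require 0 < k <= n")
    return n / k, n - k


# Both setups survive six failed nodes, at very different storage cost.
print(replication_profile(7))   # (7.0, 6)
print(erasure_profile(4, 10))   # (2.5, 6)
```

Under these assumed parameters, the erasure-coded layout matches the failure tolerance of seven full copies while storing 2.5 times the blob size instead of 7 times.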

Designing for Modular Blockchains, Not Monoliths

Walrus feels most natural in a modular blockchain world. Execution layers, settlement layers, and data availability layers each specialize instead of competing for resources. In such systems, data availability is not optional; it is foundational.

Rollups, for example, can execute transactions off-chain efficiently, but they still depend on data being available for verification and dispute resolution. If data disappears, trust collapses. Walrus provides a way to externalize that responsibility without externalizing trust.

Because Walrus uses Sui as a coordination layer rather than as a storage medium, it fits cleanly into this modular vision. Applications can reference data objects, verify availability guarantees, and compose logic around them without bloating execution state.

Economic Enforcement as a Design Philosophy

A recurring theme across Walrus’ architecture is enforcement through economics rather than assumptions. Storage providers stake WAL. Availability is challenged. Failures are penalized. The protocol does not rely on reputation systems or informal agreements.

This is especially important for data, where failure often shows up too late. A missing dataset is not always obvious at the moment it disappears. Walrus is designed to make unavailability detectable and costly, even when no single user is actively watching.

That shifts reliability from social expectations to protocol rules.
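The stake, challenge, and penalty loop can be sketched as a toy model. Everything here is an assumption made for illustration: the `Provider` class, the hash-based commitments, and the fixed penalty are hypothetical, and a real protocol would use a more careful proof than returning the whole fragment.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Provider:
    stake: int                   # tokens at risk if a challenge fails
    fragments: dict[int, bytes]  # fragment index -> bytes actually held


def commit(fragments: dict[int, bytes]) -> dict[int, str]:
    """Record one digest per fragment at storage time (assumed commitment scheme)."""
    return {i: hashlib.sha256(f).hexdigest() for i, f in fragments.items()}


def challenge(provider: Provider, commitments: dict[int, str],
              index: int, penalty: int) -> bool:
    """Ask for one fragment; deduct stake if it is missing or does not match."""
    data = provider.fragments.get(index)
    ok = data is not None and hashlib.sha256(data).hexdigest() == commitments[index]
    if not ok:
        provider.stake = max(0, provider.stake - penalty)
    return ok


provider = Provider(stake=100, fragments={0: b"fragment-0", 1: b"fragment-1"})
commitments = commit(provider.fragments)

del provider.fragments[1]  # the provider silently drops a fragment

print(challenge(provider, commitments, index=0, penalty=25), provider.stake)
print(challenge(provider, commitments, index=1, penalty=25), provider.stake)
```

The second challenge fails and costs the provider stake even though no user requested that fragment, which is the sense in which unavailability becomes detectable without anyone actively watching.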

A Layer You Notice Only When It Fails

Good infrastructure is rarely visible when it works. Walrus is designed to be a layer that most users never think about directly. Applications continue functioning. Data remains accessible. Execution layers stay responsive.

Its value becomes obvious only in failure scenarios: when nodes drop out, when censorship pressure appears, or when centralized services quietly vanish. In those moments, separating data from execution stops being an architectural preference and becomes a necessity.

Walrus is built for that reality. Not as a storage product to market, but as a data availability layer to depend on.