In decentralized infrastructure, speed and reliability are often treated as opposing goals. Systems that move fast are assumed to cut corners, while systems that emphasised reliability are expected to slow down under the weight of safeguards. This framing has shaped many design decisions in Web3, leading to architectures that optimize aggressively for one dimension while quietly sacrificing the other.

The problem is that real-world systems do not get to choose between speed and reliability. They are expected to deliver both, consistently, and under changing conditions.

@Walrus 🦭/acc is built around that reality. Rather than accepting the trade-off as inevitable, Walrus approaches speed and reliability as properties that must be designed together, not balanced after the fact. Its architecture reflects a simple insight: speed that cannot be trusted is useless, and reliability that cannot keep up with real workloads eventually becomes irrelevant.

Understanding how Walrus balances these forces requires looking beyond surface metrics and into how decentralized storage actually behaves over time.

Why the Speed vs Reliability Trade-Off Exists

In many decentralized systems, speed is achieved by simplifying assumptions. Data is replicated broadly, availability is assumed rather than proven, and verification is deferred or minimized. These shortcuts allow systems to respond quickly, but they introduce hidden fragility. When conditions change—nodes leave, incentives weaken, or demand spikes—the system struggles to maintain its guarantees.

On the other hand, systems that prioritize reliability often rely on heavy coordination, frequent verification, and conservative redundancy. These measures improve safety but add latency and operational overhead. As a result, such systems can feel sluggish, especially when serving interactive applications.

The mistake is treating these outcomes as inherent. In reality, they are artifacts of design choices.

Walrus starts by questioning where latency actually comes from and what reliability truly requires.

Reliability Is About Guarantees, Not Redundancy

A common misconception is that reliability comes from storing more copies of data. While redundancy helps in small systems, it quickly becomes inefficient and difficult to verify at scale. More copies mean more storage overhead, more verification work, and more economic pressure.

Walrus replaces blind redundancy with provable availability. Instead of assuming data is reliable because it exists in many places, the system requires participants to demonstrate that they still hold their assigned data fragments. These proofs are lightweight and can be verified quickly, allowing the network to maintain strong guarantees without excessive overhead.

This shift is critical. Reliability is no longer tied to how much data is stored, but to how confidently the system can assert that the data is available when needed.

By making reliability measurable rather than assumed, Walrus removes a major source of uncertainty—and uncertainty is what slows systems down.

Speed Comes From Structure, Not Shortcuts

In decentralized storage, latency often arises from disorganization. When data placement is ad hoc, retrieval becomes unpredictable. When verification is infrequent, recovery processes become reactive rather than proactive.

Walrus addresses this by structuring how data is encoded, distributed, and tracked. Data is broken into fragments using erasure coding and placed according to clear rules. Because the system knows exactly which fragments are required for reconstruction and where responsibility lies, retrieval paths are well-defined.

This structure reduces the work needed to locate and reconstruct data. Instead of searching for intact copies, the system retrieves a sufficient subset of fragments. That predictability translates directly into faster access.

Speed, in this context, is not about rushing. It is about removing unnecessary steps.

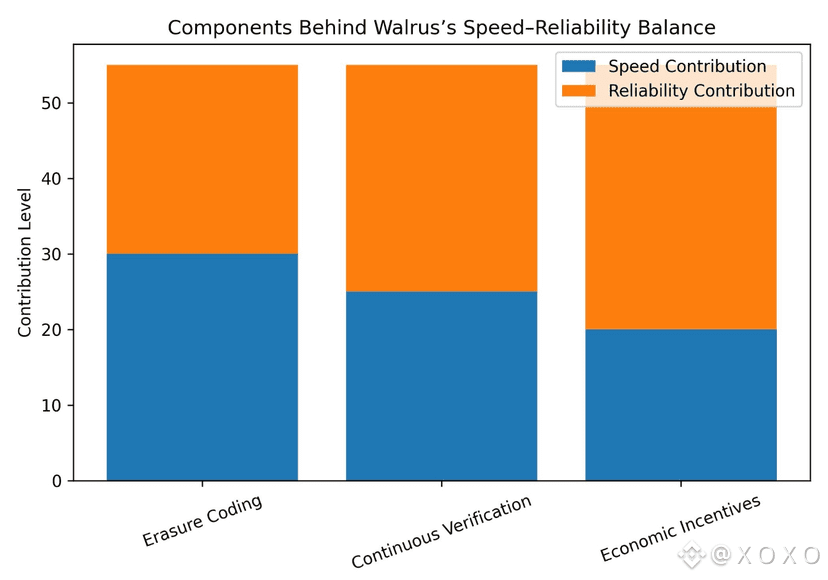

Why Verification Can Be Fast

Verification is often blamed for slowing decentralized systems. If every check requires scanning large datasets or coordinating many nodes, latency increases quickly.

Walrus avoids this by designing verification to be incremental and fragment-based. Storage providers prove availability for small, well-defined pieces of data. These proofs are cheap to generate and verify compared to checking full replicas.

Because verification is continuous rather than episodic, the system maintains an up-to-date view of availability. Problems are detected early, long before they affect retrieval. This proactive approach prevents the slow, cascading failures that plague systems relying on occasional audits.

Reliability improves, and speed is preserved.

Balancing Through Economic Incentives

Speed and reliability are not purely technical properties. They are shaped by incentives.

If storage providers are rewarded simply for participation, they may behave opportunistically, storing data inconsistently or prioritizing short-term gain. This behavior introduces variability, which slows the system when corrections are needed.

Walrus aligns incentives with desired outcomes. Providers are rewarded for demonstrable availability and integrity. Because their compensation depends on verifiable behavior, it becomes rational to maintain data correctly and consistently.

This consistency matters. Predictable behavior at the participant level leads to predictable system performance. When the system can rely on its participants, it does not need heavy-handed safeguards that introduce latency.

Economic discipline becomes a performance feature.

Why Erasure Coding Matters for Both Dimensions

Erasure coding is often discussed as a storage efficiency technique, but its impact on speed and reliability is just as important.

By reducing the amount of data that must be retrieved to reconstruct content, erasure coding lowers retrieval latency. Instead of waiting for specific replicas, the system can accept any sufficient subset of fragments. This flexibility increases the probability of fast retrieval, even under partial failures.

At the same time, erasure coding improves reliability by allowing the system to tolerate node loss without panic. Fragment loss does not immediately threaten availability, and recovery can happen gradually rather than urgently.

Speed improves because the system is not constantly reacting to emergencies. Reliability improves because emergencies are less likely to occur.

Avoiding the Cost of Over-Coordination

Another hidden source of latency in decentralized systems is over-coordination. When systems require frequent global agreement or centralized checkpoints, responsiveness suffers.

Walrus minimizes unnecessary coordination by localizing responsibility. Each storage provider is accountable for specific fragments. Verification and incentives operate at this granular level rather than requiring network-wide synchronization.

This design allows many processes to run in parallel. Verification, repair, and retrieval can proceed independently across different parts of the dataset. The network does not need to pause or slow down to maintain consistency.

Parallelism is a key ingredient in balancing speed and reliability.

Reliability Over Time, Not Just at a Moment

Many systems appear reliable in the short term. The real test comes over months and years, as data accumulates and conditions change.

Walrus is designed with long-term behavior in mind. Its economic model assumes that incentives will fluctuate and participation will change. By keeping storage overhead low and verification efficient, the system reduces the cost of maintaining reliability over time.

This matters because long-term reliability supports speed indirectly. Systems that are burdened by historical liabilities often slow down as they grow. Walrus’s efficiency prevents this drag, allowing performance to remain stable as usage increases.

Why Speed Without Reliability Is a Trap

Fast systems that occasionally fail may be acceptable in experimental environments. They are not acceptable for governance records, enterprise data, or institutional memory.

Walrus treats reliability as a prerequisite for speed. There is no attempt to optimize for headline performance metrics at the expense of guarantees. Instead, performance emerges from the absence of crises.

When data is consistently available, retrieval paths are known, and incentives are aligned, the system does not need to recover from shocks. It simply operates.

That steady-state operation is where real speed lives.

Why Reliability Without Speed Is Also Insufficient

The opposite extreme—systems that are reliable but slow—also fails to meet real needs. Data that cannot be accessed in a timely manner might as well be unavailable.

Walrus avoids this by ensuring that reliability mechanisms do not dominate the critical path. Verification happens continuously in the background. Redundancy is controlled rather than excessive. Retrieval uses minimal necessary data.

As a result, reliability does not impose a heavy tax on responsiveness.

Implications for Governance and Enterprise Use Cases

The balance between speed and reliability is especially important for governance and enterprise contexts. Governance decisions often need to be referenced quickly, but they must also be accurate and immutable. Enterprises require timely access to data, but they cannot tolerate uncertainty.

Walrus’s design supports these requirements by ensuring that reliability is always present, even when speed is demanded. Data retrieval does not become a gamble, and verification does not become a bottleneck.

This combination is what makes decentralized storage viable beyond experimentation.

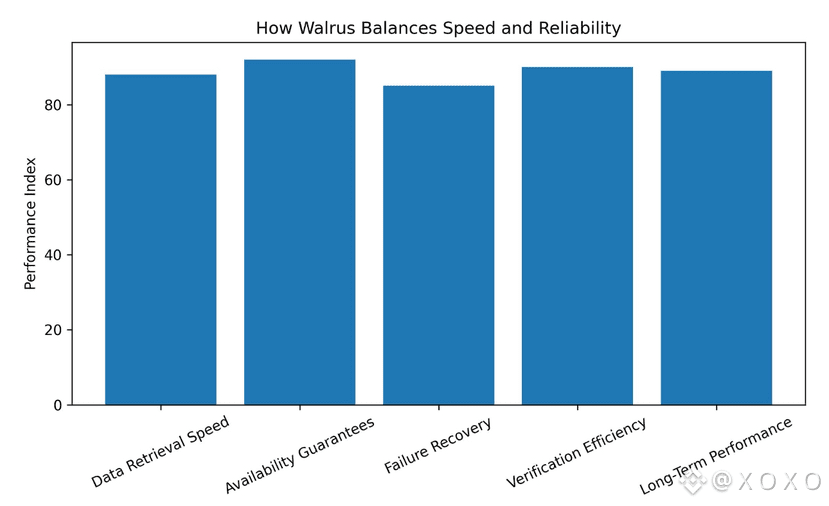

A Different Definition of Performance

In many Web3 conversations, performance is reduced to throughput or latency under ideal conditions. Walrus adopts a broader definition.

Performance includes how quickly data can be retrieved under partial failure.

It includes how smoothly the system operates during incentive shifts.

It includes how much effort is required to maintain guarantees as data grows.

Under this definition, balancing speed and reliability is not a compromise. It is a necessity.

Why This Balance Scales

Perhaps the most important aspect of Walrus’s approach is that the balance between speed and reliability improves with scale rather than degrades.

As data grows, erasure coding becomes more effective. As participation increases, verification becomes more distributed. As incentives mature, behavior becomes more predictable.

Instead of amplifying weaknesses, scale reinforces strengths.

Closing Reflection

Speed and reliability are not enemies. They become enemies only when systems rely on excess, assumptions, or shortcuts. Walrus demonstrates that with disciplined design, the two can reinforce each other.

By structuring data intelligently, aligning incentives carefully, and treating verification as a continuous process, Walrus delivers storage that is both responsive and dependable. Speed emerges from order. Reliability emerges from proof.

In decentralized infrastructure, that combination is rare.

And as decentralised systems take on more responsibility, it is exactly what will matter most.