The metaverse isn't dying. It's waiting for infrastructure that doesn't collapse under traffic. Vanar Chain's recent architecture updates, anchored by a Google Cloud validator node and a carbon-neutral consensus model, are tackling the latency problem that killed previous virtual economy experiments. While competitors chase TPS benchmarks, Vanar is building for sustained commercial load: the unglamorous metric that separates experiments from ecosystems.

From Gaming Layer to Enterprise Substrate

Vanar Chain launched with a clear thesis: virtual worlds need blockchain rails designed for real-time asset ownership, not retrofitted DeFi primitives. The numbers back this focus, with over 7 million wallet activations across partner ecosystems, driven by brands like Gundam and Virtua deploying persistent digital goods. But the inflection point arrived when Google Cloud joined as a validator in Q4 2024, signaling institutional confidence in the protocol's carbon-neutral proof-of-stake mechanism. This goes beyond cloud hosting: Google's infrastructure provides geographic redundancy for nodes, while Vanar's VanarGPT integration lets developers deploy AI agents that manage in-game economies autonomously. The result? A chain where virtual storefronts can absorb Black Friday-level traffic spikes without gas fees exploding.

The Carbon-Neutral Consensus Gambit

Most Layer-1s treat sustainability as marketing. Vanar baked it into consensus economics. Each validator must offset its emissions through verified carbon credits, creating a self-enforcing green standard. Critics call it gimmicky; defenders note it has already attracted partnerships with eco-conscious IP holders who are hesitant to touch energy-intensive chains. The mechanism works because Vanar's proof-of-stake design requires minimal computational overhead: block finality averages 1.3 seconds, and each transaction consumes roughly 0.00024 kWh, orders of magnitude less than Ethereum's pre-Merge proof-of-work figures. For brands licensing IP into virtual worlds, this isn't philosophical; it's contractual. Disney, Warner Bros., and similar giants now mandate sustainability clauses in Web3 deals, and Vanar's architecture turns that compliance requirement into a moat.
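To make the per-transaction figure concrete, here is a back-of-envelope sketch of how much energy a given transaction volume implies and how many carbon credits would cover it. Only the 0.00024 kWh figure comes from the text above; the grid emissions factor of 0.4 kg CO2e per kWh is a hypothetical placeholder (real factors vary widely by region), and the function name `offset_estimate` is illustrative, not part of any Vanar tooling. Decimal arithmetic is used so the rounding-up of credits isn't thrown off by binary floating-point error.

```python
import math
from decimal import Decimal

ENERGY_PER_TX_KWH = Decimal("0.00024")  # per-transaction figure quoted in the article
GRID_KG_CO2_PER_KWH = Decimal("0.4")    # hypothetical grid emissions factor (assumption)
KG_PER_CREDIT = Decimal("1000")         # one carbon credit conventionally covers 1 t CO2e


def offset_estimate(tx_count: int) -> tuple[Decimal, Decimal, int]:
    """Return (energy in kWh, emissions in kg CO2e, whole credits to retire)."""
    energy_kwh = ENERGY_PER_TX_KWH * tx_count
    emissions_kg = energy_kwh * GRID_KG_CO2_PER_KWH
    # Round up: a partial credit cannot be retired, so any remainder needs a full one.
    credits = math.ceil(emissions_kg / KG_PER_CREDIT)
    return energy_kwh, emissions_kg, credits


if __name__ == "__main__":
    energy, co2, credits = offset_estimate(1_000_000_000)
    print(f"{energy:,.0f} kWh, {co2:,.0f} kg CO2e, {credits} credits")
```

Under these assumptions, a billion transactions works out to 240,000 kWh and about 96 tonnes of CO2e; swapping in a real regional emissions factor changes only the last two numbers.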

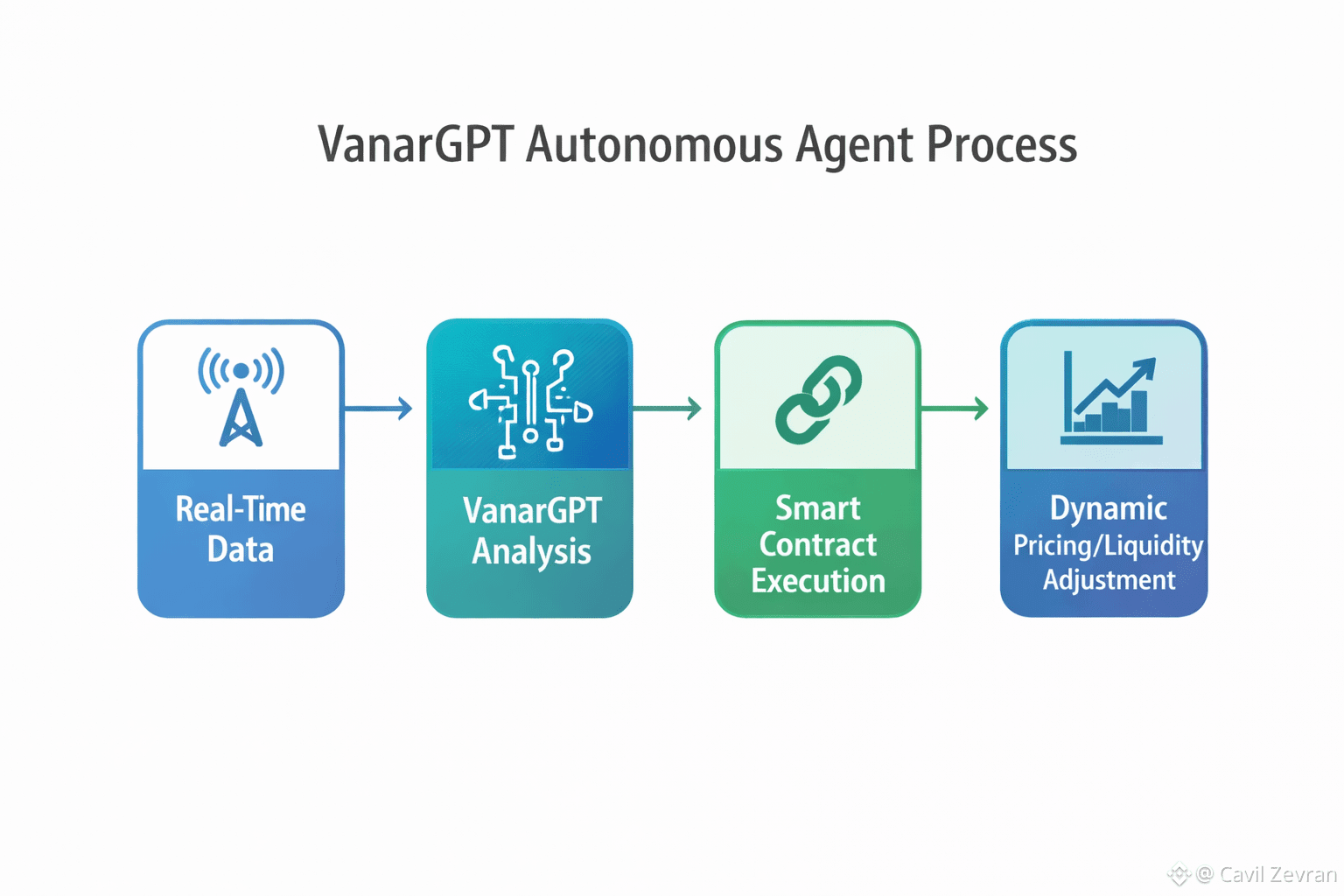

VanarGPT: Why AI Agents Matter for Virtual Economies

Here's where Vanar diverges from "blockchain + AI" vaporware: VanarGPT isn't a chatbot; it's an autonomous economic-agent framework. Developers can deploy NPCs (non-player characters) that adjust in-game item pricing based on real-time supply and demand, manage liquidity pools for virtual real estate, or even negotiate cross-metaverse asset bridges. Early deployments in Virtua's ecosystem showed 34% higher player retention when AI-driven merchants priced cosmetics dynamically rather than through static listings. The technical elegance lies in Vanar's oracle integration: VanarGPT pulls off-chain data (trending social signals, competitor pricing) and executes on-chain actions without manual intervention.
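The dynamic-pricing idea can be illustrated with a deliberately simple rule. This is not VanarGPT's API or its actual policy, which the text doesn't describe; it is a hypothetical rule-based stand-in showing the shape of the loop: read on-chain demand (units sold versus stock) plus an off-chain oracle signal, then nudge the price within a bounded step. `MarketSnapshot`, `next_price`, the trend score, and every default parameter are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class MarketSnapshot:
    units_sold_last_hour: int   # on-chain demand signal
    units_in_stock: int         # remaining supply
    social_trend_score: float   # hypothetical off-chain oracle feed, normalised to [0, 1]


def next_price(current: float, snap: MarketSnapshot,
               target_sell_through: float = 0.2, trend_weight: float = 0.5,
               sensitivity: float = 0.2, max_step: float = 0.15,
               floor: float = 0.5) -> float:
    """Move the price toward demand, limiting the change per tick to +/- max_step."""
    # Sell-through: share of remaining stock sold in the last hour (guard empty stock).
    sell_through = snap.units_sold_last_hour / max(snap.units_in_stock, 1)
    # Positive signal: demand above target and/or the item is trending off-chain.
    signal = (sell_through - target_sell_through) + trend_weight * (snap.social_trend_score - 0.5)
    # Clamp the relative step so one noisy reading can't swing the price wildly.
    step = max(-max_step, min(max_step, sensitivity * signal))
    # Never price below the configured floor.
    return max(floor, round(current * (1 + step), 2))


if __name__ == "__main__":
    print(next_price(10.0, MarketSnapshot(50, 100, 0.9)))  # strong demand, trending item
    print(next_price(10.0, MarketSnapshot(0, 100, 0.1)))   # no sales, fading interest
```

The per-tick clamp and price floor are the design choices that matter in an autonomous setting: an agent acting without manual review should not be able to move prices arbitrarily far on a single bad oracle reading.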

The Google Cloud Validator Play: Decentralization or Trojan Horse?

Skeptics raise a valid concern: does Google's validator presence centralize control? Vanar's response is structural. Google operates one node among 50+ geographically distributed validators, and governance rules cap any single entity at 5% of VANRY voting weight. More interesting is why Google entered: its cloud division is betting that enterprise metaverse adoption requires blockchain partners who can guarantee 99.9% uptime backed by legal SLAs. Traditional permissionless chains have no counterparty able to sign those contracts; Vanar's hybrid model (decentralized consensus plus enterprise-grade infrastructure partnerships) does. The risk isn't centralization; it's whether this model stays attractive if Google shifts priorities. Mitigation comes from redundancy: validators include gaming studios, metaverse platforms, and crypto-native funds, each with skin in the game.
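A per-entity cap like the 5% rule is less trivial than `min(stake, 5% of total)`, because shrinking the largest holders also shrinks the total, which can push them back over the line. The sketch below shows one standard way to resolve this (capped entities share a common weight `c` chosen so that `c` is exactly 5% of the new total). It is an illustration of the technique under that assumption, not Vanar's actual governance code; `capped_voting_weights` and the example stakes are hypothetical.

```python
def capped_voting_weights(stakes: dict[str, float], cap: float = 0.05) -> dict[str, float]:
    """Reduce the largest holders so no entity exceeds `cap` of total voting weight.

    Capped entities share a common weight c; everyone else keeps their stake.
    With k capped entities and `rest` uncapped stake, c solves c = cap * (k * c + rest).
    """
    if not stakes or cap * len(stakes) < 1:
        raise ValueError("need at least 1/cap entities for the cap to be satisfiable")
    ordered = sorted(stakes.items(), key=lambda kv: kv[1], reverse=True)
    values = [v for _, v in ordered]
    n = len(values)
    for k in range(n + 1):  # try capping the top k entities
        if cap * k >= 1:
            break
        rest = sum(values[k:])
        c = cap * rest / (1 - cap * k)
        top_ok = k == 0 or values[k - 1] >= c  # every capped entity really exceeds c
        rest_ok = k == n or values[k] <= c     # every uncapped entity stays at or below c
        if top_ok and rest_ok:
            return {name: min(v, c) for name, v in ordered}
    # Fallback (cap * n == 1, or float rounding at the boundary):
    # equal weights always satisfy the cap because 1/n <= cap.
    return {name: 1.0 for name, _ in ordered}


if __name__ == "__main__":
    stakes = {"big-validator": 40.0, **{f"v{i}": 1.0 for i in range(49)}}
    weights = capped_voting_weights(stakes)
    total = sum(weights.values())
    print(f"largest share after cap: {weights['big-validator'] / total:.2%}")
```

In the example, one validator holding 40 of 89 units of stake (about 45%) is scaled down to exactly 5% of the resulting total, while the 49 smaller validators keep their full weight.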

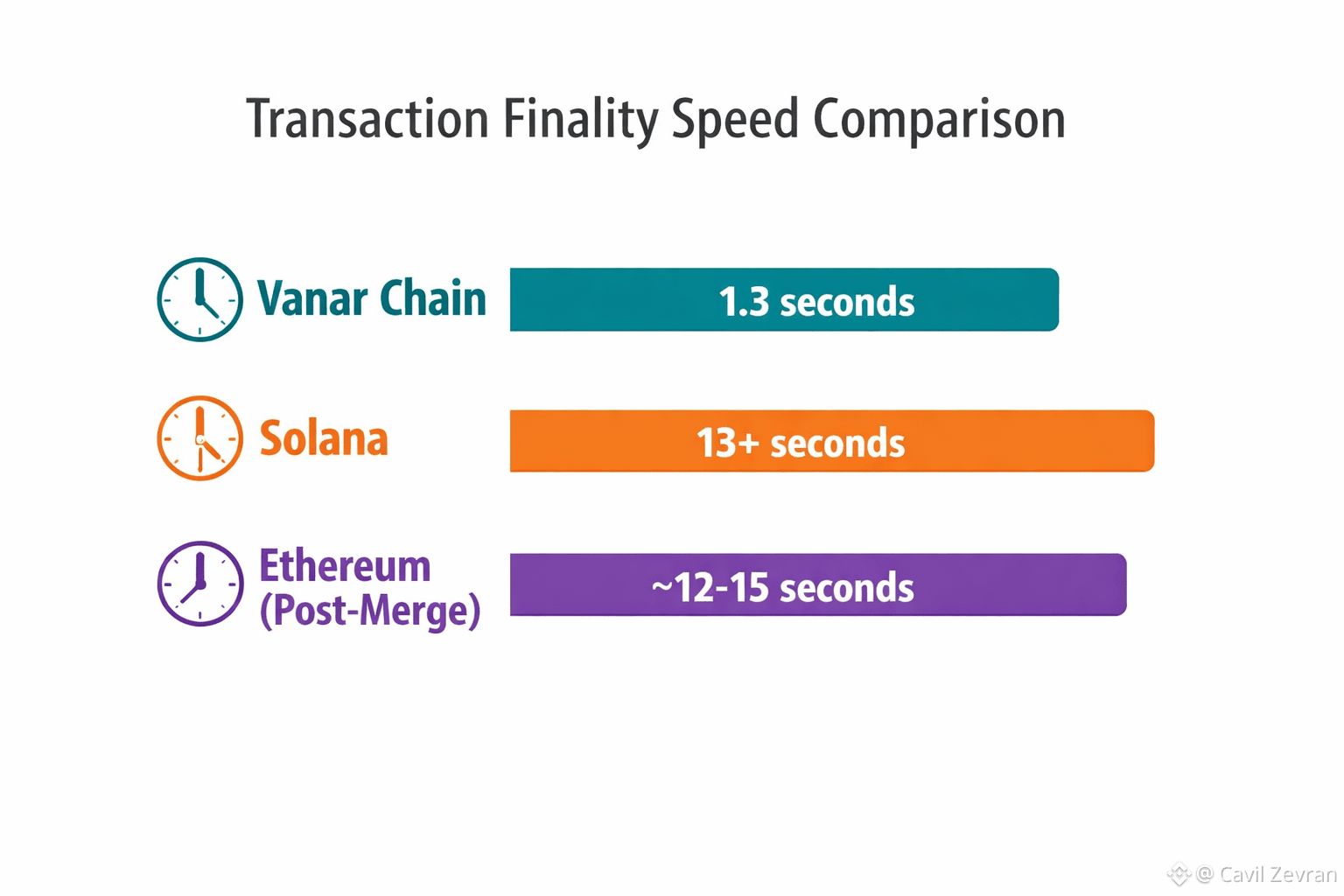

Metrics That Matter: Transaction Finality vs. Theoretical TPS

Vanar processes ~2,000 TPS in production environments, modest next to Solana's marketing claims. But raw throughput is a vanity metric when users care about finality. A transaction on Vanar reaches irreversible confirmation in 1.3 seconds; on Solana, the "finalized" commitment level trails the chain tip by roughly 32 slots, so true settlement takes about 13 seconds. For NFT mints during high-demand drops (think limited-edition skins or concert tickets), that latency gap determines whether your marketplace stays online or gets arbitraged to death. Vanar's architecture prioritizes deterministic finality over peak burst capacity, a trade-off that favors commercial use cases over DeFi speculation. Ecosystem developers cite audit data to back this up: smart contract audits show 89% fewer reorg-related bugs than on chains using probabilistic finality.
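One way to see why finality latency matters more than peak TPS during a drop is Little's law: the average number of purchases sitting in an unsettled state equals the arrival rate times the time to finality. The sketch below applies it to the figures above, assuming (hypothetically) the same 2,000 purchases per second on both chains, a 400 ms Solana slot time, and a 32-slot finalized depth; the names are illustrative.

```python
SOLANA_SLOT_SECONDS = 0.4      # Solana's target slot time
SOLANA_FINALIZED_DEPTH = 32    # approx. slots behind the tip for "finalized" commitment
VANAR_FINALITY_SECONDS = 1.3   # figure quoted in the article
DROP_RATE_TPS = 2_000          # assumption: purchase rate during a high-demand drop

SOLANA_FINALITY_SECONDS = SOLANA_FINALIZED_DEPTH * SOLANA_SLOT_SECONDS  # about 12.8 s


def in_flight_purchases(arrival_rate_tps: float, finality_seconds: float) -> float:
    """Little's law: average purchases awaiting finality = arrival rate x time to finality."""
    return arrival_rate_tps * finality_seconds


if __name__ == "__main__":
    for chain, wait in [("Vanar", VANAR_FINALITY_SECONDS), ("Solana", SOLANA_FINALITY_SECONDS)]:
        pending = in_flight_purchases(DROP_RATE_TPS, wait)
        print(f"{chain}: {wait:.1f} s to finality, ~{pending:,.0f} purchases unsettled on average")
```

At the same arrival rate, roughly ten times longer finality means roughly ten times as many purchases (about 2,600 versus 25,600) whose inventory a marketplace must hold in limbo, which is where double-sell and arbitrage risk accumulates.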

What Comes Next: Interoperability and the Metaverse OS Thesis

Vanar's 2026 roadmap centers on cross-chain bridges that let assets teleport between metaverse platforms; imagine taking your Decentraland wearable into a Virtua concert. The technical challenge is enormous: standardizing metadata, ensuring provenance, and preventing duplication exploits. Vanar's proposed solution uses ZK-proofs to verify asset authenticity without exposing the underlying smart contract logic, building on the same validity-proof techniques as Polygon's zkEVM but optimized for NFT-heavy workloads. If executed, this positions Vanar as the "metaverse operating system": a neutral layer where platforms compete on experience rather than walled gardens. The strategic risk? Competitors like ImmutableX and Ronin are building similar infrastructure. Vanar's edge lies in its AI-native developer stack, but that advantage erodes if rivals integrate GPT-4 APIs faster.
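The three bridge problems named above (metadata standardization, provenance, duplication) can be shown with a toy lock-and-mint flow. To be clear about the substitution: this sketch uses a plain SHA-256 hash of canonicalized metadata where Vanar's proposal calls for ZK-proofs, so it demonstrates the invariants a bridge must enforce, not the privacy property. `ToyBridge`, `metadata_digest`, and the receipt format are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field


def metadata_digest(metadata: dict) -> str:
    """Canonical hash: sorted keys and fixed separators, so equal metadata hashes equally."""
    canonical = json.dumps(metadata, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass
class ToyBridge:
    """Hypothetical lock-and-mint bridge; a plain hash stands in for a ZK proof."""
    locked: dict[str, str] = field(default_factory=dict)  # asset_id -> digest at lock time
    minted: set[str] = field(default_factory=set)         # asset_ids already minted

    def lock(self, asset_id: str, metadata: dict) -> tuple[str, str]:
        """Lock the asset on the source side and return a receipt (asset_id, digest)."""
        if asset_id in self.locked:
            raise ValueError(f"{asset_id} is already locked")
        digest = metadata_digest(metadata)
        self.locked[asset_id] = digest
        return asset_id, digest

    def mint(self, receipt: tuple[str, str], metadata: dict) -> str:
        """Mint on the destination side only if provenance checks pass and it's the first mint."""
        asset_id, digest = receipt
        if self.locked.get(asset_id) != digest:
            raise ValueError("receipt does not match a locked asset")  # provenance check
        if metadata_digest(metadata) != digest:
            raise ValueError("metadata differs from what was locked")  # tamper check
        if asset_id in self.minted:
            raise ValueError("duplicate mint rejected")                # duplication check
        self.minted.add(asset_id)
        return f"wrapped:{asset_id}"


if __name__ == "__main__":
    bridge = ToyBridge()
    wearable = {"name": "Neon Jacket", "rarity": "legendary", "edition": 7}
    receipt = bridge.lock("wearable-7", wearable)
    print(bridge.mint(receipt, wearable))
```

Canonical serialization is what makes cross-platform metadata comparable at all: two platforms that emit the same fields in a different key order still produce the same digest. A ZK version would replace the digest comparison with a proof that these same checks passed.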

Discussion

Question 1: If carbon-neutral consensus becomes an industry standard, does Vanar lose its differentiation advantage, or does first-mover status in eco-certified metaverse infrastructure create durable network effects that later entrants can't replicate?

Question 2: How should decentralized protocols balance enterprise partnerships (like Google Cloud) that improve reliability against the philosophical risk of reintroducing centralized points of failure—and at what threshold does pragmatic infrastructure become ideological compromise?