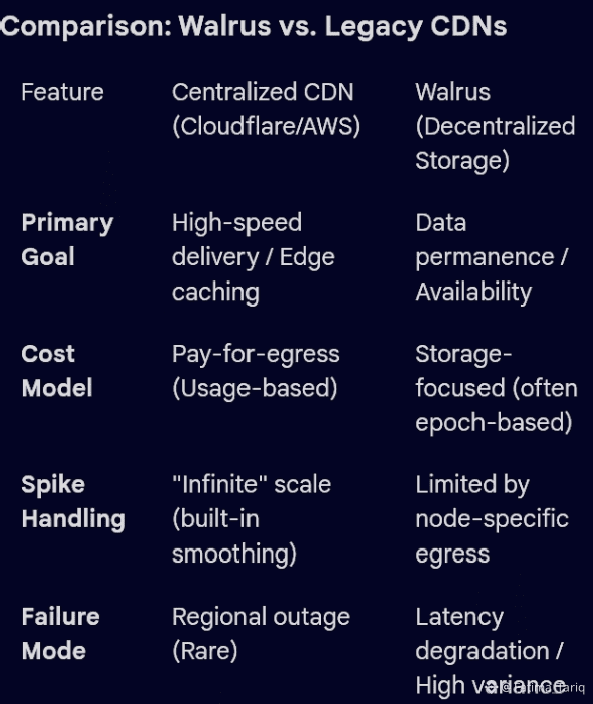

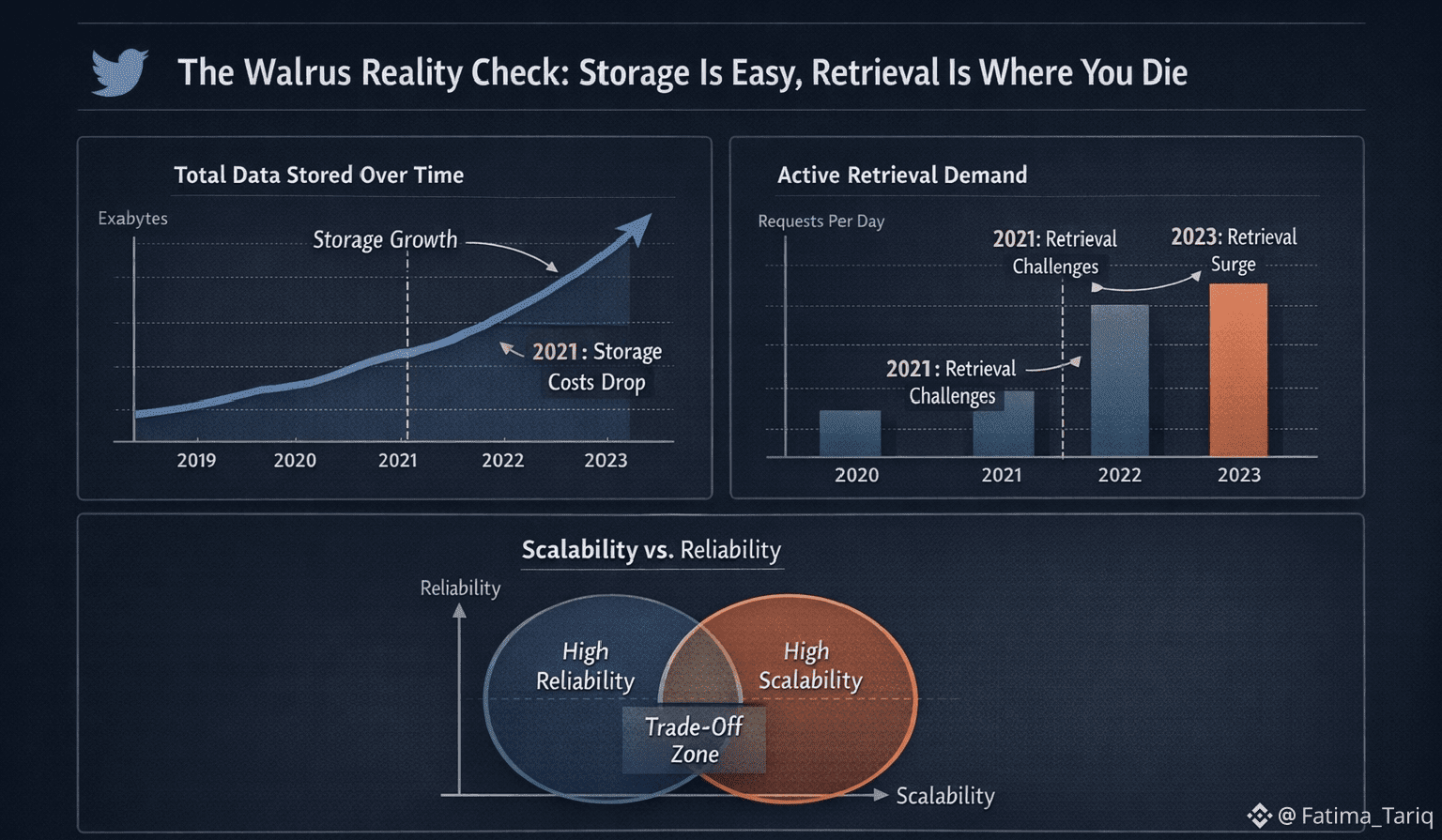

Most teams moving to Walrus or any decentralized storage protocol are operating under a dangerous delusion. They think they’re buying a decentralized Cloudflare. They aren’t. They are buying a decentralized hard drive, and in a viral moment, that distinction becomes a fatal one.We need to stop talking about "Proof of Availability" as if it solves the user experience. It doesn't. Proving a file exists on-chain is a far cry from delivering that file to 50,000 concurrent users during a mint or a breaking news event.

The CDN Hangover

We’ve been spoiled by centralized CDNs. We’ve spent a decade relying on cache smoothing and global edge delivery to hide our architectural sins. When you move to a protocol like Walrus, that safety net is gone.The "network" isn't a magical, infinite resource that absorbs your success. It is a collection of nodes with very real, very physical limits on bandwidth, disk I/O, and egress. If you haven't explicitly designed for how your content gets out, you haven't designed a system; you’ve just built a digital hoarding shed.

The Anatomy of a Crash (It’s Not What You Think)

When decentralized media fails under load, it doesn't look like a database crash or a 404 error. It looks like latency variance.It starts with a few extra hundred milliseconds. Then a second. Then the UI hangs. Because the system is decentralized, users don’t just wait—they refresh. They retry. Each "refresh" is a fresh punch to the throat of the gateway. You end up with a thundering herd problem that turns a "hot" asset into a DoS attack against your own infrastructure.Failures happen in the "pipes," not the proofs. If the node operator isn't incentivized to handle massive egress spikes—and spoiler: most PoA incentives focus on storage, not delivery—then your "viral moment" is just an expensive way to break your dApp.

Who Pays for the Cache?

This is the operational question no one wants to answer: Where does the cache live, and who is footing the bill?

If you’re relying on your app backend to act as a stealth CDN for Walrus, you’ve just re-centralized your "decentralized" app. If you’re relying on client-side caching, you’re gambling on the user’s browser. If you’re just hoping "the network will absorb it," you are relying on infrastructure you haven't actually paid for. That is a strategy based on hope, and hope is not an operational plan.

Designing for the Spike

If you want to survive a "hot" content moment on Walrus, you have to stop thinking like a developer and start thinking like an operator.

Pre-warm your assets: Don't let the first 10,000 users be the ones to discover the latency.

Strict Retry Budgets: If a fetch fails, don't let the client hammer the gateway into oblivion.

Acknowledge the Economics: High demand equals sudden cost. Whether that cost is paid in latency or in gateway fees, someone pays.

Plan for Epoch Churn: Traffic spikes don't care if the network is in the middle of a re-balancing act.

Walrus is a powerful tool, but it is not a magic wand. It forces you to confront the reality of physical hardware and bandwidth limits. If you assume it will behave like a centralized CDN just because it's "the cloud," you are walking into an operational post-mortem that hasn't happened yet.Stop optimizing for "available" and start optimizing for "retrievable." Because at the end of the day, a file that takes 30 seconds to load doesn't exist to the user. #Walrus $WAL @Walrus 🦭/acc #LearnWithFatima