The first time I tried to ship something outside my usual stack, I felt it physically.

Not abstract frustration, but physical friction. Shoulders tightening. Eyes scanning documentation that assumed context I didn’t yet have. Tooling that didn’t behave the way muscle memory expected. CLI outputs that weren’t wrong, just unfamiliar enough to slow every action. When you’ve spent years inside a mature developer environment, friction isn’t just cognitive. It’s embodied. Your hands hesitate before signing a transaction. You reread what you normally skim. You question what you normally trust.

That discomfort is usually framed as a flaw.

But sometimes it’s the architecture revealing its priorities.

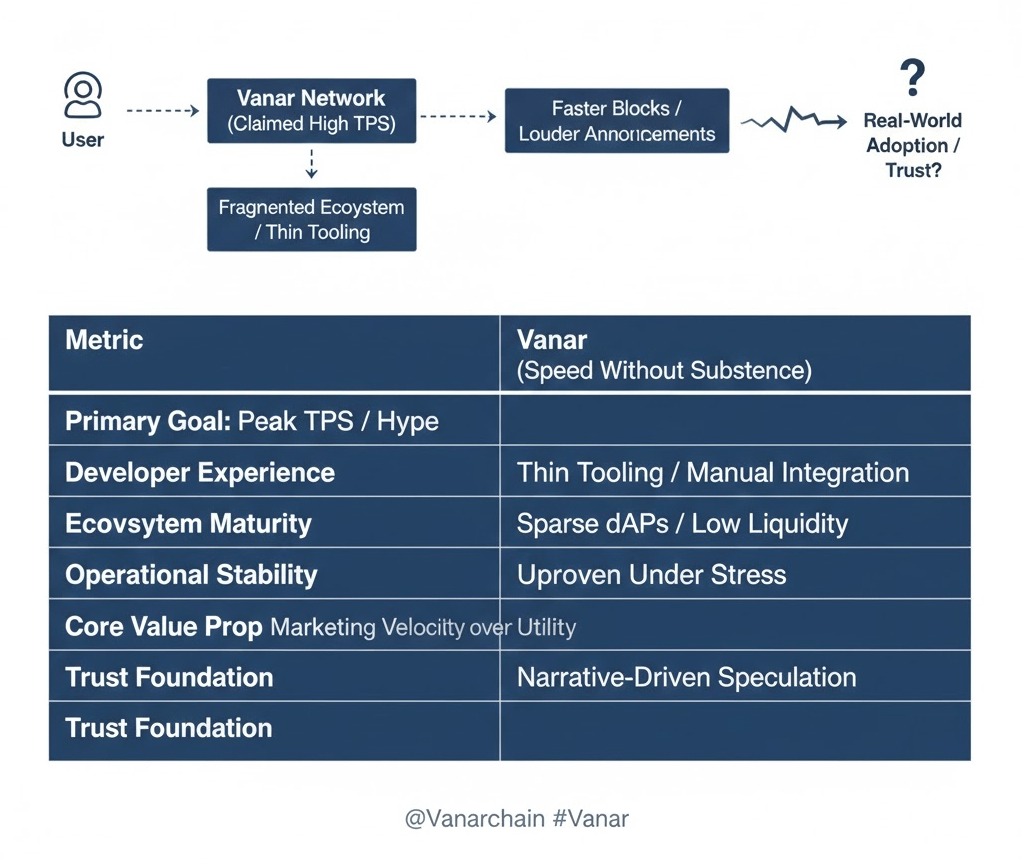

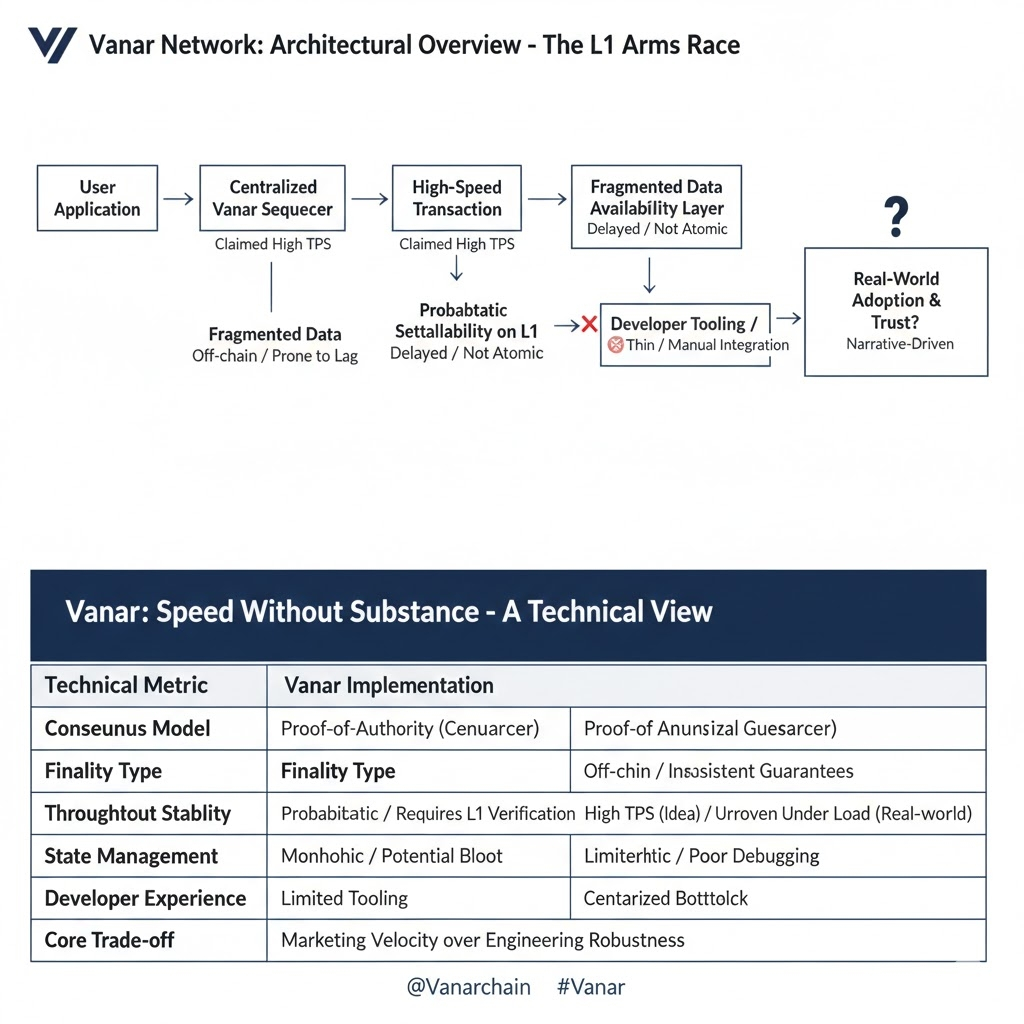

The Layer 1 ecosystem has turned speed into spectacle: throughput dashboards, sub-second finality claims, performance charts engineered for comparison. The implicit belief is simple: more transactions per second equals better infrastructure. But after spending time examining Vanar’s design decisions, I began to suspect that optimizing for speed alone may be the least serious way to build financial-grade systems.

Most chains today optimize across three axes: execution speed, composability, and feature breadth. Their virtual machines are expressive by design. Broad opcode surfaces allow developers to build almost anything. State models are flexible. Contracts mutate storage dynamically. Parallel execution engines chase performance by assuming independence between transactions.

This approach makes sense if your goal is openness.

But openness is expensive.

Expressive virtual machines increase the surface area for unintended behavior. Flexible state handling introduces indexing complexity. Off-chain middleware emerges to reconstruct relational context that the base layer does not preserve. Over time, systems accumulate integration layers to compensate for design generality.

Speed improves, but coherence often declines.

Vanar’s architecture appears to take the opposite path. Instead of maximizing expressiveness, it narrows execution environments. Instead of treating storage as a flat, append-only ledger extension, it emphasizes structured memory. Instead of pushing compliance entirely off-chain, it integrates enforcement logic closer to protocol boundaries.

These are not glamorous decisions.

They are constraining decisions.

In general-purpose chains, virtual machines are built to support an effectively unlimited design space. You can compose arbitrarily. You can structure state however you want. But that flexibility means contracts must manage their own context. Indexers reconstruct meaning externally. Applications rebuild relationships repeatedly.

Vanar leans toward preserving structured context at the protocol level. This reduces arbitrary design freedom but minimizes recomputation. In repeated testing scenarios, especially identity-linked or structured asset flows, I observed narrower variation in gas costs across identical transaction sequences. The system wasn’t faster in raw throughput terms. It was more stable under repetition.
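One way to make a claim like "narrower gas variation under identical sequences" checkable is to replay the same transaction sequence several times and measure the spread of gas used per run. The sketch below is an assumption, not Vanar tooling: `gas_volatility` is a hypothetical helper, and the readings would have to come from whatever transaction receipts your client exposes. It uses the coefficient of variation (standard deviation divided by mean) so that chains with different absolute gas scales can be compared on the same footing.

```python
from statistics import mean, pstdev
from typing import Sequence


def gas_volatility(gas_used: Sequence[float]) -> float:
    """Hypothetical helper: coefficient of variation of gas readings.

    Each reading is the total gas consumed by one replay of the same
    transaction sequence. 0.0 means every replay cost exactly the same;
    larger values mean more spread relative to the average cost.
    """
    if not gas_used:
        raise ValueError("need at least one gas reading")
    avg = mean(gas_used)
    if avg == 0:
        raise ValueError("mean gas is zero; coefficient of variation is undefined")
    # Population standard deviation: we treat the replays as the whole sample.
    return pstdev(gas_used) / avg
```

A lower value only says that replays cost more consistently; it says nothing about raw throughput, which is exactly the distinction drawn between stability and speed.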

That distinction matters.

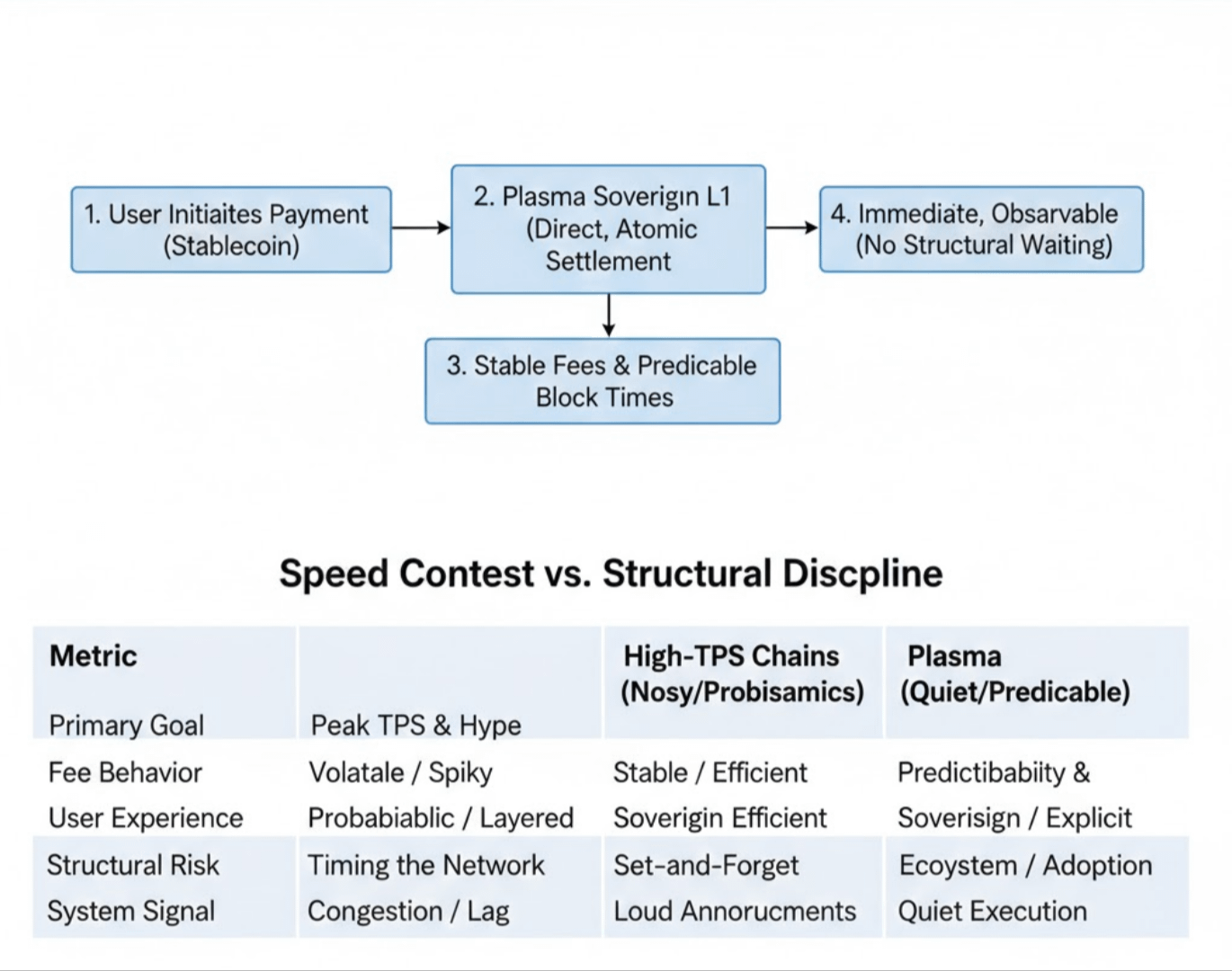

In financial systems, predictability outweighs peak performance.

The proof pipeline follows the same philosophy. Many chains aggressively batch or parallelize to push higher TPS numbers. But aggressive batching introduces complexity in reconciliation under contested states. Vanar appears to prioritize deterministic settlement over peak batch density. The trade-off is obvious: lower headline throughput potential. The benefit is reduced ambiguity in state transitions.

Ambiguity is expensive.

In regulated or asset-backed contexts, reconciliation errors are not abstract inconveniences. They are operational liabilities.

Compliance illustrates the philosophical divide even more clearly. General-purpose chains treat compliance as middleware: identity checks, access gating, and rule enforcement are layered on externally. That works for permissionless environments. It becomes brittle in structured financial systems.

Vanar integrates compliance-aware logic closer to the protocol boundary. This limits composability. It restricts arbitrary behavior. It introduces guardrails that some developers will find frustrating.

But guardrails reduce surface area for systemic failure.

Specialization always looks restrictive from the outside. Developers accustomed to expressive, general-purpose environments may interpret constraint as weakness. Tooling feels thinner. Ecosystem support feels narrower. There is less hand-holding. Less hype-driven onboarding.

The ecosystem is smaller. That is real. Documentation can feel dense. Community assistance may not be as abundant as in older networks.

There is even a degree of developer hostility in such environments, not overt but implicit. If you want frictionless experimentation, you may feel out of place.

Yet that friction acts as a filter.

Teams that remain are usually solving problems that require structure, not novelty. They are building within constraint because their use cases demand it. The system selects for seriousness rather than scale.

When I stress-tested repeated state transitions across fixed-structure contracts, I noticed something subtle: behavior remained consistent under load. Transaction ordering did not introduce unpredictable side effects. Memory references did not require excessive off-chain reconstruction. The performance curve wasn’t dramatic. It was flat.

Flat is underrated.

The arms race rewards spikes. Benchmarks. Peak graphs.

Production rewards consistency.

Comparing philosophies clarifies the landscape. Ethereum maximized expressiveness and composability. Solana maximized throughput and parallelization. Modular stacks maximize separation of concerns.

Vanar appears to maximize structural coherence within specialized contexts.

General-purpose systems assume diversity of use cases. Vanar assumes structured, memory-dependent, potentially regulated use cases. That assumption changes design decisions at every layer: VM scope, state handling, proof determinism, compliance boundaries.

None of these approaches are universally superior.

But they solve different problems.

The industry’s fixation on speed conflates performance with seriousness. Yet speed without coherent state management produces fragility. High TPS does not solve indexing drift. It does not eliminate middleware dependence. It does not simplify reconciliation under regulatory scrutiny.

In financial-grade systems, precision outweighs velocity.

The physical friction I felt at the beginning (the unfamiliar tooling, the dense documentation, the constraint embedded in the architecture) now reads differently to me. It wasn’t accidental inefficiency. It was the byproduct of refusing to optimize for applause.

Difficulty narrows participation. Constraint reduces experimentation surface. Specialization excludes some builders.

But systems built for longevity are rarely optimized for comfort.

The chains that dominate headlines are those that promise speed, openness, and infinite possibility. The chains that survive regulatory integration, memory-intensive applications, and long-duration state evolution are often those that prioritize constraint and coherence.

Vanar does not feel designed for popularity.

It feels designed for environments where ambiguity is costly and memory matters.

In the Layer 1 arms race, speed wins attention.

But substance, even when it is slower, stricter, and occasionally uncomfortable, is what sustains infrastructure over time.