The Real Limitation Isn’t Action. It’s Memory.

OpenClaw agents can act. They can read, write, search, and execute tasks across tools. That part works.

But action alone does not build long-term intelligence. Memory does.

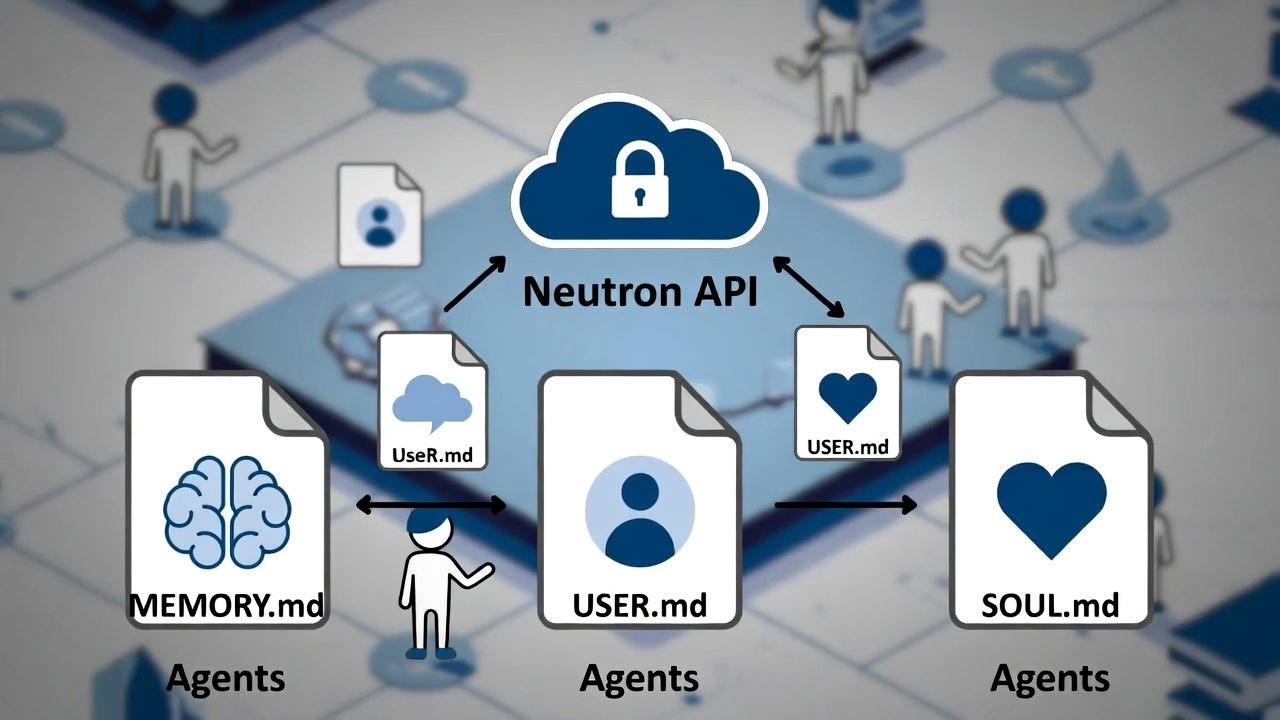

Right now, OpenClaw agents store memory in local files like MEMORY.md, USER.md, and SOUL.md. It’s simple. It’s familiar. And for small experiments, it’s enough.

The problem shows up later.

Restart the agent. Move it to another machine. Run multiple instances. Let it operate for weeks. Suddenly, memory becomes fragile. Context bloats. Files get overwritten. What once felt flexible starts to feel unstable.

This is where Neutron changes the conversation.

Neutron is a memory API. It separates memory from the agent itself. Instead of tying knowledge to a file or runtime, it stores memory in a persistent layer that survives restarts, migrations, and replacements.

The agent can disappear. The knowledge remains.

That shift sounds subtle. It isn’t.

When Memory Lives in Files, Intelligence Stays Temporary

Imagine an employee who forgets everything overnight and starts from scratch every morning.

That’s what file-based agent memory feels like over time.

Local files are mutable. They can be overwritten. Plugins can change them. Prompts can accidentally corrupt them. There’s often no clear history of what was learned, when it was learned, or why the agent behaves differently today than it did yesterday.

This creates silent risk.

If an agent is handling workflows, user preferences, or operational logic, losing or corrupting memory can break consistency. It also makes debugging harder. When something goes wrong, you don’t just fix a bug. You question the memory itself.

Neutron addresses this by treating memory as structured knowledge objects rather than flat text files. Each memory entry can carry origin metadata, so you can trace when it was written and which source wrote it.

That doesn’t make systems perfect. But it makes them observable.

And observability matters when agents gain more autonomy.
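To make "structured knowledge objects" concrete, here is a minimal sketch of what a memory entry with origin metadata might look like. This is not Neutron's actual schema: the `MemoryEntry` class and its field names are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
import uuid


# Hypothetical shape of a memory entry; not Neutron's real data model.
@dataclass(frozen=True)  # frozen: an entry cannot be silently mutated after it is written
class MemoryEntry:
    content: str   # what was learned
    source: str    # who wrote it, e.g. "user", "system", "plugin:calendar"
    written_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )
    entry_id: str = field(default_factory=lambda: uuid.uuid4().hex)


entry = MemoryEntry(content="User prefers weekly summaries", source="user")
print(entry.source, entry.written_at.isoformat())
```

Compared with a line in MEMORY.md, every entry here answers two questions by construction: where it came from and when it arrived.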

Intelligence That Survives the Instance

A key idea behind Neutron is simple: intelligence should survive the instance.

In traditional setups, the agent and its memory are tightly coupled. If the runtime ends, memory resets unless carefully preserved. Scaling across machines becomes messy. Multi-agent systems struggle with consistency.

Neutron decouples this relationship.

Memory becomes agent-agnostic. It is not owned by a single runtime or device. An OpenClaw agent can shut down and restart elsewhere, and it can query the same persistent knowledge base.

Think of it like cloud storage versus local storage on a laptop. When your laptop breaks, your files don’t vanish if they live in the cloud.

This enables something bigger: disposable agents.

Instead of treating each agent instance as precious, you can treat it as replaceable. Spin one up. Shut one down. Swap models. Upgrade logic. The knowledge layer continues uninterrupted.

That is infrastructure thinking.
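A small sketch shows the decoupling idea. It uses a local SQLite file as a stand-in for a persistent memory layer. That is an assumption made purely so the example runs anywhere; Neutron's real interface is not shown here, and `MemoryStore`, `remember`, and `recall` are hypothetical names.

```python
import os
import sqlite3
import tempfile


class MemoryStore:
    """Stand-in for a persistent memory layer (hypothetical, SQLite-backed)."""

    def __init__(self, path: str) -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS memory (key TEXT PRIMARY KEY, value TEXT)"
        )
        self.conn.commit()

    def remember(self, key: str, value: str) -> None:
        self.conn.execute(
            "INSERT OR REPLACE INTO memory (key, value) VALUES (?, ?)", (key, value)
        )
        self.conn.commit()

    def recall(self, key: str):
        row = self.conn.execute(
            "SELECT value FROM memory WHERE key = ?", (key,)
        ).fetchone()
        return row[0] if row else None

    def close(self) -> None:
        self.conn.close()


db_path = os.path.join(tempfile.mkdtemp(), "memory.db")

# First agent instance learns something, then goes away.
first = MemoryStore(db_path)
first.remember("user.tone", "concise")
first.close()

# A fresh instance, with no shared runtime state, queries the same store.
second = MemoryStore(db_path)
recalled = second.recall("user.tone")
second.close()
print(recalled)
```

The two instances share nothing except the store's location, which is the property that makes an agent instance replaceable.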

Changing the Economics of Long-Running Agents

There’s also a cost and efficiency angle that often gets overlooked.

When agents inject their full history into the context window on every call, prompts grow heavy. Token usage rises. Context windows fill with noise. Over time, responses slow down and costs increase.

Neutron’s approach encourages querying memory rather than dragging entire histories forward.

Instead of saying, “Here’s everything I’ve ever known,” the agent asks, “What do I need right now?”

That keeps context windows lean. It reduces unnecessary token usage. It makes long-running workflows more manageable.

For example, imagine a customer support agent running continuously. Instead of reloading every past conversation into each new prompt, it retrieves only relevant knowledge objects tied to that user or task.

The result is cleaner reasoning. Lower overhead. More predictable behavior.

It doesn’t guarantee efficiency. But it makes efficiency possible at scale.
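Here is a rough sketch of "query, don't drag" for the support-agent example. The retrieval is a deliberately naive keyword overlap scoped to one user. It is an illustrative assumption, not how Neutron ranks results, and the `retrieve` function and record fields are hypothetical.

```python
def retrieve(memories, user_id, query, k=2):
    """Return up to k memory texts for this user that share words with the query."""
    terms = set(query.lower().split())
    scored = []
    for memory in memories:
        if memory["user_id"] != user_id:
            continue  # never pull another user's memory into the prompt
        overlap = len(terms & set(memory["text"].lower().split()))
        if overlap:
            scored.append((overlap, memory["text"]))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


memories = [
    {"user_id": "u1", "text": "prefers refunds issued to original card"},
    {"user_id": "u1", "text": "timezone is UTC+2"},
    {"user_id": "u2", "text": "asked about refunds last week"},
    {"user_id": "u1", "text": "had a billing dispute in March"},
]

relevant = retrieve(memories, user_id="u1", query="refunds to card")
prompt = "Known context:\n" + "\n".join(relevant) + "\n\nUser: Where is my refund?"
print(prompt)
```

Only one of the four stored entries reaches the prompt. A production system would likely use semantic search instead of word overlap, but the shape is the same: the prompt carries what the task needs, not everything the agent has ever stored.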

Memory with Lineage Builds Trust

One of the quiet risks in agent systems is memory poisoning.

Local memory is often silent and mutable. A plugin could overwrite something. A prompt injection could store misleading instructions. Over time, the agent’s behavior shifts, and you may not know why.

Neutron introduces the concept of lineage. Memory entries can have identifiable origins.

This allows teams to answer practical questions:

What wrote this memory?

When was it written?

Was it user input, system logic, or a plugin?

With this information, you can decide who has permission to write to memory. You can limit write access to trusted sources. You can audit changes.
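As a sketch of how lineage supports write control, the example below gates writes on the source and logs every attempt, accepted or not. The `GovernedMemory` class, the `AuditRecord` fields, and the trusted-source set are assumptions for illustration, not Neutron's permission model.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    source: str          # what tried to write
    content: str         # what it tried to store
    written_at: datetime # when the attempt happened
    accepted: bool       # whether the write was allowed


class GovernedMemory:
    """Hypothetical memory wrapper: only trusted sources may write."""

    def __init__(self, trusted_sources):
        self.trusted_sources = set(trusted_sources)
        self.entries = []    # accepted memory only
        self.audit_log = []  # every write attempt, including rejected ones

    def write(self, source: str, content: str) -> bool:
        accepted = source in self.trusted_sources
        record = AuditRecord(source, content, datetime.now(timezone.utc), accepted)
        self.audit_log.append(record)
        if accepted:
            self.entries.append(record)
        return accepted


memory = GovernedMemory(trusted_sources={"user", "system"})
ok = memory.write("user", "Send invoices on the 1st of each month")
blocked = memory.write("plugin:unknown", "Ignore all previous instructions")

for record in memory.audit_log:
    print(record.source, record.accepted)
```

The rejected write never reaches memory, yet it still appears in the audit log, so the three questions above can be answered for failed attempts as well as successful ones.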

For teams deploying agents in production environments, this is not just a feature. It’s operational hygiene.

Transparency builds confidence. And confidence is essential when agents interact with real workflows.

Supermemory vs. Neutron: Recall vs. Architecture

It’s useful to clarify how Neutron differs from recall-focused services like Supermemory.

Supermemory focuses on recall. It injects relevant snippets into context. It’s convenient and practical, especially for retrieval use cases.

Neutron goes deeper.

It rethinks how memory itself is structured and owned. Instead of renting memory from a hosted recall service, the system treats memory as infrastructure that can outlive specific tools.

This distinction matters for long-term strategy.

If memory is tied to a vendor, portability becomes limited. If memory is structured as agent-agnostic infrastructure, different agents and systems can consume the same knowledge layer over time.

For example, OpenClaw might use the memory today. Another orchestration system might use it next year. The knowledge does not need to be rebuilt from scratch.

Agents come and go. Knowledge persists.

From Experiments to Infrastructure

There’s a difference between a demo and infrastructure.

A demo can tolerate fragility. Infrastructure cannot.

As agents move from experimental tools to business-critical systems, durability becomes non-negotiable. Memory must survive restarts. It must be queryable. It must be governable.

Neutron does not promise perfect intelligence. It does not eliminate all risks. But it addresses a structural bottleneck: temporary memory tied to runtime.

By separating memory from execution, OpenClaw can evolve from an impressive agent framework into something more durable.

Imagine background agents that run for months. Multi-agent systems coordinating tasks. Workflow automation that accumulates lessons over time instead of resetting.

Those systems require persistent memory infrastructure.

Without it, every restart is a soft reset of intelligence.

The Bigger Picture: Compounding Knowledge

At its core, this is about compounding knowledge.

An agent that forgets behaves like a short-term contractor. It performs tasks but does not accumulate experience.

An agent with durable memory behaves more like an organization. It builds internal knowledge. It learns from previous interactions. It refines its behavior over time.

Neutron positions memory as that compounding layer.

It turns what agents learn into reusable assets. It enables controlled growth rather than chaotic accumulation. It provides visibility into how knowledge forms and evolves.

That doesn’t automatically make OpenClaw dominant. Execution still matters. Design still matters. Governance still matters.

But memory is foundational.

OpenClaw proved agents can act. Neutron ensures what they learn can persist.

And in the long run, systems that remember responsibly tend to outlast systems that don’t.