@Vanarchain I ended up in a hotel lobby at 7:10 a.m., waiting on my first coffee and scrolling through a demo link someone swore was “the future.” My laptop sat on a beat-up table, and the agent looked great until it met actual customer data and started throwing errors and nonsense in the same breath. I shut the lid for a second and wondered: how do we separate proof from confidence?

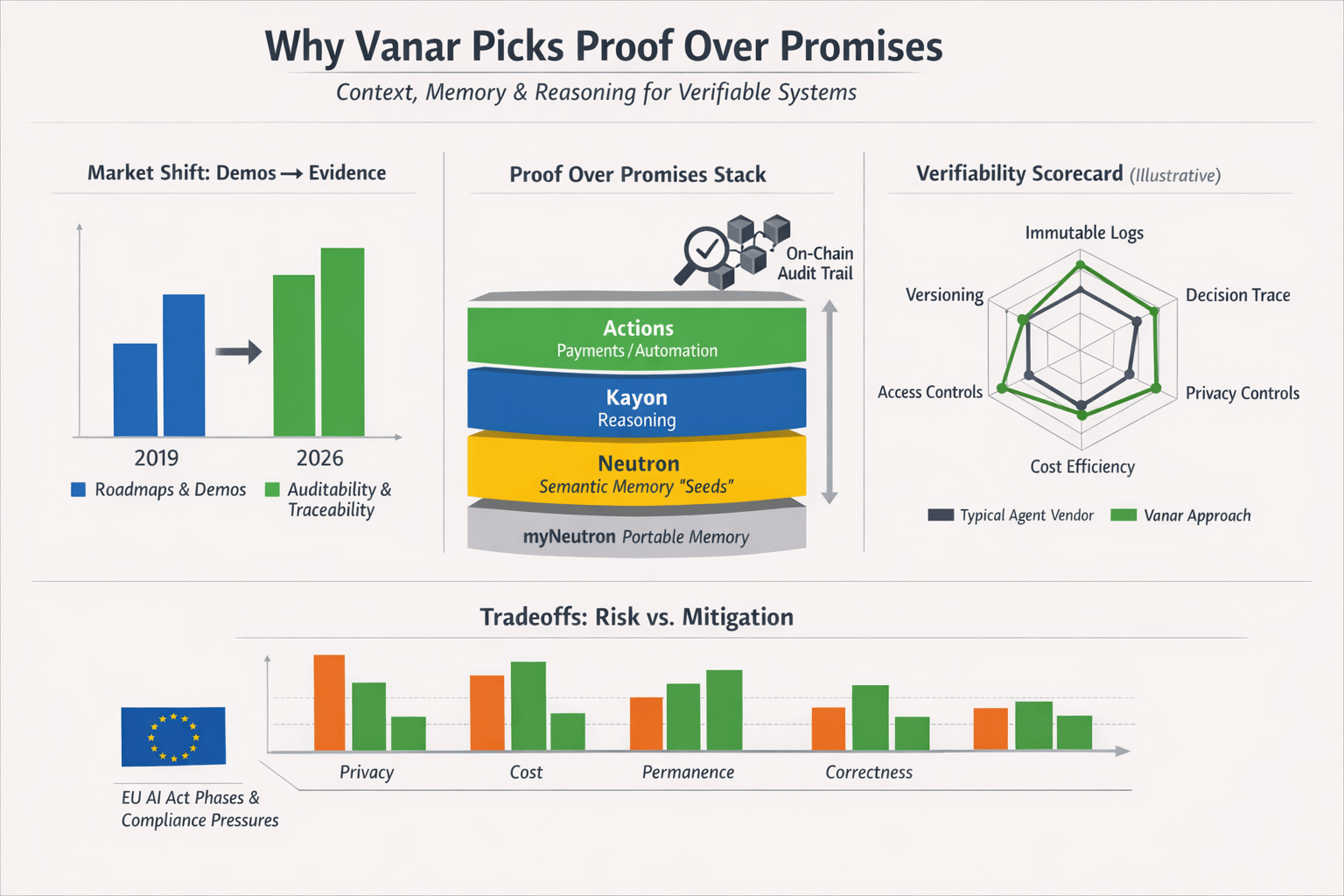

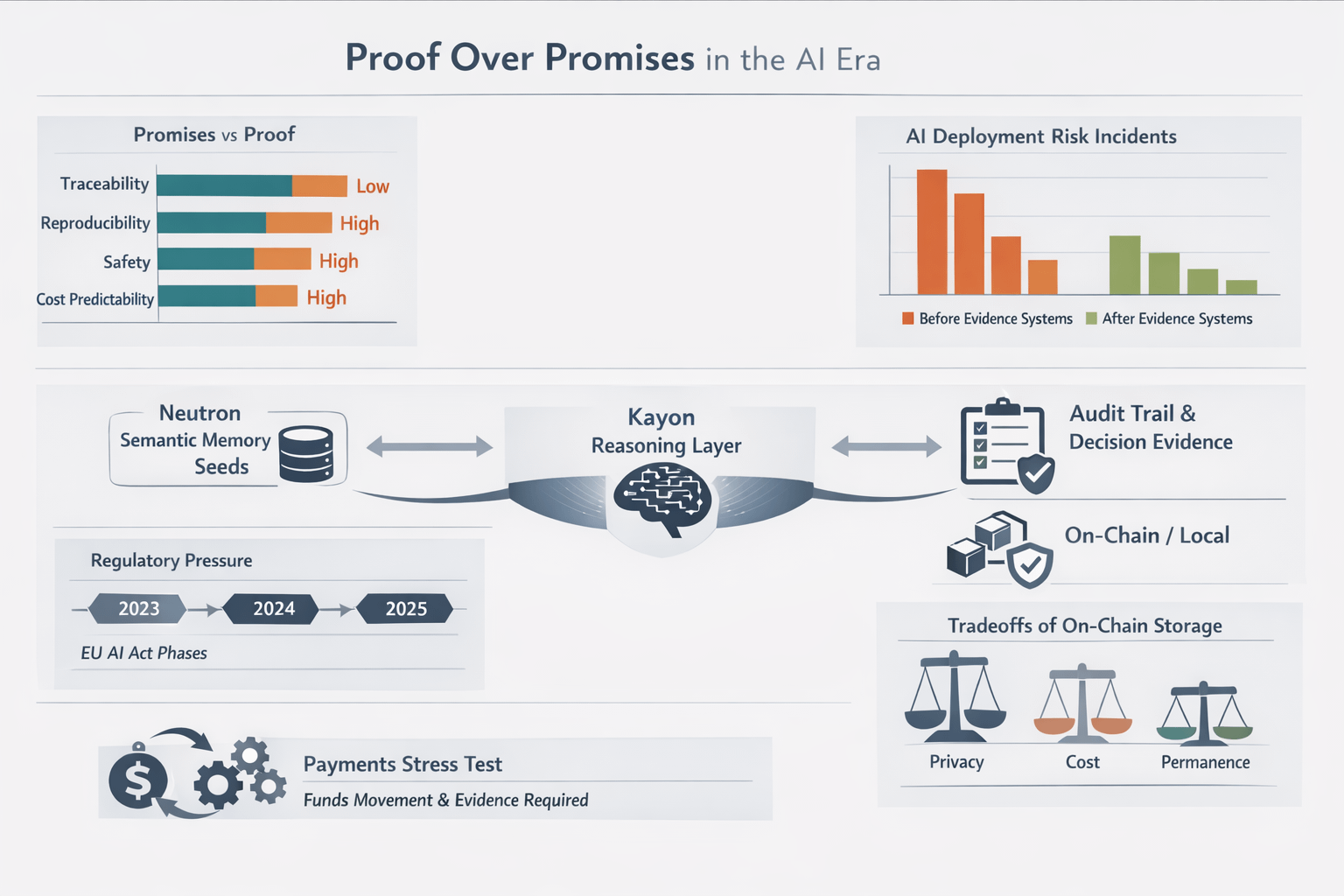

That question is why proof is such a live topic right now. AI has slipped into normal work, and the standards are changing. People used to tolerate bold claims because the tech felt early. Now it’s being deployed quickly, and the cracks show up fast: budget surprises, safety gaps, weird corner cases nobody planned for. The mood has shifted. The hunger isn’t for more demos. It’s for evidence that holds up after the applause.

It’s changed how I listen. I’m less impressed by roadmaps and more interested in systems that can be checked. I’ve also seen enough “agent washing” to be skeptical when a vendor calls simple automation an agent. If everyone is selling autonomy, the differentiator becomes plain: can you show what happened, and can someone else verify it without trusting your story?

Regulation is part of why this is getting sharper. The EU’s AI Act is rolling out in phases, and even teams outside Europe are adjusting because customers and partners are asking for traceability and oversight. In practice, that means audit trails, not explanations after the fact.

This is the frame I use when I look at Vanar’s “proof over promises” posture. Vanar presents itself as an AI-native blockchain stack and talks openly about memory and reasoning as core layers. Neutron is positioned as semantic memory. Kayon is described as a reasoning layer that can use that memory. I don’t care about the branding. I care about the stance: keep context and records durable enough to inspect later.

That matters because the industry often outsources “truth” to off-chain storage and logs that weren’t designed for disputes. It works until it doesn’t. Then the questions are predictable: which document version, which inputs, which rules, whose change, and when? Vanar’s Neutron material focuses on compressing and restructuring information into “Seeds” that are meant to stay programmable and verifiable. Even if that approach isn’t right for every workload, the intent is clear: make evidence part of the system, not an add-on.

The myNeutron idea aims at a more human problem: context that disappears when you switch tools, devices, or teams. Vanar describes it as portable memory that can be anchored on-chain or kept local. That flexibility matters, because persistent memory can help, but unmanaged memory can also hurt.

Kayon is where the “proof” argument becomes practical. If a reasoning layer can pull supporting material, connect it to a decision, and leave a legible trail, the burden of proof shifts. Instead of “trust the agent,” a team can point to what it referenced and why it acted.

None of this is effortless. Putting more truth on-chain raises tradeoffs—privacy, cost, and the fact that permanent storage makes mistakes harder to undo. On-chain records don’t make reasoning correct. They can make reasoning reviewable, and reviewability is what I see organizations asking for as pilots turn into production.

Payments are a useful stress test, which is one reason Vanar’s talk about agentic payments and settlement stands out. If an automated flow moves funds, it should also carry evidence for why it moved them, so disputes don’t devolve into guesswork.

When I step back, differentiation in the AI era looks narrower than people want it to be. It’s not who has the boldest agent. It’s who can show their work when it matters. Vanar’s bet is that verifiability is the product. I’m cautious about any promise, including that one, but I’ll keep watching the builders who try to make trust testable.

@Vanarchain #vanar $VANRY #Vanar