Spotlight Edition #13: Two Projects Catching My Attention

Most traders never stop to ask how a price actually exists.

It just shows up. You refresh a chart, a figure appears, you place a trade. End of thought. But that figure isn’t magic. It’s the final output of data feeds, economic incentives, intermediaries, verification layers, cryptographic checks, and human assumptions all stitched together. If any link breaks, damage spreads fast. Bad data triggers forced sells. Lag creates easy prey. Corruption turns price discovery into theater.

That’s why this week’s focus isn’t hype cycles or shiny sectors.

It’s the plumbing underneath markets. The stuff nobody praises when it works, and everybody blames when it doesn’t.

We’ll begin with the dependable foundation, then shift to the higher-risk upside play.

Focus Asset: Pyth Network (PYTH)

At its core, Pyth is addressing a problem that predates blockchain entirely.

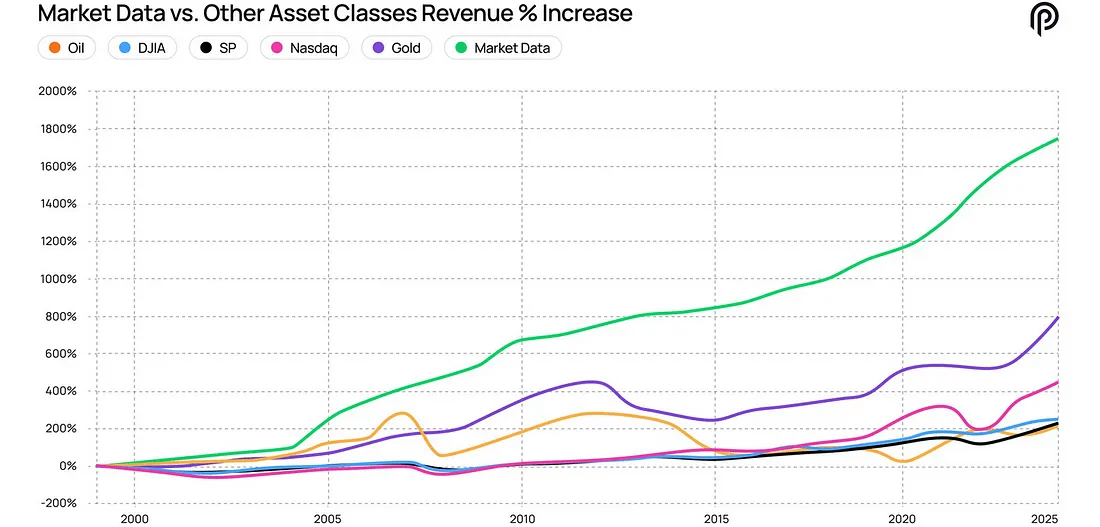

Market data is a massive business, roughly a $50 billion ecosystem built on redistribution. Trades happen on exchanges. Data providers collect them. Aggregators reshape them. Distributors package the result. Institutions then pay multiple tolls to access information that originated upstream.

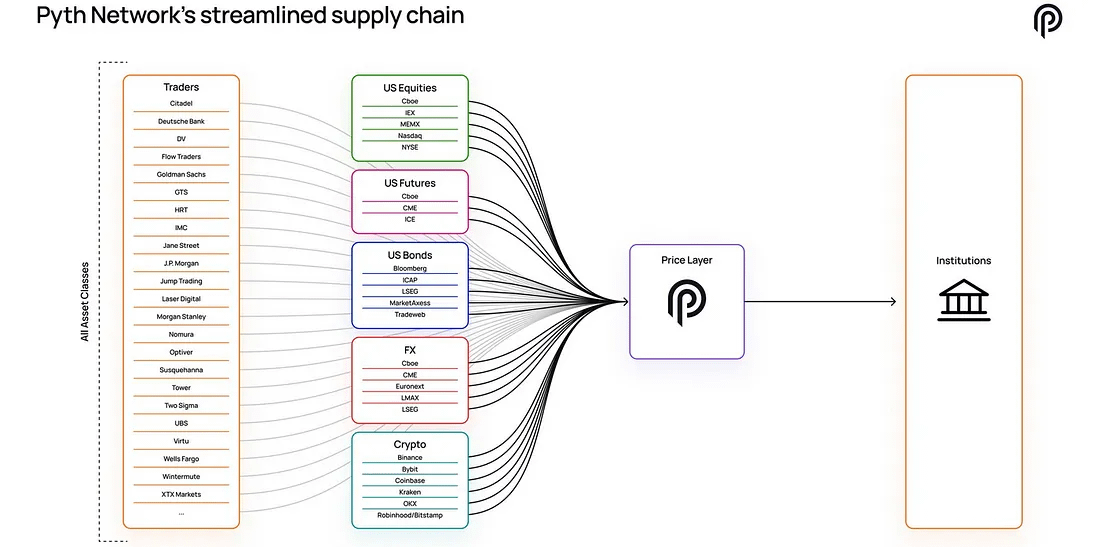

Pyth bypasses that structure.

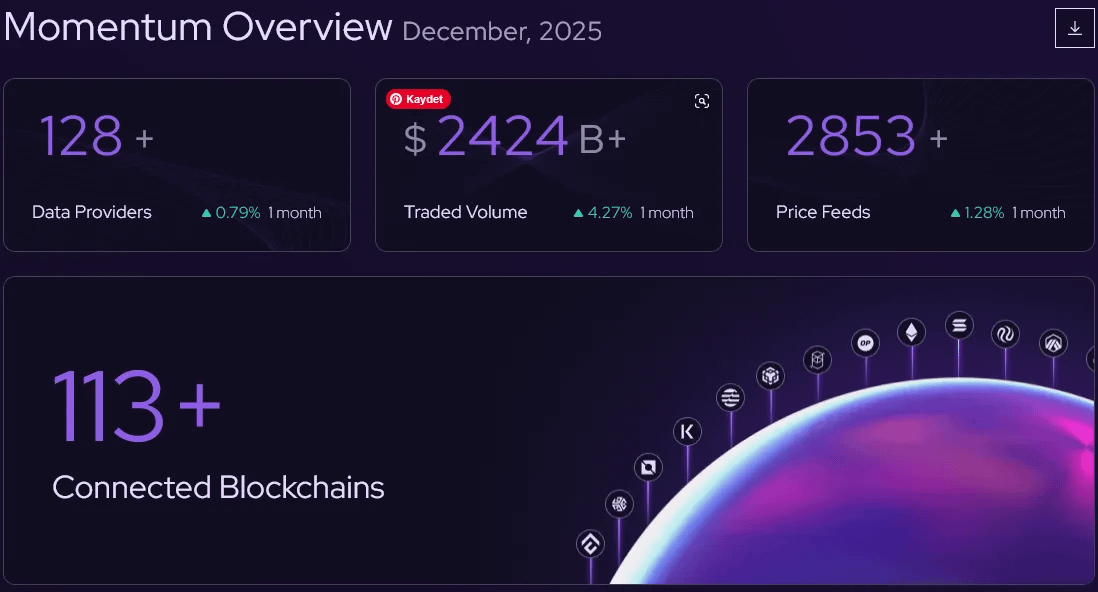

Rather than purchasing data from middlemen, it pulls prices straight from the source. Trading firms, exchanges, and market makers publish data directly into the network. There are more than 128 contributors, including firms like Jane Street, Cboe, and Binance. These inputs are combined inside Pyth, verified through cryptographic signatures, and then delivered to over 100 blockchains in near real time.
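To make that flow concrete, here is a minimal sketch of the "verify, then combine" step. It is an illustration under loud assumptions, not Pyth's actual implementation: the publisher names and keys are hypothetical, HMAC with a shared secret stands in for real public-key signatures, and a plain median stands in for whatever aggregation rule the network really uses.

```python
import hashlib
import hmac
from statistics import median

# Hypothetical publishers and keys, for illustration only.
PUBLISHER_KEYS = {
    "publisher_a": b"key-a",
    "publisher_b": b"key-b",
    "publisher_c": b"key-c",
}


def sign(publisher: str, symbol: str, price: float) -> str:
    """Stand-in signature: HMAC-SHA256 over 'symbol:price'."""
    message = f"{symbol}:{price}".encode()
    return hmac.new(PUBLISHER_KEYS[publisher], message, hashlib.sha256).hexdigest()


def verify(publisher: str, symbol: str, price: float, signature: str) -> bool:
    """Accept a submission only if its signature matches the publisher's key."""
    key = PUBLISHER_KEYS.get(publisher)
    if key is None:
        return False
    message = f"{symbol}:{price}".encode()
    expected = hmac.new(key, message, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signature)


def aggregate(symbol: str, submissions):
    """Median of the prices whose signatures verify; None if none do."""
    valid = [
        price
        for publisher, price, signature in submissions
        if verify(publisher, symbol, price, signature)
    ]
    return median(valid) if valid else None


submissions = [
    ("publisher_a", 100.0, sign("publisher_a", "EXAMPLE/USD", 100.0)),
    ("publisher_b", 101.0, sign("publisher_b", "EXAMPLE/USD", 101.0)),
    ("publisher_c", 250.0, "forged-signature"),  # fails verification, dropped
]
aggregated = aggregate("EXAMPLE/USD", submissions)
print(aggregated)  # 100.5
```

The point of the sketch is the ordering: a bad or forged input is discarded before it can move the combined price.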

That approach reshapes two fundamentals at the same time: who pays, and who decides.

Today, Pyth delivers more than 2,800 live price feeds spanning crypto, stocks, foreign exchange, commodities, and even macro data points. Stocks alone account for close to 60 percent of active feeds, which makes one thing clear. This has moved beyond being just a DeFi oracle. It is positioning itself as a broader financial data layer.

And this is not theoretical adoption.

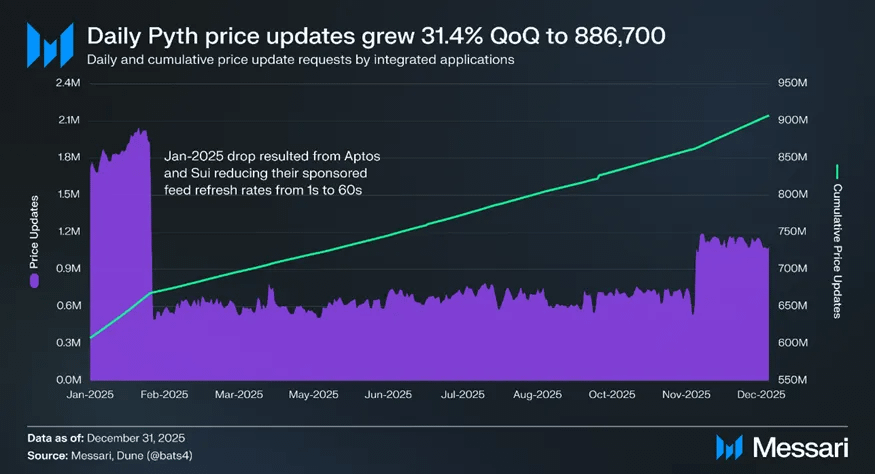

In Q4, the network handled roughly 886,700 price updates per day, representing more than 31 percent growth compared to the previous quarter. Total updates have now crossed 900 million. That level of activity signals sustained usage, with real applications continuously pulling verified prices onchain rather than infrastructure sitting idle.

The market still tends to fixate on TVS, or total value secured, even though it dipped in Q4 along with the broader downturn. Pyth’s TVS declined from $6.2 billion to $4.2 billion, which at first glance can read like shrinking relevance.

The problem is that TVS does not reveal who is actually relying on the oracle. Transaction activity does.
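The two Q4 numbers quoted above can be put side by side with some quick arithmetic. This is only a sketch using the figures in this post; the 31 percent growth rate is treated as exact, although the text says "more than 31 percent", so the implied prior-quarter rate is an upper bound.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


# TVS in billions of dollars, as quoted above.
tvs_change = pct_change(6.2, 4.2)

# Daily price updates in Q4 and the quoted quarter-over-quarter growth.
daily_updates_q4 = 886_700
growth = 0.31  # "more than 31 percent", treated as exactly 31 percent here
implied_prior_daily = daily_updates_q4 / (1 + growth)

print(f"TVS change: {tvs_change:.1f}%")
print(f"Implied prior-quarter daily updates: {implied_prior_daily:,.0f}")
```

So TVS fell by roughly a third while daily updates rose by roughly a third, which is why the two metrics tell opposite stories.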

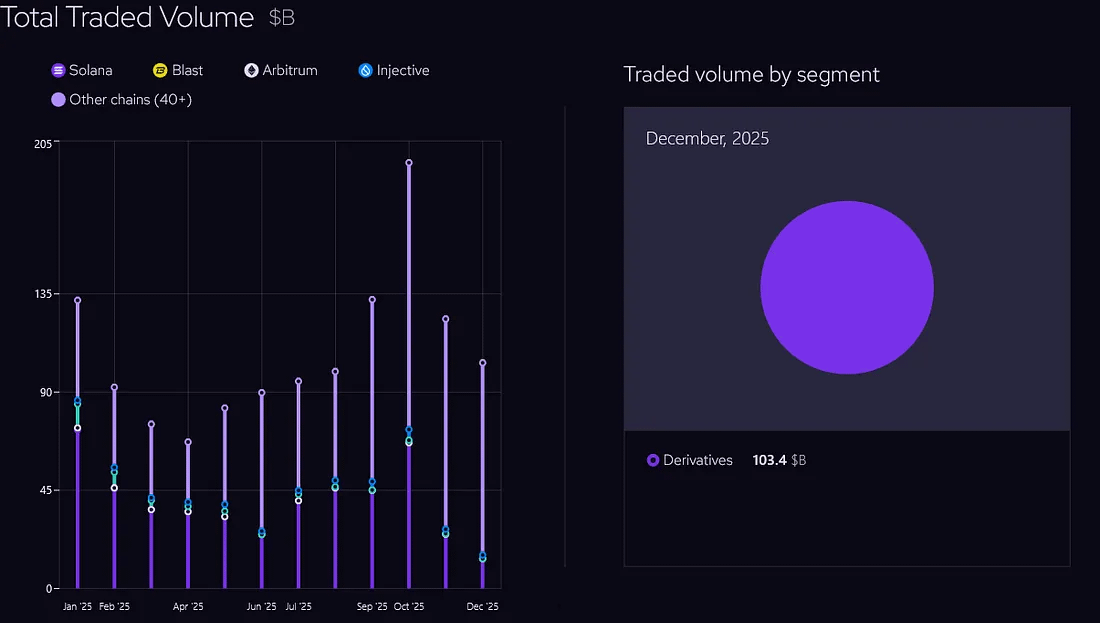

By total traded volume (TTV), Pyth supports close to 60 percent of DeFi derivatives markets, with monthly TTV often exceeding $100 billion throughout 2025. Derivatives trading cannot function with delayed or inaccurate prices. When data breaks, protocols take losses immediately. Capturing that share of volume is not a coincidence.

The institutional shift

The most notable change is not happening within DeFi itself.

It is happening with Pyth Pro.

Released in September, the product delivers millisecond-level updates across more than 2,800 data feeds, priced at $10,000 per month for institutional clients. Within its first month, it surpassed $1 million in annual recurring revenue. In Q4 alone, revenue reached $352,600, and the year closed with 54 paying subscribers.
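For a sense of scale, the subscription numbers can be run through some back-of-the-envelope math. This sketch assumes every subscriber pays the $10,000 list price and that the Q4 revenue figure is simply multiplied by four to annualize it. Neither assumption is confirmed here, so treat the outputs as rough bounds rather than reported figures.

```python
import math

LIST_PRICE_PER_MONTH = 10_000
ANNUAL_PER_SUBSCRIBER = LIST_PRICE_PER_MONTH * 12  # 120,000 per year

# Subscribers needed at list price to pass $1 million in ARR.
subs_for_1m_arr = math.ceil(1_000_000 / ANNUAL_PER_SUBSCRIBER)

# Run rate if all 54 year-end subscribers paid list price (an assumption).
arr_if_all_at_list = 54 * ANNUAL_PER_SUBSCRIBER

# Q4 revenue annualized by simple multiplication.
q4_annualized = 352_600 * 4

print(subs_for_1m_arr)      # 9
print(arr_if_all_at_list)   # 6480000
print(q4_annualized)        # 1410400
```

The gap between the list-price run rate and annualized Q4 revenue suggests the simple assumption does not hold across the quarter, for example because subscribers onboarded at different times or on different terms. The figures here do not say which.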

But the significance goes beyond subscription income.

What matters is that firms that originally contributed data to Pyth began subscribing to consume that same data. Publishers turned into customers.

That reverses the traditional data pipeline. Instead of crypto protocols sourcing prices from legacy financial data providers, institutions are now considering blockchain-native distribution as a viable alternative to bundled, legacy terminals.

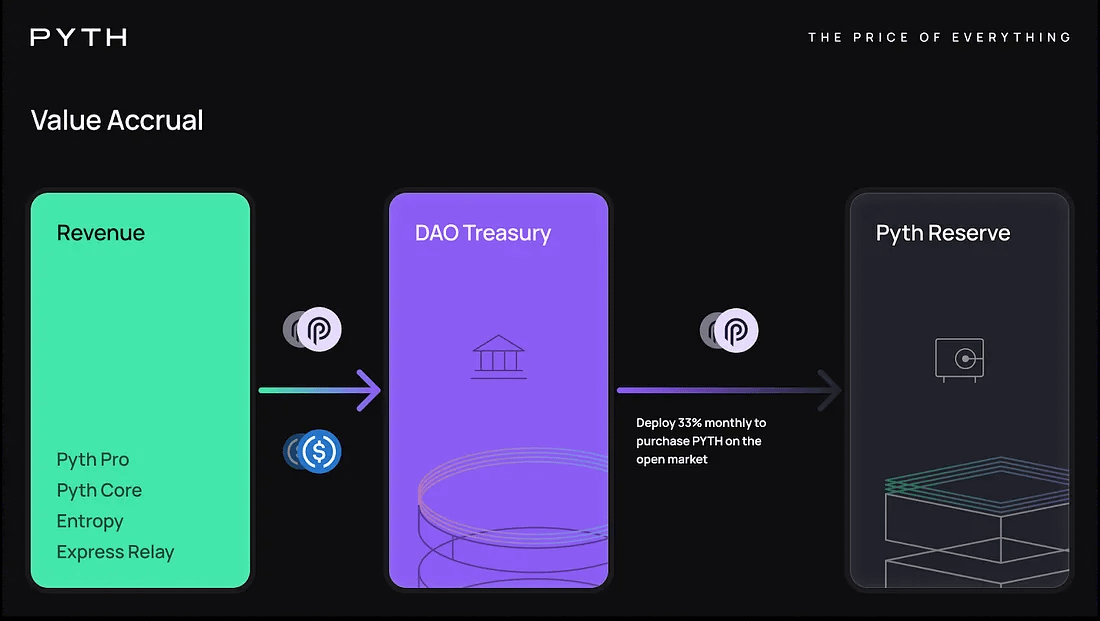

Then the Reserve entered the picture.

In December, the DAO authorized the PYTH Reserve, allocating 33 percent of monthly protocol revenue to repurchase PYTH directly from the open market. The first buyback took place in January, totaling roughly 2.16 million PYTH.
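The mechanism is simple enough to sketch. The 33 percent share comes from the Reserve described above; the revenue and price inputs below are hypothetical placeholders, not reported figures, and the function name is my own.

```python
RESERVE_SHARE_PERCENT = 33  # share of monthly protocol revenue, per the DAO vote


def buyback(monthly_revenue_usd: float, pyth_price_usd: float):
    """Return (budget in USD, PYTH purchased) for one month's buyback."""
    budget = monthly_revenue_usd * RESERVE_SHARE_PERCENT / 100
    tokens = budget / pyth_price_usd
    return budget, tokens


# Hypothetical inputs, for illustration only.
budget, tokens = buyback(monthly_revenue_usd=100_000, pyth_price_usd=0.25)
print(budget, tokens)  # 33000.0 132000.0
```

Two things fall out of the formula: the buyback scales directly with revenue, and a lower token price means more tokens retired for the same revenue.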

For a long time, PYTH’s role was limited to governance participation and Oracle Integrity Staking. That has changed. Revenue generated from Core, Pro, Entropy, and Express Relay now flows into the DAO treasury, with a clearly defined mechanism that feeds value back into the token itself.

This is a meaningful shift.

Market activity is now linked to real revenue, not token emissions or incentives.

As things stand today, questions remain.

There is still supply pressure.

Pyth has not established TVS leadership in the oracle sector.

Entropy and Express Relay received less focus in Q4.

And the biggest unknown is whether Pyth Pro can seriously challenge long-standing incumbents in a data market dominated by legacy vendors.

But for this Spotlight, the takeaway is simple.

Pyth is no longer just an onchain oracle.

It is evolving into a global financial data backbone that is built natively on blockchain infrastructure.

Public-sector data such as GDP figures and nonfarm payrolls is already being published onchain through Pyth integrations. Regulated prediction platforms like Kalshi are routing compliant datasets through the network. Institutions are no longer limited to one role either. They are contributing data and consuming it.

None of this promises immediate upside for the token.

What it does create is a rare setup among infrastructure assets, where usage can be tracked, revenue streams are forming, and token buybacks are already in motion.

This is not a narrative-driven trade.

It is a conviction play that the data layer beneath global markets grows in importance as finance continues moving onchain, and that Pyth captures a larger share of that infrastructure than the market currently prices in.

Speculative Focus: Lagrange (LA)

Some projects borrow the AI label for attention.

Others are focused on validating AI itself.

Lagrange falls into the second group, and that is what makes it a high-risk bet.

At a fundamental level, Lagrange is not creating another inference marketplace or selling compute access. Its focus is on cryptographic verification of AI execution. The question it answers is not whether an output looks good, but whether the model was executed exactly as claimed, using the correct inputs, while keeping the underlying data private.

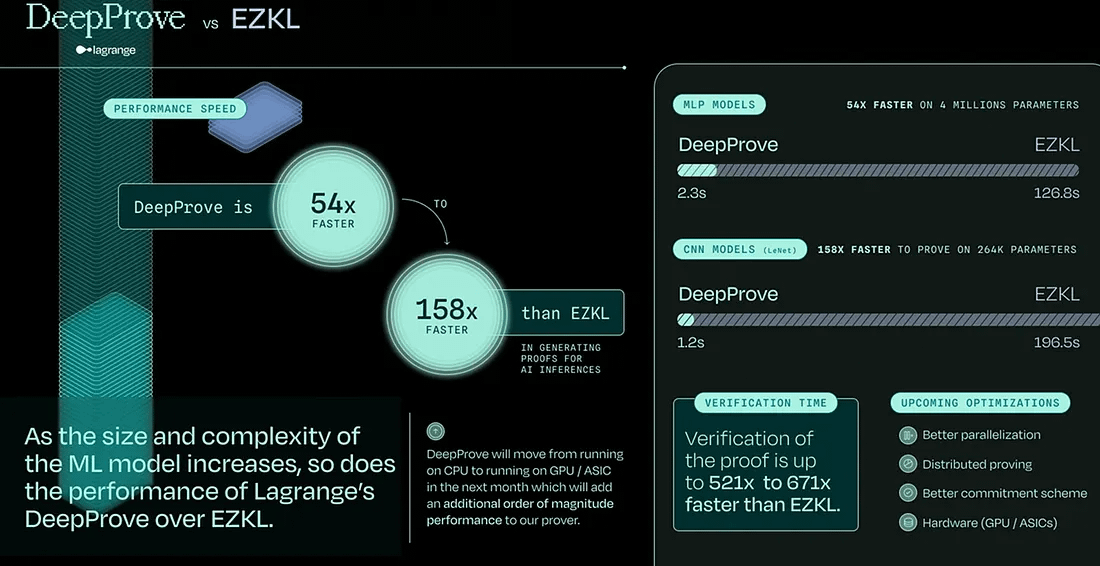

That verification framework is called DeepProve.

In 2025, the team showed complete end-to-end inference proofs for GPT-2, and later expanded compatibility to more recent model families such as Gemma3. That milestone matters because much of the zkML narrative never moves beyond simplified demos. Proving inference for full-scale language models operates on an entirely different level.

They did not stop there. The roadmap expanded into dynamic zk-SNARKs, incremental proof generation, patent filings, and research that meets academic cryptography standards. From a technical standpoint, this work is substantive.

What makes LA both compelling and high-risk, however, is the direction they are aiming for.

Defense.

In 2025 alone, Lagrange moved well beyond theory.

DeepProve was integrated into Anduril’s Lattice SDK demonstration pipeline, where zero-knowledge proofs were attached to autonomous decision outputs. The team also entered supplier ecosystems tied to General Dynamics, Raytheon, Lockheed Martin, and Oracle’s sovereign cloud environments. At the same time, DeepProve was positioned as a verification layer for C4ISR architectures, autonomous aerospace systems, and secure communications.

This is not a Web3 storyline.

This is proximity to U.S. defense infrastructure.

Now, it is important to slow down.

Being listed as a supplier does not equal having live contracts. The Vulcan SOF listing explicitly does not indicate deployment. Demo integrations are not the same as systems in active use. A large portion of early-stage defense technology exists in the gap between promising capability and actual procurement.

That uncertainty is where the risk sits.

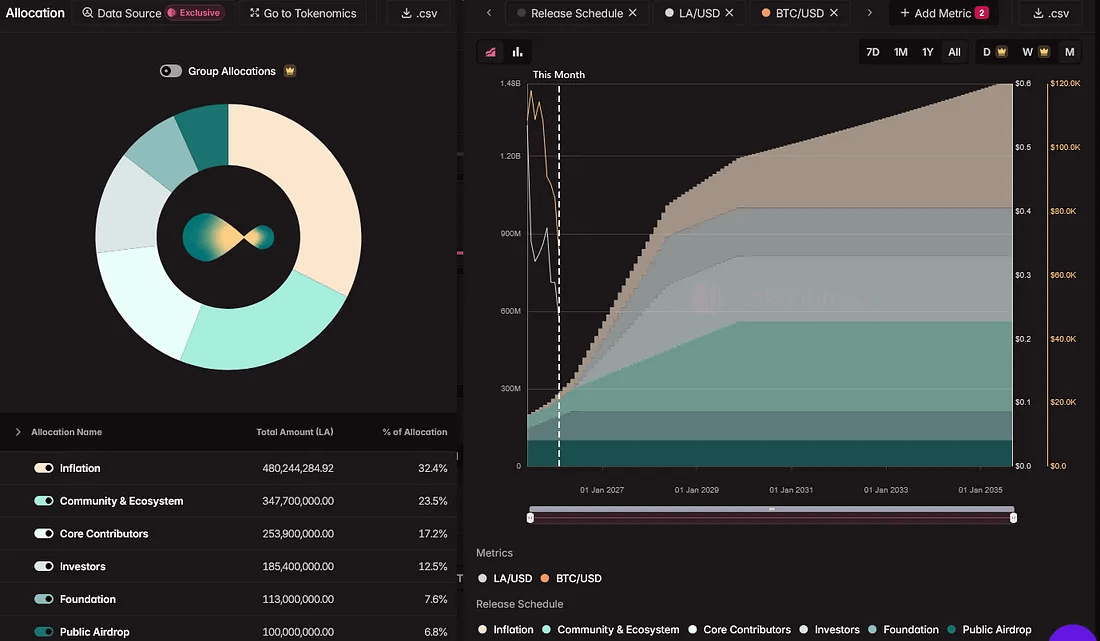

On the token mechanics side, LA is more than a governance asset.

Demand for the token is linked to proof generation. Clients pay to generate proofs. Provers must stake LA to participate, with staking acting as eligibility collateral. The economic logic is simple. As proof volume grows, token demand should increase.
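A toy model makes the two demand channels visible. Everything numeric here is hypothetical: the minimum stake, the per-proof fee, the idea that fees are denominated in LA, and the prover names are placeholders I made up for illustration, not Lagrange's actual parameters.

```python
MIN_STAKE_LA = 1_000   # hypothetical eligibility threshold
FEE_PER_PROOF_LA = 2   # hypothetical fee, assumed to be paid in LA


def eligible_provers(stakes: dict, min_stake: int = MIN_STAKE_LA) -> list:
    """Provers whose staked LA meets the eligibility threshold."""
    return sorted(name for name, stake in stakes.items() if stake >= min_stake)


def fees_paid(proof_count: int, fee: int = FEE_PER_PROOF_LA) -> int:
    """Total paid by clients for a given number of proofs."""
    return proof_count * fee


stakes = {"prover_a": 5_000, "prover_b": 400, "prover_c": 1_000}
print(eligible_provers(stakes))  # ['prover_a', 'prover_c']
print(fees_paid(10_000))         # 20000
```

In this toy version, staking locks up supply on the prover side while fees scale linearly with proof volume on the client side. Whether real demand follows that shape depends on parameters this post does not have.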

So far, the network has produced millions of AI inferences and millions of zero-knowledge proofs, which points to genuine technical momentum.

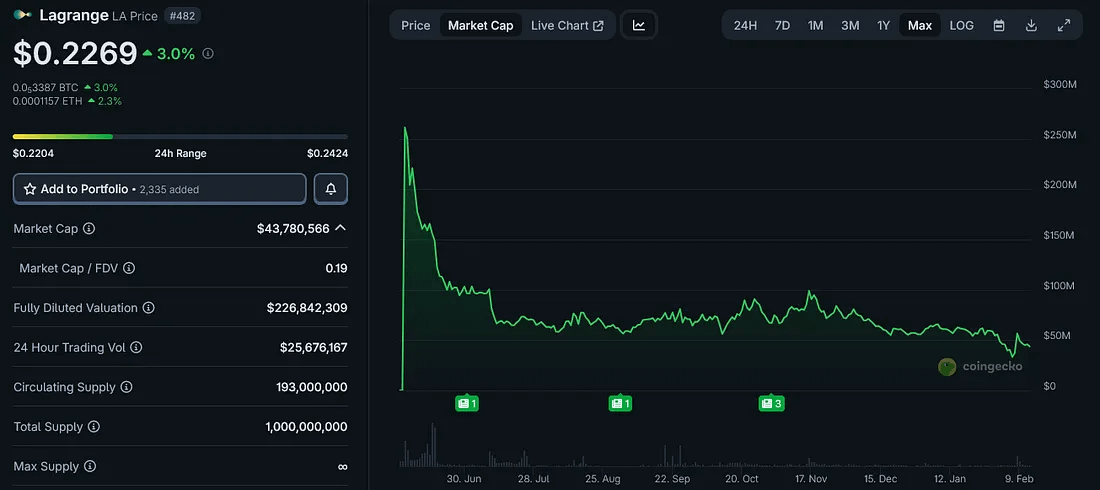

At the same time, the warning signs are clear.

Token ownership is highly concentrated, with a small number of wallets holding most of the supply.

The number of holders remains low relative to the valuation.

Token unlocks begin applying pressure from mid-year.

Staking yields are high enough to attract short-term capital rather than long-term participants.

This is not a slow-build infrastructure asset like Pyth.

This is early-phase exposure with wide outcome dispersion and heavy influence from major backers.

Founders Fund has invested.

Intel has supported it through its accelerator.

Nvidia has included it in the Inception program.

Those relationships explain why the token reached top-tier exchanges quickly. They do not ensure long-term resilience.

The thesis here is not that AI is fashionable.

It is far more specific.

If defense systems and sovereign cloud environments begin to mandate cryptographic verification of AI execution as a compliance requirement, Lagrange is exceptionally well positioned.

If that requirement remains conceptual or materializes slower than market expectations, LA behaves like a narrative-driven asset facing unlock pressure.

There is not much room between those outcomes.

Why this fits the high-risk category

Lagrange is solving a problem that makes sense on a multiyear horizon.

The market, however, prices assets on a much shorter timeline.

That disconnect creates sharp volatility. The technology is legitimate. The integrations are tangible. The ambition is real. The story of verifiable AI for government and defense use cases is compelling.

But reality still applies. Procurement cycles move slowly. Enterprise adoption takes time. And token supply mechanics are indifferent to research progress. That is why LA sits in this category. The range of outcomes is wide, to the upside and the downside alike. If verifiable AI becomes standard infrastructure for mission-critical systems, this positioning will look extremely early.

If it does not, or if adoption drags out, the market will not remain patient.

That is the trade. Pyth is already embedded in live systems. It processes volume, monetizes data, and actively recycles revenue into token buybacks. It does not need a step change event. It only needs continued execution.

Lagrange does not have that advantage. It is targeting a larger outcome further in the future. If verifiable AI shifts from discussion to requirement, the timing looks favorable. If not, it becomes another ambitious project that ran ahead of its adoption curve. That is the framework. One asset compounds quietly. The other is attempting to reshape the trajectory.

Different names next week. Same objective. Separate substance from surface-level narratives.