Dusk and the Art of Being Provable Without Being Exposed

Most people only notice privacy when it’s missing. They notice it when a wallet address becomes a personality, when a balance becomes a target, when a trade becomes a rumor that moves faster than any settlement system ever could. Dusk is built around the idea that markets don’t become fair by making everyone naked. They become fair when the rules are enforceable, the outcomes are verifiable, and the people inside the system can still breathe.

The real problem isn’t that regulators want to see everything. It’s that they can’t accept “trust me” in places where harm is expensive. In traditional finance, auditability exists because someone can subpoena records, compare statements, and punish lies. On most public ledgers, auditability exists because everyone can watch everything, forever. Dusk takes a different path: it treats visibility as a controlled instrument, not a default condition, and it treats proof as something you can carry without dragging your private life behind it.

To understand what that feels like in practice, imagine you’re a real participant in a regulated environment—an issuer, a broker, a venue, a compliance officer—trying to do the boring work that keeps markets honest. You don’t want to publish customer identities. You don’t want competitors tracking your flows. You don’t want a single screenshot, a single leak, or a single sloppy export turning into a breach that follows you for years. But you do want a system where, when someone asks “did you follow the rules,” you can answer with something stronger than confidence and weaker than exposure.

This is where Dusk’s design becomes emotionally different from most systems people call “transparent.” It aims to let a transaction be accepted by the network because it carries a mathematical argument that it is valid, while the sensitive parts stay sealed unless a legitimate authority must inspect them. The public side learns that the rules were satisfied. It does not automatically learn who you are, what you hold, or why you moved it.
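One way to build intuition for "sealed unless a legitimate authority must inspect" is a salted hash commitment. The sketch below is an illustration only, not Dusk's protocol: a real privacy-preserving chain would rely on zero-knowledge proofs so that the network can check validity without ever seeing the value, which a plain commitment cannot do. The names `commit` and `verify_opening`, and the `transfer:1500` payload, are hypothetical.

```python
import hashlib
import hmac
import secrets


def commit(value: bytes, salt: bytes) -> str:
    """Return a salted SHA-256 commitment to `value` (hypothetical helper)."""
    # The salt is a fixed 32 bytes, so salt + value is unambiguous.
    return hashlib.sha256(salt + value).hexdigest()


def verify_opening(commitment: str, value: bytes, salt: bytes) -> bool:
    """Check that (value, salt) opens a previously published commitment."""
    return hmac.compare_digest(commitment, commit(value, salt))


# The participant keeps `amount` and `salt` private and publishes only the digest.
amount = b"transfer:1500"
salt = secrets.token_bytes(32)
public_commitment = commit(amount, salt)

# Later, an authorized auditor receives (amount, salt) privately and checks them.
auditor_accepts = verify_opening(public_commitment, amount, salt)

# A different claimed value does not match the published commitment.
forged_rejected = not verify_opening(public_commitment, b"transfer:15", salt)
```

The public record here reveals nothing readable about the amount, yet the participant cannot later claim a different value to an auditor. What this sketch leaves out is the harder half of the idea: convincing the network that the hidden value obeys the rules.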

That distinction sounds philosophical until you picture the moments when things go wrong. A dispute between counterparties. A suspicion of market abuse. A compliance review triggered by an external event you didn’t cause. In those moments, the question isn’t “can the chain show everything,” it’s “can the chain help separate truth from noise without turning privacy into collateral damage.” Dusk is built to make that separation possible, because in regulated finance, being unable to prove you behaved correctly can be as destructive as being caught doing something wrong.

The most misunderstood part is that auditability isn’t a single act. It’s a relationship across time. It’s the ability to explain a sequence of decisions months later, after staff has changed, after systems have been upgraded, after memories have become stories. Dusk leans into that reality by treating verifiable history as something that must remain queryable and coherent, even as the network evolves. You can see that mindset reflected in late-2025 node releases that strengthened the way finalized activity can be queried at scale and how metadata can be retrieved without fragile, manual workarounds.

When you live in this ecosystem, you start caring less about dramatic moments and more about quiet continuity. A regulator doesn’t want your narrative; they want a reproducible view. A compliance team doesn’t want a heroic engineer; they want a process that still works when the engineer is on a plane. Dusk’s approach—proofs where the network can enforce correctness, and controlled disclosure where authorized parties can inspect specifics when legally required—turns those human needs into system behavior instead of policy documents.

Off-chain reality is messy in ways blockchains don’t like to admit. Documents are incomplete. Records disagree. People make errors that aren’t malicious, just human. Someone types a wrong identifier. Someone uses a stale data source. Someone follows an internal checklist that’s out of date by one week. The usual response is to widen visibility and hope that public scrutiny will catch mistakes. Dusk tries to do something more mature: it assumes mistakes will happen and focuses on containing the blast radius—keeping private data from becoming a permanent punishment for ordinary failure, while still making the outcomes provable.

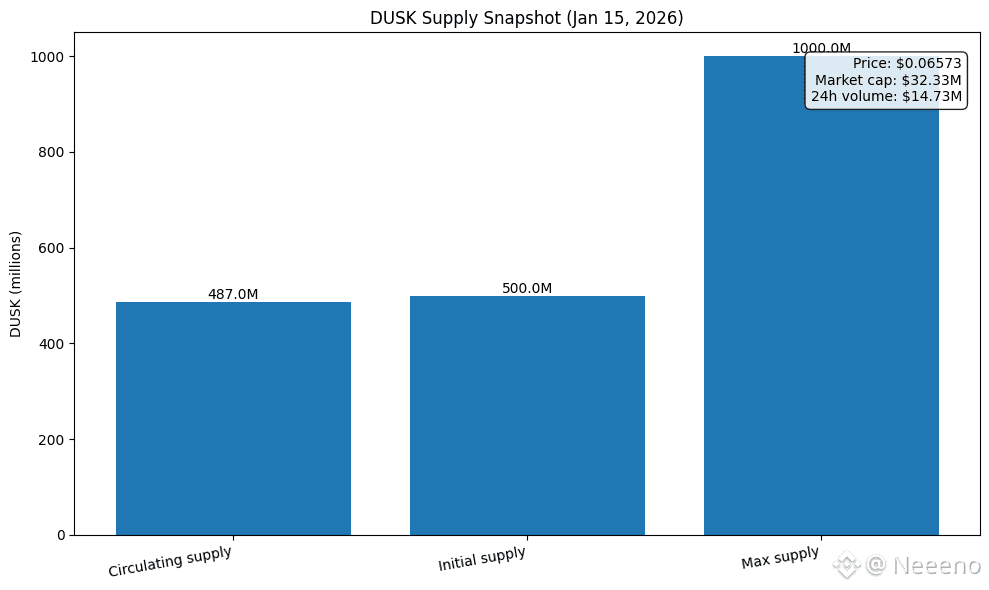

That containment has an economic dimension, not just a cryptographic one. If you want a system where participants don’t cut corners when attention fades, you have to pay for honest behavior and make dishonesty expensive. Dusk’s token economics are structured around long-lived incentives: a maximum supply of 1,000,000,000 DUSK, with 500,000,000 as initial supply and 500,000,000 emitted over a long schedule to reward network participation over decades, not months. That timeline matters because compliance isn’t seasonal. It’s continuous.

And that continuity has a price tag you can actually observe. As of January 15, 2026, DUSK is trading around $0.066, with circulating supply shown as 500,000,000 and 24-hour trading volume around $14M. These numbers don’t “prove” success, but they do show an economy large enough that reliability failures would be painfully real, not theoretical. In other words: Dusk isn’t asking people to rely on a toy. It’s asking them to rely on infrastructure while money is on the line.
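For readers who want the scale spelled out, the quoted figures can be combined with simple arithmetic. This is a rough sketch using only the approximate numbers stated above; the variable names are illustrative, and the price and volume are point-in-time snapshots rather than stable properties of the network.

```python
# Figures as quoted in the text (approximate, as of January 15, 2026).
MAX_SUPPLY = 1_000_000_000        # maximum DUSK supply
INITIAL_SUPPLY = 500_000_000      # initial supply, also the circulating figure quoted
PRICE_USD = 0.066                 # approximate price per DUSK
VOLUME_24H_USD = 14_000_000       # approximate 24-hour trading volume

# Supply left to be emitted as participation rewards over the long schedule.
emitted_supply = MAX_SUPPLY - INITIAL_SUPPLY

# Market value of circulating supply, and of the full maximum supply.
market_cap = PRICE_USD * INITIAL_SUPPLY
fully_diluted = PRICE_USD * MAX_SUPPLY

# Daily volume relative to circulating market value.
turnover = VOLUME_24H_USD / market_cap

print(f"Still to be emitted: {emitted_supply:,} DUSK")
print(f"Circulating market value: ${market_cap:,.0f}")
print(f"Fully diluted value: ${fully_diluted:,.0f}")
print(f"24h volume / market value: {turnover:.2f}")
```

On these inputs the circulating market value comes to roughly $33M and the fully diluted value to roughly $66M, with daily volume a bit over 40% of circulating value.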

Recent network work also signals what Dusk prioritizes when it tightens bolts. The December 10, 2025 Layer-1 upgrade cycle, framed publicly as a performance and data-availability improvement ahead of the next phase of programmability, is the kind of update you do when you expect more serious flows and more demanding observers. It’s preparation for stress, not decoration for marketing. The system is quietly positioning itself for the moments when throughput spikes and scrutiny sharpens at the same time.

Where this becomes most real is in regulated asset flows that can’t afford ambiguity. If an on-chain representation of an off-chain instrument is going to be trusted, someone must be able to reconcile what the chain says with what legal reality says—without forcing every market participant to publish sensitive details to the entire world. Dusk’s work with regulated entities in the Netherlands—like the collaboration involving NPEX and the EURQ electronic money token initiative—matters because it drags the blockchain out of theory and into environments where audits, licenses, and accountability are normal, not optional.

There’s a subtle emotional safety that comes from that. Not the safety of “nothing can go wrong,” but the safety of knowing that when something goes wrong, the system won’t demand that you sacrifice privacy to prove innocence. In most environments, the innocent still get harmed by exposure: counterparties get profiled, customers get doxxed, strategies get front-run in slow motion. Dusk is trying to make proof separable from publicity, so the process of accountability doesn’t become a second kind of damage.

This is also why Dusk’s idea of verification is naturally compatible with disagreement. Real investigations are not clean. Two parties will tell two different stories. A venue will have one dataset; a broker will have another; an issuer will point to legal documents; a regulator will point to obligations. Dusk’s approach gives these disputes a shared reference point: not “trust the loudest,” but “recompute the validity.” When the truth can be checked without demanding full exposure, conflicts become more solvable and less theatrical.

None of this eliminates trust. It changes where trust lives. Instead of trusting that everyone will behave because they’re visible, you trust that the system will reject invalid actions and preserve the option of targeted inspection when it matters. Instead of trusting a platform’s promise that it can produce audit trails later, you trust that the chain’s history was built to be provable now, and queryable later, without requiring a privacy bonfire. That’s a quieter kind of trust, but it’s the kind regulated markets actually run on.

The point, in the end, is not to impress anyone. It’s to let serious users operate without fear—fear of exposure, fear of misinterpretation, fear that one mistake will become permanent public punishment, fear that a future audit will become a scramble for missing context. Dusk’s design aims to make accountability feel less like surveillance and more like responsibility: provable behavior, controlled disclosure, and incentives that reward the people who keep the system steady when nobody’s clapping. Reliability is not attention. It’s a promise you keep when the room is empty, and Dusk is trying to price—and protect—that promise.
