@Walrus 🦭/acc is Mysten Labs’ decentralized storage protocol. Mysten Labs is the same team that’s behind the Sui Network. Walrus’ latest partnership is with io.net (IO), a decentralized computing network. Together, they offer an option to store and train AI models.

This happens through IO’s Bring Your Own Model (BYOM) platform, which IO builds on Walrus Protocol.

• Walrus Protocol and io.net Partnership:

The partnership between Walrus Protocol and io.net offers start-ups a unique option. Besides building AI models, they can also train and run them. That happens on IO’s Bring Your Own Model (BYOM) platform. IO uses Walrus Protocol to store these custom AI models. All of this is decentralized, unlike traditional centralized cloud services.

This has various advantages:

✓ Start-ups or AI devs don’t have to own their own hardware or set up a data center. That’s all done for them and available to them.

✓ Traditional centralized cloud services are more expensive than this decentralized option. A great example is the pay-per-use pricing. In other words, you only pay for the computing power and storage you actually use. This makes it more affordable for teams with small budgets.

✓ There’s no need to use pre-approved models. Devs can build their custom models.

✓ This decentralized option has fewer privacy risks. Data ownership is also much more clearly defined. A decentralized option is also censorship resistant. You control your data and model, rather than Google or OpenAI.
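To make the pay-per-use idea from the list above concrete, here is a minimal sketch. The function name, the rates, and the usage numbers are all hypothetical placeholders chosen for illustration. They are not actual io.net or Walrus prices.

```python
def pay_per_use_cost(gpu_hours, gpu_rate, stored_gb, storage_rate):
    """Return the bill when you pay only for what you actually use.

    All inputs are hypothetical; real rates depend on the provider.
    """
    if min(gpu_hours, gpu_rate, stored_gb, storage_rate) < 0:
        raise ValueError("usage and rates must be non-negative")
    compute_cost = gpu_hours * gpu_rate      # compute you actually consumed
    storage_cost = stored_gb * storage_rate  # storage you actually occupy
    return compute_cost + storage_cost


# Hypothetical example: 10 GPU hours at 2.0 per hour, 100 GB at 0.5 per GB.
example_bill = pay_per_use_cost(gpu_hours=10, gpu_rate=2.0,
                                stored_gb=100, storage_rate=0.5)
print(example_bill)
```

The point of the sketch is that the bill scales with usage. A team that uses no GPU hours in a given period pays nothing for compute, which is what makes this model attractive for small budgets.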

Another advantage shows up when outages happen. In mid-June, Google experienced such an outage. AWS, Cloudflare, and Firebase were also down. As a result, big parts of the internet were down. However, Walrus Protocol stayed active and didn’t experience any downtime.

IO supplies the CPUs and GPUs. It offers well over 30k GPUs, on demand. On the other hand, a big company like Apple struggles to find enough GPUs to scale its AI efforts effectively. But here is IO, offering its GPUs, which are 70% cheaper as well.

• Which Models Will Work Well with This Partnership?

This partnership between Walrus Protocol and io.net works best with good-quality models. However, they need to be lightweight enough for a full download. To help you with this, you can use the IO Intelligence platform. This is a free inference platform. Inference in AI is where the AI model takes new information and makes predictions or decisions with it. The platform currently offers access to over 30 free and open-source models. This should get you well on your way. Here are three examples, but there are more out there. So, make sure to DYOR (do your own research).

✓ DeepSeek. IO Intelligence offers four different DeepSeek versions that you can use. These are reasoning models that come in several variations.

✓ Qwen. This also comes in various versions, including language models.

✓ Llama. There are also various Llama models available.

IO Intelligence also offers models from Google, like Gemma, and Microsoft’s Phi models. These are mostly lightweight models, so you can fully download them. Take a look and find the model that suits you best.

What all this means for Walrus is that it is now a serious hub for decentralized AI activity.

• What Else is Up with Walrus Protocol?

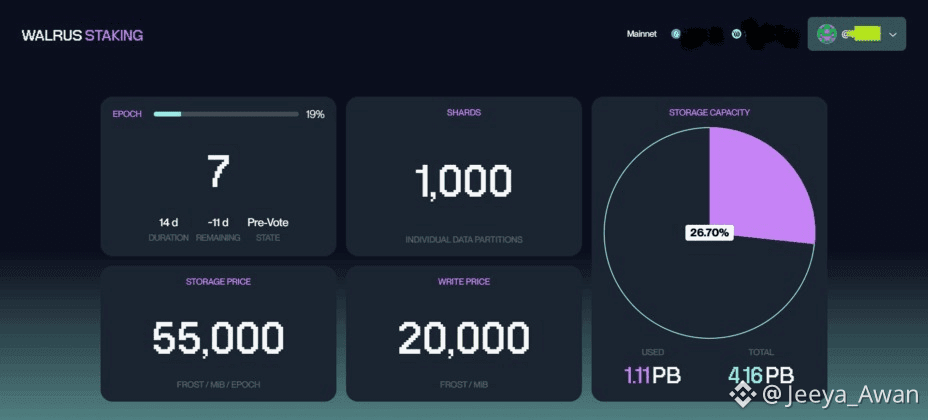

So, this partnership with io.net is exciting, but Walrus Protocol has more brewing. One of its biggest achievements is its actual usage by other protocols. Already, 145 projects call Walrus their storage home. New projects are finding their way to Walrus all the time. As a result, Walrus is currently at 26.7% of capacity. Out of 4.16 PB (petabytes), 1.11 PB is already in use. The picture above shows this usage.
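The capacity figures quoted above can be checked with some quick arithmetic. This is a minimal sketch that uses only the two numbers from the text. The variable and function names are my own.

```python
TOTAL_CAPACITY_PB = 4.16  # total Walrus capacity quoted in the article
USED_CAPACITY_PB = 1.11   # capacity already in use, per the article


def utilization_percent(used_pb, total_pb):
    """Return used capacity as a percentage of total capacity."""
    if total_pb <= 0:
        raise ValueError("total capacity must be positive")
    return used_pb / total_pb * 100


usage = utilization_percent(USED_CAPACITY_PB, TOTAL_CAPACITY_PB)
remaining_pb = TOTAL_CAPACITY_PB - USED_CAPACITY_PB

print(f"{usage:.1f}% used, {remaining_pb:.2f} PB free")
# prints "26.7% used, 3.05 PB free"
```

So the 26.7% figure matches the petabyte numbers, and roughly 3.05 PB is still available for new projects.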

Seal is another project built by Mysten Labs. It is a decentralized secrets management service. In other words, it offers privacy. You can integrate it into Walrus, but also into other storage protocols. This gives you private data for your storage solutions.

We can also add Nautilus to this equation. This is Sui’s framework to build oracles. So, let me explain. With Seal, you can store your data securely and privately on Walrus. Nautilus can fetch this data for you. It utilizes Trusted Execution Environments (TEEs). These perform computations off-chain. However, verification happens on-chain.

So, as we can see, Walrus Protocol keeps marching on. The new partnership with io.net opens new ways to store and train your own AI models. However, that’s not all. Walrus sees more and more projects joining. Currently, it hosts 145 projects that use 26.7% of its storage capacity.

To make full use of the Sui stack, we can also integrate Seal and Nautilus with Walrus. These are, respectively, a privacy service and a framework for oracles.