A lot of protocols talk about “interoperability” as if it’s a bridge with a logo on it. Walrus treats interoperability as something more basic: data should survive its chain of origin. In a world where applications sprawl across ecosystems—EVM, Move, modular stacks, microchains, rollups—data becomes the shared substrate. And if the substrate is fragile, every app becomes fragile. Walrus is designed as a storage and data availability protocol for blobs, focusing on large unstructured files and making them durable, verifiable, and accessible even when the network is under stress.

The fundamentals begin with a clear critique: storing blobs on a fully replicated execution layer is a tax you shouldn’t pay unless you must compute on that data. In the Walrus whitepaper, the authors point out that state machine replication implies every validator replicates everything, pushing replication factors into the hundreds or more. That’s defensible for consensus and computation, but it’s wasteful for data that just needs to exist, be retrievable, and be provably the same data over time. Walrus responds with erasure coding and distributed slivers, aiming for robust reconstruction even when a large fraction of slivers are missing.

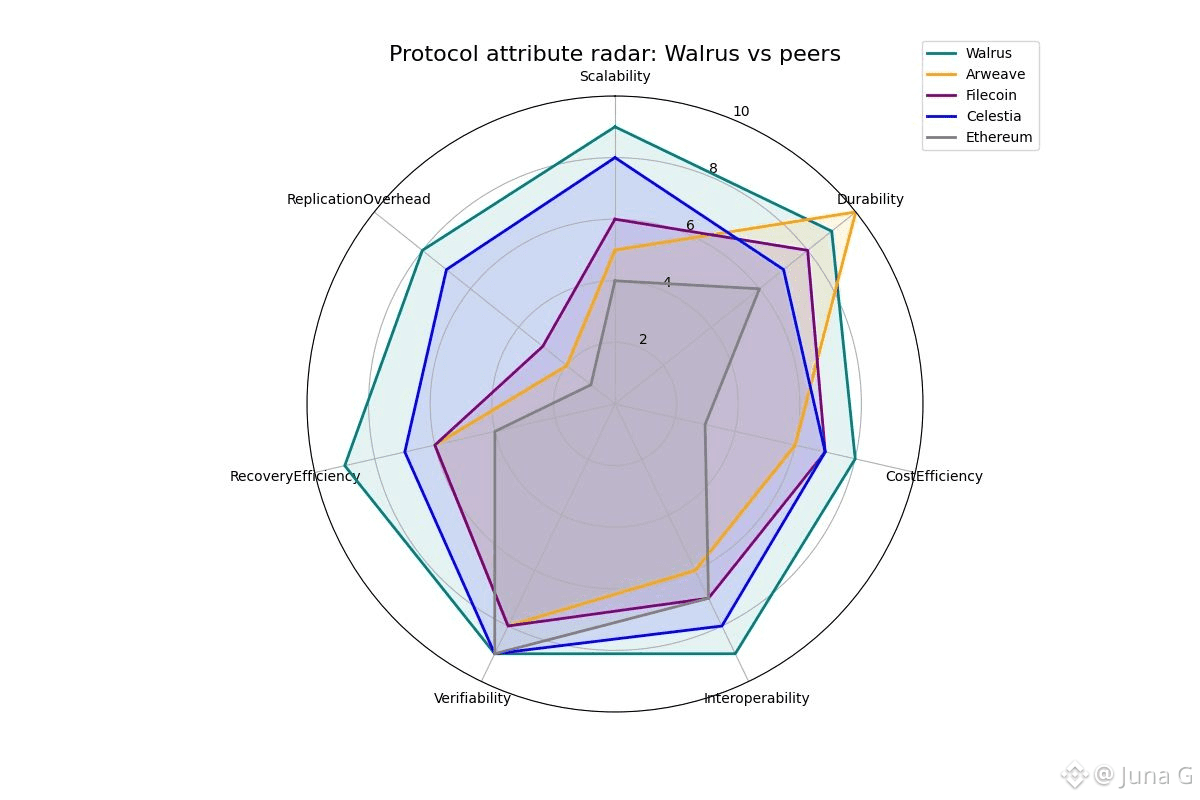

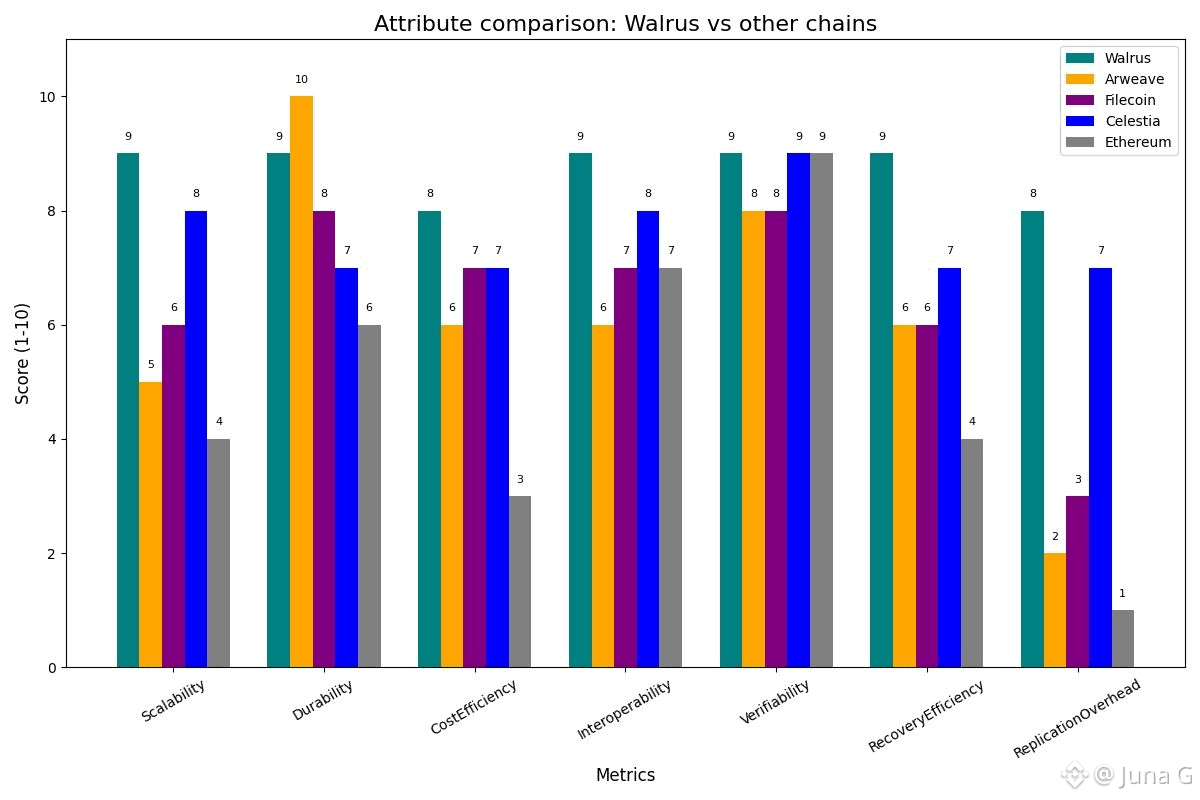

The protocol’s scalability claims are anchored in specific mechanisms. Red Stuff, the two-dimensional erasure coding protocol at the heart of Walrus, is described as achieving high security with a 4.5x replication factor while enabling self-healing recovery where bandwidth scales with the amount of lost data. That last phrase is the difference between “works on paper” and “works in the wild.” If your recovery requires re-downloading a full blob every time a node disappears, churn eats your efficiency. If recovery scales with what you lost, you can be permissionless without being brittle.
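To make the churn argument concrete, here is a minimal back-of-the-envelope sketch. It is not Red Stuff itself: it is a toy model that assumes encoded data is spread evenly across nodes, and the function names, the 100-node network, and the "naive" baseline (each replacement node re-downloads a full blob) are illustrative assumptions rather than details from the whitepaper.

```python
GIB = 1024 ** 3


def total_stored(blob_bytes: int, replication_factor: float) -> float:
    """Bytes the whole network stores for one blob."""
    return blob_bytes * replication_factor


def recovery_traffic(blob_bytes: int, replication_factor: float,
                     num_nodes: int, lost_nodes: int,
                     self_healing: bool) -> float:
    """Bytes transferred to restore what `lost_nodes` nodes were holding.

    Toy assumption: every node holds an equal share of the encoded data.
    """
    per_node = blob_bytes * replication_factor / num_nodes
    if self_healing:
        # Traffic scales with the amount of data that was actually lost.
        return per_node * lost_nodes
    # Naive baseline: each replacement node pulls a full blob to re-encode.
    return float(blob_bytes) * lost_nodes


if __name__ == "__main__":
    blob = 1 * GIB
    print("full replication, 100 validators:", total_stored(blob, 100) / GIB, "GiB")
    print("4.5x erasure coding:", total_stored(blob, 4.5) / GIB, "GiB")
    print("naive recovery, 1 node lost:",
          recovery_traffic(blob, 4.5, 100, 1, self_healing=False) / GIB, "GiB")
    print("proportional recovery, 1 node lost:",
          recovery_traffic(blob, 4.5, 100, 1, self_healing=True) / GIB, "GiB")
```

Under these assumptions, the gap between the two recovery modes widens as the node count grows, which is why recovery cost matters more than raw storage overhead in a network with frequent churn.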

Walrus also addresses the uncomfortable truth that storage verification is hard when networks are asynchronous. Red Stuff is described as supporting storage challenges in asynchronous networks, preventing adversaries from using network delays to pass verification without storing data.

In plain language: it’s designed so “I can’t reach you right now” can’t be weaponized as an excuse. And where probability matters, the paper gives a concrete example of challenge sizing: it describes a setting where a node holding 90% of blobs would still face less than a 10^-30 probability of success in a 640-file challenge, illustrating how challenge parameters can be tuned to make cheating statistically hopeless.
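The challenge-sizing intuition can be checked with a few lines of arithmetic. This sketch assumes the simplest possible model: each challenged file is sampled independently, and a node that holds a fraction `fraction_held` of blobs passes only if it holds every sampled file. That model and the function name are assumptions for illustration; the paper's exact analysis may account for other factors.

```python
import math


def cheat_success_probability(fraction_held: float, challenge_size: int) -> float:
    """Probability that every independently sampled file is one the node holds."""
    if not 0.0 <= fraction_held <= 1.0:
        raise ValueError("fraction_held must be between 0 and 1")
    return fraction_held ** challenge_size


if __name__ == "__main__":
    p = cheat_success_probability(0.9, 640)
    print(f"success probability: {p:.3e}")
    print(f"log10 of probability: {640 * math.log10(0.9):.2f}")
```

In this simplified model, a node holding 90% of blobs passes a 640-file challenge with a probability on the order of 10^-30 (slightly above it here), which is the same ballpark as the figure the paper cites. Increasing the challenge size drives the probability down exponentially.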

Interoperability shows up in architecture: Walrus uses Sui as a secure control plane for metadata and proof-of-availability certificates, but it is explicitly chain-agnostic for builders. Walrus’ own blog notes that developers can use tools and SDKs to bring data from ecosystems like Solana and Ethereum into Walrus storage, and the mainnet launch post calls Walrus “chain agnostic,” positioned to serve virtually any decentralized storage need across Web3. This is not just marketing—using a control plane for coordination while keeping storage consumption open to many ecosystems is how you avoid fragmenting storage into chain-specific silos.

Tokenization is the bridge between interoperability and programmability. Walrus represents blobs and storage capacity as objects on Sui, making storage resources immediately usable in Move smart contracts and turning storage into an ownable, transferable, programmable asset. Once you can tokenize storage rights, you can compose them with the rest of onchain finance: lending against storage entitlements, auctioning reserved capacity, automating renewals, and building marketplace primitives where datasets have enforceable rules.

The token $WAL is the protocol’s economic anchor. WAL is used for storage payments, and Walrus explicitly aims to keep storage costs stable in fiat terms, despite token price volatility, by designing how payments are calculated and distributed. Users pay upfront for a fixed duration, and the WAL paid is distributed across time to storage nodes and stakers. This encourages a longer-term incentive horizon, which is especially important when your product promise is “your data will still be here later.”
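As a rough illustration of "pay upfront, distribute over time," the sketch below splits a prepaid amount across the epochs of a storage period. The even per-epoch split, the integer base-unit accounting, and the function name are all assumptions made for this example; the text above does not specify the actual distribution rule or how each epoch's payout is divided between nodes and stakers.

```python
def payout_schedule(total_paid: int, num_epochs: int) -> list[int]:
    """Split an upfront payment (in the token's smallest unit) across epochs.

    Hypothetical rule: an even split, with any remainder going to the
    earliest epochs so the schedule always sums to the amount paid.
    """
    if num_epochs <= 0:
        raise ValueError("num_epochs must be positive")
    if total_paid < 0:
        raise ValueError("total_paid must be non-negative")
    base, remainder = divmod(total_paid, num_epochs)
    return [base + (1 if i < remainder else 0) for i in range(num_epochs)]


if __name__ == "__main__":
    schedule = payout_schedule(total_paid=1_000_003, num_epochs=10)
    print(schedule)
    print("total released:", sum(schedule))
```

The point of the design is visible even in this toy version: nothing is released in a lump sum, so an operator only collects the full payment by staying online for the full storage period.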

Scalability and security are reinforced by delegated staking. WAL staking underpins network security and lets token holders participate even if they don’t run storage nodes. Nodes compete to attract stake, and the stake they attract influences how data is assigned to them, while rewards depend on their behavior. The whitepaper adds that rewards and penalties are driven by protocol revenue and parameters tuned by token governance, and it describes self-custodied staking objects (similar to Sui’s) as part of the staking model. In other words, “security” isn’t a mystical property; it’s an incentive schedule with enforcement hooks.

Governance is where interoperability meets accountability. Walrus governance adjusts system parameters through WAL: nodes vote on penalty levels, with voting power proportional to their stake. The mechanism is intentionally operator-informed. Nodes bear real costs when other nodes underperform, especially during migration and recovery, so they have incentives to set penalties that protect the network. Walrus also introduces deflationary elements via burning: short-term stake shifting that causes expensive data migration can be penalized with fees that are partially burned, and future slashing would burn part of the slashed amounts to reinforce performance discipline.

Walrus’ operational realism shows up in its network observations. The whitepaper’s testbed includes 105 independently operated storage nodes and 1,000 shards, with nodes distributed across at least 17 countries and a stake-weighted shard allocation model. And in public product messaging, Walrus highlights that the network is run by over 100 independent node operators and that, under its storage model, user data would remain available even if up to two-thirds of nodes go offline. That is the sort of resilience claim that matters when you’re trying to store something consequential: not just NFT art, but compliance records, datasets, and the raw inputs that feed AI systems.
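One way to picture a stake-weighted shard allocation is a proportional split with a tie-breaking rule for leftovers. The sketch below uses the largest-remainder method, which is an assumption chosen for illustration; the text above only says allocation is stake-weighted, not how rounding is handled. Node names and stake values are hypothetical.

```python
def allocate_shards(stakes: dict[str, int], total_shards: int = 1000) -> dict[str, int]:
    """Assign shards to nodes in proportion to stake (largest-remainder rounding)."""
    total_stake = sum(stakes.values())
    if total_stake <= 0:
        raise ValueError("total stake must be positive")

    # Integer arithmetic avoids floating-point rounding surprises.
    allocation = {node: stake * total_shards // total_stake
                  for node, stake in stakes.items()}
    remainders = {node: stake * total_shards % total_stake
                  for node, stake in stakes.items()}

    # Hand out shards lost to rounding, largest fractional share first.
    leftover = total_shards - sum(allocation.values())
    ranked = sorted(stakes, key=lambda node: remainders[node], reverse=True)
    for node in ranked[:leftover]:
        allocation[node] += 1
    return allocation


if __name__ == "__main__":
    example_stakes = {"node-a": 50, "node-b": 30, "node-c": 20}  # hypothetical
    print(allocate_shards(example_stakes))
```

Because shards follow stake, a node that attracts more delegated WAL takes on more data, which ties the staking incentives described earlier to actual storage responsibility.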

The conclusion is simple: Walrus is trying to make data infrastructure composable, verifiable, and economically sustainable across ecosystems. Fundamentals give it purpose, tokenization gives it programmability, interoperability gives it reach, scalability gives it credibility, and governance gives it the ability to evolve without losing discipline. Whether you’re building an AI data marketplace, a rollup availability pipeline, or a media-rich consumer dapp, #Walrus is betting you won’t want to “choose a chain” for your data—you’ll want your data to choose reliability. This is not financial advice, but it is an architectural bet with teeth, and $WAL is the teeth that bite: pricing, staking security, and governance all converge there.