When I first came across VanarChain, what caught my attention wasn’t the usual toolkit, the grants, or the flashy developer incentives so common in Layer 1 blockchain launches. It was quieter than that, almost subtle, but unmistakable once you started reading between the lines. The chain’s architecture felt less concerned with recruiting developers today and more focused on preserving what happens tomorrow. That difference might sound small, but it fundamentally reshapes how you read the system.

Most blockchains still measure success by developer activity. That made sense in an era when blockspace was scarce and applications were straightforward: more developers meant more apps, more transactions, and ultimately more fees. But under that logic lies an implicit assumption—that computation is the scarce resource. Increasingly, that assumption no longer holds.

What’s becoming scarce is memory. Not storage in the sense of dumping data somewhere cheap, but contextual memory—information that persists, can be reasoned over, and informs future action. In an AI-driven world, forgetting isn’t just inconvenient; it’s expensive. Relearning consumes time, compute, and trust. Most blockchains are designed to forget by default. They record transactions, not understanding. They execute deterministically, but every smart contract call wakes up with amnesia. Context exists off-chain, stitched together by indexers, applications, or centralized services that act as memory surrogates for the chain itself.

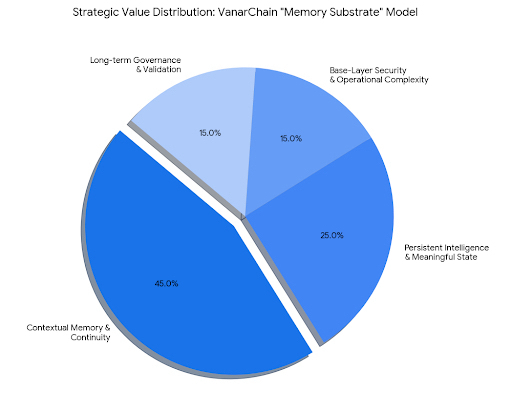

That model works when on-chain logic is simple. It breaks down when intelligence becomes continuous. VanarChain appears to recognize this and bets in a different direction: it’s not competing for the largest developer ecosystem. It’s competing to become a memory substrate—a network where context can accumulate, be referenced, and meaningfully influence future execution. Developers aren’t irrelevant here. Their work doesn’t vanish; it shifts. Instead of building memory stacks from scratch off-chain, they can tap into a chain that already preserves context.
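
To make the contrast concrete, here is a minimal toy sketch in Python. It is not VanarChain's API or data model; `ContextStore`, `contextual_quote`, and the fee thresholds are all hypothetical names and numbers invented for illustration. It only shows the difference between a call that starts from nothing and a call whose result depends on context accumulated by earlier calls.

```python
from dataclasses import dataclass, field
from typing import Dict, List


def stateless_quote(amount: float) -> float:
    """Every call starts from scratch: it knows nothing about earlier calls."""
    return amount * 0.01  # flat 1% fee, always


@dataclass
class ContextStore:
    """Hypothetical stand-in for memory kept by the chain, keyed by account."""
    history: Dict[str, List[float]] = field(default_factory=dict)

    def record(self, account: str, amount: float) -> None:
        self.history.setdefault(account, []).append(amount)

    def total(self, account: str) -> float:
        return sum(self.history.get(account, []))


def contextual_quote(store: ContextStore, account: str, amount: float) -> float:
    """The fee depends on accumulated context; the call then adds to that context."""
    # Assumed rule for illustration: accounts with 1000+ in prior volume pay 0.5%.
    rate = 0.005 if store.total(account) >= 1000 else 0.01
    store.record(account, amount)
    return amount * rate


store = ContextStore()
first = contextual_quote(store, "alice", 1000.0)   # no history yet, so 1% applies
second = contextual_quote(store, "alice", 1000.0)  # earlier call now lowers the rate
```

The same input produces a different result on the second call, and the application never had to rebuild the history itself. In the off-chain model described earlier, that history would live in an indexer or a centralized service instead.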

The implications for developers are both liberating and demanding. On one hand, building sophisticated AI-driven systems becomes easier when you don’t have to constantly recreate context. On the other hand, mistakes persist. Bad data doesn’t disappear at the end of a session. That permanence raises stakes, requiring better validation, governance, and accountability. It’s a chain that rewards discipline and long-term thinking, not speed or virality.

Persistent memory changes the economics of blockchain too. Tokens stop being purely gas or speculative instruments; they begin to represent access to continuity. Value accrues not from sheer throughput but from the network’s ability to retain and propagate meaningful state over time. That’s harder to hype in traditional crypto narratives, but potentially far more durable. If you think in terms of AI agents, autonomous systems, or multi-step simulations, a chain that forgets is a brittle chain. Continuity is the quiet enabler.

This design choice doesn’t come without risk. Embedding memory at the infrastructure layer increases attack surfaces and operational complexity. Governance becomes harder. Questions arise: how do you prune memory? Correct errors? Resolve conflicting states? There’s a balance between permanence and flexibility, and VanarChain is deliberately leaning toward persistence. Whether that balance holds under scaling pressures remains to be seen.
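
One way to think about those questions is an append-only log where corrections supersede earlier entries instead of erasing them, and pruning only drops entries that are both old and already superseded. The sketch below is a toy model under those assumptions, not a description of how VanarChain handles any of this; `MemoryLog`, `Entry`, and the "latest height wins" conflict rule are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Entry:
    key: str
    value: str
    height: int  # block height at which the entry was written (assumed field)


class MemoryLog:
    """Append-only log: nothing is edited in place, later writes supersede earlier ones."""

    def __init__(self) -> None:
        self._entries: List[Entry] = []

    def write(self, key: str, value: str, height: int) -> None:
        self._entries.append(Entry(key, value, height))

    def _current(self) -> Dict[str, Entry]:
        # Conflict rule (an assumption): highest height wins, ties go to the later append.
        current: Dict[str, Entry] = {}
        for e in self._entries:
            if e.key not in current or e.height >= current[e.key].height:
                current[e.key] = e
        return current

    def read(self, key: str) -> Optional[str]:
        entry = self._current().get(key)
        return entry.value if entry else None

    def prune(self, min_height: int) -> int:
        """Drop superseded entries below min_height; always keep each key's current value."""
        current = self._current()
        kept = [
            e for e in self._entries
            if e.height >= min_height or current[e.key] is e
        ]
        removed = len(self._entries) - len(kept)
        self._entries = kept
        return removed


log = MemoryLog()
log.write("owner:42", "alice", 5)
log.write("price:ETH", "bad-value", 10)
log.write("price:ETH", "corrected", 12)  # correction supersedes, it does not overwrite
removed = log.prune(min_height=11)       # drops only the old, superseded bad value
```

Even in this toy version the tension is visible: the bad value stays readable in the log until someone decides it is safe to prune, and the conflict rule is a governance decision, not a technical given.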

Timing also matters. By some estimates, global AI compute spending surpassed $200 billion last year, but most of that goes toward training and inference, not long-term memory infrastructure. Models are growing larger, but their ability to retain and reason over long-term context remains constrained. Anyone who has watched an AI agent repeat errors understands the cost of forgetting. Every reset burns time, compute, and trust. Blockchains mirror that problem. Deterministic execution is not equivalent to meaningful continuity. VanarChain’s approach, embedding memory closer to the base layer, is a direct response to that gap.

It’s worth noting that this isn’t a guaranteed winning strategy. Competing for memory is slower than competing for developer counts or hackathon metrics. Adoption curves may look muted. If AI systems continue to prioritize off-chain memory solutions, the demand for on-chain persistence could stall. And even with growing interest, the technical complexity of persistent memory—ensuring consistency, security, and scalability—poses a significant challenge.

But there are larger forces at play. Autonomous agents are no longer theoretical; they are interacting with real economies, executing payments, scheduling tasks, and optimizing workflows. These agents don’t just need logs—they need context. They don’t just need state—they need understanding. Better models alone don’t solve that problem. Continuity does. And that’s precisely where VanarChain is placing its bet.

From a market perspective, this is a subtle but important distinction. Developer activity across crypto has declined by roughly 20 percent year over year, depending on whose data you trust. Meanwhile, AI-related narratives continue to attract disproportionate attention, even when products remain incomplete. VanarChain seems to be targeting the space between hype and infrastructure: building quietly beneath the noise, enabling intelligence to operate continuously, rather than selling features that don’t exist yet. It’s less flashy, more steady, and intentionally under the radar.

For the broader crypto-AI ecosystem, this approach raises interesting questions. If persistent memory becomes a key enabler, it could redefine what we value in blockchain infrastructure. We might start measuring chains not by TPS or developer adoption, but by continuity, reliability, and the depth of context they preserve. That’s a shift from throughput to retention, from ephemeral activity to lasting intelligence.

Yet, it’s impossible to ignore the uncertainties. Persistent memory amplifies mistakes as well as successes. Security risks are magnified. Governance becomes more critical—and more complex. Adoption may remain slower as the market continues to reward immediate metrics over long-term compounding advantages. And if AI systems consolidate around off-chain memory solutions or alternative architectures, the promise of on-chain persistence may not find the traction it needs.

Still, VanarChain’s approach feels deliberate. It isn’t a reaction to short-term trends or a bid for visibility. It’s a bet on what infrastructure will matter in a future where AI systems increasingly interact with economic and social systems. In that world, forgetting is expensive, relearning is slow, and continuity is scarce. Competing for memory may not make headlines today, but it positions the chain for challenges that others haven’t even started to anticipate.

At the end of the day, VanarChain isn’t really competing for developers at all. It’s competing for something quieter, harder to replicate, and far more costly to rebuild once lost: memory. If blockchain infrastructure is indeed maturing alongside AI, then chains that forget by design may struggle to support systems that are supposed to learn. And in that light, VanarChain’s quiet, deliberate focus may prove more consequential than any hackathon metric or developer count ever could.