Vanar is one of those Layer 1 projects that keeps coming back to a simple idea: if Web3 is ever going to feel normal for everyday people, the chain underneath has to be built for the real world first, not for traders first. That’s the core vibe behind Vanar’s “next 3 billion” direction, and it makes sense when you look at what they focus on. They’re not trying to win by being the most complicated tech on paper; they’re trying to be the easiest foundation for mainstream products that already have users, like games, entertainment experiences, brand activations, and consumer apps that need to run smoothly without making people think about gas, wallets, or blockchain jargon.

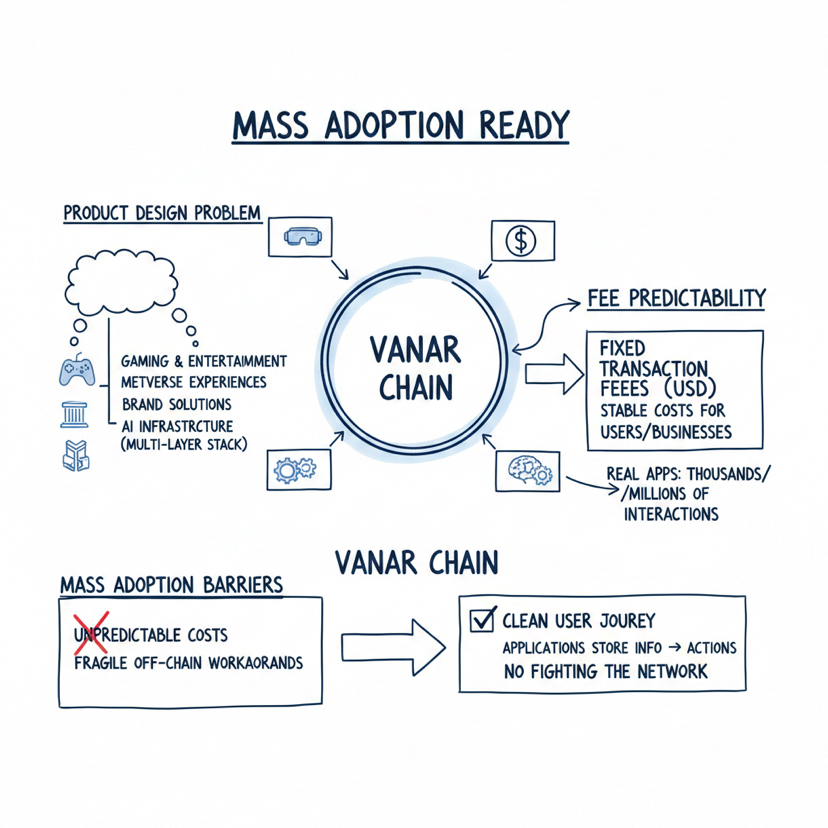

What makes Vanar feel different is the way they talk about adoption as a product design problem rather than a philosophy. They consistently position themselves around the same mainstream verticals, with gaming and entertainment being the obvious center of gravity, and then expanding outward into metaverse experiences, brand solutions, and more recently an AI-driven infrastructure narrative that they describe as a multi-layer stack. The idea here is that a modern chain should not just process transactions, it should also support how applications store meaningful information and turn that information into actions without relying on fragile off-chain workarounds that break under real user load.

If you zoom out, Vanar is basically saying this: a chain can’t be “mass adoption ready” if costs are unpredictable and if developers have to fight the network every time they try to build a clean user journey. That’s why their published design focuses on a couple of very practical decisions, and one of the biggest ones is fee predictability. In their whitepaper, they describe a model built around fixed transaction fees priced in USD terms, which is their way of tackling the problem where users and businesses can’t plan around a token that moves aggressively in price. When you’re building real apps, especially ones that might run thousands or millions of small interactions, predictable costs become more important than theoretical maximum throughput.
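The USD-pegged fee idea comes down to simple arithmetic: if the fee is fixed in dollars, the amount of VANRY charged has to float with the token price. The sketch below shows that conversion under stated assumptions. The 18-decimal token, the `FIXED_FEE_USD` value, and the helper names are hypothetical and not taken from Vanar’s whitepaper, and the sketch says nothing about how Vanar actually sources the token price.

```python
from decimal import Decimal, ROUND_CEILING

# Hypothetical constants for illustration only; not taken from Vanar's docs.
TOKEN_DECIMALS = 18                 # assumes an 18-decimal token, as is common on EVM chains
FIXED_FEE_USD = Decimal("0.0005")   # hypothetical fixed fee per transaction, in USD


def fee_in_base_units(fee_usd: Decimal, token_price_usd: Decimal) -> int:
    """Convert a USD-denominated fee into token base units at the given price.

    Rounds up so the collected fee never falls below its USD target.
    """
    if token_price_usd <= 0:
        raise ValueError("token price must be positive")
    tokens = fee_usd / token_price_usd
    base_units = tokens * (Decimal(10) ** TOKEN_DECIMALS)
    return int(base_units.to_integral_value(rounding=ROUND_CEILING))


def budget_usd(num_txs: int, fee_usd: Decimal = FIXED_FEE_USD) -> Decimal:
    """USD cost of num_txs transactions; under this model it ignores the token price."""
    return fee_usd * num_txs


if __name__ == "__main__":
    # Same USD fee at two hypothetical token prices: the token amount changes.
    for price in (Decimal("0.02"), Decimal("0.05")):
        print(price, fee_in_base_units(FIXED_FEE_USD, price))
    # Planning a million small interactions needs only the USD fee.
    print(budget_usd(1_000_000))
```

With the fee fixed at the hypothetical $0.0005, a rise in token price from $0.02 to $0.05 lowers the number of tokens charged per transaction, while `budget_usd` for a million interactions stays at $500 either way, which is the planning property a USD-priced fee model is aiming for.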

They pair that with EVM compatibility, which isn’t a flashy choice, but it’s a strong one for onboarding builders because existing smart contract tooling and developer skills can transfer over more easily. For a project that wants to onboard mainstream apps, reducing migration pain is rational: mainstream apps don’t want to rebuild their entire stack for a new chain unless there’s an obvious benefit. Vanar’s approach is to keep the developer entry point familiar, then differentiate with how the network behaves, how fees are structured, and how the “extra layers” of the stack support real products.

Where the story gets more interesting, and where you can see the “behind the scenes” strategy, is in how Vanar is pushing beyond the usual L1 narrative into what they describe as intelligence infrastructure. Their public materials describe a layered system, and two names appear again and again: Neutron and Kayon. Neutron is presented as a semantic memory layer, meaning it’s not just about storing data but about storing meaning in a compressed, verifiable format; they describe its outputs as “Seeds” that are meant to preserve useful context while dramatically reducing data size. Kayon is presented as a reasoning layer that can query and interpret this verifiable memory to enable smarter application logic. Together, they’re Vanar’s attempt to make the chain useful not only for transactions but also for decision-making and automation that stay anchored in verifiable records.

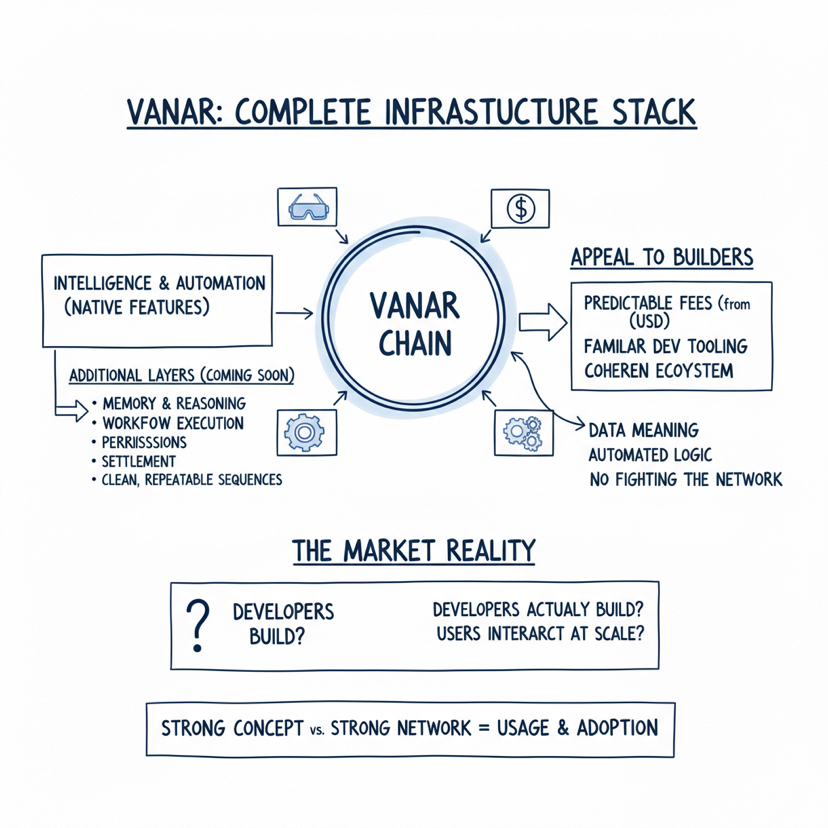

This is important because a lot of adoption fails at the “glue layer,” where apps need data, identity, history, permissions, compliance checks, and conditional execution, but the chain is only good at simple state updates unless you add off-chain services to do the heavy lifting. Vanar is trying to bring more of that glue into native infrastructure, so developers can build applications that feel intelligent and responsive without creating a spider web of dependencies that fall apart when user traffic spikes. If they pull this off in a way that developers actually use, it becomes a real differentiator, because it changes the application model from “contract executes when called” to “system understands context and can power workflows.”

Now, none of this matters if the token design doesn’t support the network properly, and Vanar’s token story is fairly direct in their documentation and whitepaper. VANRY is positioned as the native token that powers transactions and execution on the network, and it also plays a role in network participation through staking and validator rewards. The ecosystem also maintains an ERC-20 representation of VANRY on Ethereum, and the interoperability section of their whitepaper references bridging and wrapped representations so the token can move across networks when needed.

Their whitepaper also lays out a supply framework that stands out because it describes a long issuance runway via block rewards, with new issuance directed heavily toward validator rewards and development incentives over time. The way this reads is that they want a network that can sustain validator participation and ongoing development without relying purely on hype cycles. Whether the market likes any given token model is always a separate question, but from a project-building perspective, long-run sustainability is usually healthier than short-run excitement, especially when the goal is infrastructure for mainstream products that need to exist for years, not weeks.

On the network side, their materials describe a validator setup that emphasizes practicality, with a foundation-led selection approach described in their docs, while the broader community participates through delegating and staking. This is a structure many networks use during early stages because it reduces chaos while infrastructure is still stabilizing, and it also lets them enforce performance standards for validators, which is especially relevant when a chain’s target customers include consumer applications that can’t tolerate instability. Over time, the market typically expects a credible path toward broader decentralization, but early-stage stability is usually what enables serious products to even consider building.

When you look at Vanar’s ecosystem positioning, you can see they’re intentionally building around categories that already know how to attract huge numbers of users, which is why names like Virtua and VGN keep appearing in the public narrative. It’s essentially the logic of distribution: the chain doesn’t have to manufacture adoption from scratch if it can plug into experiences people already want. That matters because adoption is less about convincing people to “use blockchain” and more about shipping products that people would use anyway, where blockchain is simply the backend that makes ownership, verification, and value transfer smoother.

The recent direction on their official channels keeps leaning toward the same theme, which is that Vanar wants to be known not just as a fast L1, but as a complete infrastructure stack where intelligence and automation become native features rather than external add-ons. Their site labels additional layers as “coming soon,” which suggests they’re aiming to expand from memory and reasoning into more direct workflow execution, so that applications can define sequences like verification, permissions, triggers, and settlement in a clean, repeatable way. If that roadmap is executed well, it’s the kind of thing that can appeal to builders working on real products, because it reduces the need to build custom pipelines for every application.

What I like about Vanar’s approach is that it doesn’t rely on one single claim like “we’re the fastest” or “we have the most TPS.” Instead, they’re focusing on the stuff that quietly decides whether a project can host real adoption, like predictable fees, familiar developer tooling, a coherent ecosystem story, and infrastructure that tries to handle the real messy parts of applications such as data meaning, verification, and automated logic. The challenge, of course, is that the market only rewards what gets used, and the difference between a strong concept and a strong network is whether developers actually build on these primitives and whether users end up interacting with those products at scale.

My takeaway is that Vanar is trying to play the long game by building a chain that fits the shape of mainstream products, and that’s why the gaming and entertainment roots matter: those industries punish friction more than almost any other. If Vanar can keep the network stable, keep costs predictable in a way that businesses can trust, and keep delivering the intelligence layers as usable tools rather than just branding, it has a real shot at carving out a distinct lane as the infrastructure behind consumer-grade Web3 experiences that don’t feel like Web3 at all.