When I started researching decentralized storage a few years ago, I quickly noticed that most conversations in the crypto world revolve around speed and scalability: how many transactions per second a blockchain can process. That is certainly crucial for payments and DeFi, but I was drawn to a subtler and equally significant problem: keeping large, unstructured data permanently available without expensive access or dependence on centralized providers. That is why I took the time to read about @Walrus 🦭/acc on Sui, which to my mind represents a deliberate shift in priorities that the industry could benefit from.

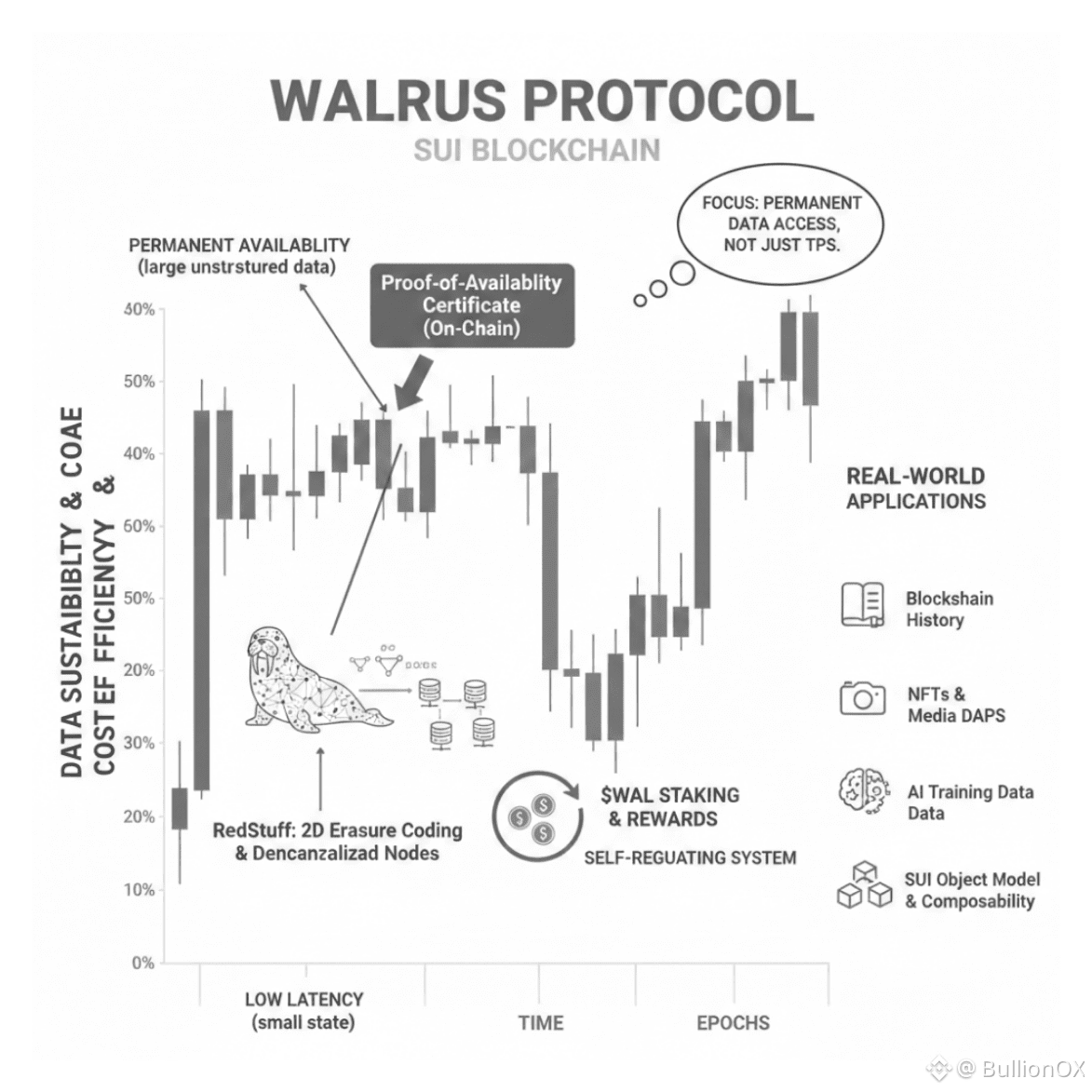

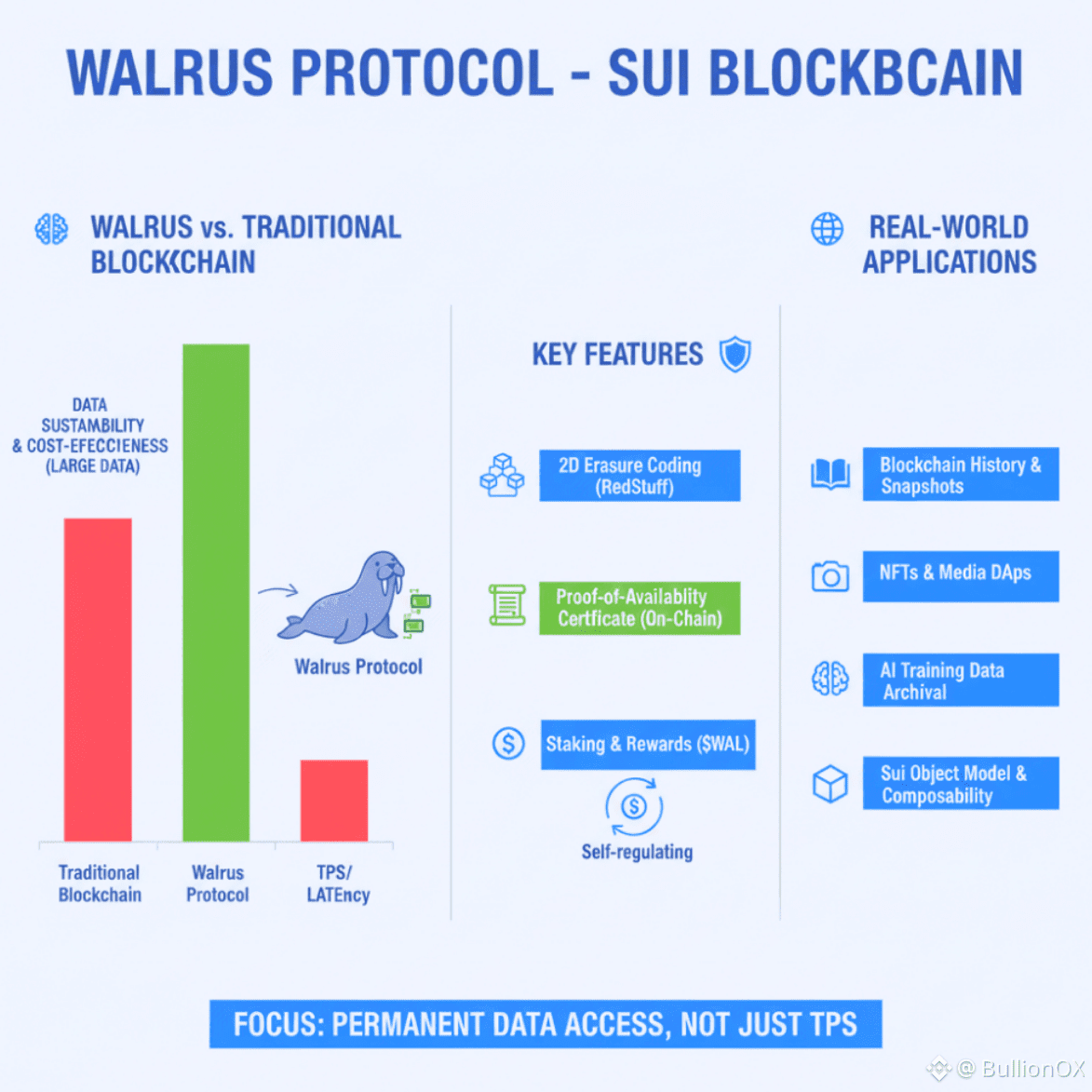

Walrus does not compete on raw throughput metrics. Instead, it focuses on keeping data available over the long term in a way that is economically sustainable. Conventional blockchain storage typically relies on full replication across validators, which works well for small amounts of state but becomes inefficient for gigabytes of images, videos, datasets, or archival records. Walrus addresses this with RedStuff, its two-dimensional erasure coding scheme. Each blob is encoded into slivers that are distributed across a network of independent storage nodes. The original data can be reconstructed from only a subset of these slivers, so Walrus keeps high fault tolerance with an effective replication factor of roughly 4x to 5x, far below what full replication requires.
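To make the "only a subset of slivers" idea concrete, here is a minimal sketch of a one-dimensional Reed-Solomon-style erasure code over a prime field. It is not RedStuff: Walrus uses a two-dimensional scheme that this sketch does not reproduce, and the helper names (`lagrange_eval`, `encode`, `decode`) and parameters (5 data symbols, 10 slivers) are hypothetical choices for illustration. What it shows is the general property: any k of n slivers are enough to rebuild the data, at a storage cost of n/k rather than one full copy per node.

```python
# Toy 1D Reed-Solomon-style erasure code over the prime field GF(P).
# Illustration only: this is NOT Walrus's RedStuff (a 2D scheme).
P = 2**31 - 1  # a Mersenne prime; every data symbol must be smaller than P


def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points) through `points`, mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # Division in GF(P) via Fermat's little theorem: den^(P-2) is den's inverse.
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total


def encode(data, n):
    """Systematic encoding: slivers at x = 0..k-1 are the data itself, x = k..n-1 are parity."""
    base = list(enumerate(data))  # data symbols sit at x = 0..k-1
    return [(x, lagrange_eval(base, x)) for x in range(n)]


def decode(slivers, k):
    """Rebuild the k data symbols from any k surviving (x, y) slivers."""
    if len(slivers) < k:
        raise ValueError("not enough slivers to reconstruct")
    chosen = slivers[:k]
    return [lagrange_eval(chosen, x) for x in range(k)]


data = [104, 101, 108, 108, 111]  # the bytes of b"hello"
n = 10
slivers = encode(data, n)
# Simulate losing half the storage nodes.
survivors = [s for s in slivers if s[0] not in (0, 2, 3, 7, 9)]
print(bytes(decode(survivors, len(data))))  # b'hello'
print(f"storage overhead: {n / len(data):.1f}x")  # storage overhead: 2.0x
```

With these toy parameters the overhead is 2x and any five of the ten slivers can be lost; by comparison, fully replicating a blob on 100 nodes would cost 100x. Walrus's quoted 4x to 5x comes from RedStuff's own design goals and parameters, which this sketch does not attempt to model.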

This design choice is what enables real long-term utility. Every uploaded blob produces an on-chain Proof of Availability certificate stored on Sui. With this certificate, any participant, whether a smart contract, a light client, or a developer, can verify the blob's availability without downloading the entire file. Storage nodes stake $WAL and are rewarded each epoch based on their performance, forming a self-regulating system in which availability is directly incentivized by storage fees that users pay over time.
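How Walrus checks its certificate internally is beyond the scope here, so the following only illustrates the general principle behind verifying data without downloading all of it: a Merkle inclusion proof. A short root hash, the kind of compact commitment that can live on-chain, lets anyone check a single sliver against it using a handful of sibling hashes. The tree layout and the helper names (`build_tree`, `prove`, `verify`) are assumptions for this sketch, not Walrus's actual commitment format.

```python
import hashlib


def h(b: bytes) -> bytes:
    return hashlib.sha256(b).digest()


def build_tree(leaves):
    """Return every level of a binary Merkle tree, leaf hashes first, root last.
    An odd node at the end of a level is paired with a copy of itself.
    Note: no domain separation between leaves and inner nodes (fine for a demo only)."""
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(levels, index):
    """Collect (sibling_hash, sibling_is_left) pairs from the leaf up to the root."""
    proof = []
    for level in levels[:-1]:
        if len(level) % 2:
            level = level + [level[-1]]
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        index //= 2
    return proof


def verify(root, leaf, proof):
    """Recompute the path from one leaf to the root; only len(proof) hashes are needed."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


slivers = [f"sliver-{i}".encode() for i in range(5)]
levels = build_tree(slivers)
root = levels[-1][0]  # the 32-byte commitment a chain could store
proof = prove(levels, 3)
print(verify(root, slivers[3], proof))   # True
print(verify(root, b"tampered", proof))  # False
```

The verifier touches one sliver plus three 32-byte hashes here, instead of all five slivers; the proof grows only logarithmically with the number of leaves.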

In practice, this architecture supports meaningful real-world applications. Blockchain history, such as old checkpoints or state snapshots, could be stored cheaply well into the future. Developers of media-heavy dApps can archive NFTs, game files, or AI training data with confidence that the content will not disappear because of link rot or a centralized provider going away. Integration with Sui's object model further improves composability: blobs become programmable primitives that smart contracts can reference, extend, transfer, or manage without any off-chain workaround.

Naturally, for storage to be truly permanent, the network has to keep growing: new nodes must join, incentives must remain attractive, and epoch transitions must be handled smoothly. Walrus's staking and reward structure is designed to mitigate these risks, but like any protocol, it will evolve as adoption grows.
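As a rough illustration of how performance-based rewards can tie a node's income to availability, the sketch below splits a hypothetical epoch reward pool in proportion to each node's stake multiplied by an availability score. The scoring rule, the integer units, and the names (`Node`, `split_epoch_rewards`) are invented for this example and are not Walrus's actual reward formula.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    stake: int         # staked WAL, in hypothetical integer base units
    availability: int  # hypothetical score: % of availability checks passed this epoch (0-100)


def split_epoch_rewards(pool: int, nodes: list[Node]) -> dict[str, int]:
    """Share `pool` in proportion to stake * availability (illustrative rule only)."""
    weights = {n.name: n.stake * n.availability for n in nodes}
    total = sum(weights.values())
    if total == 0:
        return {n.name: 0 for n in nodes}
    # Integer division: any leftover units simply stay in the pool in this sketch.
    return {name: pool * w // total for name, w in weights.items()}


nodes = [Node("a", 1000, 100), Node("b", 1000, 50), Node("c", 2000, 0)]
print(split_epoch_rewards(9000, nodes))  # {'a': 6000, 'b': 3000, 'c': 0}
```

In this toy model a node that serves nothing earns nothing regardless of how much it has staked, which is the kind of incentive alignment described above.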

What appeals to me most is the maturity of this focus. TPS may dominate the headlines, but permanent data access is the foundation of trust for Web3 applications that go beyond simple transfers. Walrus is quietly building infrastructure on that foundation, where data is not ephemeral but an asset worth verifying for years to come.