Every cycle, crypto rediscovers speed. Higher TPS numbers. Faster finality. Microsecond comparisons between chains. Benchmarks get posted. Screenshots get shared. The conversation usually stops there: if it’s faster, it must be better.

I used to think that way early in my career, when I was working with distributed systems outside of blockchain. Performance metrics were clean. You could improve them. You could prove the improvement. It felt objective.

But over time, I realized something uncomfortable: the systems that lasted the longest were rarely the ones that looked best in early performance charts.

They were the ones that behaved predictably when something went wrong.

Optimizing for speed in isolation isn’t that mysterious. You trim redundancy. You simplify validation. You assume more capable hardware. You narrow what the system has to check before accepting state changes. In controlled conditions, everything looks smooth. Production environments don’t behave like that.

Users show up in bursts. Integrations are written imperfectly. External dependencies stall. A service that “never fails” fails at exactly the wrong moment. And suddenly, the most important property isn’t how fast the system can move; it’s how calmly it handles stress.

That’s why I find Vanar Chain’s positioning interesting.

Vanar doesn’t seem obsessed with chasing headline throughput numbers. The emphasis appears to be elsewhere: on keeping behavior consistent in environments that are structurally messy, such as gaming, digital assets, stablecoin flows, and AI-driven execution.

Those workloads are not simple transfers from A to B.

In gaming environments, you don’t just process transactions. You process state changes that depend on other state changes. One in-game trigger can cascade into thousands of related updates. If coordination slips slightly, you don’t get an obvious crash. You get subtle inconsistency. And subtle inconsistency is harder to debug than outright failure.
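
To make the “subtle inconsistency” point concrete, here is a minimal Python sketch, assuming a hypothetical crafting action whose dependent updates are plain dictionary writes. The names (`craft_sword_updates`, `apply_in_place`, `apply_all_or_nothing`) are illustrative only and are not Vanar APIs. It contrasts applying a cascade one update at a time with staging the whole cascade and committing it only if every step succeeds.

```python
from copy import deepcopy


def craft_sword_updates():
    """Hypothetical in-game trigger ("craft a sword") that fans out into dependent updates."""
    return [
        ("inventory", "ore", -1),    # consume the raw material
        ("inventory", "sword", +1),  # grant the crafted item
        ("gold", "player", -50),     # charge the crafting fee
    ]


def apply_update(state, update):
    """Apply one (table, key, delta) update, rejecting it if a value would go negative."""
    table, key, delta = update
    new_value = state[table].get(key, 0) + delta
    if new_value < 0:
        raise ValueError(f"{table}[{key}] would go negative")
    state[table][key] = new_value


def apply_in_place(state, updates):
    # Each update lands immediately; a failure midway leaves the earlier ones applied.
    for update in updates:
        apply_update(state, update)


def apply_all_or_nothing(state, updates):
    # Stage every update on a copy and commit only if all of them succeed.
    staged = deepcopy(state)
    for update in updates:
        apply_update(staged, update)
    state.clear()
    state.update(staged)


if __name__ == "__main__":
    for strategy in (apply_in_place, apply_all_or_nothing):
        state = {"inventory": {"ore": 1}, "gold": {"player": 10}}
        try:
            strategy(state, craft_sword_updates())
        except ValueError as err:
            print(f"{strategy.__name__}: rejected ({err}); state = {state}")
```

With only 10 gold, the fee check fails on the last update. The in-place version still leaves the player holding a sword, with the ore consumed and no fee paid, and nothing crashes to signal it. The all-or-nothing version rejects the action and leaves the state exactly as it was. A real system would typically get this property from transaction-level atomicity rather than from `deepcopy`; the copy here only keeps the sketch self-contained.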

AI workflows make this more complicated. They aren’t linear. They branch. They depend on intermediate outputs. Timing matters. Retry logic matters. Determinism matters more than raw speed.
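
Retry logic is where determinism and correctness meet. Below is a minimal sketch, assuming a hypothetical `Ledger` that deduplicates submissions by a caller-chosen request id (an idempotency key). An agent whose replies get lost cannot tell whether its transfer ran, so it retries. Without deduplication each retry moves funds again; with it, the retry simply returns the original result. All names here (`Ledger`, `submit_with_retries`, `lost_replies`) are illustrative and not part of any Vanar or VANRY interface.

```python
class Ledger:
    """Hypothetical ledger; with deduplicate=True it remembers results by request id."""

    def __init__(self, balances, deduplicate=True):
        self.balances = dict(balances)
        self.deduplicate = deduplicate
        self.results = {}  # request_id -> result of the first successful execution

    def transfer(self, request_id, sender, receiver, amount):
        if self.deduplicate and request_id in self.results:
            # A retry of work that already ran: return the stored result, move nothing.
            return self.results[request_id]
        if self.balances.get(sender, 0) < amount:
            raise ValueError("insufficient balance")
        self.balances[sender] -= amount
        self.balances[receiver] = self.balances.get(receiver, 0) + amount
        result = (sender, receiver, amount)
        self.results[request_id] = result
        return result


def submit_with_retries(ledger, request_id, sender, receiver, amount, lost_replies):
    # The first `lost_replies` attempts reach the ledger, but their replies are "lost",
    # so the caller cannot tell whether they ran and retries with the same request id.
    for _ in range(lost_replies):
        ledger.transfer(request_id, sender, receiver, amount)
    return ledger.transfer(request_id, sender, receiver, amount)


if __name__ == "__main__":
    for dedup in (False, True):
        ledger = Ledger({"agent": 100}, deduplicate=dedup)
        submit_with_retries(ledger, "req-1", "agent", "shop", 30, lost_replies=2)
        print(f"deduplicate={dedup}: {ledger.balances}")
```

Two lost replies followed by a successful one leave the non-deduplicating ledger at `{'agent': 10, 'shop': 90}` (three transfers of 30) and the deduplicating one at `{'agent': 70, 'shop': 30}`. The speed of each call is identical in both cases; only the retry semantics differ.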

In my experience, distributed systems don’t usually collapse because they were too slow. They degrade because complexity outgrows the original architectural assumptions.

That’s the part most people ignore.

Early decisions, especially those made to make benchmarks look impressive, stick around. They get embedded into SDKs, documentation, and third-party tooling. Years later, when workloads change, those assumptions are still sitting there in the foundation.

Changing them isn’t just technical work. It’s ecosystem work. It’s coordination work.

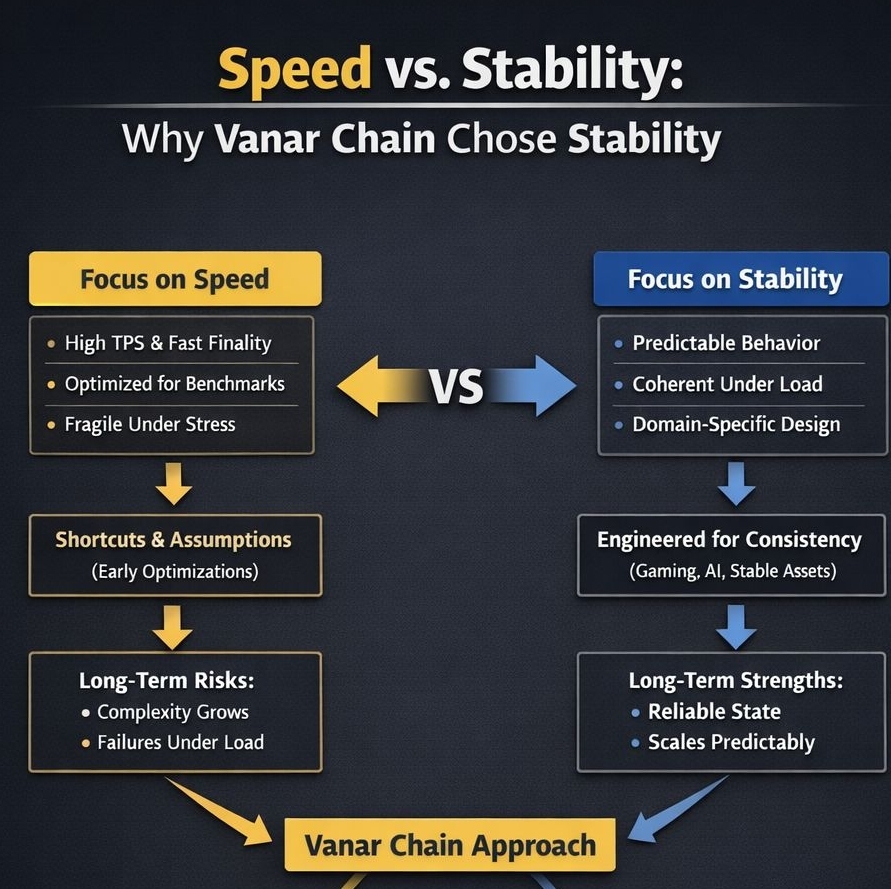

There are generally two paths infrastructure takes.

One path is broad and flexible at the beginning. Build something general-purpose. Let use cases define themselves later. Adapt as you go. This works, but it accumulates layers. Over time, those layers interact in ways nobody fully anticipated at launch.

The other path starts narrower. Define the primary operating environment early. Engineer deeply for it. Accept that not everything will fit perfectly, but ensure the core workloads remain stable even under pressure.

Vanar seems closer to the second approach. By leaning into interactive digital systems and AI-integrated workflows, it’s implicitly accepting constraints. That may limit certain benchmark optimizations. It may slow some experimental flexibility.

But constraints reduce ambiguity.

And ambiguity is where fragility hides.

Fragility doesn’t usually appear as a dramatic failure. It shows up as small synchronization mismatches. Occasional reconciliation delays. Edge cases that only appear during peak demand. Each one manageable. Together, increasingly expensive.

Eventually, you notice the system spending more energy defending itself than enabling growth.

Markets rarely reward that kind of long-term thinking immediately. Speed is easier to market. It’s a single number. Stability takes time to demonstrate, and by the time it becomes obvious, the narrative has usually moved on.

But infrastructure doesn’t care about narrative cycles.

If a network is meant to support gaming economies, digital assets, AI processes, and financial transfers simultaneously, what ultimately matters is whether its coordination model holds as complexity compounds.

Not whether it was fastest in year one.

For Vanar, and the broader VANRY ecosystem forming around it, the real evaluation won’t come from benchmark charts. It will come from how the system behaves after years of real usage, real integrations, and real stress.

Because in the end, distributed systems aren’t judged by how fast they can move under ideal conditions.

They’re judged by whether they remain coherent when conditions stop being ideal.