I remember the first time I integrated an “AI-powered” feature into a smart contract. It felt impressive for about a week. The demo worked. The chatbot responded. The dashboard lit up with green metrics. And then the cracks showed. The AI wasn’t actually part of the chain. It was hovering around it, stitched in through APIs, reacting to events but never really understanding them. That’s when it hit me that most AI-on-blockchain projects are solving for optics, not structure.

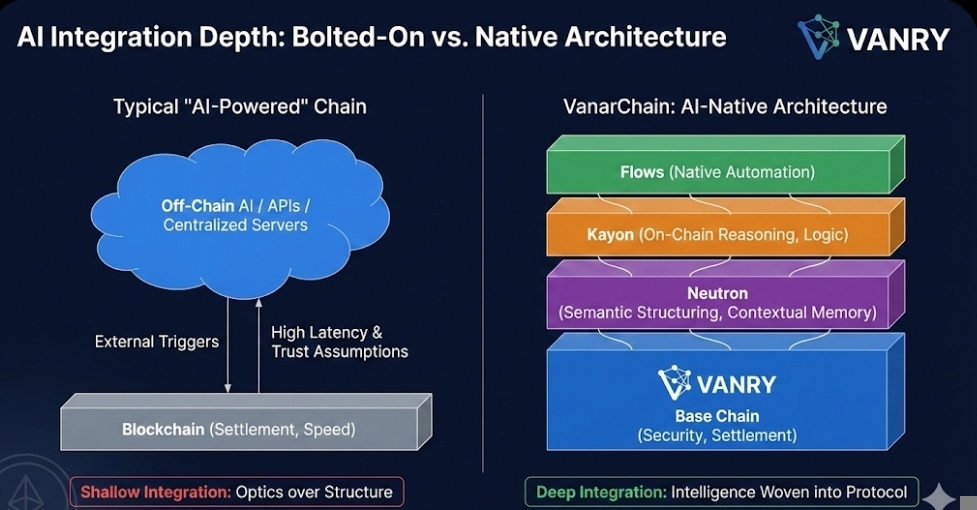

Right now the market is crowded with chains claiming AI alignment. If you scroll through announcements on X, including updates from VanarChain, you’ll see the same words repeated everywhere. AI integration. Intelligent automation. Agent-ready systems. But when I look underneath, most of it comes down to one of three things. Hosting AI models off-chain. Using oracles to fetch AI outputs. Or letting smart contracts trigger AI APIs. None of that changes the foundation of how the chain itself handles data.

And that foundation matters more than the headline feature.

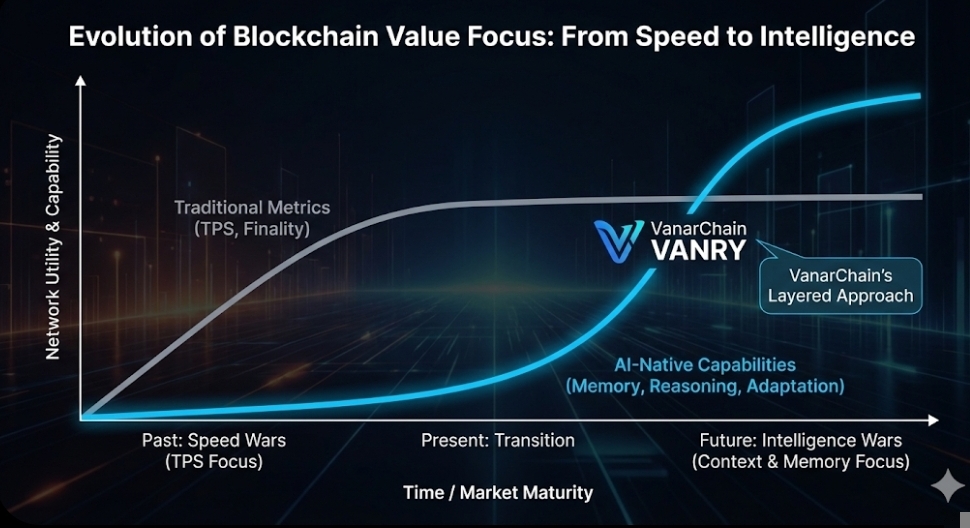

Traditional smart contracts are deterministic by design. You give them inputs, they produce outputs. No ambiguity. That’s useful for finance. It’s less useful for intelligence. Intelligence needs context. It needs memory. It needs a way to interpret rather than just execute. If you deploy on a typical high-throughput chain that boasts 10,000 or even 50,000 transactions per second, what you’re really getting is speed without understanding. The contract runs faster. It doesn’t think better.
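To make "deterministic by design" concrete, here is a minimal sketch in Python rather than a real contract language. The `transfer` function and the account names are hypothetical, chosen only for illustration. It shows a pure state transition: the same inputs always produce the same output, and nothing in the function knows why the transfer happened.

```python
def transfer(balances: dict, sender: str, recipient: str, amount: int) -> dict:
    """Pure state transition: returns a new balance map, never mutates the input."""
    # A rejected transfer leaves the state unchanged.
    if amount <= 0 or balances.get(sender, 0) < amount:
        return dict(balances)
    updated = dict(balances)
    updated[sender] -= amount
    updated[recipient] = updated.get(recipient, 0) + amount
    return updated


start = {"alice": 100, "bob": 20}

# Running the same call twice on the same state gives identical results.
first = transfer(start, "alice", "bob", 30)
second = transfer(start, "alice", "bob", 30)
print(first == second)  # True
print(first)            # {'alice': 70, 'bob': 50}
```

The function records what changed. Any notion of intent or history would have to come from somewhere else, which is the gap the rest of this piece is about.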

Understanding that helps explain why so many AI integrations feel shallow.

Take transaction finality as an example. Sub-second finality, say 400 milliseconds, sounds impressive. And it is. But what is being finalized? A state change. A balance update. A token transfer. The chain confirms that something happened. It does not understand why it happened or what that implies for future interactions. So developers build layers on top. Off-chain databases. Memory servers. Indexers. Suddenly the “AI” lives outside the blockchain, and the blockchain becomes a settlement rail again.

That momentum creates another effect. Complexity drifts outward.

Every time you add an external reasoning engine, you increase latency and trust assumptions. If your AI model sits on a centralized server and feeds decisions back into a contract, you’ve just reintroduced a point of failure. If that server goes down for even 30 seconds during high activity, workflows stall. I’ve seen this during market spikes. A DeFi automation tool froze because its off-chain AI risk module timed out. The blockchain itself was fine. The intelligence layer wasn’t.
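The failure mode is easy to reproduce in a small sketch. Everything here is an assumption for illustration: `slow_risk_model` stands in for an off-chain AI service, and the conservative fallback score is one possible mitigation, not what the tool I mentioned actually did.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout


def call_with_fallback(risk_fn, position, timeout_s, fallback_score):
    """Ask an off-chain risk model for a score, but never wait longer than timeout_s."""
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(risk_fn, position)
    try:
        return future.result(timeout=timeout_s), "model"
    except FutureTimeout:
        # The external service is too slow: use a conservative default instead of stalling.
        return fallback_score, "fallback"
    finally:
        # Do not block the caller on the slow worker thread.
        pool.shutdown(wait=False)


def slow_risk_model(position):
    """Hypothetical off-chain model that is overloaded during a market spike."""
    time.sleep(0.2)
    return 0.1


def fast_risk_model(position):
    """Hypothetical off-chain model responding normally."""
    return 0.1


position = {"collateral": 1000, "debt": 400}
print(call_with_fallback(fast_risk_model, position, 0.05, 1.0))  # (0.1, 'model')
print(call_with_fallback(slow_risk_model, position, 0.05, 1.0))  # (1.0, 'fallback')
```

Either way, the decision that reaches the contract depends on a server the chain cannot verify. The fallback keeps the workflow moving, but it does not remove the trust assumption.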

This is where VanarChain’s structure starts to feel different, at least conceptually.

When I first looked at its architecture, what struck me was not TPS. It was layering. The base chain secures transactions. Then you have Neutron handling semantic data structuring. Kayon focuses on reasoning. Flows manages automation logic. On the surface, that sounds like branding. Underneath, it is an attempt to push context and interpretation closer to the protocol layer.

Neutron, for instance, is positioned around structured memory. That phrase sounds abstract until you think about what blockchains normally do. They store state. They do not store meaning. Semantic structuring means data is organized in a way that can be referenced relationally rather than just sequentially. Practically, that reduces how often you need external databases to reconstruct user behavior. It changes where intelligence lives.
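The difference between sequential and relational access can be sketched in a few lines. This is not Neutron's actual design, which I have not seen specified at this level. The event fields and the `RelationalStore` class are hypothetical and exist only to show the contrast.

```python
from collections import defaultdict

# A sequential log: events are stored only in the order they happened.
events = [
    {"block": 1, "user": "alice", "action": "deposit", "amount": 50},
    {"block": 2, "user": "bob", "action": "deposit", "amount": 10},
    {"block": 3, "user": "alice", "action": "borrow", "amount": 20},
]


def history_by_scan(log, user):
    """Sequential access: rebuild a user's history by scanning every event."""
    return [event for event in log if event["user"] == user]


class RelationalStore:
    """Relational access: events are indexed by user at write time."""

    def __init__(self):
        self._by_user = defaultdict(list)

    def append(self, event):
        self._by_user[event["user"]].append(event)

    def history(self, user):
        # Direct lookup, no scan over unrelated events.
        return list(self._by_user.get(user, []))


store = RelationalStore()
for event in events:
    store.append(event)

# Both approaches return the same history; only the cost of getting it differs.
print(store.history("alice") == history_by_scan(events, "alice"))  # True
```

On most chains the scan version is what an external indexer does for you. The claim behind structured memory is that the second shape moves closer to the protocol.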

Now, there are numbers attached to this stack. VanarChain has highlighted validator participation in the low hundreds and block confirmation times that compete in the sub-second range. On paper, those figures put it in the same performance band as other modern Layer 1 networks. But performance alone is not the differentiator. What those numbers suggest, if they hold, is that the chain is not sacrificing base security for AI features. The underlying throughput remains steady while additional layers operate above.

Meanwhile, Kayon introduces reasoning logic on-chain. This is where most chains hesitate. Reasoning is heavier than execution. It consumes more computational resources. If reasoning happens directly in protocol space, you risk congestion. If it happens off-chain, you risk centralization. Vanar’s approach attempts to keep reasoning verifiable without collapsing throughput. If this holds under sustained load, that balance could matter.

Of course, skepticism is healthy here.

On-chain reasoning can increase gas costs. It can introduce latency. It can make audits more complex because you are no longer reviewing simple deterministic logic but contextual flows. There is also the question of scale. Handling a few thousand intelligent interactions per day is different from handling millions. We have seen other networks promise intelligent layers only to throttle performance when activity spikes.

Still, early signs suggest VanarChain is structuring for AI from the start rather than bolting it on later. That difference is subtle but important. Retrofitting AI means adjusting an architecture built for finance. Designing with AI in mind means structuring data so that context is not an afterthought.

The broader market context reinforces this shift. AI agent frameworks are gaining traction. Machine-to-machine transactions are being tested. In 2025 alone, AI-linked crypto narratives drove billions in token trading volume across exchanges. But volume does not equal functionality. Many of those tokens sit on chains that cannot natively support persistent memory or contextual automation without heavy off-chain infrastructure.

That disconnect is quiet but real.

If autonomous agents are going to transact, negotiate, and adapt on-chain, they need more than fast settlement. They need persistent memory. They need data structured so that it can be referenced over time. They need automation that does not rely entirely on external servers. VanarChain’s layered model is attempting to address that foundation issue.

Whether it succeeds remains to be seen.

Execution will determine credibility. Validator growth must stay steady. Throughput must remain consistent during stress cycles. AI layers must not degrade performance. And developers need real use cases, not demos. If those conditions are met, the difference between AI-added and AI-native infrastructure becomes clearer.

What I am seeing more broadly is a quiet migration in focus. Speed wars are losing their shine. Intelligence wars are starting. Chains are no longer competing just on block time but on how well they can host systems that think, remember, and adapt. That is a harder metric to quantify. It is also harder to fake.

When I step back, the real issue is not whether AI exists on-chain. It is where it lives in the stack. If intelligence sits on top, loosely connected, it can always be unplugged. If intelligence is woven into the structure, it becomes part of the network’s identity.

And that is the point most AI-on-blockchain projects miss.

They optimize the feature. They ignore the foundation.

In the long run, intelligence that is earned at the protocol layer will matter more than intelligence that is attached at the edges.


Why Most AI-on-Blockchain Projects Miss the Point And What VanarChain Is Structuring Differently
