Privacy stops being protection the moment it cannot be explained under pressure.

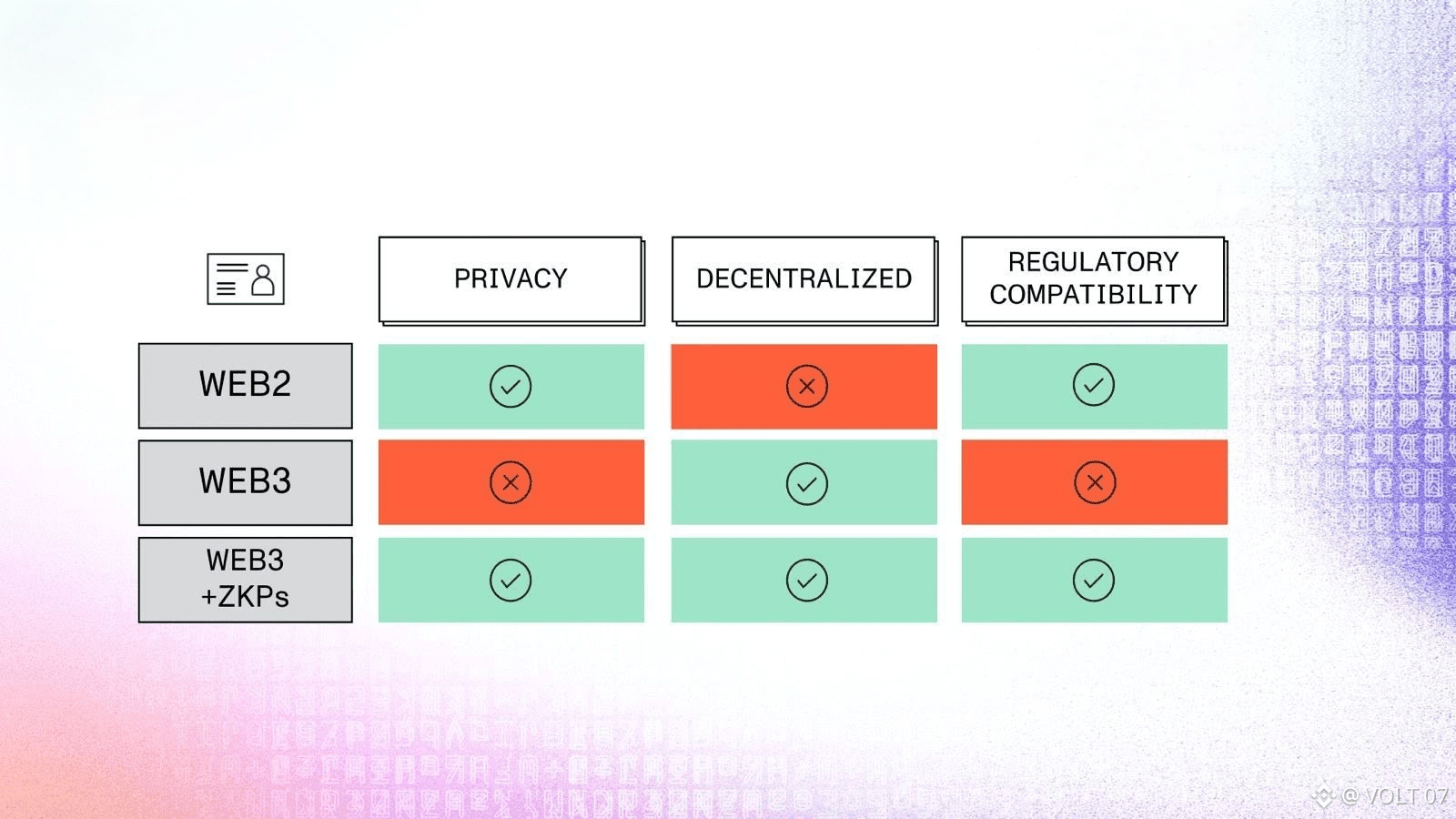

In Web3, privacy is often treated as an inherent good: more encryption, more obfuscation, fewer visible signals. But in real financial infrastructure, privacy is not judged by how much it hides; it is judged by what happens when someone demands answers.

That is the line where privacy flips from asset to liability.

This is the line that separates marketing-grade privacy from infrastructure-grade privacy, and it is exactly where Dusk Network positions itself.

The hidden risk: privacy without accountability

Privacy becomes dangerous when it creates:

delayed proofs,

ambiguous responsibility,

discretionary explanations,

reliance on trust during audits.

At that point, privacy no longer protects users or institutions. It protects uncertainty.

Regulators, institutions, and risk committees are not afraid of hidden data.

They are afraid of unprovable correctness.

How privacy turns into liability in poorly designed systems

Privacy-first systems fail when:

proofs require reconstruction instead of being native,

disclosure paths are improvised rather than deterministic,

correctness depends on operator behavior,

failure modes are unclear or asymmetric.

In these systems, the first serious audit reframes privacy as obstruction, even if no wrongdoing occurred.

That reputational shift is irreversible.

Why “we can explain it” is not good enough

Explanations imply interpretation.

Interpretation implies discretion.

Discretion implies risk.

Modern regulation does not want explanations. It wants:

binary answers,

cryptographic guarantees,

immediate verification,

minimal but sufficient disclosure.

If privacy delays or complicates that process, it becomes a liability regardless of intent.
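To make "binary answers" and "minimal but sufficient disclosure" concrete, here is a minimal sketch using salted hash commitments. It is not Dusk's actual mechanism; it is a much simpler stand-in, and the field names, record values, and function names are all hypothetical. Each field of a private record is committed to separately, only the commitments are public, and revealing one field plus its salt lets anyone check that field with a yes-or-no result while the other fields stay hidden.

```python
import hashlib
import hmac
import secrets


def commit(value: str, salt: bytes) -> str:
    """Salted SHA-256 commitment to one field value."""
    return hashlib.sha256(salt + value.encode("utf-8")).hexdigest()


def commit_record(record: dict) -> tuple:
    """Commit to every field separately so each can be disclosed on its own."""
    salts = {name: secrets.token_bytes(16) for name in record}
    commitments = {name: commit(value, salts[name]) for name, value in record.items()}
    return commitments, salts  # commitments are public, salts stay private


def verify_disclosure(commitments: dict, field: str, value: str, salt: bytes) -> bool:
    """Binary answer: does the disclosed value match the public commitment?"""
    expected = commitments.get(field)
    if expected is None:
        return False
    return hmac.compare_digest(expected, commit(value, salt))


# Hypothetical private record; none of these values come from a real system.
record = {"jurisdiction": "NL", "amount": "250000", "counterparty": "ACME-FUND"}
public_commitments, private_salts = commit_record(record)

# Scoped disclosure: reveal only the jurisdiction field and its salt.
honest = verify_disclosure(
    public_commitments, "jurisdiction", "NL", private_salts["jurisdiction"]
)
# A different value checked against the same commitment is rejected.
forged = verify_disclosure(
    public_commitments, "jurisdiction", "US", private_salts["jurisdiction"]
)
print(honest, forged)
```

The verifier needs no explanation from the operator: the check either passes or fails. A production system would replace the reveal step with a zero-knowledge proof so that even the disclosed field can stay hidden while a rule about it is proven.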

Dusk’s core distinction: privacy that never competes with proof

Dusk is not designed to hide activity.

It is designed to separate execution confidentiality from verification certainty.

This means:

execution remains private,

correctness remains provable,

compliance remains enforceable,

disclosure remains scoped and immediate.

Privacy never becomes a negotiating position.

Proof is always the final authority.
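One way to picture disclosure that is "scoped and immediate" is a Merkle inclusion proof. This is a simplified illustration, not a description of Dusk's protocol, and the record contents and function names are assumptions. A batch of private records is summarized by a single public root. An auditor who asks about one record receives that record plus a short proof, and can verify it against the root without seeing any other record.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root_and_proof(leaves: list, index: int) -> tuple:
    """Build the root over a non-empty list of leaves and an inclusion proof for leaves[index]."""
    level = [h(b"\x00" + leaf) for leaf in leaves]  # 0x00 prefix marks leaf hashes
    proof = []
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # simplification: duplicate the last node on odd levels
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))  # (hash, sibling_is_left)
        level = [
            h(b"\x01" + level[i] + level[i + 1])  # 0x01 prefix marks inner nodes
            for i in range(0, len(level), 2)
        ]
        index //= 2
    return level[0], proof


def verify_inclusion(root: bytes, leaf: bytes, proof: list) -> bool:
    """Recompute the path from the disclosed leaf up to the public root."""
    node = h(b"\x00" + leaf)
    for sibling, sibling_is_left in proof:
        if sibling_is_left:
            node = h(b"\x01" + sibling + node)
        else:
            node = h(b"\x01" + node + sibling)
    return node == root


# Hypothetical private records; only the root would be published.
records = [b"tx-1: private", b"tx-2: private", b"tx-3: private"]
root, proof = merkle_root_and_proof(records, 2)

# The auditor sees only records[2], the proof, and the public root.
included = verify_inclusion(root, records[2], proof)
rejected = verify_inclusion(root, b"tx-3: altered", proof)
print(included, rejected)
```

The proof is produced by computation rather than negotiation, which is the property the section describes: the answer does not depend on the operator's cooperation or narrative.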

When regulators lose patience, systems are reclassified

The most dangerous moment for any privacy system is not a hack; it is hesitation.

If a regulator asks:

Was this rule enforced?

Is this transaction compliant?

and the system responds with:

delays,

explanations,

partial logs,

“we’re working on it,”

then privacy has already failed. The system is no longer evaluated as infrastructure. It is evaluated as risk.

Dusk is built to avoid that moment entirely.

Why transparent systems fail differently, but not better

Fully transparent chains avoid the “proof hesitation” problem by exposing everything. That creates a different liability:

permanent strategy leakage,

MEV as a structural tax,

unfair execution,

institutional non-participation.

Transparency avoids a regulatory failure mode by embracing an economic one.

Dusk rejects that tradeoff.

The survivable model: privacy with zero excuses

Privacy is only defensible when:

failure does not create advantage,

proof does not require permission,

audits do not rely on narrative,

accountability is cryptographic.

Dusk’s architecture assumes scrutiny, not trust.

That assumption is what keeps privacy protective instead of radioactive.

Why institutions are hypersensitive to this distinction

Institutions do not fear privacy.

They fear:

being unable to respond instantly,

being forced to justify architecture under pressure,

being exposed to regulatory ambiguity,

being associated with systems that “hesitate.”

Dusk aligns with institutional reality by ensuring privacy never obstructs certainty.

The moment privacy becomes a liability is predictable

It happens when:

stress replaces calm,

deadlines replace demos,

regulators replace builders,

proof replaces promises.

Systems that survive that moment were designed for it from day one.

I stopped asking whether privacy is strong.

Strength is easy to claim.

I started asking:

Does privacy ever force the system to ask for time, trust, or explanation?

If the answer is yes, privacy is already a liability.

Dusk earns relevance by ensuring that privacy is never something the system has to defend, only something it uses while proof speaks for itself.