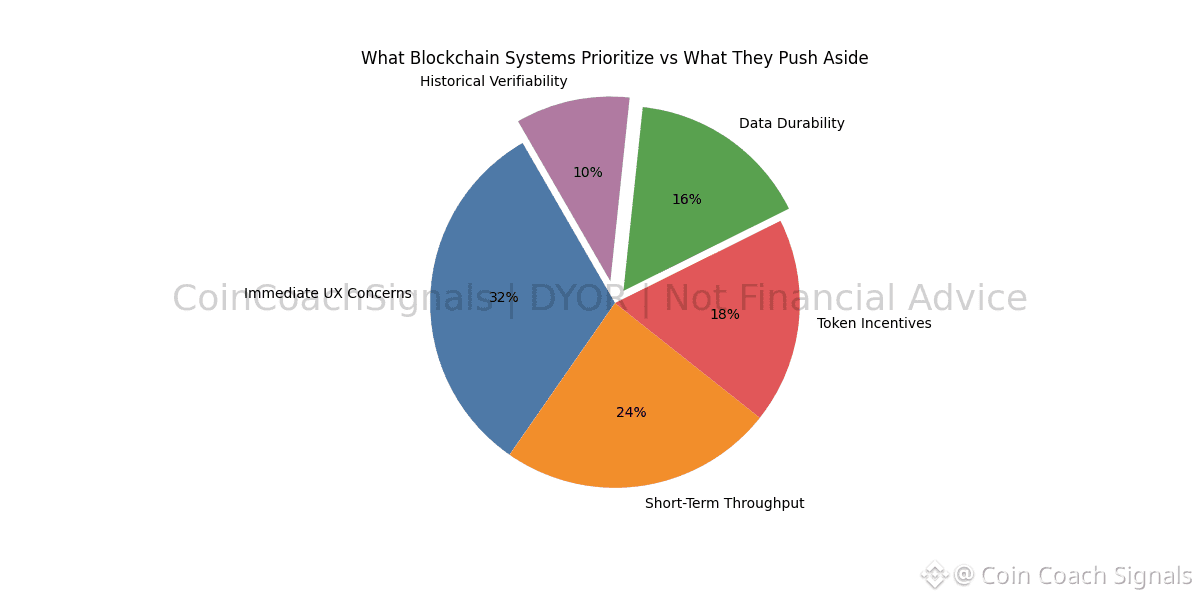

Blockchains are usually judged by what sits on the surface. Execution speed. Fees. User experience. Composability. But underneath all of that is a quieter dependency that ultimately determines whether any of those layers can be trusted over time. Data. Where it lives, who is responsible for keeping it available, how long it persists, and under what conditions it can still be verified. Walrus is built around the idea that this layer can no longer be treated as secondary.

In early blockchain systems, data availability was almost taken for granted. Everything lived onchain, and costs were manageable because usage was limited. As ecosystems grew, that assumption started to break. Storing large volumes of data directly on execution layers became expensive and increasingly impractical. The industry responded by pushing data offchain, often accepting weaker guarantees in exchange for lower costs. Walrus exists to address that tradeoff directly instead of shifting the problem elsewhere.

The protocol starts from a fairly simple observation. Scalable blockchains do not usually fail because execution slows down. They fail when data becomes unreliable. Rollups struggle to verify state. Applications cannot reconstruct history. Users lose confidence not because transactions stop, but because the underlying data can no longer be trusted to persist. Walrus treats this failure mode as its primary design constraint.

Rather than forcing developers to choose between cost efficiency and security, Walrus separates responsibilities cleanly. Execution layers focus on computation. Walrus focuses on ensuring that the data those layers depend on remains available and verifiable. Large data blobs live outside execution environments, but their existence and integrity are anchored cryptographically. This allows systems to scale without quietly eroding trust assumptions.
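To make the idea of cryptographic anchoring a little more concrete, here is a minimal sketch in Python. It is not Walrus's actual scheme or API. It assumes the simplest possible commitment, a SHA-256 digest, and uses two plain dictionaries as stand-ins for an offchain blob store and an onchain record. The names `offchain_store`, `onchain_anchors`, `publish`, and `fetch_verified` are all hypothetical and exist only for illustration.

```python
import hashlib

# Hypothetical stand-ins: a real system would use a storage network
# and a blockchain, not in-memory dictionaries.
offchain_store: dict[str, bytes] = {}   # large blobs live here
onchain_anchors: dict[str, str] = {}    # only small digests live here


def commit(blob: bytes) -> str:
    """Return a SHA-256 digest that acts as the blob's commitment."""
    return hashlib.sha256(blob).hexdigest()


def publish(blob_id: str, blob: bytes) -> None:
    """Store the blob offchain and anchor its digest 'onchain'."""
    offchain_store[blob_id] = blob
    onchain_anchors[blob_id] = commit(blob)


def fetch_verified(blob_id: str) -> bytes:
    """Fetch a blob and check it against the anchored digest.

    Raises KeyError if the blob or its anchor is missing, and
    ValueError if the stored bytes no longer match the commitment.
    """
    blob = offchain_store[blob_id]
    if commit(blob) != onchain_anchors[blob_id]:
        raise ValueError(f"blob {blob_id!r} does not match its anchor")
    return blob
```

The point of the sketch is the split in responsibilities: the execution side only ever holds a short digest, while the heavy data sits elsewhere, yet anyone who retrieves the blob can check it against the anchor. A digest alone proves integrity, not availability. Keeping the data retrievable over time is the separate problem the rest of this article is about.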

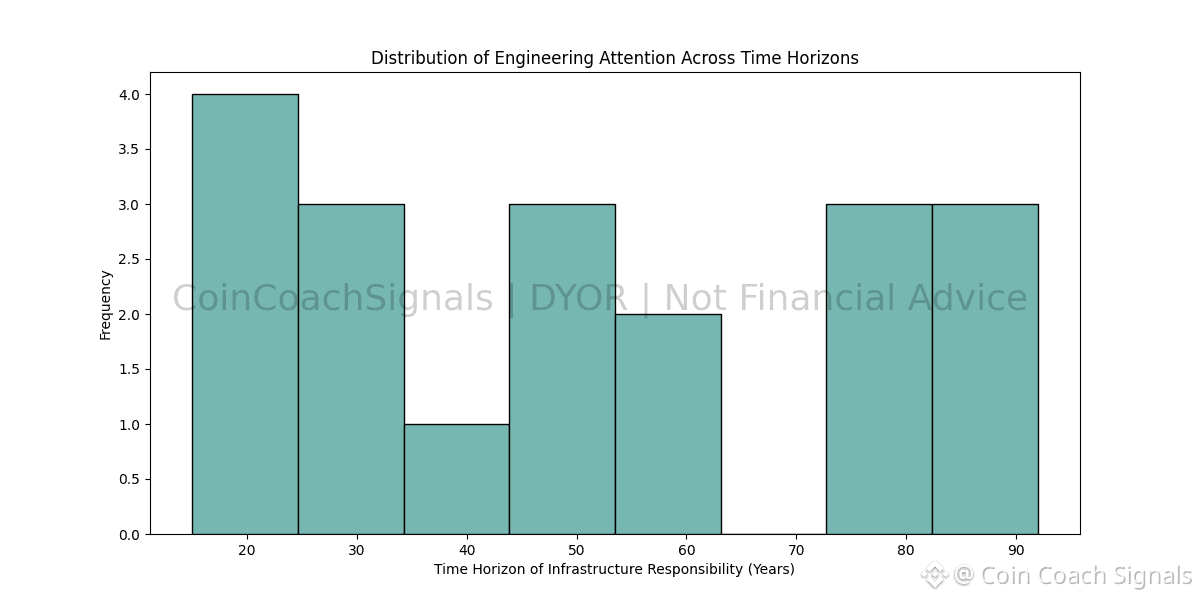

One of Walrus’s more understated strengths is its focus on durability instead of peak performance. Many infrastructure projects optimize for throughput or headline metrics. Walrus optimizes for time. Data is not just available today, but expected to remain accessible months and years into the future. That distinction matters for applications that cannot tolerate disappearing history. Financial records, rollup states, governance data, and archived information all require guarantees that extend beyond short incentive cycles.

Economic predictability is what makes that durability workable. Data availability only matters if participants can plan around costs over long periods. When fees swing wildly, long-term use becomes risky and unattractive.

Walrus aims to keep those expectations clear. Its incentives are designed so storage providers are rewarded for staying reliable over time, not for short bursts of participation. That shift turns storage from a speculative activity into something closer to real infrastructure.

The relevance of this approach becomes clearer as modular blockchain design matures. Execution, settlement, and data availability are no longer bundled by default. Each layer is optimized separately. In this model, data availability becomes a shared dependency across many systems. Walrus positions itself as a neutral layer that does not compete for control, but provides a service others rely on. That neutrality matters. Infrastructure that tries to dominate tends to fragment ecosystems. Infrastructure that complements tends to endure.

Developers working on rollups and Layer 2s feel this difference immediately. Their applications rely on dependable access to historical data for things like verification and dispute handling. When data availability is uncertain, that risk gets pushed onto the application. Walrus takes much of that weight away. It allows developers to trust that data will remain available and verifiable, so their attention stays on building the product, not on preparing for things to break.

Walrus also changes how storage providers are viewed. They are not encouraged to chase short-term yield. Instead, they are expected to act as long-term custodians of availability. The incentives favor uptime and consistency over quick rewards. Over time, that pushes the network to behave like real infrastructure, where reliability is what truly matters, rather than like an ongoing experiment.

Walrus also fits a broader shift in industry priorities. As blockchain adoption grows, the cost of data loss rises. Tolerance for experimentation declines. Systems that cannot guarantee availability under stress are gradually sidelined. In that environment, dedicated data availability layers are no longer optional components. They become prerequisites for serious deployment. Walrus accepts this reality without trying to redefine the rest of the stack.

What stands out most is how narrow Walrus keeps its focus. It does not claim to solve execution bottlenecks, governance challenges, or application design. It concentrates on a single problem and treats it with the seriousness it demands. That restraint creates coherence. Design decisions reinforce the same objective rather than competing with one another.

As blockchain systems move from experimentation toward sustained operation, the invisible layers become decisive. Users may never interact directly with data availability protocols, but they depend on them every time state is reconstructed or history is verified. Walrus is building for that invisible layer. Not for attention, but for necessity.

Over the long term, infrastructure that lasts is rarely the most visible. It is the most dependable. Walrus is positioning itself in that category, quietly reinforcing the foundations that scalable blockchain systems cannot function without.

For educational purposes only. Not financial advice. Do your own research.
