In most decentralized systems, data availability is assumed, not proven. A rollup publishes data. A storage layer accepts files. A node promises to keep serving them. Everything appears fine until the day someone needs that data and it is gone.

The failure is subtle but catastrophic. The data might still exist somewhere, but no one can prove it does. No one can reconstruct it. No one can verify it. At that moment, the system has lost its past.

Walrus was built because that kind of failure is unacceptable for blockchains, AI agents, and decentralized finance.

@Walrus 🦭/acc does not just store data. It turns availability into something that can be measured, enforced, and proven.

That distinction is what makes it infrastructure rather than storage.

Why availability cannot be assumed in decentralized systems

In centralized systems, availability is a service-level agreement. If Amazon or Google fails to deliver data, you call support. The operator is responsible.

In decentralized systems, there is no operator. Every node is independent. Incentives drift. Hardware fails. Networks partition. Rational actors stop serving data when it is no longer profitable.

This means the default state of decentralized storage is entropy.

Most protocols try to fight this with replication. Store the same file on many nodes and hope enough of them stay online. But replication does not give you certainty. It gives you probability.
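To make "probability, not certainty" concrete, here is a small sketch of the arithmetic behind plain replication. It assumes every replica is online independently with the same probability, which is a simplification, and the function name and numbers are illustrative only.

```python
def replication_availability(p_node_up: float, copies: int) -> float:
    """Probability that at least one of `copies` replicas is online.

    Assumes replicas fail independently, each being online with
    probability `p_node_up` (an idealized model, not a measurement).
    """
    if not 0.0 <= p_node_up <= 1.0:
        raise ValueError("p_node_up must be between 0 and 1")
    if copies < 1:
        raise ValueError("copies must be at least 1")
    # The file is unavailable only if every replica is offline at once.
    return 1.0 - (1.0 - p_node_up) ** copies


# Hypothetical inputs: nodes that are up 90% of the time.
for copies in (1, 3, 5):
    print(copies, replication_availability(0.9, copies))
```

Under this model three copies at 90% uptime give roughly 99.9% availability. Adding copies pushes the number closer to 1, but for any uptime below 100% it never gets there, and nothing in the scheme tells you which case you are in today.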

Walrus rejects probability.

Walrus defines availability as a provable condition

In Walrus, data is not a blob stored on a disk. It is transformed into a set of cryptographic commitments.

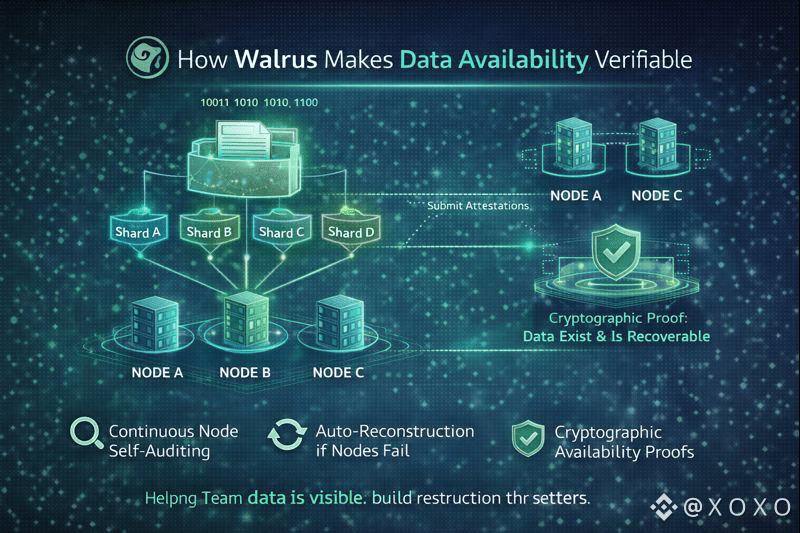

When data enters the network, it is:

- encoded into fragments
- distributed across independent operators
- bound to cryptographic proofs

These proofs are not about what was written. They are about what is still retrievable.
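The three steps above can be illustrated with a toy sketch. This is not Walrus's actual encoding or commitment scheme: it assumes a single XOR parity fragment (so it survives the loss of only one fragment) and plain SHA-256 hashes as commitments, and every function name here is hypothetical.

```python
import hashlib
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal-size fragments plus one XOR parity fragment."""
    size = -(-len(data) // k)                  # ceiling division
    padded = data.ljust(size * k, b"\0")       # zero-pad to a multiple of k
    fragments = [padded[i * size:(i + 1) * size] for i in range(k)]
    parity = reduce(xor_bytes, fragments)
    return fragments + [parity]


def commit(fragments: List[bytes]) -> List[str]:
    """One SHA-256 commitment per fragment."""
    return [hashlib.sha256(f).hexdigest() for f in fragments]


def recover(fragments: List[Optional[bytes]]) -> List[bytes]:
    """Rebuild at most one missing fragment by XOR-ing the survivors."""
    missing = [i for i, f in enumerate(fragments) if f is None]
    if len(missing) > 1:
        raise ValueError("this toy code tolerates only one missing fragment")
    restored = list(fragments)
    if missing:
        survivors = [f for f in fragments if f is not None]
        restored[missing[0]] = reduce(xor_bytes, survivors)
    return restored


# Each fragment would go to a different operator; the commitments stay public.
data = b"rollup batch #1"
fragments = encode(data, k=4)
commitments = commit(fragments)

damaged = list(fragments)
damaged[2] = None                              # one operator disappears
restored = recover(damaged)
assert commit(restored) == commitments         # rebuilt data matches the commitments
```

The point of the sketch is the shape of the idea: fragments are spread out, the commitments let anyone check a fragment without trusting whoever served it, and redundancy lets the network rebuild what an operator lost. A production system would use a code that tolerates many simultaneous losses.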

Nodes in Walrus are required to continuously demonstrate that they can serve their assigned data fragments. If they cannot, the system detects it. If some nodes fail, the missing data is reconstructed from the remaining fragments, as long as enough of them survive.
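One generic way to make a node "demonstrate" that it still holds a fragment is a Merkle spot check: the verifier keeps only a root hash, picks a random chunk, and the node must return that chunk with a valid path to the root. The sketch below shows that general technique under stated assumptions. It is not the challenge protocol Walrus actually runs, and all names are hypothetical.

```python
import hashlib
import secrets
from typing import List, Tuple


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_levels(chunks: List[bytes]) -> List[List[bytes]]:
    """All tree levels, leaves first. Odd-length levels repeat their last node."""
    level = [h(b"\x00" + c) for c in chunks]   # 0x00 prefix marks a leaf
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]
        # 0x01 prefix marks an interior node
        level = [h(b"\x01" + level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(chunks: List[bytes], index: int) -> Tuple[bytes, List[bytes]]:
    """Storage node's answer: the challenged chunk and its sibling path."""
    path = []
    i = index
    for level in merkle_levels(chunks)[:-1]:   # every level except the root
        if len(level) % 2:
            level = level + [level[-1]]
        path.append(level[i ^ 1])              # sibling of node i
        i //= 2
    return chunks[index], path


def verify(root: bytes, index: int, chunk: bytes, path: List[bytes]) -> bool:
    """Verifier's check, using only the root it stored at upload time."""
    node = h(b"\x00" + chunk)
    for sibling in path:
        if index % 2 == 0:
            node = h(b"\x01" + node + sibling)
        else:
            node = h(b"\x01" + sibling + node)
        index //= 2
    return node == root


# The verifier keeps `root`; the node keeps `chunks`.
chunks = [bytes([i]) * 32 for i in range(5)]
root = merkle_levels(chunks)[-1][0]

challenge = secrets.randbelow(len(chunks))     # unpredictable chunk index
chunk, path = prove(chunks, challenge)
assert verify(root, challenge, chunk, path)
```

Because the index is chosen at random for every challenge, a node that has discarded part of its fragment will eventually be asked for a chunk it cannot produce. Repeating the check over time is what turns a one-off upload receipt into ongoing evidence of retrievability.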

This creates something that does not exist in traditional storage systems: live verifiability.

The network does not trust that data is available. It proves that it is.

From files to availability guarantees

Most storage networks treat data as static. Upload once, retrieve later.

Walrus treats data as an ongoing obligation. The network is constantly checking whether the data still exists in enough places to be reconstructable. Availability becomes a moving target that must be maintained.
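The "ongoing obligation" can be pictured as a recurring health check: count how many fragment holders passed their latest audit and compare that with the minimum needed to rebuild the data. The thresholds, field names, and status labels below are assumptions made for illustration, not parameters taken from Walrus.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class BlobPolicy:
    min_fragments: int   # fewest fragments from which the data can be rebuilt
    repair_margin: int   # start repairing when this close to the minimum


def assess(policy: BlobPolicy, audit_results: Dict[str, bool]) -> str:
    """Classify a blob from the latest audit outcome of each fragment holder."""
    live = sum(1 for passed in audit_results.values() if passed)
    if live < policy.min_fragments:
        return "unrecoverable"
    if live <= policy.min_fragments + policy.repair_margin:
        return "repair"      # still reconstructable, but too close to the edge
    return "healthy"


# Hypothetical audit round: 6 holders, any 3 fragments suffice, margin of 1.
policy = BlobPolicy(min_fragments=3, repair_margin=1)
latest = {"op-a": True, "op-b": True, "op-c": False,
          "op-d": True, "op-e": False, "op-f": True}
status = assess(policy, latest)   # 4 live fragments, within the repair margin
assert status == "repair"
```

The useful part is the middle state. Acting while the data is still reconstructable, rather than after it has dropped below the minimum, is what keeps availability maintained instead of merely observed.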

This is what allows Walrus to act as a foundational layer for rollups, bridges, and AI systems. They do not need to trust any single operator. They only need to trust the cryptographic guarantees.

Why this changes how blockchains can scale

Rollups depend on data availability for fraud proofs and state reconstruction. If data disappears, the rollup becomes unverifiable. It may still run, but no one can prove that it is honest.

Walrus prevents this by making data availability something that can be independently checked by anyone at any time.

This turns data from a liability into a guarantee.

It also means new participants can always reconstruct the full state of a system without asking permission. That is what keeps decentralized systems decentralized.

Availability as a property, not a hope

Walrus does something subtle and powerful. It converts availability from a service into a property of the data itself.

A file on Walrus is not just stored. It is mathematically bound to a set of proofs that show it can be retrieved. Anyone can check those proofs. Anyone can challenge them. Anyone can reconstruct the data if enough fragments exist.

This makes data as reliable as consensus.

That is why Walrus is not competing with storage providers. It is competing with uncertainty.

My take

The future of decentralized systems depends less on how much data they can hold and more on how confidently they can prove that their history still exists. Walrus is where that confidence comes from.