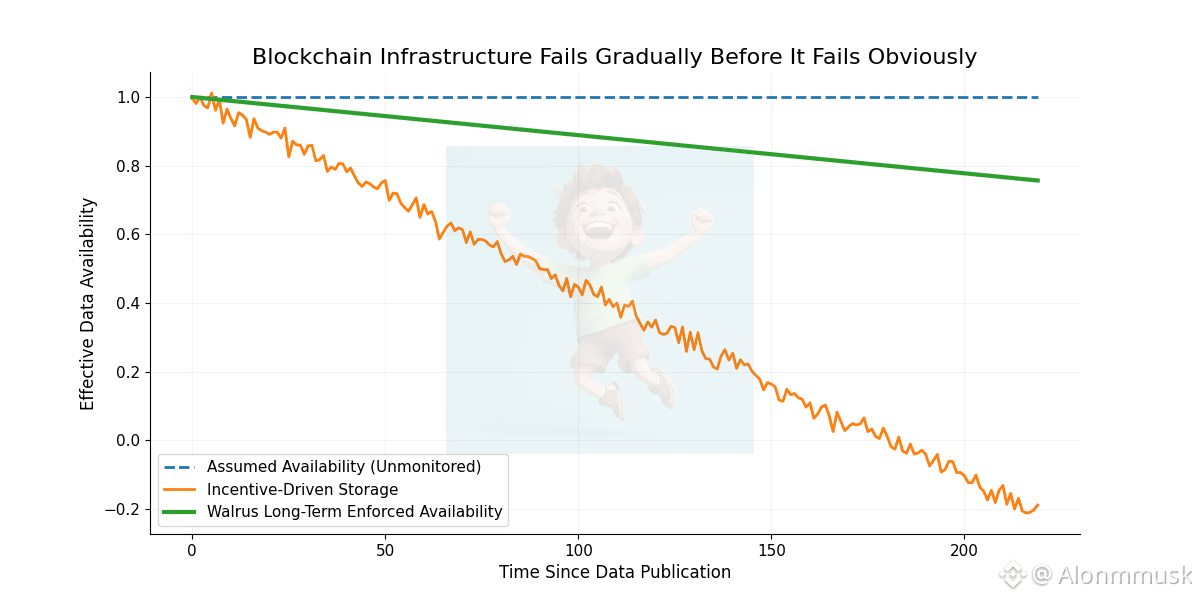

Most breakdowns in blockchain infrastructure do not arrive as dramatic events. They unfold quietly. A piece of historical data becomes harder to retrieve. A rollup struggles to reconstruct state. An application begins relying on assumptions that no longer hold. By the time the failure is obvious, the damage is already done. Walrus is designed for this slow failure mode rather than the obvious one.

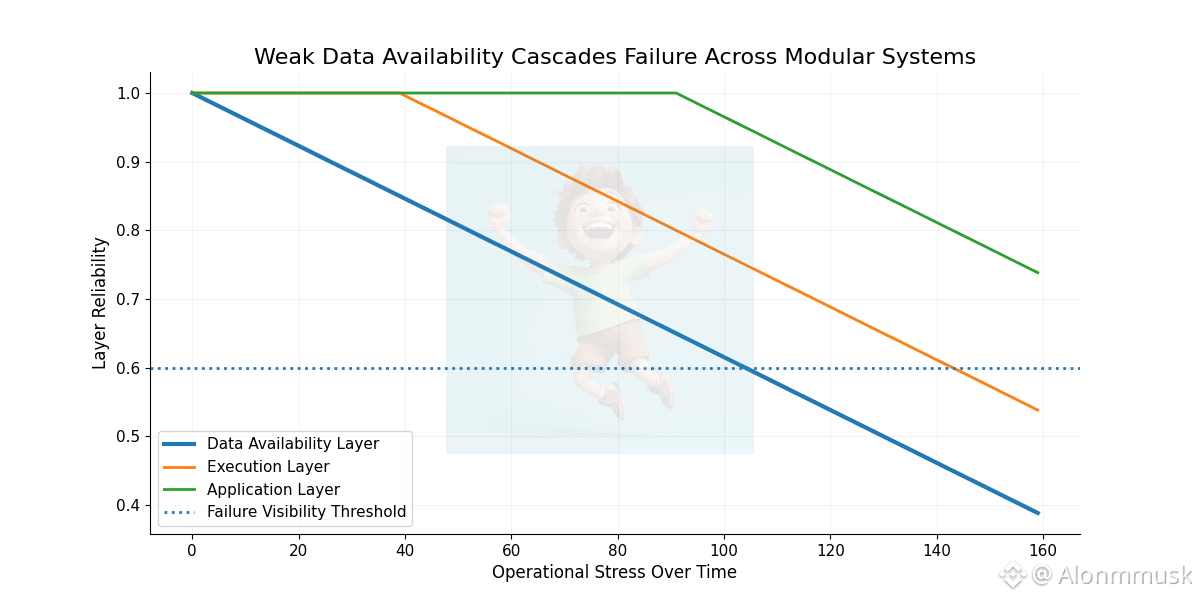

As blockchain architectures become modular, responsibility becomes fragmented. Execution layers process transactions. Settlement layers finalize outcomes. Interfaces handle users. But data persists across all of these layers and across time. When data availability weakens, every other layer inherits that weakness. Walrus exists because this dependency is often underestimated until it becomes irreversible.

Early blockchains avoided this problem by storing everything onchain. Availability was guaranteed, but at a cost that did not scale. As usage expanded, data was pushed outward into offchain systems that were cheaper but less reliable. In many cases, availability became an assumption rather than a guarantee. Walrus addresses this gap directly by treating data availability as a first-class responsibility rather than a side effect of execution.

The protocol’s design starts with a clear premise. Data is only useful if it remains accessible long after it is created. Availability cannot depend on short-term incentives or participant enthusiasm. It must be enforced through structure. Walrus creates that structure by allowing large data blobs to live outside execution environments while anchoring their existence and integrity cryptographically. This preserves verifiability without imposing unsustainable costs on base layers.
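The anchoring idea can be illustrated with a plain hash commitment. This is a minimal sketch, not Walrus's actual encoding or API: the function names `commit` and `verify` are hypothetical, and SHA-256 stands in for whatever commitment scheme the protocol really uses. Only the short digest would need to live onchain, while the blob itself stays offchain.

```python
import hashlib


def commit(blob: bytes) -> str:
    """Return a hex digest that could be anchored onchain (hypothetical helper)."""
    return hashlib.sha256(blob).hexdigest()


def verify(blob: bytes, anchored_digest: str) -> bool:
    """Check that a blob retrieved from offchain storage matches the anchored digest."""
    return hashlib.sha256(blob).hexdigest() == anchored_digest


# Illustrative data only: a stand-in for a large blob stored outside execution.
blob = b"rollup batch: example transaction data"
anchor = commit(blob)

intact = verify(blob, anchor)                    # unmodified blob matches the anchor
tampered = verify(blob + b" (altered)", anchor)  # any change breaks the match
```

The digest is 32 bytes regardless of blob size, which is why anchoring can preserve verifiability without pushing the data itself onto the base layer.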

What distinguishes Walrus is not the mechanics alone, but how it treats time. Most systems are optimized for the moment data is posted. Walrus is optimized for the years that follow. Storage providers are incentivized to maintain availability over extended periods, aligning rewards with continuity rather than initial contribution. This long-term framing addresses the most common cause of data loss: gradual disengagement rather than sudden failure.
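One way to picture rewards aligned with continuity is a payment that is released epoch by epoch instead of all at once. The sketch below is purely illustrative and assumes a simplified model that is not taken from the Walrus protocol: the function `streamed_rewards`, the even per-epoch split, and the boolean availability checks are all hypothetical.

```python
from typing import Sequence


def streamed_rewards(total_payment: int, availability: Sequence[bool]) -> int:
    """Pay an equal share per epoch, but only for epochs where data stayed available.

    Hypothetical model: `availability[i]` is True if the provider passed an
    availability check in epoch i.
    """
    if not availability:
        raise ValueError("at least one epoch is required")
    per_epoch = total_payment // len(availability)
    return sum(per_epoch for ok in availability if ok)


# A provider that stays for all 12 epochs versus one that disengages after 3.
committed = streamed_rewards(1200, [True] * 12)
disengaged = streamed_rewards(1200, [True] * 3 + [False] * 9)
```

Under this assumed model, a provider that drifts away early forfeits most of the payment, so gradual disengagement carries a direct cost.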

For rollups and Layer 2 systems, this reliability is critical. Their security models depend on access to historical data for verification and dispute resolution. If that data becomes unavailable, execution correctness loses meaning. Walrus provides a layer where these systems can rely on persistence rather than redundancy. Developers are freed from designing elaborate fallback mechanisms and can instead assume continuity as a property of the stack.

This shift also changes how risk is distributed. In many modular systems, risk is pushed outward. Applications are expected to manage data availability themselves. When something breaks, responsibility becomes ambiguous. Walrus pulls that responsibility inward. By isolating data availability as its own layer, it makes accountability clearer and failures easier to reason about. Infrastructure becomes more legible rather than more complex.

Economic predictability reinforces this trust. Long-lived systems cannot be built on volatile or opaque cost models. Developers need to understand not just what availability costs today, but what it is likely to cost over time. Walrus emphasizes clearer economic structures that make long-term planning possible. This predictability is often what separates experimental deployments from production infrastructure.

Neutrality is another defining feature. Walrus does not attempt to influence execution design or application behavior. It does not compete for users or liquidity. It provides a service that many systems can depend on simultaneously without ceding control. This neutrality allows it to integrate broadly across ecosystems without becoming a point of fragmentation. Infrastructure that seeks dominance often fractures. Infrastructure that seeks reliability tends to persist.

The ecosystem forming around Walrus reflects these priorities. Builders are not optimizing for attention or rapid iteration. They are working on rollups, archival systems, and data-heavy applications where failure cannot be undone easily. These teams care less about performance headlines and more about guarantees. For them, the value of Walrus lies in what it prevents rather than what it enables visibly.

There is also a broader industry context reinforcing Walrus’s relevance. As blockchain systems handle more real value, tolerance for hidden fragility declines. Users may not understand data availability conceptually, but they experience its absence immediately when systems cannot reconstruct state or verify history. In mature environments, these failures are unacceptable. Walrus aligns with this shift by focusing on the least visible but most consequential layer of the stack.

What stands out most is Walrus’s restraint. It does not expand its scope beyond data availability. It does not chase execution narratives or application trends. Each design decision reinforces the same objective. Keep data accessible. Keep it verifiable. Keep it economically sustainable. This clarity gives the protocol coherence and credibility over time.

In complex systems, reliability is often defined by what does not happen. No missing data. No broken assumptions. No gradual erosion of trust that only becomes visible after damage is done. Walrus is building toward that standard by treating data availability as an obligation rather than a convenience.

As modular blockchain architectures continue to mature, layers like Walrus become less optional and more foundational. They may never be visible to end users, but they shape whether entire ecosystems can survive stress, upgrades, and time. Walrus is building for that quiet role.

Not to accelerate momentum, but to preserve memory.

For educational purposes only. Not financial advice. Do your own research.
