I have watched enough cycles to know how language gets worn down. A strong idea turns into a headline, then a badge, then a shortcut people use to avoid doing the hard work. “AI-ready” is drifting into that territory. I do not blame anyone for wanting simple labels. I have just learned to distrust them. What I trust is structure, the kind you only notice when you look closely. Where does context live? How does it stay usable? Can a system explain itself months later without rewriting history?

When I dig into Vanar Chain, I keep coming back to the same impression. It is not loud. It feels like a network quietly building under the surface, choosing depth over breadth and putting infrastructure first by default.

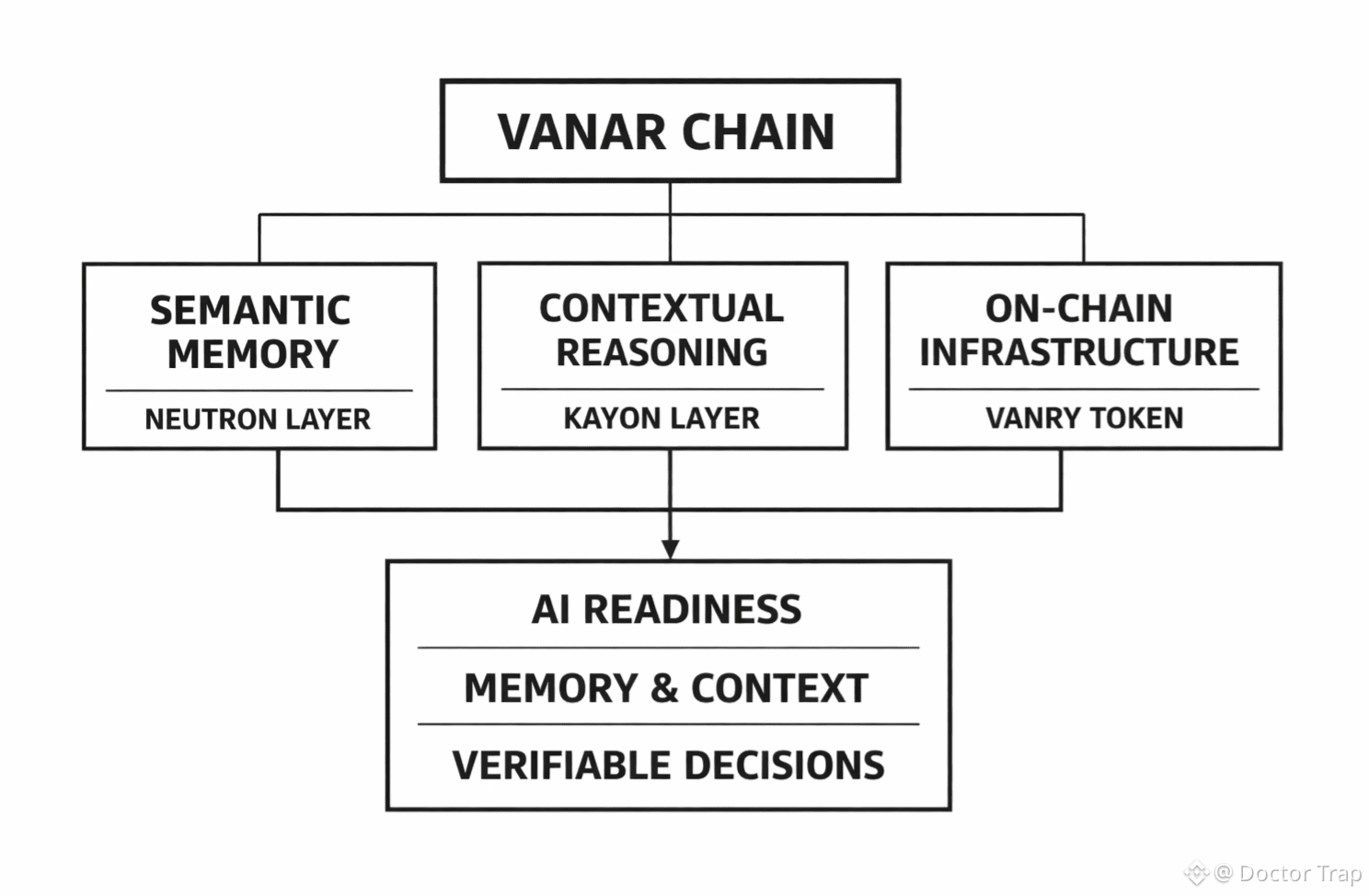

AI readiness is not a model sitting on top of a chain. It is a chain that treats context as a first-class concern. I remember building systems where the ledger was correct but the application forgot its own reasons. It is a subtle kind of failure. Everything “works” until someone asks why a decision was made, tries to reproduce it, or needs to audit it under stress. Most stacks keep memory off to the side and call it “integration.”

That is where trust quietly leaks away. Vanar’s direction feels different. The design reads like it starts from the problem of context and works outward, not the other way around.

Take Vanar’s focus on semantic memory through its Neutron layer. I have noticed how often storage is treated like a warehouse: you put data in, you prove it is there, and you move on. But AI systems care less about whether data is “there” and more about what it means. Data can remain intact and still become useless once its structure drifts, its metadata gets separated, or the original intent fades.

Neutron reads as a deliberate attempt to prevent that slow decay. The aim is not just to store data, but to store it in a form that stays compact, structured, and queryable. I remember too many systems where the hash survived but the story disappeared. This is the kind of problem teams only solve when they are quietly building for the long run.
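To make “the hash survived but the story disappeared” concrete, here is a minimal Python sketch. It is a generic illustration, not Neutron’s actual format or API: the `MemoryRecord` class, its fields, and the `digest`, `verify`, and `query` helpers are all hypothetical. The idea is that the anchored hash covers both the data and the context that gives it meaning, so stripping the metadata becomes detectable, and the structure stays queryable.

```python
import hashlib
import json
from dataclasses import dataclass


def digest(obj) -> str:
    """SHA-256 over a canonical JSON encoding (sorted keys, compact separators)."""
    canonical = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class MemoryRecord:
    """Hypothetical record: the data plus the context that explains it."""
    payload: dict
    schema: str        # which structure the payload follows
    intent: str        # why the data was stored in the first place
    tags: tuple = ()   # queryable labels

    def anchor(self) -> str:
        # The hash covers payload AND context, so losing metadata breaks verification.
        return digest({
            "payload": self.payload,
            "schema": self.schema,
            "intent": self.intent,
            "tags": list(self.tags),
        })


def verify(record: MemoryRecord, anchored_hash: str) -> bool:
    """True only if both the data and its context match what was anchored."""
    return record.anchor() == anchored_hash


def query(records: list, tag: str) -> list:
    """Simple structured lookup: records carrying the given tag."""
    return [r for r in records if tag in r.tags]


if __name__ == "__main__":
    rec = MemoryRecord(
        payload={"invoice": 42, "amount": "100.00"},
        schema="invoice/v1",
        intent="settlement evidence",
        tags=("payments",),
    )
    anchored = rec.anchor()
    print(verify(rec, anchored))       # True
    # Same payload, intent stripped: the bytes survived, the story did not.
    stripped = MemoryRecord(rec.payload, rec.schema, "", rec.tags)
    print(verify(stripped, anchored))  # False
    print(len(query([rec, stripped], "payments")))  # 2
```

Because the hash spans payload and context together, a record that loses its intent or schema no longer verifies, which is exactly the drift a bare content hash would miss.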

I have learned to distrust outputs that cannot be revisited. Reasoning that cannot be replayed is just a result, and results without trails tend to age poorly. Vanar’s Kayon layer is described as contextual reasoning tied to on-chain memory.

That is the point. Not “AI” as a feature, but reasoning as something anchored to verifiable context. I have watched networks chase compute and forget accountability. Then the first real dispute arrives, and suddenly nobody can explain what happened. When reasoning is anchored, you can trace the path backward, check the inputs, and understand why the system arrived where it did.

That ability is not glamorous, but it is the difference between demos and infrastructure.
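The same idea applies to reasoning. The Python sketch below is a generic illustration, not how Kayon works: `decide`, `record_decision`, and `replay` are hypothetical names, and the rule is a toy. It keeps a trail that hashes the inputs, the rule version, and the output together, so someone can later trace the path backward, check the inputs, and confirm that re-running the step reaches the same conclusion.

```python
import hashlib
import json


def digest(obj) -> str:
    """SHA-256 over a canonical JSON encoding (sorted keys, compact separators)."""
    canonical = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def decide(inputs: dict) -> str:
    """Hypothetical deterministic rule standing in for a reasoning step."""
    return "approve" if inputs["balance"] >= inputs["amount"] else "reject"


def record_decision(inputs: dict, rule_version: str) -> dict:
    """Run the step and keep a trail that links inputs, rule, and output."""
    input_hash = digest(inputs)
    output = decide(inputs)
    trail = {"rule_version": rule_version, "input_hash": input_hash, "output": output}
    # In this sketch, trail_hash is the value one would anchor on-chain.
    trail["trail_hash"] = digest(trail)
    return trail


def replay(trail: dict, inputs: dict) -> bool:
    """Trace the path backward: check the inputs, re-run the step, compare."""
    if digest(inputs) != trail["input_hash"]:
        return False  # the inputs were altered or lost
    body = {k: trail[k] for k in ("rule_version", "input_hash", "output")}
    if digest(body) != trail["trail_hash"]:
        return False  # the trail itself was edited after the fact
    return decide(inputs) == trail["output"]  # same inputs, same conclusion


if __name__ == "__main__":
    inputs = {"balance": 150, "amount": 100}
    trail = record_decision(inputs, "rules-v1")
    print(trail["output"])                                # approve
    print(replay(trail, inputs))                          # True
    print(replay(trail, {"balance": 50, "amount": 100}))  # False
```

The sketch assumes the rule is deterministic and that `decide` still matches the recorded `rule_version`; a real system would need to pin the logic as well as the data for a replay to mean anything.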

I try not to fall in love with narratives. I look for the boring counters that keep ticking even when nobody is watching. When I look at Vanar’s activity, I see accumulation: blocks stacking up, transaction counts climbing, wallet addresses growing over time.

Those signals do not prove everything, but they do show that something is being used, not just discussed. I also notice that the liquidity footprint is modest but present: not inflated, not trying to perform strength, just sitting there like a working piece of plumbing. Partnerships around payment infrastructure read differently to me, too. They suggest integration work, the kind that happens under the surface and only becomes visible once it is already stable. That fits the same motif: not loud, depth over breadth, quietly building.

I remember when tokens were treated like story engines. Over time, that approach tends to burn out. Vanar’s token design feels more like a tool than a slogan.

$VANRY is positioned as the network’s working asset: it pays for transactions and supports the chain’s day-to-day function, and its ERC-20 form can be bridged to connect with wider ecosystems. That interoperability matters because builders do not live in one place anymore.

At the same time, the core use remains straightforward: the token exists to run the system. Supply is clearly defined, which makes long-term modeling possible without guessing. None of this is designed to impress in a single scroll. In my experience, that is a quiet strength.

Many networks chase breadth and get lost in their own surface area. Vanar seems to be choosing depth, and it shows in what it prioritizes. Memory first. Reasoning second. Everything else built around that spine. From my vantage point, that is what real AI readiness looks like. A place where context can live without rotting, and where decisions can be explained without performance.

And yes, the token has a price. It moves like everything else moves, and people will always talk about it. I mention it only because silence would feel like theater. Price is loud. Structure is quiet. After enough cycles, I know which one tends to stay standing when the noise fades.

Under the surface, quietly building the kind of memory that lasts.