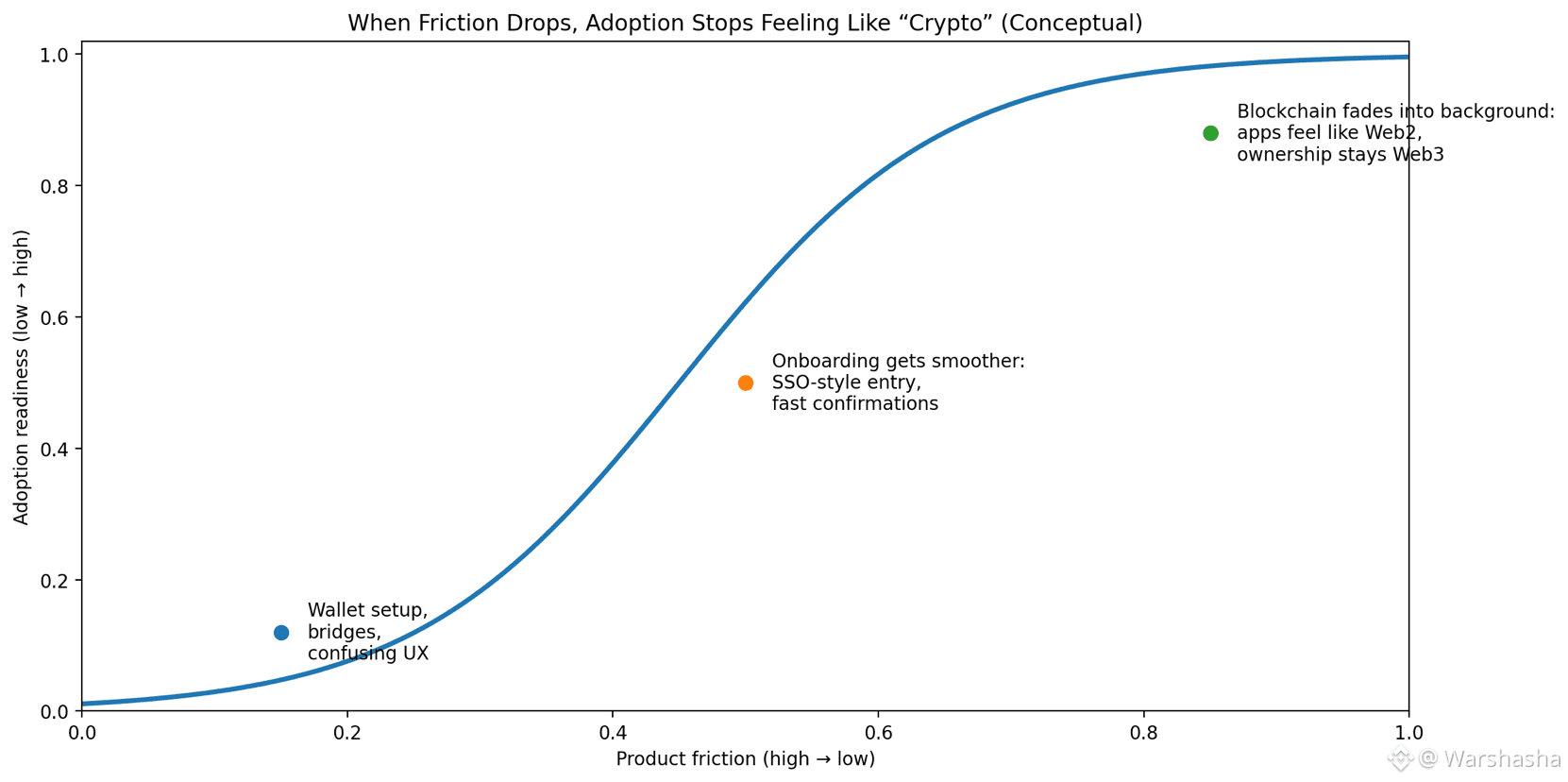

Lately I’ve been watching a pattern repeat itself across Web3: the tech keeps improving, but mainstream behavior doesn’t move at the same speed. People don’t wake up excited to “use a chain.” They show up for games, creator tools, AI features, digital collectibles, communities — and they leave the second the experience feels slow, expensive, or overly technical.

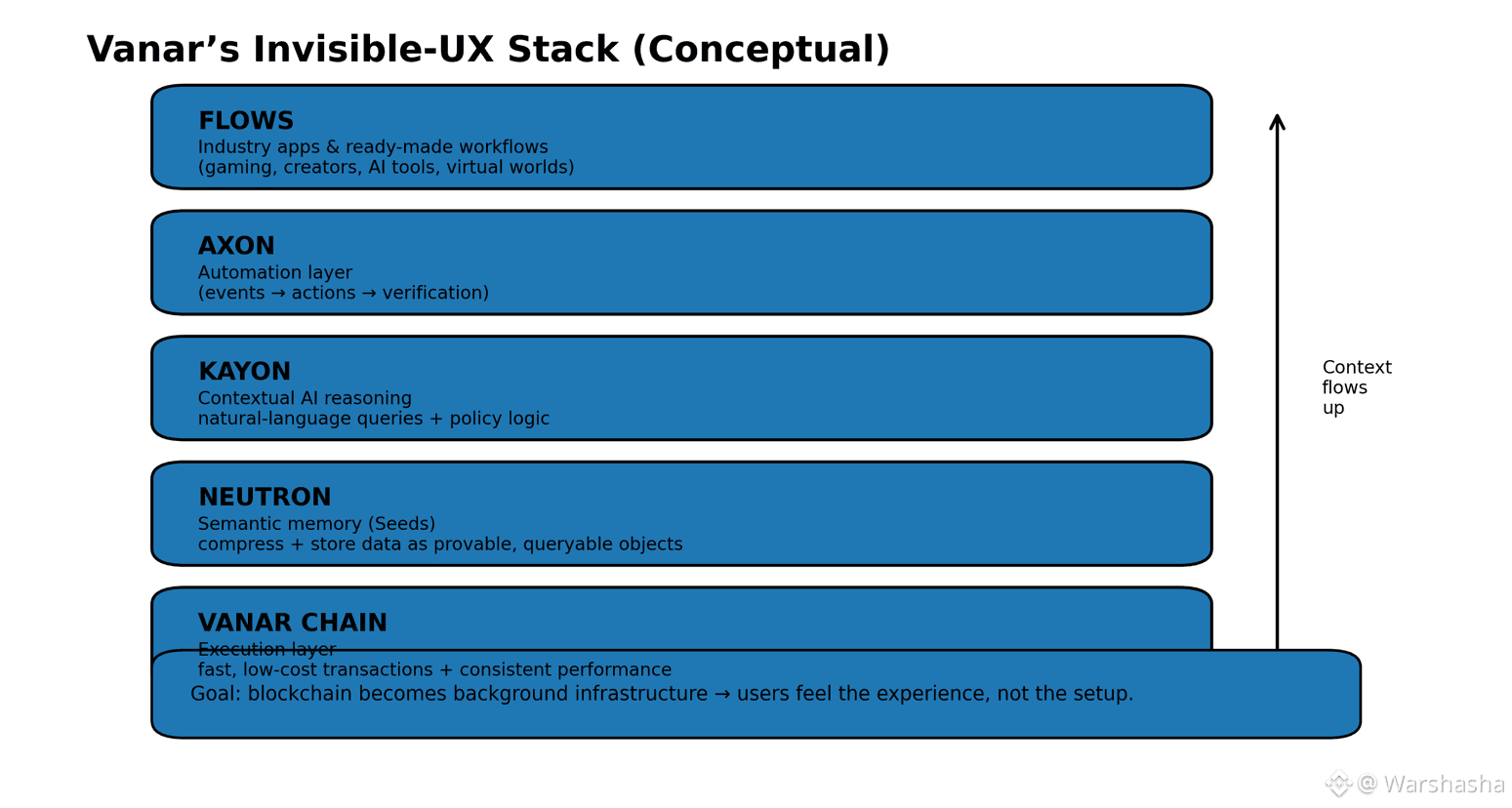

That’s why @Vanarchain caught my attention in a different way. The direction here feels less like “let’s build another L1” and more like “let’s build the rails so everyday digital experiences can quietly become on-chain without users needing a crash course.” Vanar positions itself as an AI-native infrastructure stack with multiple layers — not just a single execution chain — and that framing matters because real adoption usually comes from stacks, not slogans.

The real battleground is UX, not TPS

Web3 gaming and immersive digital environments don’t fail because the idea is bad — they fail because friction kills immersion.

If a player has to pause gameplay for wallet steps, the moment is gone.

If fees spike or confirmations lag, the “world” stops feeling like a world.

If data (assets, identity, game state, receipts) can’t be stored and understood reliably, developers end up rebuilding the same plumbing over and over.

Vanar’s long-term thesis seems to be: reduce friction until blockchain becomes background infrastructure, while still preserving what makes Web3 valuable (ownership, composability, verifiability).

A stack approach: execution + memory + reasoning (and what that unlocks)

Instead of treating data as an afterthought, Vanar’s architecture leans into a layered model: the chain executes transactions, a memory layer stores data in a structured, meaningful form, and an AI reasoning layer turns that stored context into actions and insights.

The part most people ignore: “data that survives the app”

Vanar highlights Neutron as a semantic memory layer that turns raw files into compact “Seeds” that remain queryable and verifiable on-chain. That is basically a shift from dead storage to usable knowledge objects.

And if you think that’s just abstract, the compression claim alone shows the intent: Neutron describes compressing large files dramatically (the example given is 25 MB down to 50 KB) to make on-chain storage more realistic for richer applications.
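To put that example in perspective, here is the arithmetic on the quoted figures. It assumes decimal units (1 MB = 1,000 KB):

$$
\frac{25\ \text{MB}}{50\ \text{KB}} = \frac{25{,}000\ \text{KB}}{50\ \text{KB}} = 500
$$

With binary units (1 MiB = 1,024 KiB) the ratio is $25 \times 1024 / 50 = 512$. Either way, the Seed would be roughly 0.2% of the original file's size. This is only arithmetic on the single example quoted. It is not a measured or general compression ratio.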

Then comes reasoning: where apps stop being “dumb contracts”

Kayon is positioned as an on-chain reasoning layer with natural-language querying and compliance automation (it even mentions monitoring rules across 47+ jurisdictions). That matters because a lot of “real” adoption (brands, studios, platforms) eventually runs into reporting, risk, and operational constraints. If the chain can help answer questions and enforce rules natively, the product experience gets cleaner.

The most interesting “new adoption door” I’m watching: portable memory for AI workflows

One of the freshest angles in Vanar’s recent positioning is myNeutron: a universal knowledge base concept meant to carry context across AI platforms (it explicitly mentions working across tools like ChatGPT, Claude, Gemini, and more). In plain terms: your knowledge stops being trapped inside one platform’s silo.

If this category keeps growing, it becomes a stealth demand driver: more usage → more stored data → more queries → more on-chain activity, without relying on speculative hype cycles.

Gaming and digital worlds: the “invisible blockchain” stress test

Gaming is brutal because it doesn’t forgive clunky design. And that’s why it’s such a strong proving ground.

Vanar is already tied into entertainment-facing products like Virtua, whose marketplace is promoted as being built on the Vanar blockchain.

Here’s what I think is strategically smart about that: gaming isn’t just a use case — it’s user onboarding at scale. If players come for the experience and only later realize they own assets, that’s how Web3 creeps into normal behavior.

Where $VANRY fits, not as a “ticker,” but as an ecosystem meter

In the Vanar docs, $VANRY is clearly framed beyond just paying fees: it’s described as supporting transaction fees, community involvement, network security, and governance participation — basically tying together usage + security + coordination.

The way I read this is simple:

If builders ship apps people actually use, $VANRY becomes the economic layer that keeps that activity aligned (fees, staking, incentives, governance).

If the ecosystem expands across gaming/AI/tools, the token’s role grows naturally without needing forced narratives.

Also worth noting: Vanar’s docs describe $VANRY as both the native gas token and an ERC-20 token deployed on Ethereum and Polygon, with bridging between them for interoperability.

The adoption flywheel I see forming

This is the “quiet” part that feels different:

Better onboarding + smoother UX (so users stay)

Richer data stored as usable objects (so apps feel smarter and more personalized)

Reasoning + automation (so teams can operate at scale without turning everything into manual workflows)

More real usage (which strengthens the network economics + builder incentives through $VANRY)

That’s the kind of loop that compounds — and it’s the opposite of “one announcement pumps, then the chain goes quiet again.”

What I’d personally watch next

Whether more consumer apps are actually shipping on Vanar (games, creator tools, AI utilities). I mean not just integrations, but products people return to.

How quickly Neutron-style data becomes a default workflow (content, receipts, identity, game-state, proofs).

Whether Kayon-style querying becomes a standard layer inside explorers, dashboards, and enterprise tooling.

Whether ecosystem programs and onboarding rails (bridging, staking, on-ramps) stay simple enough that new users don’t bounce.