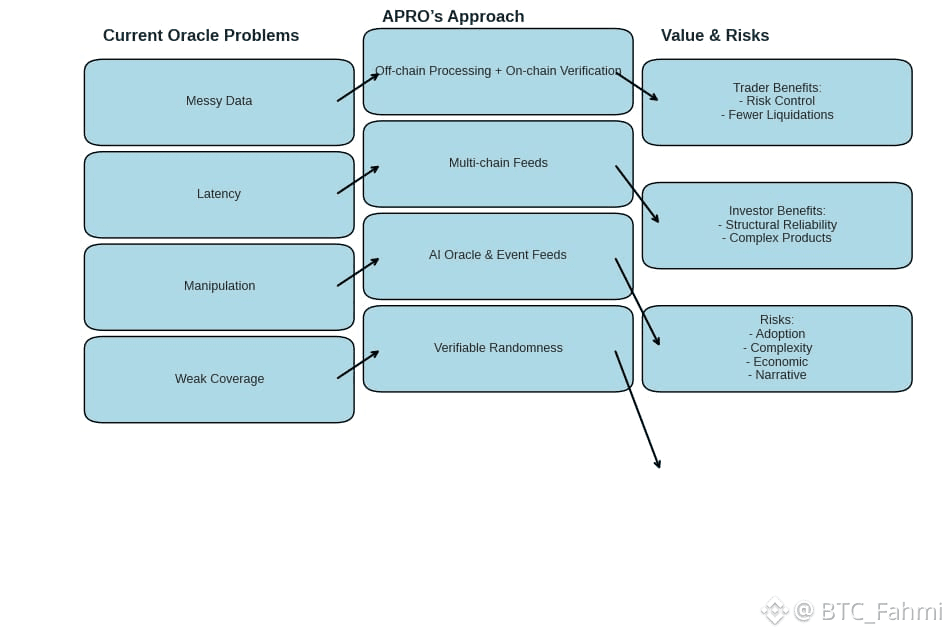

Most traders don’t think about oracles until something breaks. A price spikes for no real reason, a lending market starts liquidating healthy positions, or a “news-driven” bot trades on a headline that later turns out to be wrong. The uncomfortable truth is that a lot of on-chain activity still depends on data pipes that were built for simpler times. As the market gets faster and products get more complex, the oracle layer has to grow up too. That’s where APRO’s approach feels different, not because it promises magic, but because it is trying to treat “reliable intelligence” as infrastructure, not a feature.

At its simplest, APRO is an oracle network that aims to deliver verifiable data to smart contracts. That sounds familiar, but APRO’s emphasis is on bridging two worlds that increasingly overlap: blockchain execution and AI interpretation. In APRO’s own documentation, the AI Oracle is framed as a way to deliver real-time, verifiable, tamper-resistant data that AI models and smart contracts can consume, with the explicit goal of reducing hallucinated or manipulated inputs. That wording matters because it admits a real problem: when data is messy, late, or selectively sourced, “intelligence” becomes a fancy word for guesswork.

If you’ve traded through high-volatility events, you’ve probably felt this in practice. I remember watching a sharp move in a mid-cap token during a thin-liquidity window and seeing on-chain perps react as if the new price were fully confirmed reality. Later, when the broader market caught up, the move looked more like a temporary distortion than true price discovery. For a trader, that kind of distortion is more than noise. It can trigger liquidations, mess with funding, and create a chain reaction that punishes anyone using leverage. In those moments, the oracle is not just “data.” It is the referee.

APRO’s model is built around combining off-chain processing with on-chain verification, a design that shows up repeatedly across ecosystem descriptions.
The practical takeaway is that APRO is trying to do two jobs at once: gather information from the messy outside world, then prove enough about it on-chain that applications can rely on it. That second part is where many systems become expensive, slow, or centralized. APRO’s pitch is that you can keep data fresh without compromising verification, and that the network design focuses on security and stability rather than raw speed at any cost.

One detail traders often overlook is coverage. Reliability isn’t only about whether a feed updates quickly. It’s also about whether the data you actually need exists across the places you trade. APRO is described as supporting both push and pull data models and offering a large set of price feeds across many chains, which matters because multi-chain liquidity is now normal rather than special. If you’ve ever tried to price an asset that trades actively on one chain but has thin liquidity on another, you know how easily a single weak venue can distort the “on-chain truth.” The more robust the aggregation and the broader the network coverage, the harder it is for one pocket of liquidity to hijack the feed.

The “growing up” part of the oracle layer, though, is not only about price feeds. It’s about richer data and richer reasoning. APRO’s roadmap highlights an evolution from core data infrastructure into AI oracle functionality, event feeds, validator nodes, verifiable randomness, and a consensus layer for its AI-related message formats. For investors, this is the part that’s easy to misunderstand. It’s tempting to hear “AI” and assume it means prediction. APRO’s framing is closer to interpretation and validation: taking structured and unstructured data, processing it, and then making the result usable for contracts and agents without trusting a single party.

A real-world example helps. Imagine an on-chain prediction market that settles based on an event outcome.
In traditional setups, you either rely on a narrow set of data sources or accept a slow dispute process. APRO has described a focus on prediction-market-grade oracles and disclosed a strategic funding round aimed at powering next-generation oracle infrastructure for those use cases. If APRO can make event resolution both verifiable and timely, it reduces the gap between “the world happened” and “the chain agrees it happened.” That gap is where manipulation lives.

For traders, the immediate value is risk control. Better oracles can mean fewer bad liquidations, fewer broken perps, and fewer weird cascades driven by stale or spoofed inputs. For investors, the value is more structural: reliable data enables more sophisticated financial products without quietly increasing systemic fragility. But it’s important to stay neutral here because nothing is guaranteed. Even the best oracle design faces hard problems: source selection, latency versus security tradeoffs, node incentives, and governance questions about what happens when the truth is contested.

There are also clear risks to watch. First, adoption risk. Oracles are only as strong as the projects that integrate them, and switching oracle providers can be slow and politically complicated. Second, complexity risk. Adding AI processing and richer data types increases the number of things that can fail or be misunderstood, even if verification is strong. Third, economic risk. Node participation and security budgets depend on incentives, and token dynamics can impact long-term sustainability. Finally, narrative risk. When a sector gets crowded, the market sometimes prices stories faster than it prices real usage, and traders can end up holding volatility instead of fundamentals.

Still, the broader trend is real: the market is moving from simple price updates to full “reliable intelligence” pipelines.
APRO’s emphasis on verifiable, tamper-resistant data for both smart contracts and AI agents is a sign of where the oracle layer is heading. If this direction succeeds, it could make on-chain markets feel less fragile during stress and make complex products safer to build. And as someone who has watched how quickly small data errors become big losses, I’m glad more teams are treating the oracle layer like the foundation it really is, not an afterthought.
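
To make the earlier point about a single thin venue distorting a feed more concrete, here is a minimal sketch of why robust aggregation matters. It is not APRO's actual aggregation method, which this article does not describe. The `Quote` type, the venue names, the prices, and the `max_age_seconds` and `min_sources` thresholds are all hypothetical, chosen only for illustration. The sketch drops stale quotes, refuses to answer when too few sources remain, and takes the median of what is left.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional


@dataclass(frozen=True)
class Quote:
    venue: str          # hypothetical venue label
    price: float        # price reported by that venue
    age_seconds: float  # time since the venue last updated


def aggregate_price(
    quotes: List[Quote],
    max_age_seconds: float = 30.0,  # assumed freshness threshold
    min_sources: int = 3,           # assumed minimum number of fresh sources
) -> Optional[float]:
    """Return the median of fresh quotes, or None if too few sources remain."""
    fresh = [q.price for q in quotes if q.age_seconds <= max_age_seconds]
    if len(fresh) < min_sources:
        return None  # better to report nothing than a weakly supported price
    return median(fresh)


quotes = [
    Quote("venue_a", 100.2, 2.0),
    Quote("venue_b", 99.9, 4.0),
    Quote("venue_c", 100.0, 3.0),
    Quote("thin_venue", 140.0, 1.0),     # distorted print from thin liquidity
    Quote("stale_venue", 80.0, 600.0),   # stale, dropped by the age filter
]

robust = aggregate_price(quotes)
naive_mean = sum(q.price for q in quotes) / len(quotes)
print(f"median of fresh quotes: {robust:.2f}")
print(f"naive mean of all quotes: {naive_mean:.2f}")
```

In this toy data the median of the fresh quotes stays close to where the three healthy venues agree, while a naive average of everything gets pulled by both the thin venue and the stale one. A production oracle would likely also weight by volume and sign its reports, which this sketch leaves out.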