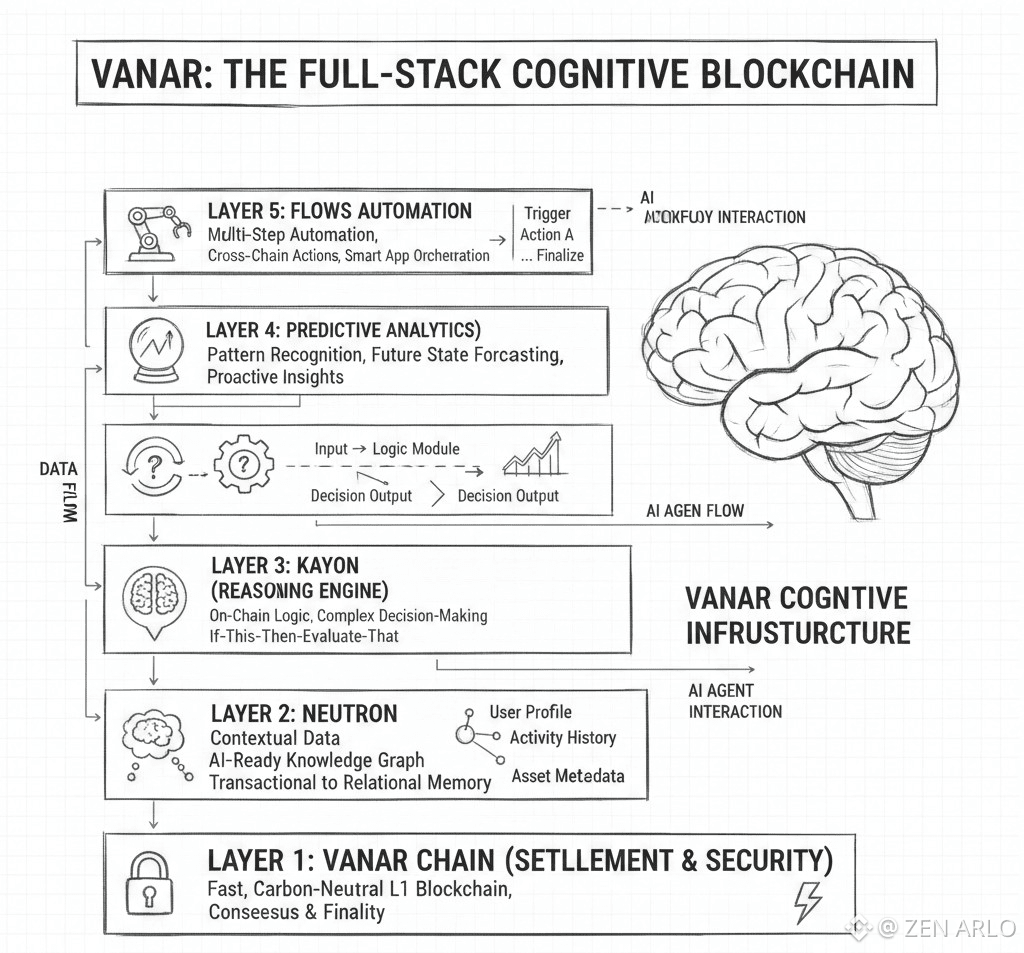

Vanar is aiming for something bigger than another fast Layer 1. The project reads like a full stack that starts with settlement, then climbs into memory and reasoning so apps can work with context instead of just raw transactions. On the official site, Vanar maps this out as a five-layer direction in which the base chain supports the higher layers: Neutron for semantic memory, Kayon for reasoning, and two upcoming layers called Axon and Flows.

This approach matters because real adoption is rarely just sending tokens. Real adoption involves messy data, permissions, receipts, statements, business rules, compliance checks, and endless back-office logic that usually lives off chain. Vanar is trying to bring that reality closer to the chain itself by making context a first-class concern. Its Vanar Chain page describes a network built for AI workloads, including native support for AI inference and training, semantically oriented data structures, built-in vector storage and similarity search, and AI-optimized validation.
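Vanar's material quoted here does not spell out its vector search API, so the following is only a generic illustration of what "vector storage and similarity search" means: items are stored as embedding vectors, and a query returns the closest ones by cosine similarity. The `InMemoryVectorStore` class, its methods, the keys, and the three-dimensional toy vectors are all hypothetical; production systems use learned embeddings with hundreds of dimensions and an index instead of a brute-force scan.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Hypothetical brute-force vector store for illustration; not a Vanar API."""

    def __init__(self) -> None:
        self._vectors: Dict[str, List[float]] = {}

    def upsert(self, key: str, vector: List[float]) -> None:
        """Insert or replace the vector stored under `key`."""
        self._vectors[key] = vector

    def search(self, query: List[float], k: int = 3) -> List[Tuple[str, float]]:
        """Score every stored vector against the query and return the top k."""
        scored = [(key, cosine_similarity(query, vec)) for key, vec in self._vectors.items()]
        scored.sort(key=lambda item: item[1], reverse=True)
        return scored[:k]


store = InMemoryVectorStore()
store.upsert("invoice-001", [0.9, 0.1, 0.0])
store.upsert("receipt-042", [0.8, 0.2, 0.1])
store.upsert("game-log-7", [0.0, 0.1, 0.95])
print([key for key, _ in store.search([1.0, 0.0, 0.0], k=2)])  # ['invoice-001', 'receipt-042']
```

The payment-like records rank above the game log because their vectors point in nearly the same direction as the query, which is the property semantic search relies on.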

Neutron is the part of the story that gives Vanar a very specific personality. It is positioned as semantic memory, not just storage. The pitch is that every file or conversation can become a compressed, queryable object called a Seed, designed to be light enough for on-chain anchoring while still being usable by applications and AI systems. The Neutron page makes a bold compression claim, describing an engine that can compress 25 MB into 50 KB using semantic, heuristic, and algorithmic layers while keeping the output cryptographically verifiable.
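Two parts of that claim can be made concrete. The first is the arithmetic: 25 MB to 50 KB is a 500x reduction with decimal units, or 512x if MB and KB are read as MiB and KiB. The second is verifiability. One common way to keep a compressed object verifiable is to anchor only a hash of it on chain and let anyone recompute that hash later. Neutron's actual Seed format and verification scheme are not described here, so the `seed_commitment` and `verify_seed` helpers, the choice of SHA-256 over canonical JSON, and the Seed fields below are assumptions for illustration.

```python
import hashlib
import json


def compression_ratio(original_bytes: int, compressed_bytes: int) -> float:
    """How many times smaller the compressed output is than the input."""
    return original_bytes / compressed_bytes


# Neutron's headline claim with decimal units: 25 MB -> 50 KB.
claimed_ratio = compression_ratio(25 * 1_000_000, 50 * 1_000)
# The same claim read with binary units: 25 MiB -> 50 KiB.
binary_ratio = compression_ratio(25 * 1024 * 1024, 50 * 1024)


def seed_commitment(seed: dict) -> str:
    """Hypothetical: SHA-256 over a canonical JSON encoding of a Seed.

    Sorting keys and fixing separators makes the encoding deterministic,
    so the same Seed always yields the same digest to anchor on chain.
    """
    canonical = json.dumps(seed, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def verify_seed(seed: dict, anchored_digest: str) -> bool:
    """Recompute the digest and compare it with the value that was anchored."""
    return seed_commitment(seed) == anchored_digest


# Hypothetical Seed fields, chosen only for this example.
seed = {"source": "invoice-001.pdf", "summary": "Invoice for 3 items", "embedding": [0.12, -0.4, 0.77]}
digest = seed_commitment(seed)
tampered = dict(seed, summary="Invoice for 30 items")

print(claimed_ratio, binary_ratio, verify_seed(seed, digest), verify_seed(tampered, digest))
# 500.0 512.0 True False
```

Anchoring a 64-character digest instead of the payload keeps the on-chain footprint constant no matter how large the Seed is, while any edit to the Seed, even a single changed field, fails verification.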

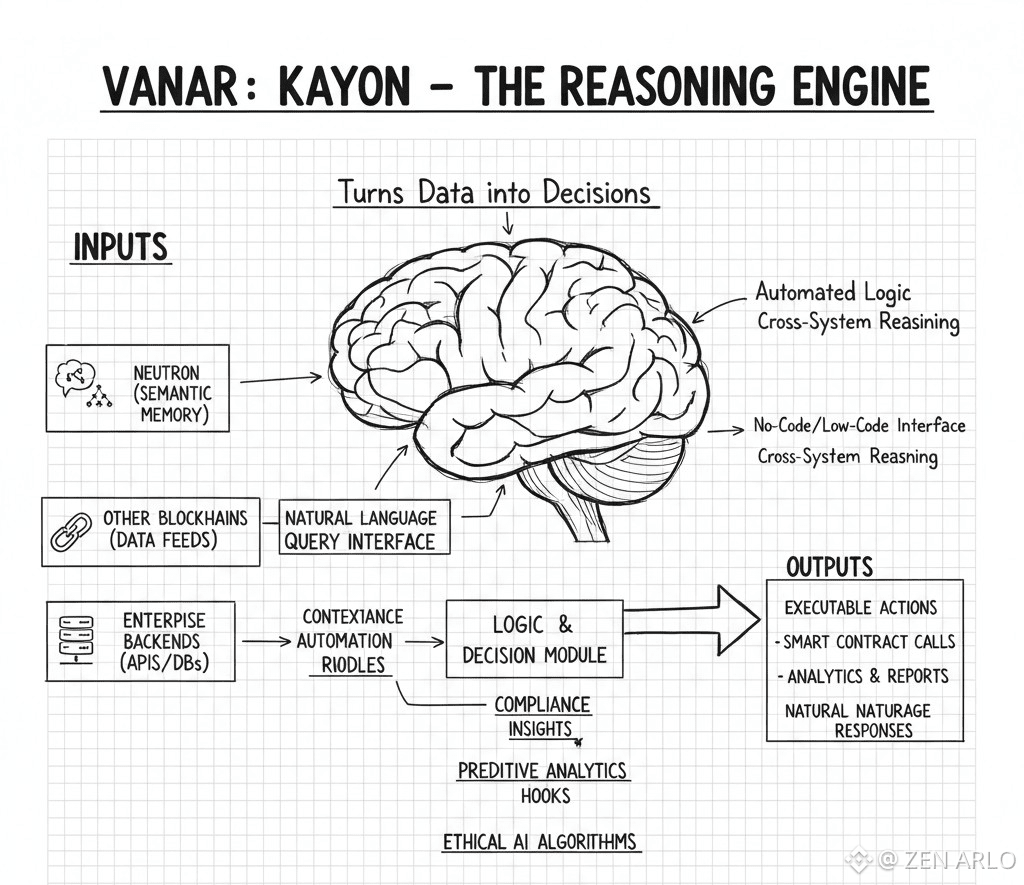

If Neutron is memory, Kayon is the layer that turns memory into action. Vanar describes Kayon as a reasoning layer designed for natural-language queries across Neutron, blockchains, and enterprise backends, with an emphasis on contextual insights and compliance automation. In simple terms, it is the part that tries to turn data into decisions without forcing teams to build custom analytics and logic pipelines from scratch.

What makes this feel grounded is that there is already a live network footprint you can observe. The Vanar mainnet explorer shows current totals for blocks, transactions, and wallet addresses, which confirms an active chain environment rather than a purely conceptual roadmap. At the time of this check, the explorer homepage displayed about 8,940,150 total blocks, 193,823,272 total transactions, and 28,634,064 wallet addresses.
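The explorer totals also allow a quick sanity check. Dividing the snapshot figures gives average activity per block and per address. Averages hide how that activity is spread over time and across wallets, so treat these numbers as rough context rather than a performance metric.

```python
# Snapshot totals from the Vanar mainnet explorer homepage, as reported in this article.
blocks = 8_940_150
transactions = 193_823_272
wallet_addresses = 28_634_064

tx_per_block = transactions / blocks
tx_per_address = transactions / wallet_addresses

print(f"{tx_per_block:.2f} transactions per block on average")      # 21.68
print(f"{tx_per_address:.2f} transactions per address on average")  # 6.77
```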

Under the hood, Vanar also presents itself as an EVM-compatible Layer 1, which matters because it lowers friction for builders who already know Solidity and EVM tooling. A widely used developer registry lists Vanar Mainnet with Chain ID 2040 and shows VANRY as the network's native token.
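Because the chain is EVM compatible, standard Ethereum JSON-RPC methods should apply. As a minimal sketch, a client can confirm it is talking to Vanar Mainnet by calling `eth_chainId` and comparing the hex-encoded result with 2040 (`0x7f8`). No RPC endpoint URL is given in this article, so the code only builds the request body and parses a response. The sample responses are constructed for illustration, not captured from a live node.

```python
import json

VANAR_MAINNET_CHAIN_ID = 2040  # Chain ID listed for Vanar Mainnet


def chain_id_request(request_id: int = 1) -> str:
    """Build a standard Ethereum JSON-RPC request body for eth_chainId."""
    return json.dumps({"jsonrpc": "2.0", "method": "eth_chainId", "params": [], "id": request_id})


def is_vanar_mainnet(response_body: str) -> bool:
    """Parse an eth_chainId response; `result` is a hex-encoded quantity like "0x7f8"."""
    result = json.loads(response_body)["result"]
    return int(result, 16) == VANAR_MAINNET_CHAIN_ID


# Constructed sample responses (not from a real node).
vanar_response = '{"jsonrpc": "2.0", "id": 1, "result": "0x7f8"}'
ethereum_response = '{"jsonrpc": "2.0", "id": 1, "result": "0x1"}'

print(hex(VANAR_MAINNET_CHAIN_ID))  # 0x7f8
print(is_vanar_mainnet(vanar_response), is_vanar_mainnet(ethereum_response))  # True False
```

Checking the chain ID before signing is worthwhile because EIP-155 binds transaction signatures to a chain ID, which is what prevents a transaction signed for one network from being replayed on another.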

The token side of Vanar is not separate from the project's identity; it is part of how the ecosystem stays coherent across products and activity. VANRY also exists as an ERC-20 contract on Ethereum: Etherscan labels VANRY as the token of the Vanar blockchain, shows the token contract address, and notes that it uses 18 decimals. In the verified contract source, the code also includes a MAX_SUPPLY constant set to 2,400,000,000.
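With 18 decimals, ERC-20 balances are stored as integers in the smallest unit, so 1 VANRY corresponds to 10^18 base units. The sketch below converts human-readable amounts exactly with Python's `decimal` module. It assumes the MAX_SUPPLY constant counts whole tokens; if the contract already expresses it in base units, the scaling step would not apply, so check the verified source before relying on either reading. The `to_base_units` helper is hypothetical.

```python
from decimal import Decimal, localcontext

DECIMALS = 18  # reported by Etherscan for the VANRY ERC-20 contract
MAX_SUPPLY_TOKENS = 2_400_000_000  # assumption: MAX_SUPPLY is written in whole tokens


def to_base_units(amount: str, decimals: int = DECIMALS) -> int:
    """Convert a decimal token amount (given as a string) into integer base units."""
    with localcontext() as ctx:
        ctx.prec = 80  # enough significant digits for any uint256 value (at most 78 digits)
        scaled = Decimal(amount) * (Decimal(10) ** decimals)
    # Reject amounts finer than the smallest unit instead of silently rounding them.
    if scaled != scaled.to_integral_value():
        raise ValueError("amount has more fractional digits than the token supports")
    return int(scaled)


max_supply_base_units = to_base_units(str(MAX_SUPPLY_TOKENS))
print(max_supply_base_units == 2_400_000_000 * 10**18)  # True
print(to_base_units("1.5"))  # 1500000000000000000
```

Parsing from strings rather than floats avoids binary rounding errors, which is why wallets and contracts treat token amounts as integers in base units.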

So what does VANRY do in practice inside the Vanar story? It functions as the network fuel for transactions and activity, and it is also the connective asset across the ecosystem experiences that Vanar has historically leaned into, such as gaming and entertainment. That consumer angle is not random branding; it is a stress test. If a chain can handle constant high-frequency interactions from consumer-style apps, it is more likely to handle payment-style flows and real-world asset activity where reliability and cost predictability matter.
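In EVM terms, "network fuel" means every transaction pays gas: the fee is the gas consumed multiplied by the price paid per unit of gas, denominated in the native token. The sketch below shows that calculation. The 21,000 gas figure is the standard cost of a plain value transfer on EVM chains; the 1 gwei gas price is a placeholder rather than a quoted Vanar price, and treating native VANRY as having 18 decimals is an assumption based on EVM convention. Vanar's own documentation is the place to confirm its actual fee schedule.

```python
from decimal import Decimal

GWEI = 10**9  # 1 gwei = 10^9 of the smallest native unit
NATIVE_DECIMALS = 18  # assumption: native VANRY follows the usual EVM 18-decimal convention


def fee_in_base_units(gas_used: int, gas_price: int) -> int:
    """Total fee = gas consumed x price per unit of gas (both in base units)."""
    return gas_used * gas_price


def to_tokens(base_units: int, decimals: int = NATIVE_DECIMALS) -> Decimal:
    """Express an integer amount of base units in whole tokens."""
    return Decimal(base_units) / (Decimal(10) ** decimals)


TRANSFER_GAS = 21_000  # intrinsic gas for a plain value transfer on EVM chains
PLACEHOLDER_GAS_PRICE = 1 * GWEI  # hypothetical price, not a Vanar figure

fee = fee_in_base_units(TRANSFER_GAS, PLACEHOLDER_GAS_PRICE)
print(fee, to_tokens(fee))  # 21000000000000 0.000021
```

Because the fee is linear in both factors, cost predictability for high-frequency consumer apps comes down to how stable the gas price is, not just how cheap it is on a given day.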

When you ask what they are doing behind the scenes, the clearest answer is that Vanar is trying to turn the intelligence layer into the product itself. The public roadmap language on their site keeps pointing to Axon and Flows as the next steps. Neutron gives you structured memory, Kayon gives you reasoning over that memory, and Axon and Flows are framed as the layers that will push automation and industry applications closer to plug-and-play. If those ship as real tooling, Vanar becomes less about narrative and more about a usable stack for teams who need context, verification, and automation to live together.

For recent project signals, the most reliable public trail is what the official site is emphasizing right now. The homepage and product pages keep spotlighting the same structure and the same priorities: AI-first infrastructure, semantic memory, reasoning, and upcoming automation and application layers. The team also continues to expand the product surface around Neutron, including a dedicated My Neutron page that frames portable, privacy-controlled memory across tools, with optional anchoring on Vanar Chain.

Vanar is trying to win a different game. Instead of competing only on TPS or hype cycles, they are positioning themselves around something practical: data that stays meaningful, logic that can be verified, and workflows that can be automated without breaking trust. If Neutron delivers verifiable compression at scale and Kayon proves it can query and reason across that memory reliably, then Vanar has a clean lane into payment infrastructure, tokenized assets, and consumer scale apps that need more than basic transfers. The next real checkpoint is execution on Axon and Flows, because that is where the stack either turns into a builder platform or stays a strong idea.