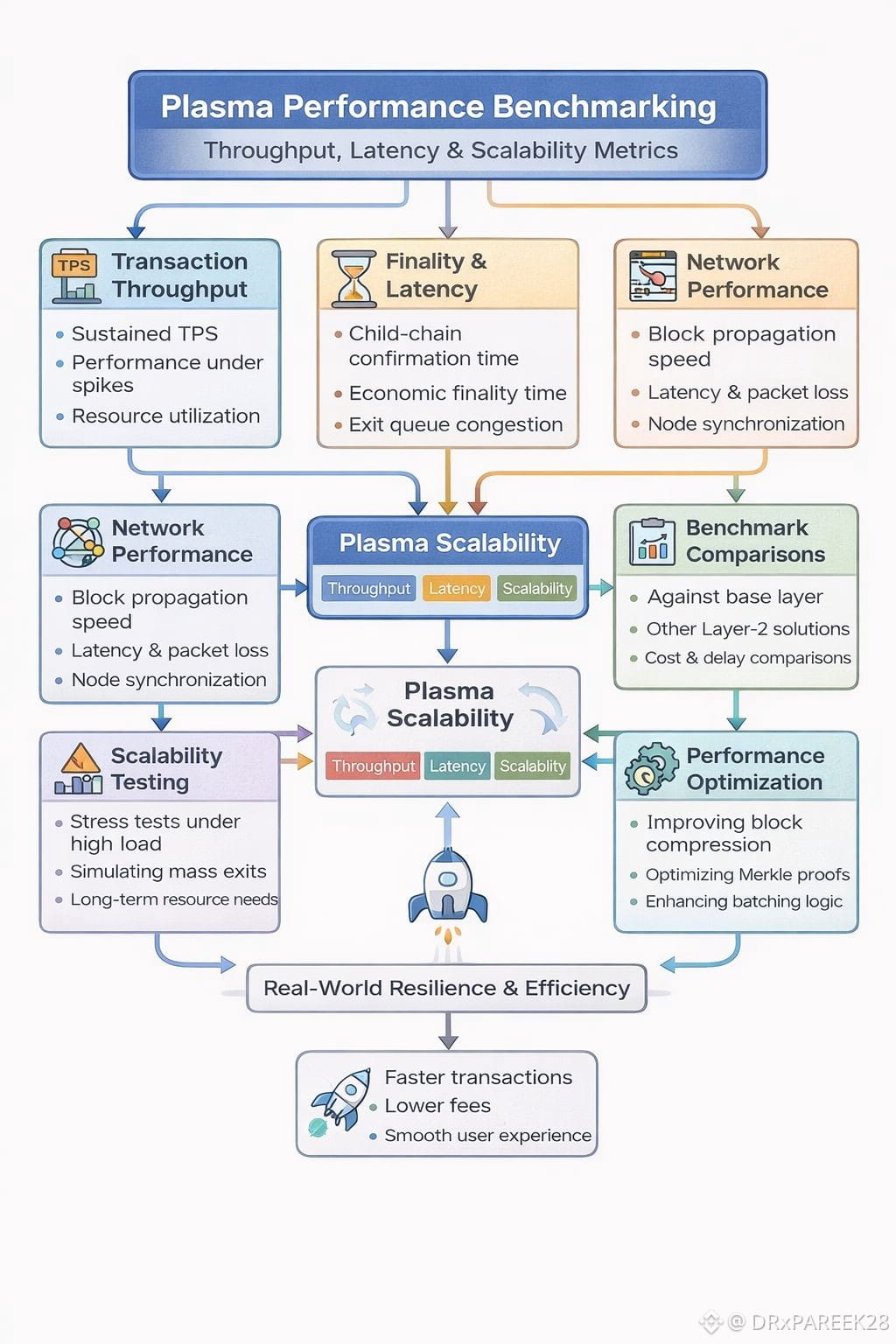

When people talk about Plasma in blockchain, they often stop at “it’s a Layer-2 scaling solution.” But the real story begins when we measure it. Performance benchmarking is where theory meets pressure. Throughput, latency, and scalability are not abstract buzzwords; they determine whether Plasma can survive real traffic, real users, and real economic activity.

1. Transaction Throughput: Can Plasma Handle Volume?

Throughput measures how many transactions the system can process per second (TPS). In a Plasma architecture, child chains batch thousands of transactions before committing a compact summary, typically a Merkle root of the block, to the root chain. This batching mechanism is the core scalability advantage.

But benchmarking throughput is not just about peak TPS numbers. It involves:

Sustained TPS under continuous load

Performance during traffic spikes

Resource utilization (CPU, memory, bandwidth)

A realistic benchmark tests Plasma under stress scenarios—imagine a DeFi protocol launch or NFT minting event. The goal isn’t just to hit a high TPS number once. It’s to maintain stable throughput without increasing failure rates or creating bottlenecks at exit mechanisms.
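To make the difference between peak and sustained throughput concrete, here is a minimal sketch that derives both from a list of confirmation timestamps. The function name `tps_profile` and the one-second window are illustrative assumptions, not part of any Plasma tooling.

```python
from collections import Counter

def tps_profile(commit_times, window=1.0):
    """Return (peak_tps, sustained_tps) from confirmation timestamps in seconds.

    Hypothetical helper: peak is the busiest fixed window, sustained is the
    average over the whole run, including quiet windows.
    """
    if not commit_times:
        return 0.0, 0.0
    start = min(commit_times)
    # Assign each transaction to a fixed-size window counted from the first one.
    buckets = Counter(int((t - start) // window) for t in commit_times)
    n_windows = max(buckets) + 1
    peak = max(buckets.values()) / window
    sustained = len(commit_times) / (n_windows * window)
    return peak, sustained

# Example: a burst in the first second, then a trickle.
peak, sustained = tps_profile([0.0, 0.1, 0.2, 0.3, 1.5, 2.5, 2.6])
print(f"peak={peak:.2f} TPS, sustained={sustained:.2f} TPS")
```

A large gap between the two numbers is the signal to look for: the system hit a high figure once but did not hold it.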

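The critical insight: Plasma throughput scales horizontally. Adding more child chains increases capacity, and because each chain posts only periodic commitments to the root chain, base-layer load grows with the number of chains rather than with the number of transactions. That holds only if commitment frequency and size are properly optimized.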
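The horizontal-scaling claim can be checked with simple arithmetic. The sketch below assumes a deliberately simplified model in which every child chain posts one commitment per interval and the root chain can absorb a fixed number of commitments per second. All parameter names and figures are hypothetical.

```python
def aggregate_capacity(n_chains, tps_per_chain, commit_interval_s, root_commit_budget_per_s):
    """Return (usable_tps, root_saturated) under a simplified commitment model.

    Assumption: each child chain posts exactly one commitment every
    commit_interval_s seconds, and the root chain accepts at most
    root_commit_budget_per_s commitments per second.
    """
    commits_per_s = n_chains / commit_interval_s
    root_saturated = commits_per_s > root_commit_budget_per_s
    # Largest number of chains the root chain can serve within its budget.
    max_chains = int(root_commit_budget_per_s * commit_interval_s)
    usable_chains = min(n_chains, max_chains)
    return usable_chains * tps_per_chain, root_saturated

print(aggregate_capacity(10, 500, 60, 0.5))  # well within the root-chain budget
print(aggregate_capacity(50, 500, 60, 0.5))  # more chains than the budget allows
```

In this model capacity grows linearly with the number of chains until root-chain commitments become the limit, which is the point a benchmark should try to locate.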

2. Finality Time & Latency: Speed vs Certainty

Latency measures how fast a transaction is confirmed within the child chain. Finality time measures how long it takes before that transaction is irreversible, especially considering the challenge period required in Plasma designs.

In benchmarking:

Child-chain confirmation may occur in seconds.

Economic finality may require waiting for challenge windows.

Exit queue congestion must be measured during stress tests.

This dual-layer latency creates a tradeoff. Plasma can feel instant in user experience but still rely on longer dispute resolution at the root chain level. Benchmarking must therefore separate:

Perceived user confirmation time

True finality on the root chain, once the challenge window has passed

Real-world scalability depends on minimizing the gap between the two.
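One way to keep the two measurements apart is to record three timestamps per transaction and report them separately. This is a sketch under a simplifying assumption: finality is modeled as root-chain commitment time plus a fixed challenge period, which real Plasma designs may define differently. The `TxTiming` type and its field names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class TxTiming:
    submitted: float        # when the user sent the transaction (seconds)
    child_confirmed: float  # when the child chain included it
    root_committed: float   # when the containing block was committed to the root chain

def latency_breakdown(tx, challenge_period_s):
    """Return (perceived, finality, gap) in seconds for one transaction."""
    perceived = tx.child_confirmed - tx.submitted
    # Assumed model: finality = time to root commitment + full challenge period.
    finality = (tx.root_committed - tx.submitted) + challenge_period_s
    return perceived, finality, finality - perceived

# Example with a 7-day challenge period (an illustrative value).
tx = TxTiming(submitted=0.0, child_confirmed=2.0, root_committed=600.0)
print(latency_breakdown(tx, challenge_period_s=7 * 24 * 3600))
```

Reporting the gap as its own metric makes it harder to quote child-chain confirmation time as if it were finality.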

3. Network Performance: The Hidden Variable

Even the most efficient architecture fails if network propagation is weak. Plasma benchmarking includes:

Block propagation delay

Node synchronization speed

Validator communication overhead

High throughput systems amplify network weaknesses. If blocks propagate slowly, forks increase. If nodes struggle to sync, decentralization suffers.

Performance tests simulate distributed geographic nodes to measure:

Packet loss impact

Latency between regions

Resilience during partial node failure

Scalability is not just about transaction math. It’s about distributed coordination efficiency.
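Propagation results are usually more informative as percentiles than as averages, because a few slow regions dominate fork risk. The sketch below computes nearest-rank percentiles over per-node receive times. The region names and millisecond values are made up for illustration.

```python
import math

def propagation_delays(produced_at_ms, received_at_ms):
    """Delay per node between block production and receipt, in milliseconds."""
    return [t - produced_at_ms for t in received_at_ms.values()]

def percentile(values, p):
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]

# Hypothetical receive times for one block produced at t=1000 ms.
received = {"eu-west": 1120, "us-east": 1300, "ap-south": 1550, "sa-east": 1800}
delays = propagation_delays(1000, received)
print("p50:", percentile(delays, 50), "ms")
print("p95:", percentile(delays, 95), "ms")
```

Running the same calculation while injecting packet loss or taking nodes offline shows how the tail moves, which is the figure that matters for fork rate.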

4. Scalability Testing: Beyond Ideal Conditions

True scalability testing pushes Plasma beyond comfortable limits. This includes:

Stress testing under 2× and 5× expected traffic

Simulating mass exit events

Measuring memory growth over long uptime periods

Monitoring gas cost impact on root-chain commitments

A robust benchmark doesn’t just show that Plasma works. It shows how it fails—and how gracefully it recovers.

For example, if exit queues grow faster than they can be processed under congestion, optimization strategies must focus on batching exits or prioritizing economically significant withdrawals.
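A mass exit can be explored with a very small queue model before running a full stress test. The sketch below assumes a fixed number of exits can be processed per root-chain block, which is a simplification. The arrival pattern and capacity are invented numbers.

```python
def simulate_exit_queue(arrivals_per_block, exits_processed_per_block):
    """Return the exit backlog after each root-chain block.

    Assumed model: exits arrive, then up to a fixed number are processed
    in the same block; anything left over waits for the next block.
    """
    backlog = 0
    history = []
    for arrivals in arrivals_per_block:
        backlog += arrivals
        backlog -= min(backlog, exits_processed_per_block)
        history.append(backlog)
    return history

# Two blocks of panic exits, then traffic returns to normal.
print(simulate_exit_queue([5, 50, 50, 5, 5], exits_processed_per_block=20))
```

The shape of the resulting curve shows both sides of the benchmark: how fast the backlog builds during the spike and how many blocks it takes to drain afterwards.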

5. Benchmark Comparisons: Context Matters

Performance numbers mean nothing without comparison. Plasma should be benchmarked against:

Base layer transaction capacity

Other Layer-2 models

Sidechain architectures

Key metrics to compare:

Cost per transaction

Confirmation delay

Maximum sustainable TPS

Resource cost per validator

The goal isn’t to “win” a TPS race. It’s to demonstrate efficiency per unit of trust and security.
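Cost per transaction is the easiest of these metrics to compare unfairly, because the root-chain cost of a batch is shared across every transaction in it while exits add their own cost. A rough sketch of the amortization follows, with hypothetical gas figures and parameter names.

```python
def amortized_gas_per_tx(commit_gas, txs_per_commit, exit_gas=0, exits_per_commit=0):
    """Root-chain gas attributable to each child-chain transaction.

    Assumption: one commitment covers txs_per_commit transactions, and
    exits_per_commit exits (each costing exit_gas) occur per commitment period.
    """
    total_gas = commit_gas + exit_gas * exits_per_commit
    return total_gas / txs_per_commit

# Commitment cost alone, then with a small number of exits included.
print(amortized_gas_per_tx(100_000, 2_000))
print(amortized_gas_per_tx(100_000, 2_000, exit_gas=200_000, exits_per_commit=20))
```

Quoting only the first figure hides the exit cost, so a fair comparison against other Layer-2 models should state which of the two is being reported.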

6. Performance Optimization Strategies

Benchmarking naturally reveals weak points. Optimization strategies may include:

Improving block compression algorithms

Optimizing Merkle proof generation

Enhancing batching logic

Reducing exit verification costs

Parallelizing validation processes

Small architectural improvements can yield measurable performance gains. For example, reducing proof size by 20% cuts the bandwidth spent on transmitting proofs by the same proportion and leaves less data to hash during verification.
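The effect of proof size is easy to estimate. The sketch below assumes a plain binary Merkle tree with 32-byte hashes, where an inclusion proof carries one sibling hash per tree level. Plasma variants that use sparse Merkle trees or other structures will have different sizes.

```python
def merkle_proof_bytes(n_leaves, hash_bytes=32):
    """Size of an inclusion proof in a binary Merkle tree over n_leaves (n_leaves >= 1).

    The proof holds one sibling hash per level, i.e. ceil(log2(n_leaves)) hashes.
    """
    depth = (n_leaves - 1).bit_length()  # exact integer ceil(log2(n_leaves))
    return depth * hash_bytes

def proof_bandwidth_bytes(n_proofs, n_leaves, hash_bytes=32):
    """Total bytes needed to transmit n_proofs inclusion proofs."""
    return n_proofs * merkle_proof_bytes(n_leaves, hash_bytes)

print(merkle_proof_bytes(1024))            # proof size for a 1,024-transaction block
print(proof_bandwidth_bytes(10_000, 1024)) # bandwidth for 10,000 such proofs
```

Because total bandwidth is linear in proof size, any percentage saved per proof carries over directly to the aggregate figure.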

The Human Layer of Performance

Ultimately, Plasma performance benchmarking is about user experience. Numbers translate into:

Faster payments

Lower fees

Reliable transaction execution

Reduced network congestion

A scalable system must feel invisible. Users should not worry about challenge periods or exit queues. They should experience smooth interaction—even when thousands of others are active simultaneously.

Performance benchmarking transforms Plasma from a conceptual scaling model into a measurable infrastructure layer. Throughput tests show capacity. Latency metrics reveal responsiveness. Scalability trials expose structural resilience. And optimization turns those findings into concrete improvements.

In the end, Plasma is not judged by architecture diagrams but by how it performs under pressure. That is where scalability becomes reality.