The first time I proved something without showing it, I thought I was lying.

Not maliciously. Just... wrong.

Cryptography has that effect. You generate a proof, some zk-snarky thing the textbooks call "succinct," and you expect the verifier to ask for more. Demand the whole picture.

Instead: accepted. Verified. Moved on.

I kept waiting for the catch. The moment where someone said, "actually, we need to see the balance after all."

It didn't come.

I blamed my own misunderstanding. Thought maybe Moonlight, the visible rail, was leaking more than I realized.

Checked the transaction. No. Just the credential hash. The jurisdiction flag. The compliance timestamp.

The actual amount, the counterparty, the purpose: still buried in Phoenix. Still private. But provably real.
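To make the split concrete, here is a toy sketch in Python. The field names are hypothetical, taken from the lists above rather than from Dusk's actual transaction format. A salted SHA-256 hash stands in for the zero-knowledge machinery a shielded model like Phoenix relies on. It hides the private fields and binds the sender to them, but unlike a real proof it cannot show the chain that those fields satisfy any rule.

```python
import hashlib
import json
import secrets

# Hypothetical field names, mirroring the post rather than Dusk's real schema.
PUBLIC_FIELDS = ("credential_hash", "jurisdiction", "compliance_timestamp")
PRIVATE_FIELDS = ("amount", "counterparty", "purpose")


def commit(private: dict, salt: bytes) -> str:
    """Salted SHA-256 over a canonical JSON encoding of the private fields."""
    payload = json.dumps({k: private[k] for k in PRIVATE_FIELDS}, sort_keys=True)
    return hashlib.sha256(salt + payload.encode()).hexdigest()


def build_transaction(public: dict, private: dict) -> tuple[dict, bytes]:
    """Return (what the chain sees, the salt the sender keeps secret)."""
    salt = secrets.token_bytes(32)
    visible = {k: public[k] for k in PUBLIC_FIELDS}
    visible["commitment"] = commit(private, salt)
    return visible, salt


def opens_to(commitment: str, private: dict, salt: bytes) -> bool:
    """Check a later, voluntary opening of the commitment."""
    return secrets.compare_digest(commitment, commit(private, salt))


tx, salt = build_transaction(
    {"credential_hash": "ab12", "jurisdiction": "NL", "compliance_timestamp": 1700000000},
    {"amount": 250_000, "counterparty": "fund-7", "purpose": "acquisition"},
)
print(sorted(tx))  # the public fields plus "commitment"; no amount, counterparty, or purpose
```

The visible record carries only the three public fields and an opaque digest. Anyone holding the salt can later prove what the digest contains; nobody else learns anything from it.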

That distinction matters more than I expected.

"Selective disclosure," the documentation says.

I hate the phrase. Sounds like a settings menu. Privacy toggles.

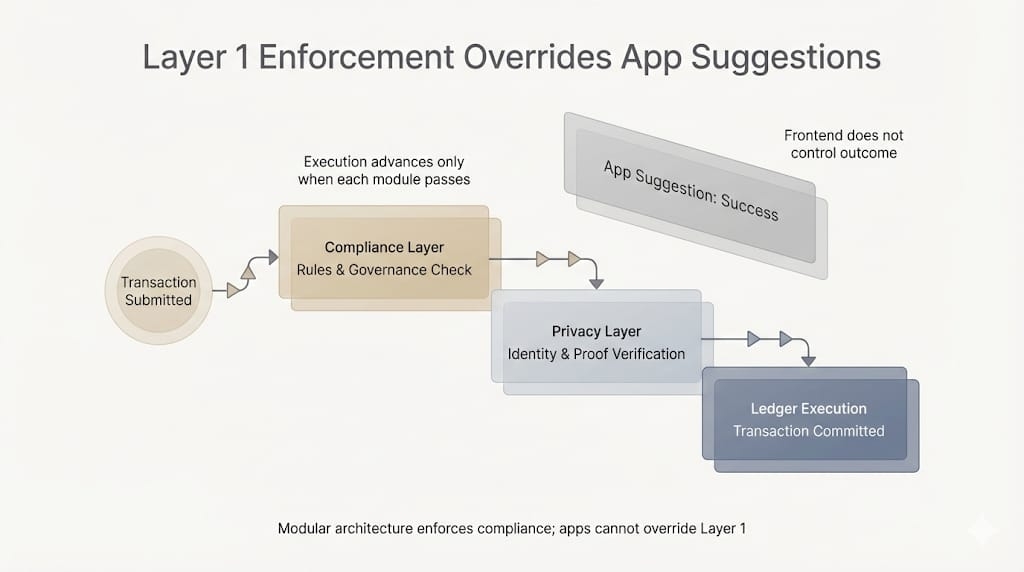

What Dusk actually does is... binding. The rules about what must be seen and what can stay hidden are encoded before anyone submits anything. Not a choice made after. Not a negotiation with regulators six months later.

The chain enforces the boundary.

Violate it, by revealing too little for compliance or too much for privacy, and the transaction doesn't fail gracefully.

It doesn't move.
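That boundary amounts to an exact-match rule: the set of fields shown must equal the set the policy requires, no more and no less. Here is a minimal Python sketch of that rule. It assumes disclosure can be modeled as a set of field names. `DisclosurePolicy`, `check_disclosure`, and `Rejected` are hypothetical names, not part of any Dusk API.

```python
from dataclasses import dataclass


class Rejected(Exception):
    """Raised when a transaction's disclosure does not match the policy."""


@dataclass(frozen=True)
class DisclosurePolicy:
    """Hypothetical rule set, fixed before any transaction is submitted."""
    must_show: frozenset


def check_disclosure(policy: DisclosurePolicy, disclosed: dict) -> None:
    """Accept only an exact match: nothing missing, nothing extra."""
    shown = frozenset(disclosed)
    missing = policy.must_show - shown
    extra = shown - policy.must_show
    if missing:
        raise Rejected(f"too little for compliance: {sorted(missing)}")
    if extra:
        raise Rejected(f"too much for privacy: {sorted(extra)}")


policy = DisclosurePolicy(frozenset({"credential_hash", "jurisdiction", "compliance_timestamp"}))
# Passes silently: exactly the required fields are shown.
check_disclosure(policy, {"credential_hash": "ab12", "jurisdiction": "NL", "compliance_timestamp": 1700000000})
```

The policy is a frozen dataclass on purpose. The sketch gives callers no way to loosen it per transaction, which is the point the post is making.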

I watched a DuskTrade settlement for a tokenized building. Real estate, the kind where jurisdictions squabble over beneficial ownership and everyone pretends to know who actually owns what.

The Phoenix side hid the investor's total exposure across other assets.

Moonlight exposed only that the specific building wasn't already pledged in another structure. Nothing else.

The proof carried the weight. The verification didn't require trust in a person.

Just math. Just the Layer 1 state, DuskDS, holding the line.

I tried to break the boundary. Constructed a proof that claimed compliance while smuggling extra data in the metadata.

The Rusk VM caught it.

Not because it's smart. Because the rules are literal. The credential validation happens at execution time, which sounds like a technical detail until you realize it means no after-the-fact forgiveness.

No "we didn't know."

The chain knew. The chain refused.
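"No after-the-fact forgiveness" is, in spirit, atomic validation: check everything when the transaction executes, and write nothing unless every check passes. The toy Python sketch below works under that assumption. `Ledger`, `execute`, and `SCHEMA` are hypothetical names, not the Rusk VM's interface, and a real VM would also be verifying proofs rather than only comparing key sets.

```python
from dataclasses import dataclass, field

# Hypothetical fixed schema: the only keys a transaction may carry.
SCHEMA = frozenset({"credential_hash", "jurisdiction", "compliance_timestamp", "commitment"})


@dataclass
class Ledger:
    """Toy chain state: accepted transactions plus the credentials it recognizes."""
    valid_credentials: frozenset
    accepted: list = field(default_factory=list)

    def execute(self, tx: dict) -> bool:
        """Validate at execution time; state changes only if every check passes."""
        if frozenset(tx) != SCHEMA:  # catches smuggled keys such as "metadata"
            return False
        if tx["credential_hash"] not in self.valid_credentials:
            return False
        self.accepted.append(tx)
        return True


ledger = Ledger(valid_credentials=frozenset({"ab12"}))
honest = {"credential_hash": "ab12", "jurisdiction": "NL",
          "compliance_timestamp": 1700000000, "commitment": "00" * 32}
smuggler = dict(honest, metadata="extra data")
print(ledger.execute(smuggler), len(ledger.accepted))  # False 0
print(ledger.execute(honest), len(ledger.accepted))    # True 1
```

A rejected transaction leaves `accepted` exactly as it was. There is no partial write to roll back, and no later step can undo the rejection.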

"Transparent," I wrote, then scratched it out.

Wrong word. Suggests glass walls, everything visible.

This is more like... directed light. Beams where they're needed, darkness where it's earned.

Regulators don't get keys to the vault. They get proof the vault is full.

The difference is trust versus verification. Most chains ask you to trust their privacy promises.

Dusk makes you verify its disclosure boundaries.

The operator running the node I used, some validator with enough Dusk staked that misbehaving would hurt, didn't even see what I was trading.

Just that I was trading. Just that the proof checked out.

Their accountability is economic, not informational.

Skin in the game, not eyes in the transaction.

I kept thinking about accidents. The way most systems leak: a misconfigured API, a rushed audit, a regulator demanding "just a little more visibility" until there's nothing left to hide.

This system refuses accidents by refusing optionality.

Disclosure by design means the design is the only way. Change what must be seen? Upgrade the chain. Convince the committee. Wait for consensus.

No shortcuts through human convenience.

The RWA settled. Eleven seconds.

The building changed digital hands, the investor's privacy held, the regulator's requirement was met, and I sat there wondering why this felt so unfamiliar.

Then I realized: I wasn't used to systems that keep promises without being watched.

Dusk doesn't disclose by accident.

It discloses by holding the line between what must be proven and what must be protected.

Which, I guess, is the same thing.