I was sorting through old photo albums the other day, flipping pages of family snapshots from years ago, and it hit me how much we curate what we share—some memories stay tucked away, private, while others get posted online. It's a personal filter we all apply, but it made me reflect on how privacy isn't absolute; it's often negotiated, depending on who's asking.

That reflection stuck with me as I logged into Binance Square and tackled the CreatorPad task on Regulatory Reporting Without Data Leakage Using Dusk. The Zero-Knowledge Compliance section detailed how ZK proofs let a project submit verifiable reports to authorities without exposing the underlying transaction details. That mechanism, with its emphasis on selective auditability, unsettled me. It wasn't just technical jargon; it crystallized a doubt I'd been ignoring: privacy tech can serve oversight as much as it protects users.

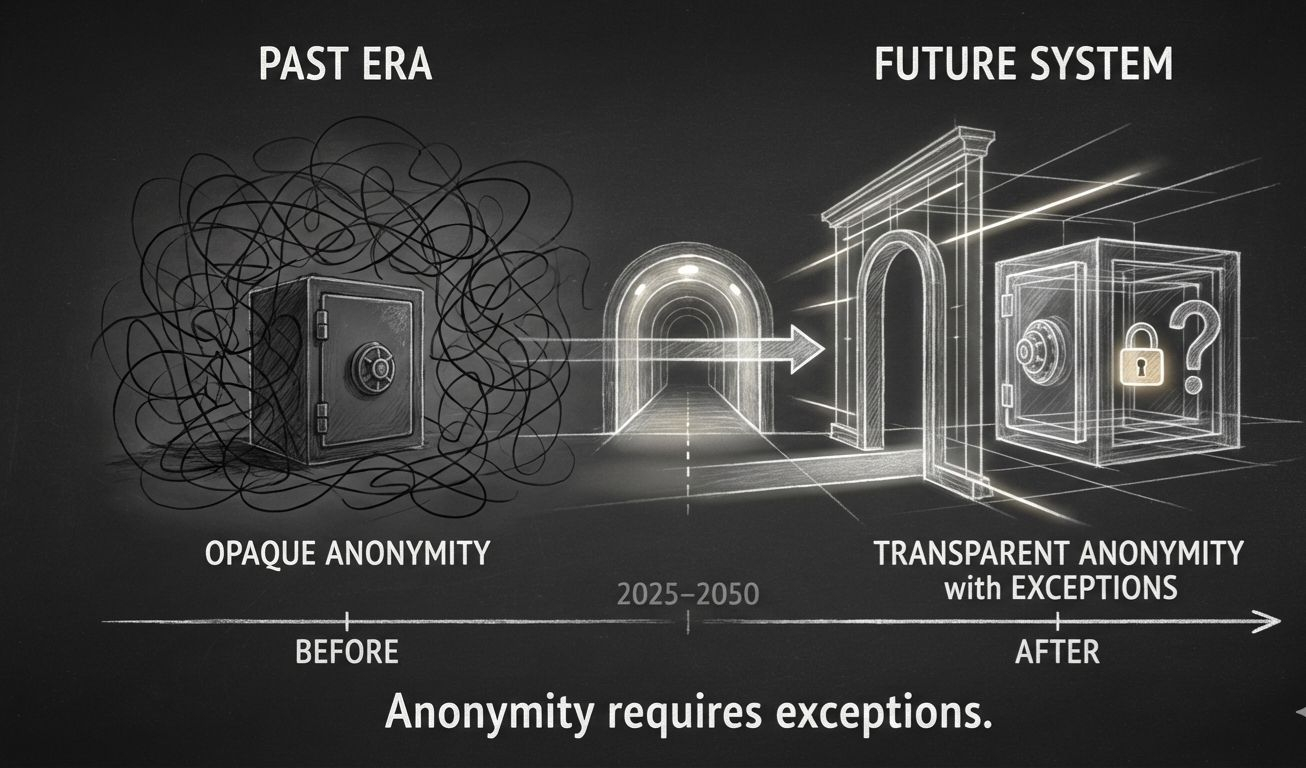

Simply put, the cherished idea in crypto that privacy tools grant unbreakable anonymity against any authority seems overstated, perhaps even naive. If a system is built to report to regulators without leaking data, its privacy was designed with exceptions in mind.

Pushing this further, it's not confined to one protocol. The crypto narrative often romanticizes privacy as a shield against surveillance, and that image fuels much of decentralization's appeal. But look at actual implementations and many include features for conditional transparency: think mixers or shielded pools that keep a disclosure path open for audits, such as viewing keys a holder can hand to an auditor. That creates a tension. On one hand, it helps projects navigate legal landscapes and avoid outright bans; on the other, it risks normalizing access points for regulators, turning privacy into a feature that's toggled rather than inherent.

I've observed the same pattern in broader discussions around data protection, where what starts as user empowerment evolves into compliant frameworks that prioritize institutional trust over individual sovereignty. I'm critical of this because it raises the question of whether the push for mainstream adoption is diluting the original promise of crypto as a haven from oversight. If privacy mechanisms are engineered to prove compliance without full disclosure, they might inadvertently train the ecosystem to accept monitored anonymity, where freedom is granted only insofar as it doesn't threaten the status quo.

Dusk illustrates this balance plainly. The task highlighted its use of ZK proofs for reporting, where transactions remain confidential yet provable to meet regulatory needs. It's a pragmatic approach, fitting for financial applications, but it underscores that privacy here is layered rather than absolute, with tools like selective disclosure keeping data hidden until disclosure is required. This isn't unique to Dusk, but seeing it framed around leakage prevention in the task brought the compromise home: convenient reporting comes at the expense of unyielding secrecy.
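A rough way to picture selective disclosure is a commit-and-reveal scheme. The sketch below is only an illustration under loud assumptions: it uses salted SHA-256 commitments from Python's standard library, it is not a zero-knowledge proof, and it is not how Dusk implements anything. The function names (`commit_record`, `disclose`, `verify`) and the sample record are hypothetical. Every field is committed up front, and the holder later opens exactly one field to an auditor while the rest stay hidden.

```python
import hashlib
import hmac
import secrets


def commit(value: str, salt: bytes) -> str:
    """Salted SHA-256 commitment to a single field value."""
    return hashlib.sha256(salt + value.encode("utf-8")).hexdigest()


def commit_record(record: dict[str, str]) -> tuple[dict[str, str], dict[str, bytes]]:
    """Commit to every field separately; the salts stay with the holder."""
    salts = {name: secrets.token_bytes(16) for name in record}
    commitments = {name: commit(value, salts[name]) for name, value in record.items()}
    return commitments, salts


def disclose(record: dict[str, str], salts: dict[str, bytes], field: str) -> dict:
    """Open one field for an auditor; the other fields are not included."""
    return {"field": field, "value": record[field], "salt": salts[field]}


def verify(commitments: dict[str, str], opening: dict) -> bool:
    """Auditor side: recompute the commitment and compare it to the published one."""
    expected = commitments[opening["field"]]
    actual = commit(opening["value"], opening["salt"])
    return hmac.compare_digest(expected, actual)


if __name__ == "__main__":
    # Hypothetical transaction record, for illustration only.
    tx = {"sender": "alice", "receiver": "bob", "amount": "250"}
    commitments, salts = commit_record(tx)   # commitments can be published
    opening = disclose(tx, salts, "amount")  # only the amount is revealed
    print(verify(commitments, opening))
```

Even this toy version shows the point that bothered me: the hidden fields stay hidden only until someone with authority asks for an opening. A real ZK system goes further, since it can prove a statement about a value (say, that an amount is under a limit) without revealing the value itself, but the door for disclosure is still part of the design.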

This observation extends to how crypto intersects with real-world finance, where similar trade-offs appear in tokenized assets and DeFi protocols. The belief in total privacy drives enthusiasm, but ignoring these built-in concessions means overlooking a fundamental shift. I find that disturbing, because it suggests the space is maturing into something more integrated and less rebellious.

When does designed-in compliance stop being a feature and start eroding the essence of privacy?