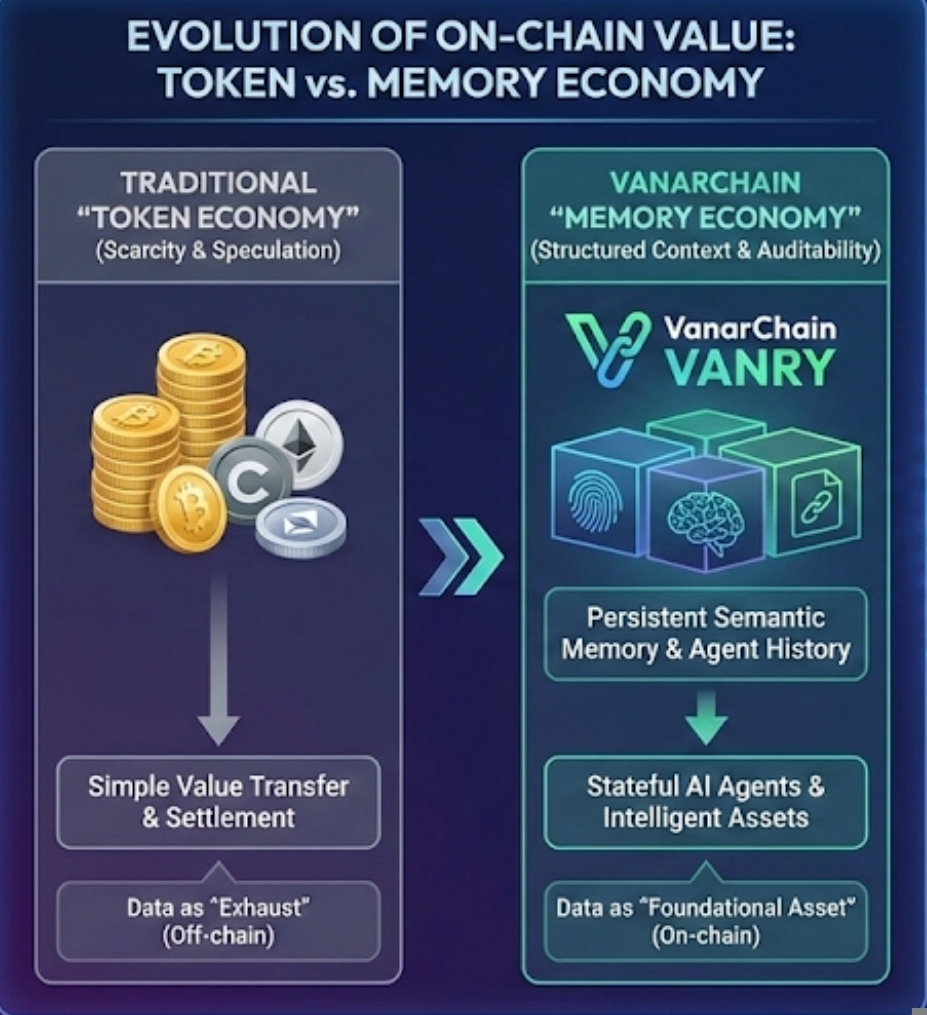

The first time I started thinking about data as an asset, not just exhaust, it felt slightly uncomfortable. We’ve spent years talking about tokens as the thing you own, stake, trade, speculate on. Data was background noise. Useful, yes. Valuable, obviously. But not native. Not something the chain itself treated as a first-class economic object.

That’s where the idea of a memory economy starts to feel different. And when I first looked closely at what VanarChain is building, what struck me wasn’t speed or branding. It was this quiet shift underneath. The suggestion that stored intelligence, structured memory, and contextual data might become as foundational as tokens themselves.

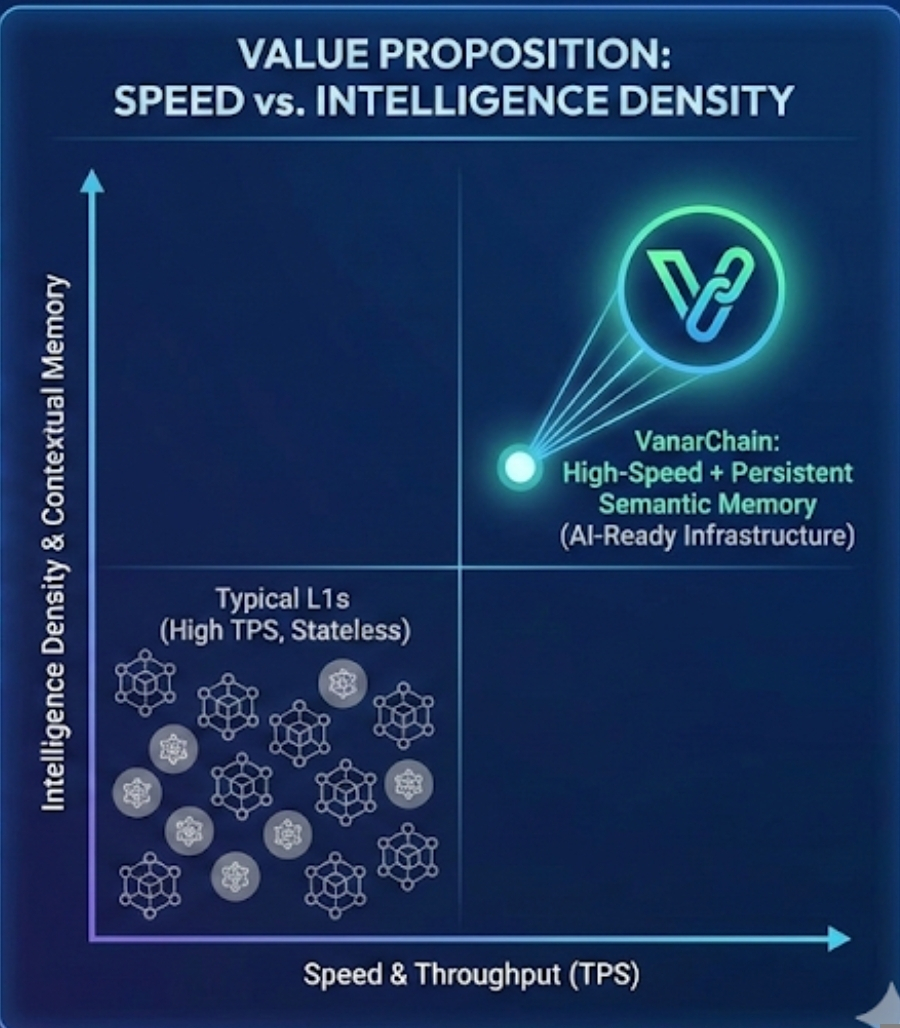

On the surface, VanarChain is positioning itself as AI-native infrastructure. That phrase gets thrown around a lot right now. As of early 2026, almost every new Layer 1 mentions AI somewhere in its roadmap. But when you look at what most chains actually do, they move tokens from one address to another and execute predefined logic. Fast settlement. Deterministic contracts. Clear accounting.

Vanar’s claim is different. It talks about semantic compression, persistent memory, agentic flows. Strip away the language and what it means is this: instead of just recording transactions, the chain stores structured context that machines can reference and reason over later. That changes the texture of what is being saved.

Here’s why that matters economically. Today, most on-chain value is tied to the scarcity of tokens. Bitcoin has a capped supply of 21 million. Since EIP-1559, Ethereum burns the base fee of every transaction. Scarcity drives price narratives. But data has been treated as an off-chain externality. AI models are trained off-chain. Memory sits in centralized databases. The chain only settles outcomes.

If Vanar’s architecture holds, memory itself moves into the base layer. That sets off a chain of effects. If memory is on-chain, it becomes auditable. If it is auditable, it can be trusted. If it can be trusted, it can carry economic weight.
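To make the "auditable" step concrete, here is a minimal sketch of a hash-chained memory log, assuming a design where every stored entry commits to the hash of the entry before it. This is a generic tamper-evidence technique, not Vanar’s actual storage format, and `MemoryLog`, `append`, and `verify` are hypothetical names used only for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(payload: dict, prev_hash: str) -> str:
    # Canonical JSON so the same payload always produces the same bytes.
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


@dataclass
class MemoryLog:
    """Hypothetical append-only log: each entry commits to the one before it."""
    entries: list = field(default_factory=list)

    def append(self, payload: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        h = _digest(payload, prev_hash)
        self.entries.append({"payload": payload, "prev": prev_hash, "hash": h})
        return h

    def verify(self) -> bool:
        # Recompute every link; an edited payload breaks the chain at that entry.
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if _digest(entry["payload"], prev_hash) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = MemoryLog()
log.append({"agent": "a1", "note": "agreed fee 0.5%"})
log.append({"agent": "a1", "note": "paid invoice 17"})
print(log.verify())  # True

log.entries[0]["payload"]["note"] = "agreed fee 5%"  # silently rewrite history
print(log.verify())  # False
```

The point of the sketch is the order of the argument: trust here does not come from believing whoever stores the data, it comes from anyone being able to recompute the hashes and notice a rewrite.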

Let’s ground this in numbers. As of February 2026, the global AI market is estimated to exceed 300 billion dollars annually. Meanwhile, the entire crypto market cap fluctuates around 1.8 to 2.2 trillion dollars, depending on volatility that week. The two figures measure different things, one annual spending and the other total valuation, but together they make the point. AI is not a niche. It is infrastructure. Yet its economic flows largely bypass blockchains.

Vanar is trying to narrow that gap by embedding AI context into the chain itself. Not by hosting large language models on-chain, which would be computationally impractical. Instead, by storing compressed semantic representations. Think of it like storing a fingerprint of knowledge rather than the entire book.

Underneath, semantic compression reduces heavy data into structured vectors. On the surface, that means lower storage costs and faster retrieval. Underneath that, it means machines can verify that a piece of reasoning refers to a specific stored context. That enables explainability. And explainability is not cosmetic. In finance, it’s compliance.
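A rough sketch of the "fingerprint of knowledge" idea follows, under loudly stated assumptions. Real semantic compression would use a learned embedding model; here a hashed bag-of-words vector quantized to int8 stands in for it, purely so the example runs without dependencies. The functions `compress`, `commit`, `store_context`, and `verify_reference` are hypothetical and do not describe Vanar’s API. What the sketch shows is the verification step: a reasoning record cites a stored context by its SHA-256 commitment, and anyone can check that the cited context exists and matches.

```python
import hashlib
import math
import struct

DIM = 64  # hypothetical vector size; a learned embedding would replace this toy


def compress(text: str, dim: int = DIM) -> list[int]:
    """Toy stand-in for semantic compression: signed feature hashing, quantized to int8."""
    vec = [0.0] * dim
    for token in text.lower().split():
        h = int.from_bytes(hashlib.sha256(token.encode()).digest()[:4], "big")
        sign = 1.0 if (h >> 31) & 1 == 0 else -1.0  # top bit picks the sign
        vec[h % dim] += sign
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [round(127 * v / norm) for v in vec]  # each value lands in [-127, 127]


def commit(vec: list[int]) -> str:
    """Fingerprint of the compressed context: SHA-256 over the packed int8 bytes."""
    return hashlib.sha256(struct.pack(f"{len(vec)}b", *vec)).hexdigest()


store: dict[str, list[int]] = {}  # stands in for on-chain storage


def store_context(text: str) -> str:
    vec = compress(text)
    ref = commit(vec)
    store[ref] = vec
    return ref


def verify_reference(record: dict) -> bool:
    # The record is valid only if its cited context exists and hashes to the same ref.
    vec = store.get(record["context_ref"])
    return vec is not None and commit(vec) == record["context_ref"]


doc = "Invoice 17 payable within 30 days at a fee of 0.5 percent " * 20
ref = store_context(doc)
print(len(doc.encode()), "bytes of text ->", DIM, "bytes of vector")
print(verify_reference({"decision": "approve payment", "context_ref": ref}))  # True
print(verify_reference({"decision": "approve payment", "context_ref": "00" * 32}))  # False
```

Two limits are worth keeping in mind. The compression is lossy, so the original text cannot be rebuilt from the vector, which is exactly the "fingerprint, not the book" trade-off. And the commitment proves which context a decision referenced, not that the reasoning built on it was sound.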

Consider agentic payments. Vanar has been publicly discussing agent-based transaction systems, including appearances at finance events where programmable payments were demonstrated alongside traditional processors. On the surface, it looks like automation. AI paying for services without a human clicking approve. Underneath, it requires persistent memory of prior agreements, identity context, and policy constraints.

Without on-chain memory, an AI agent is stateless. It reacts but does not remember. With persistent memory, it accumulates context. That difference is subtle but economic. A stateless agent is a tool. A stateful agent can manage capital.
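The stateless versus stateful distinction can be sketched in a few lines. This is a hypothetical illustration, not a description of how Vanar’s agents work: `stateless_pay`, `StatefulAgent`, and its fields are invented names. The stateful agent keeps two kinds of memory the text mentions, prior agreements and a policy constraint (here, a total budget), and both change what it is willing to pay.

```python
from dataclasses import dataclass, field


def stateless_pay(amount: float, limit: float) -> bool:
    # Sees only the current request: it can check one payment, never a running total.
    return amount <= limit


@dataclass
class StatefulAgent:
    """Hypothetical agent whose memory holds agreed prices and spending history."""
    budget: float  # policy constraint: cap on total spend
    agreements: dict[str, float] = field(default_factory=dict)  # service -> agreed price
    spent: float = 0.0
    history: list[tuple[str, float]] = field(default_factory=list)

    def agree(self, service: str, price: float) -> None:
        self.agreements[service] = price

    def pay(self, service: str, amount: float) -> bool:
        agreed = self.agreements.get(service)
        if agreed is None or amount > agreed:
            return False  # no prior agreement, or amount exceeds the agreed price
        if self.spent + amount > self.budget:
            return False  # would breach the cumulative budget
        self.spent += amount
        self.history.append((service, amount))
        return True


agent = StatefulAgent(budget=10.0)
agent.agree("inference-api", 4.0)
print([agent.pay("inference-api", 4.0) for _ in range(3)])  # [True, True, False]
print(agent.pay("unknown-service", 1.0))                   # False
print([stateless_pay(4.0, limit=10.0) for _ in range(3)])  # [True, True, True]
```

The stateless function approves all three payments because each one looks fine in isolation; only the agent that remembers its history notices the third would overspend. That gap is what "a stateful agent can manage capital" means in practice.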

Now imagine thousands of such agents transacting. Each action references stored memory. Each reference has value because it carries history. That begins to resemble an asset layer that isn’t a token in the traditional sense. It’s accumulated, structured data that influences decision-making.

Of course, there are risks. Storing more context on-chain increases the attack surface. If memory is immutable, mistakes are permanent. That cuts both ways. Auditability is a strength, but rigidity can be a weakness. Meanwhile, storage costs matter. Even with compression, on-chain storage is not free. Gas economics must account for AI-scale interactions, which could mean higher demand for VANRY, Vanar’s native token, if usage grows. If that demand does not materialize, the thesis weakens.

Meanwhile, look at what the broader market is doing. Ethereum continues to optimize rollups. Solana pushes throughput and low fees. New L1s still highlight transactions per second as a headline metric. As of Q1 2026, some chains advertise 50,000 TPS theoretical capacity. But TPS alone does not capture intelligence density. It measures speed, not memory.

Understanding that helps explain why a memory economy feels like a different category. Instead of asking how fast we can move tokens, the question becomes how richly machines can reference prior state. In traditional finance, institutions pay heavily for data feeds. Bloomberg terminals cost around 24,000 dollars per year per seat because information has economic leverage. That is off-chain memory, monetized.

If on-chain memory becomes structured and composable, something similar could emerge natively. Data feeds, AI reasoning logs, agent histories. Each with economic weight. Early signs suggest that developers are experimenting with these ideas, though ecosystem scale remains modest compared to Ethereum’s millions of daily transactions.

There is also the regulatory dimension. As AI integrates into finance, regulators increasingly demand traceability. In 2025 alone, multiple jurisdictions issued AI governance guidelines focused on transparency and audit trails. A blockchain that embeds explainable memory at its base layer is better positioned in that climate than one that only executes opaque smart contracts.

Still, this remains early. Market caps fluctuate. Narratives shift quickly. VANRY’s token performance has mirrored broader altcoin volatility, rising during AI narrative surges and cooling during macro pullbacks. If AI hype fades, infrastructure projects tied to it could see reduced speculative interest. That is real risk.

But if the structural trend continues, where AI agents transact, negotiate, and settle autonomously, then memory stops being background noise. It becomes infrastructure. And infrastructure, when it works quietly, earns its value over time rather than through sudden spikes.

When I step back, the bigger pattern feels clear. We moved from information scarcity to information overload. Now we are moving into structured intelligence. The next layer of economic competition may not be about who holds the most tokens, but who controls the most trusted context.

If that shift holds, the most valuable asset on-chain might not be what you trade. It might be what your agents remember.