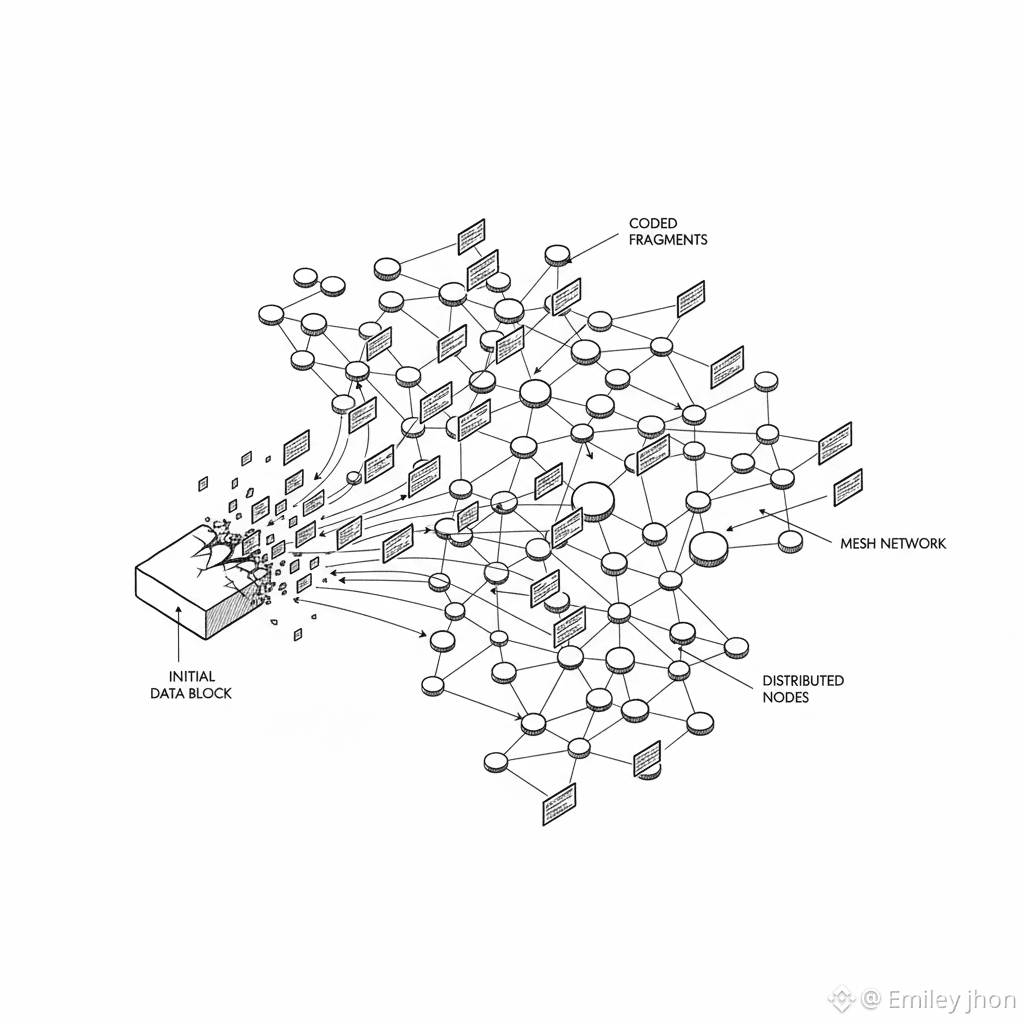

Privacy infrastructure is usually designed like a vault: hard perimeter, strong lock, catastrophic breach. Walrus rejects that architecture entirely. Its real innovation is not encryption or access control, but statistical indifference to failure. When data is erasure-coded and dispersed across a blob network, each node holds only a fragment, and no single node, outage, or adversary meaningfully degrades availability or integrity.
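To make "erasure-coded and dispersed" concrete, here is a toy sketch of a k-of-n code in Python. It is not Walrus's actual encoding; the field size, the `encode`/`decode` names, and the parameters are all assumptions chosen for illustration. Each group of `k` bytes defines a polynomial over a small prime field, every node stores one evaluation of it, and any `k` surviving nodes can rebuild the original. The sketch illustrates availability only: fragments here are not encrypted.

```python
P = 257  # smallest prime above 255, so every byte value is a field element


def _interpolate(points, x):
    """Evaluate at x the unique polynomial through `points`, modulo P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, -1, P) is the modular inverse (Python 3.8+)
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data: bytes, k: int, n: int) -> dict:
    """Split `data` into n shares (node id -> list of ints); any k rebuild it."""
    if not 1 <= k <= n < P:
        raise ValueError("need 1 <= k <= n < 257")
    padded = data + b"\x00" * (-len(data) % k)
    shares = {x: [] for x in range(1, n + 1)}
    for off in range(0, len(padded), k):
        # The k data bytes are the polynomial's values at x = 1..k.
        points = [(i + 1, padded[off + i]) for i in range(k)]
        for x in shares:
            shares[x].append(_interpolate(points, x))
    return shares


def decode(shares: dict, k: int, length: int) -> bytes:
    """Rebuild the first `length` bytes from any k surviving shares."""
    if len(shares) < k:
        raise ValueError("not enough shares to recover the data")
    xs = sorted(shares)[:k]
    out = bytearray()
    for col in range(len(shares[xs[0]])):
        points = [(x, shares[x][col]) for x in xs]
        out.extend(_interpolate(points, i) for i in range(1, k + 1))
    return bytes(out[:length])


blob = b"walrus blob"
shares = encode(blob, k=4, n=10)
# Six of the ten hypothetical nodes vanish; four arbitrary survivors remain.
survivors = {x: shares[x] for x in (2, 5, 7, 10)}
assert decode(survivors, k=4, length=len(blob)) == blob
```

Which four nodes survive does not matter, only that four do. That is the property the paragraph above calls indifference to failure.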

What’s striking is how this shifts the threat model. Attacks no longer aim to break a defense; they must coordinate improbabilities, getting enough nodes to fail or collude at the same moment to cross the recovery threshold. A node can disappear. Several can misbehave. Even whole regions can go dark. The system absorbs it, and what any single node exposes is a fragment, not the blob. Privacy here is not enforced; it emerges from redundancy and economic dispersion.
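A back-of-the-envelope calculation shows what "coordinating improbabilities" means in numbers. The sketch below rests on simplifying assumptions that are not from Walrus itself: a blob is split across `n` nodes, any `k` of them suffice to recover it, and each node fails independently with probability `p_fail`. The function name and parameters are hypothetical.

```python
from math import comb


def recoverable_probability(n: int, k: int, p_fail: float) -> float:
    """Probability that at least k of n independent nodes are still up."""
    p_up = 1.0 - p_fail
    return sum(
        comb(n, up) * p_up**up * p_fail ** (n - up)
        for up in range(k, n + 1)
    )


def nodes_to_suppress(n: int, k: int) -> int:
    """Minimum number of nodes an adversary must take down to block recovery."""
    return n - k + 1


# With a single node, survival is just that node's uptime.
single = recoverable_probability(n=1, k=1, p_fail=0.1)
# With 10 nodes and a threshold of 4, random failures almost never matter.
dispersed = recoverable_probability(n=10, k=4, p_fail=0.1)
assert dispersed > single
assert nodes_to_suppress(n=10, k=4) == 7
```

The independence assumption is exactly what an attacker has to defeat. Random outages rarely line up, so suppressing a blob means deliberately taking down `n - k + 1` nodes at once, and that number grows with the size of the network.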

The analogy that fits is biological: Walrus treats data like packets moving through a fault-tolerant organism rather than assets sitting in storage. Just as biological systems survive through over-provisioning and decentralization, Walrus survives attack by making precision strikes structurally inefficient.

This reframes censorship resistance too. You can’t meaningfully censor what you can’t reliably locate, isolate, or exhaust. Control fails not because of ideology, but because the cost curve explodes.

The deeper lesson is timeless: systems that expect failure age better than systems that fear it. Walrus doesn’t promise secrecy — it makes collapse irrelevant.