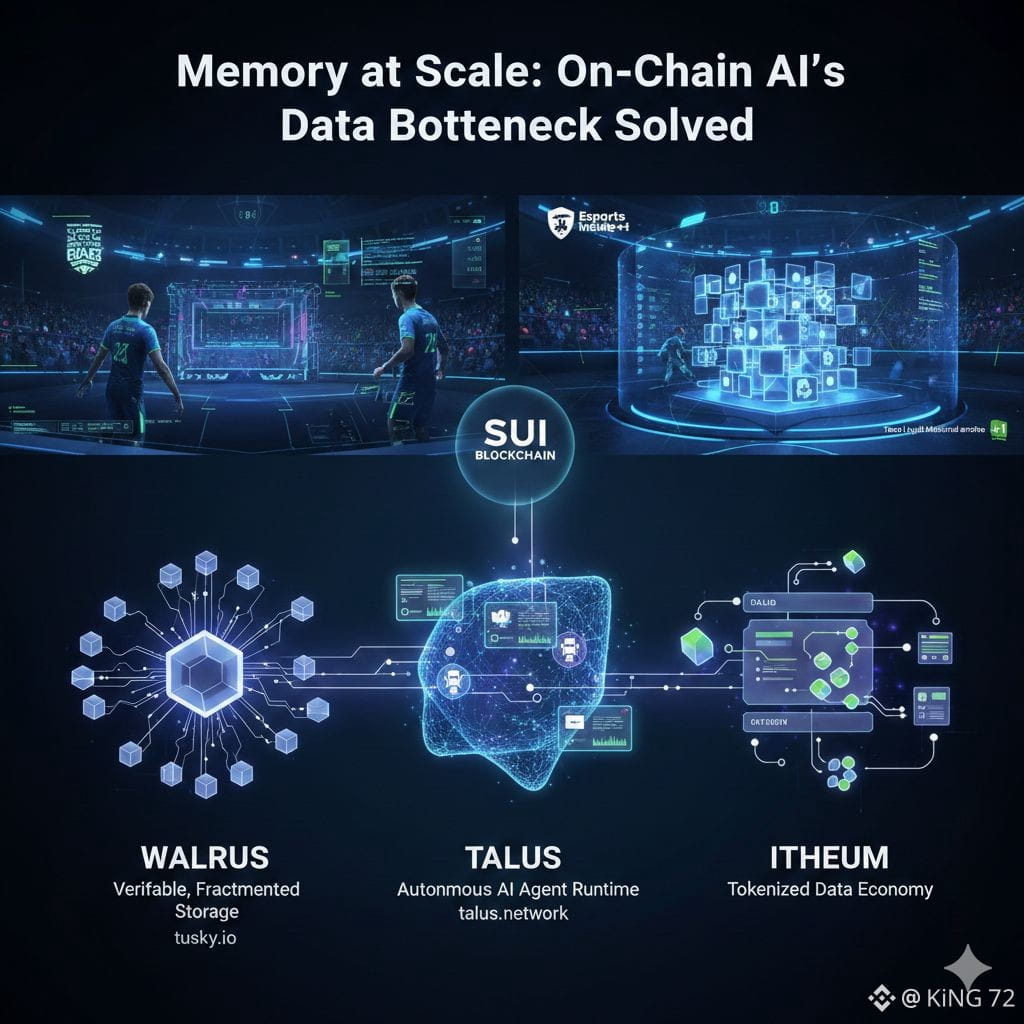

When I first wired an AI agent to stream and analyze live esports matches, the model was the easy part. The real pain arrived the moment the pipeline needed reliable memory: terabytes of footage, streaming telemetry, player stats, and the messy swirl of social chatter. Models can reason; they can’t make up for brittle storage. For anyone wrestling with on-chain AI, that mismatch is the single most important problem to solve. This article is for blockchain developers, AI engineers, and Web3 infrastructure leaders who need a practical mental model for what “data-native” blockchains look like, and why Walrus, Talus, and Itheum together represent a meaningful step toward agentic systems you can actually build on.

Most blockchains were designed around small, deterministic state transitions: account balances, token transfers, and short, verifiable logs. That design is beautiful for trustless settlement and composability, but it breaks down when the unit of work becomes a video file, a trained model, or a multi-gigabyte dataset that agents must read, verify, and reason over. The naive approach, replicating every file in full on every node, is the wrong tradeoff. It’s secure but slow and prohibitively expensive at terabyte scale, and it destroys the latency and throughput that modern agents need. The consequence is predictable: builders push storage and compute off-chain, stitch together fragile oracles and middlemen, and end up with agent systems that are clever on paper and fragile in the wild.

Walrus approaches the problem by asking a simple question: what if the chain didn’t have to replicate full files everywhere to preserve availability and verifiability? Instead of wholesale replication, Walrus splits large files into many fragments using erasure coding and distributes those fragments across a decentralized storage fabric. The file can be reconstructed as long as a sufficient subset of fragments remains available, which reduces total storage overhead compared with full replication while preserving resilience against node failures. Walrus treats the blockchain as a coordination and certification layer rather than as the file carrier itself: uploads emit compact on-chain blob certificates that smart contracts can verify without ever carrying the media bytes on chain. That separation keeps on-chain logic lightweight while delivering verifiable, auditable storage guarantees at scale.
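
To make the "sufficient subset of fragments" idea concrete, here is a toy sketch in Python. It is not Walrus's actual encoding: it uses the simplest possible erasure code (k data fragments plus one XOR parity fragment), and the function names `encode` and `reconstruct` are my own. It only illustrates the principle that a lost fragment can be rebuilt from the survivors.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal-size fragments and append one XOR parity fragment."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")  # zero-pad so all fragments share a length
    fragments = [padded[i * size:(i + 1) * size] for i in range(k)]
    return fragments + [reduce(xor_bytes, fragments)]


def reconstruct(fragments: List[Optional[bytes]], original_len: int) -> bytes:
    """Rebuild the original bytes when at most one fragment (data or parity) is missing."""
    missing = [i for i, frag in enumerate(fragments) if frag is None]
    if len(missing) > 1:
        raise ValueError("single parity can repair only one lost fragment")
    repaired = list(fragments)
    if missing:
        # XOR of all surviving fragments equals the missing one.
        present = [frag for frag in fragments if frag is not None]
        repaired[missing[0]] = reduce(xor_bytes, present)
    return b"".join(repaired[:-1])[:original_len]


# Toy usage: lose one of five fragments and still recover the file.
footage = b"round-3-telemetry" * 100
shards = encode(footage, k=4)
shards[1] = None  # simulate a storage node going offline
recovered = reconstruct(shards, len(footage))
```

With k = 4 this layout stores roughly 1.25x the original bytes and survives one lost fragment, where keeping two full copies would cost 2x for the same tolerance. Production schemes tolerate many simultaneous losses; the single-parity code here is only the smallest instance of the idea.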

This design choice (fragment, attest, verify) has practical downstream effects for agent design. Agents don’t want opaque S3 links and a hope-for-the-best SLA: they want cryptographic proof their “memory” hasn’t been tampered with, predictable retrieval performance, and semantics for ownership and access that smart contracts can enforce. By storing file metadata, lifecycle state, and economic incentives on a fast execution layer like Sui, Walrus gives dApp and agent developers the primitives to build persistent memory that’s both verifiable and performant. It’s a pragmatic split: heavy media lives distributed; proofs and permissions live on chain. That pattern shifts many architectural headaches from brittle off-chain glue to composable on-chain primitives and verifiable storage references.
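
A minimal sketch of what "proof the memory hasn't been tampered with" can look like from the agent's side, in Python. Everything named here is an assumption for illustration: `BlobCertificate` is a hypothetical stand-in for an on-chain record, and a plain SHA-256 digest replaces whatever commitment the real system uses. The point is only the pattern: the chain holds a small commitment, and the agent checks retrieved bytes against it before trusting them.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class BlobCertificate:
    """Hypothetical on-chain record; NOT the real Walrus certificate format."""
    digest_hex: str  # SHA-256 of the blob contents
    size: int        # blob length in bytes


def certify(blob: bytes) -> BlobCertificate:
    """Produce the compact commitment that would be recorded at upload time."""
    return BlobCertificate(hashlib.sha256(blob).hexdigest(), len(blob))


def verify(blob: bytes, cert: BlobCertificate) -> bool:
    """Check retrieved bytes against the commitment before the agent uses them."""
    if len(blob) != cert.size:
        return False
    actual = hashlib.sha256(blob).hexdigest()
    return hmac.compare_digest(actual, cert.digest_hex)


# Toy usage: an untouched blob verifies, a modified one does not.
clip = b"grand-final-game-5.mp4 bytes"
cert = certify(clip)
ok = verify(clip, cert)
tampered_ok = verify(clip + b"!", cert)
```

The certificate is a few dozen bytes regardless of how large the media file is, which is what lets the heavy data live off chain while the check stays cheap.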

Talus is the complementary piece of the puzzle on the compute and agent side. Where Walrus guarantees that memory exists and is provably intact, Talus asks how agents should act consistently across long horizons with that memory available. Talus markets itself as an infrastructure stack for autonomous AI agents: agents that execute workflows, hold state across sessions, and perform economic actions in a transparent, auditable way. Those agents need three things to be useful in production: continuity (persistent memory and identity), verifiability (provable inputs and outcomes), and coordination (a framework for multi-agent orchestration and incentives). By baking support for persistent, tokenized agent memory into the agent runtime, Talus enables agents to reason about historical context and re-enter workflows without the brittle reconnection logic that trips up many early experiments. The synergy is straightforward: Talus runs the agent model and policy; Walrus supplies provable memory; the chain ties the two together with economic and governance primitives.

Itheum occupies the third design point: turning data itself into first-class economic objects. Tokenizing datasets, whether they are master audio files, labeled training corpora, or provenance-tracked video, only makes sense when the underlying file is reliably available and provably unchanged. Itheum’s vision is to make datasets tradable and composable in the same way we treat code or NFTs, enabling revenue flows for creators and traceable licensing for consumers. That market requires storage guarantees, encryption options, and access controls that can be enforced without centralized custodians. Integrations between Itheum and Walrus are therefore more than a convenience: they are a practical necessity for an on-chain data economy. Tokenized datasets that reference on-chain blob certificates mean buyers can verify authenticity and lineage before they mint or trade, and agents can be programmed to negotiate terms, access datasets, and pay for usage with minimal manual intervention.

The architecture I’m describing is not hypothetical; adoption is material and accelerating. Walrus has announced a steady stream of integrations and partnerships across media IP holders, cloud partners, and Web3 infrastructure projects, positioning itself as the dedicated data layer for several agent-first stacks. The clearest operational signal came in January 2026 when esports giant Team Liquid migrated a massive portion of its historical archive, reported in the hundreds of terabytes, onto Walrus, illustrating how content owners view decentralized, verifiable storage as a viable operational option for long-term media archival and new fan experiences. Those kinds of migrations aren’t PR stunts; they’re production moves that test recovery, latency, and economic models at scale. The takeaway for builders is blunt: the storage layer is now a product decision, not an afterthought.

If you’re an engineer deciding between “just use IPFS + Filecoin” and “build on a data-native stack,” here’s the practical framing. IPFS/Filecoin are powerful and battle-tested at scale, and Arweave argues convincingly about permanence. But for agentic workflows, you need three additional properties: low-latency retrieval and predictable availability for hot datasets, tight smart contract integration for lifecycle and access control, and storage economic models that align with continuous agent querying rather than one-off archival payments. Walrus — by design — targets that middle ground: not pure permanence, not pure replication, but efficient, verifiable data availability that can be paired with agent runtimes. That alignment changes tradeoffs for product teams: you can build agentic features that rely on consistent memory without wrapping them in fragile, centralized proxies.

Token design and incentives are the quiet engineering problem behind all of this. Walrus’s token (WAL) is structured less like a speculative utility token and more like an operations instrument: users pay for storage and retrieval, nodes earn rewards over time for fragment availability, and stakers back node quality and reliability. A governance layer manages slashing conditions and incentives to penalize correlated failures or misreporting. The economic trick isn’t to create volatility — it’s to create predictable uptime economics that map to service-level expectations. For teams building agentic features, monitoring operational signals is more important than tracking price charts: look at query rates, steady-state upload volume, node health distributions, and actual reconstruct success rates during simulated node outages.
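
One of those signals, reconstruct success under node outages, has a simple closed form if you are willing to assume that fragments sit on independent nodes that each fail with the same probability. That assumption is mine, and correlated failures break it, so treat the Python sketch below as a baseline to compare drills against rather than a guarantee. The parameter names (`n` fragments stored, any `k` sufficient to rebuild, `p_fail` per node) are illustrative.

```python
from math import comb


def reconstruct_probability(n: int, k: int, p_fail: float) -> float:
    """Probability that at least k of n fragments survive, assuming each
    fragment's node fails independently with probability p_fail."""
    p_up = 1.0 - p_fail
    return sum(comb(n, i) * p_up**i * p_fail**(n - i) for i in range(k, n + 1))


# Toy comparison: both layouts store 3x the original bytes.
erasure_coded = reconstruct_probability(n=30, k=10, p_fail=0.2)  # any 10 of 30 fragments
three_replicas = reconstruct_probability(n=3, k=1, p_fail=0.2)   # any 1 of 3 full copies
```

If the success rate you measure in outage drills falls well below this independent-failure baseline, that gap is a hint that failures are correlated, for example many fragments sharing one operator or region.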

Nothing here is without risk. Storage is a brutally competitive market; incumbents and adjacent projects will continue to evolve. Systemic risks include correlated node failures, latent reconstruction bugs in erasure coding implementations, or incentive designs that create perverse edge cases under stress. Oracle reliance for fiat pricing or payment rails is another fragile surface: any mechanism that ties on-chain contracts to off-chain pricing needs robust fallback rules for market stress. Interoperability is also a double-edged sword — Sui integration gives Walrus speed and programmability, but it also introduces a coupling: the health of the coordination chain matters to the storage layer’s perceived guarantees.

So what should builders do tomorrow? First, treat storage as a first-class design decision during architecture sprints. Run failure drills: simulate node losses and prove that reconstruction and retrieval latency meet your agent’s real-time requirements. Second, design your agents to be storage-agnostic at the interface level: write memory adapters that can talk to Walrus, IPFS, or a centralized fallback so you can A/B test availability and cost. Third, collect operational telemetry alongside the economic signals: track fragment availability, reconstruct success rates, average retrieval times, and the distribution of data across independent node operators. Those operational metrics, not token movement, will tell you whether the stack is viable for mission-critical agent features.
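
Here is a minimal sketch of the storage-agnostic adapter idea in Python. All names are hypothetical: `MemoryBackend` is an interface I made up, and `InMemoryBackend` is a test double standing in for real clients, so no actual Walrus or IPFS API is shown. The wrapper tries backends in order, falls through on failure, and keeps per-backend counters so a failure drill produces numbers you can compare.

```python
import time
from typing import Dict, List, Optional, Protocol


class MemoryBackend(Protocol):
    """Hypothetical interface each storage client would be wrapped to satisfy."""
    name: str

    def put(self, key: str, blob: bytes) -> None: ...
    def get(self, key: str) -> Optional[bytes]: ...


class InMemoryBackend:
    """Test double standing in for a real storage client."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.healthy = True  # flip to False to simulate an outage
        self._blobs: Dict[str, bytes] = {}

    def put(self, key: str, blob: bytes) -> None:
        self._blobs[key] = blob

    def get(self, key: str) -> Optional[bytes]:
        if not self.healthy:
            raise ConnectionError(f"{self.name} unavailable")
        return self._blobs.get(key)


class FallbackMemory:
    """Reads from the first backend that answers; records hits, failures, latency."""

    def __init__(self, backends: List[MemoryBackend]) -> None:
        self.backends = backends
        self.stats = {b.name: {"hits": 0, "failures": 0, "seconds": 0.0} for b in backends}

    def put(self, key: str, blob: bytes) -> None:
        for backend in self.backends:
            backend.put(key, blob)

    def get(self, key: str) -> Optional[bytes]:
        for backend in self.backends:
            start = time.perf_counter()
            try:
                blob = backend.get(key)
            except ConnectionError:
                self.stats[backend.name]["failures"] += 1
                continue  # fall through to the next backend
            finally:
                self.stats[backend.name]["seconds"] += time.perf_counter() - start
            if blob is not None:
                self.stats[backend.name]["hits"] += 1
                return blob
        return None


# Failure drill: write while healthy, take the primary down, read again.
primary = InMemoryBackend("primary")
fallback = InMemoryBackend("fallback")
memory = FallbackMemory([primary, fallback])
memory.put("match-42/telemetry", b"...")
primary.healthy = False
blob = memory.get("match-42/telemetry")
```

Because the agent only sees `put` and `get`, swapping the order of backends or replacing one entirely is a configuration change, which is what makes A/B testing availability and cost practical.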

The story of on-chain AI isn’t about a single protocol winning; it’s about an architectural realignment. Agents need persistent, verifiable memory; datasets need to be tradable and auditable; and storage must be efficient enough to operate at the scale modern models require. Walrus’s fragment-and-certify approach reduces the cost of trust for heavyweight files, Talus gives agents a runtime that expects continuity, and Itheum provides the economic rails to make data itself a tradable asset. Together they turn a historically brittle part of the stack into an explicit, composable building block.

If you’re shipping agentic features in 2026, your success will hinge less on model architecture and more on how reliably your system can answer the question: “is this memory true — and is it available when the agent needs it?” When memory becomes a dependable commodity, innovation accelerates. Agents stop being proofs-of-concept and start being reliable tools that augment workflows, monetize creator content, and unlock new interactive experiences. That’s the promise on the table — and the technical choreography between Walrus, Talus, and Itheum shows a clear path toward making it real.