The AI era is quietly breaking many of the assumptions that new Layer-1 blockchains are still built on. For years, launching a new L1 followed a familiar script: promise higher throughput, lower fees, faster finality, and a cleaner developer experience. If the benchmarks looked good and incentives were attractive, users and builders would come. That playbook worked when blockchains were mostly serving humans clicking buttons, trading tokens, or interacting with simple applications.

AI changes that equation completely.

The core problem is not that new L1s lack ambition. It’s that many of them are optimized for a world that no longer exists.

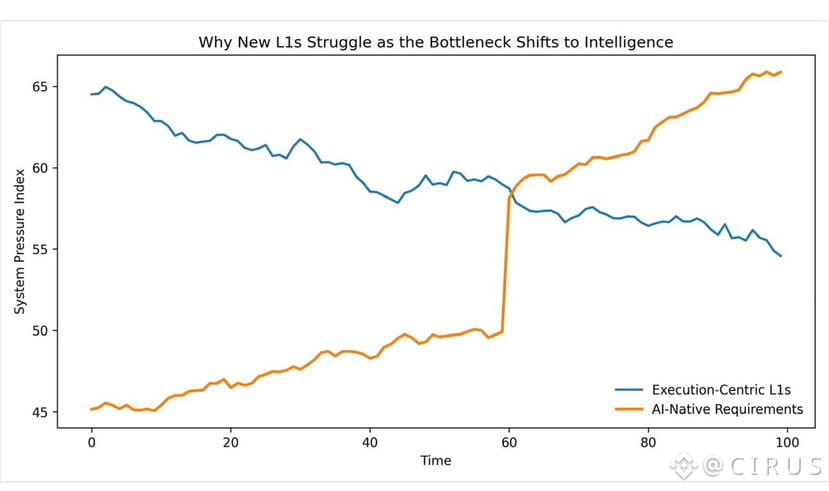

In an AI-driven environment, execution speed alone is no longer the constraint. Intelligence is. Persistence is. Enforcement is. Systems are no longer judged by how fast a transaction clears, but by whether autonomous processes can operate continuously, reason over historical context, and rely on outcomes that are actually enforced by the network.

This is where most new L1s start to struggle.

The Execution Trap

Most new chains still design themselves as execution engines. They focus on pushing more transactions per second, parallelizing execution, and reducing gas costs. These are useful optimizations, but they solve a diminishing problem.

AI agents do not behave like traders. They don’t spike activity during market hours and disappear during downturns. They run continuously. They make decisions based on accumulated state. They coordinate with other agents. They need environments that behave predictably over long periods, not just under short bursts of load.

A chain that is fast but forgetful is not AI-friendly. Stateless execution forces agents to reconstruct context repeatedly, pushing memory and reasoning off-chain, where trust breaks down. When intelligence lives off-chain but enforcement lives on-chain, the system becomes fragile.
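To make the "fast but forgetful" contrast concrete, here is a minimal Python sketch. It is not tied to any real chain or agent framework; `stateless_decide`, `StatefulAgent`, and the buy/hold rule are all hypothetical names and logic chosen purely for illustration. The stateless function needs the caller to rebuild and re-supply the full history on every call, while the stateful agent lets the environment carry a running summary between calls.

```python
from dataclasses import dataclass


def stateless_decide(price_history: list[float]) -> str:
    """Stateless: the caller must re-supply the full context on every call."""
    average = sum(price_history) / len(price_history)
    return "buy" if price_history[-1] < average else "hold"


@dataclass
class StatefulAgent:
    """Stateful: a running summary persists between calls (hypothetical)."""
    count: int = 0
    total: float = 0.0

    def observe_and_decide(self, price: float) -> str:
        # Update the persisted summary, then decide from it.
        self.count += 1
        self.total += price
        average = self.total / self.count
        return "buy" if price < average else "hold"


prices = [10.0, 12.0, 8.0]
agent = StatefulAgent()
stateful_decisions = [agent.observe_and_decide(p) for p in prices]
# The stateless path has to be handed a growing history each time.
stateless_decisions = [stateless_decide(prices[: i + 1]) for i in range(len(prices))]
```

Both paths reach the same decisions here. The difference is where the context lives: in the stateless version, whoever holds `prices` off-chain is trusted to supply it faithfully.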

Many new L1s fall into this trap. They assume execution is the bottleneck when, for AI systems, it is often the least interesting part.

Memory Is the Real Scarcity

AI systems depend on memory. Not just storage, but structured, persistent state that can be referenced, updated, and enforced over time. Most blockchains technically “store data,” but they do not treat memory as a first-class design concern. On-chain state is expensive, awkward to work with, and often discouraged.

This pushes developers to external databases, indexing layers, and off-chain services. The more intelligence relies on these external components, the less meaningful the blockchain becomes as a coordination layer. The chain settles transactions, but it does not understand the system it governs.

New L1s often underestimate how destructive this is for AI-native applications. Intelligence without on-chain memory is advisory at best. It can suggest actions, but it cannot guarantee continuity.

Reasoning Without Boundaries Breaks Systems

Another failure point is reasoning. Many chains assume that if developers can write smart contracts, reasoning will emerge naturally. But reasoning is not just logic execution. It is the interpretation of context, constraints, and evolving rules.

AI agents need environments where rules are stable, explicit, and enforceable. They need to know what they are allowed to do, what happens if conditions change, and what outcomes are final. Chains that treat governance, permissions, and enforcement as secondary features create uncertainty that autonomous systems cannot tolerate.

This is why “move fast and patch later” works poorly in the AI era. AI systems amplify inconsistencies. Small ambiguities turn into systemic failures when agents operate at scale.

Enforcement Is What Turns Intelligence Into Reality

A common misconception is that intelligence alone creates value. In decentralized systems, enforcement is what gives intelligence weight.

If an AI agent decides something should happen, the system must guarantee that the decision is either carried out or rejected according to defined rules. Otherwise, intelligence becomes optional, negotiable, or exploitable.

Many new L1s rely on social or economic incentives to enforce behavior. That works when participants are humans who can be persuaded, punished, or replaced. It works far less well when participants are autonomous systems acting continuously.

In the AI era, enforcement must be structural, not social.
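One way to picture structural enforcement is a state transition that either applies an agent's decision in full or rejects it and leaves state untouched. The Python sketch below is an in-memory toy, not any chain's actual API; `Ledger`, `Action`, `Rejected`, and the specific rules (permitted action kinds, an amount cap, a balance check) are assumptions made up for illustration.

```python
from dataclasses import dataclass, field


class Rejected(Exception):
    """Raised when a proposed action violates a rule."""


@dataclass(frozen=True)
class Action:
    agent: str
    kind: str    # e.g. "transfer"
    amount: int


@dataclass
class Ledger:
    # Hypothetical in-memory stand-in for on-chain state and rules.
    balances: dict[str, int]
    allowed_kinds: frozenset[str] = frozenset({"transfer"})
    max_amount: int = 100
    history: list[Action] = field(default_factory=list)

    def submit(self, action: Action) -> None:
        """Apply the action if every rule holds; otherwise reject it."""
        # All checks run before any mutation, so a rejection changes nothing.
        if action.kind not in self.allowed_kinds:
            raise Rejected(f"action kind {action.kind!r} is not permitted")
        if action.amount <= 0 or action.amount > self.max_amount:
            raise Rejected("amount outside the permitted range")
        if self.balances.get(action.agent, 0) < action.amount:
            raise Rejected("insufficient balance")
        self.balances[action.agent] -= action.amount
        self.history.append(action)


ledger = Ledger(balances={"agent-1": 150})
ledger.submit(Action("agent-1", "transfer", 50))       # accepted
try:
    ledger.submit(Action("agent-1", "transfer", 500))  # exceeds max_amount
    outcome = "accepted"
except Rejected:
    outcome = "rejected"
```

The agent never has to be persuaded or punished. The rules are part of the transition itself, so an invalid decision simply has no effect.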

Why Vanar Takes a Different Path

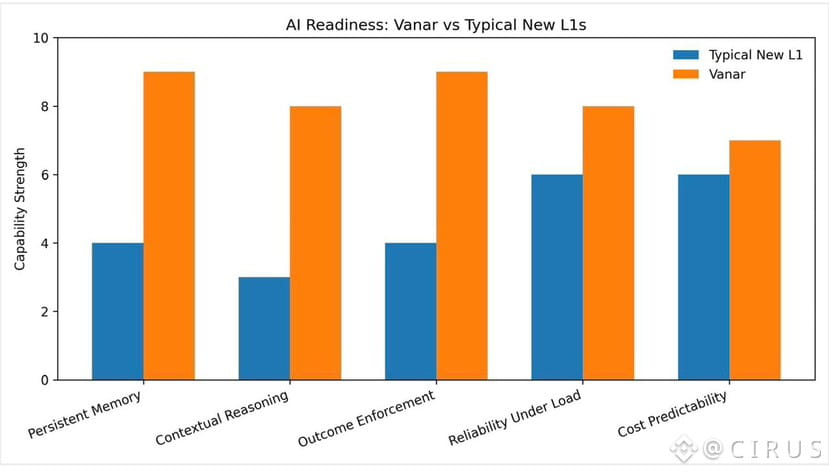

Vanar stands out not because it promises faster execution, but because it starts from a different premise: AI agents are not edge cases. They are the primary users.

That premise reshapes the design priorities. Vanar’s architecture emphasizes memory, reasoning, and enforcement as core properties of the chain rather than add-ons. Instead of optimizing purely for transaction throughput, it optimizes for long-running systems that need continuity and trust.

Memory is treated as an asset, not a burden. Reasoning is embedded into how systems interpret state. Enforcement is explicit, giving outcomes finality that autonomous agents can rely on. This makes the chain less flashy in benchmarks, but far more resilient as intelligence scales.

Most importantly, Vanar does not assume humans are always in the loop. It is built for systems that act on their own, coordinate with other systems, and remain operational regardless of market cycles.

The Real Challenge for New L1s

The hardest part of the AI era is not adding AI features. It is unlearning assumptions.

New L1s struggle because they are still competing in a race that matters less every year. Speed and cost are becoming table stakes. What differentiates infrastructure now is whether it can support intelligent behavior without collapsing under its own complexity.

Chains that fail to adapt will not necessarily fail loudly. They will fail quietly. Developers will keep execution there, but move intelligence elsewhere. The chain becomes a settlement layer for decisions made off-chain. At that point, it loses strategic relevance.

Closing Perspective

The AI era is not asking blockchains to be faster calculators. It is asking them to be environments where intelligence can live, remember, and act with consequences.

Most new L1s are still building calculators.

Vanar is trying to build something closer to a habitat.

Whether that approach succeeds long-term will depend on how well it is implemented, but the direction itself explains why so many new chains feel increasingly out of sync with where intelligent systems are actually heading.