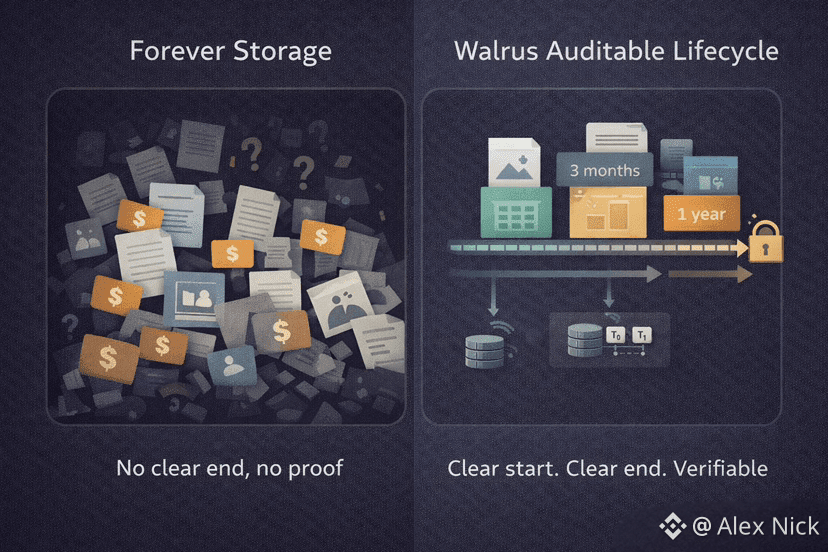

Most people hear the phrase "decentralized storage" and immediately picture a massive, eternal hard drive floating somewhere in the cloud, owned by no one and promising to last forever. When I first looked at Walrus, I realized it is built on a very different mental model. Walrus behaves less like an immortal disk and more like a service with rules, timelines, and accountability. I do not just throw data into the void. I place it for a defined period, the network assigns responsibility to specific nodes, and I can observe what is happening at every stage.

That difference explains why Walrus talks about blobs, epochs, committees, certification, and challenges instead of vague guarantees. It treats storage as something that lives through a lifecycle. I can see who is responsible for my data right now, what happens when time advances, and how the system reacts if something goes wrong. That shift is what separates a simple storage network from infrastructure I can actually build products on.

How coordination works without dragging files on chain

One thing I appreciate is how Walrus separates coordination from the data itself. Large files never sit on the blockchain. Instead, storage nodes hold the data while the chain (Sui) records evidence about behavior and rules. From what I have read, the blockchain coordinates the storage committees and manages epoch changes, which allows new nodes to join and old ones to rotate out without halting the system.

This matters because it creates a shared source of truth. When I store a file, its lifecycle can be followed on chain. Other applications do not need to trust a single company's database or a private API. They can rely on the public record, which shows when the file was registered, when it was certified, and which committee was responsible at each moment.
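To make "following the history on chain" concrete, here is a minimal Python sketch of replaying a public event log to rebuild one blob's timeline. The `Event` type, its fields, and the event kinds `"registered"` and `"certified"` are hypothetical stand-ins, not the actual Walrus or Sui event schema; the point is only that anyone reading the same log derives the same history.

```python
from dataclasses import dataclass

# Hypothetical event shape: field names and event kinds are illustrative
# assumptions, not the real Walrus/Sui event schema.
@dataclass(frozen=True)
class Event:
    seq: int      # position in the public log (assumed strictly increasing)
    epoch: int    # epoch in which the event was emitted
    kind: str     # e.g. "registered" or "certified"
    blob_id: str

def blob_history(events: list[Event], blob_id: str) -> list[tuple[int, str]]:
    """Return (epoch, kind) pairs for one blob, in log order."""
    relevant = sorted((e for e in events if e.blob_id == blob_id), key=lambda e: e.seq)
    return [(e.epoch, e.kind) for e in relevant]

log = [
    Event(seq=3, epoch=7, kind="certified", blob_id="blob-a"),
    Event(seq=1, epoch=7, kind="registered", blob_id="blob-a"),
    Event(seq=2, epoch=7, kind="registered", blob_id="blob-b"),
]
print(blob_history(log, "blob-a"))  # [(7, 'registered'), (7, 'certified')]
```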

Time as a built-in tool rather than an afterthought

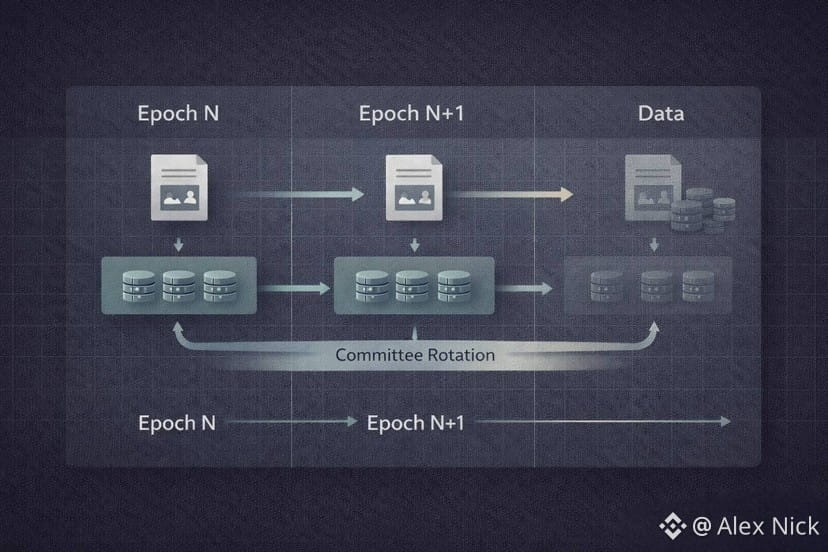

Walrus organizes storage around epochs, which are fixed windows of time. During each epoch, a specific set of storage nodes is responsible for holding particular blobs. I find this important because real networks are messy. Nodes go offline, machines fail, and connections break.

In many systems, that mess stays hidden until something silently degrades. With epochs, change is expected and visible. At each epoch boundary, the committee is re-formed and responsibility can move to different nodes, so no node is expected to hold a given piece of data forever. That scheduled rotation keeps the system healthy by design instead of relying on hope.
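Because epochs are fixed windows, the question "when does responsibility next change?" reduces to arithmetic. This sketch assumes a hypothetical genesis timestamp and a two-week epoch length; `GENESIS_TS` and `EPOCH_SECONDS` are illustrative values, not parameters read from the network.

```python
# Hypothetical schedule parameters, chosen for illustration only.
GENESIS_TS = 1_700_000_000          # assumed Unix time at which epoch 0 began
EPOCH_SECONDS = 14 * 24 * 3600      # assumed epoch length (two weeks)

def epoch_at(ts: int) -> int:
    """Index of the epoch containing Unix timestamp ts."""
    if ts < GENESIS_TS:
        raise ValueError("timestamp precedes genesis")
    return (ts - GENESIS_TS) // EPOCH_SECONDS

def epoch_start(epoch: int) -> int:
    """Unix timestamp at which the given epoch begins."""
    return GENESIS_TS + epoch * EPOCH_SECONDS

def seconds_until_rotation(ts: int) -> int:
    """Time left before the next epoch boundary, when committees may change."""
    return epoch_start(epoch_at(ts) + 1) - ts
```

A monitoring job can call `seconds_until_rotation(now)` to schedule checks just before and after each boundary instead of polling blindly.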

Committees make responsibility explicit

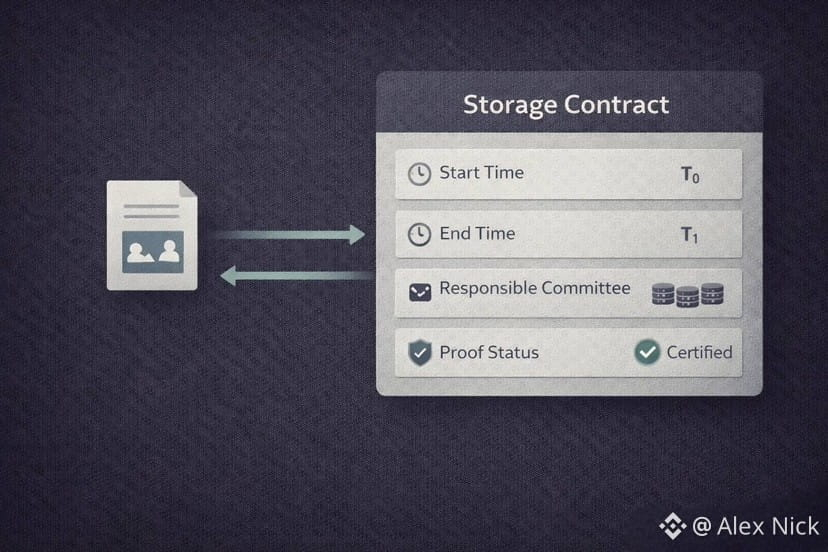

In a lot of storage systems, the answer to "who is holding my file?" is basically a shrug. You upload and trust the system. Walrus does not work that way. Blobs are stored by the committee of storage nodes for the current epoch, and the system exposes which committee is responsible during which epoch. Committee membership is driven by staking with the WAL token.

For me, the value is clarity. I can ask a simple question and get a real answer: who is responsible for my data right now? That clarity lets developers build dashboards, alerts, and automation. I can imagine systems that renew storage automatically when an epoch ends or raise warnings when a blob approaches the end of its paid storage period.
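A renewal alert of the kind described above might look like this minimal sketch. The `COMMITTEES` table, the node names, and the `end_epoch` semantics (treated here as the first epoch in which the blob is no longer stored) are assumptions for illustration; a real tool would read committee and blob metadata from the chain.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class BlobInfo:
    blob_id: str
    end_epoch: int  # assumed exclusive: first epoch in which the blob is no longer stored

# Hypothetical epoch -> committee table; in practice this comes from chain state.
COMMITTEES = {
    7: ("node-1", "node-2", "node-3", "node-4"),
    8: ("node-1", "node-2", "node-4", "node-5"),
}

def responsible_nodes(epoch: int) -> tuple:
    """Answer 'who holds my data in this epoch?' (empty if unknown)."""
    return COMMITTEES.get(epoch, ())

def renewal_alert(blob: BlobInfo, current_epoch: int, warn_within: int = 2) -> Optional[str]:
    """Return a warning message if the blob is expired or close to expiry."""
    remaining = blob.end_epoch - current_epoch
    if remaining <= 0:
        return f"{blob.blob_id}: expired"
    if remaining <= warn_within:
        return f"{blob.blob_id}: {remaining} epoch(s) left, renew soon"
    return None
```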

Certification as the real moment of truth

In Walrus, a file is not considered real just because my upload finished. It becomes real when the network certifies it. The documentation explains that certification happens only once a quorum of storage nodes has confirmed that enough encoded pieces are stored, so that retrieval is guaranteed for the agreed storage period.
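The "enough pieces confirmed" condition is essentially a quorum check. This sketch assumes a Byzantine-fault-tolerant threshold of the kind described in the Walrus research, n = 3f + 1 shards with acknowledgements from 2f + 1 of them required to certify, and counts acknowledgements per shard index. Treat the exact rule as an assumption and confirm it against the current documentation.

```python
def max_faulty(n_shards: int) -> int:
    """Largest f such that n_shards >= 3f + 1."""
    return (n_shards - 1) // 3

def certification_quorum(n_shards: int) -> int:
    """Acknowledgements needed under the assumed 2f + 1 rule."""
    return 2 * max_faulty(n_shards) + 1

def can_certify(acked_shards: set[int], n_shards: int) -> bool:
    """True if enough distinct, valid shard indices have acknowledged storage."""
    valid = {s for s in acked_shards if 0 <= s < n_shards}
    return len(valid) >= certification_quorum(n_shards)
```

With 1,000 shards, for example, `certification_quorum(1000)` is 667: the blob stays retrievable even if up to 333 shards later misbehave.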

That distinction is powerful. In most systems, upload success is a local event. In Walrus, success is collective and publicly announced. I can build logic around that moment: wait for certification before minting an NFT, starting an AI training job, or opening access in a marketplace. A shared, publicly checkable signal like that is hard to get from a conventional upload API.
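Gating an action on certification can be sketched as a polling loop with a deadline. `is_certified` and `mint` are hypothetical callbacks supplied by the application (for example, a check against the on-chain record and an NFT mint); the clock and sleep functions are injectable so the logic can be tested without real waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")

def wait_for_certification(
    is_certified: Callable[[], bool],
    timeout_s: float,
    poll_interval_s: float = 1.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll is_certified() until it returns True or the deadline passes."""
    deadline = clock() + timeout_s
    while True:
        if is_certified():
            return True
        if clock() >= deadline:
            return False
        sleep(poll_interval_s)

def mint_after_certification(
    is_certified: Callable[[], bool], mint: Callable[[], T], timeout_s: float = 60.0
) -> T:
    """Run mint() only after certification; refuse otherwise."""
    if not wait_for_certification(is_certified, timeout_s):
        raise TimeoutError("blob not certified in time; not minting")
    return mint()
```

Failing closed on timeout is the design choice that matters here: nothing downstream runs until the network, not the local upload, says the data is in place.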

Storage as a defined process, not a dump

The blob model makes storage feel like a transaction flow rather than a black hole. I register a file, upload it, and wait for certification. I pay transaction fees in SUI and storage costs in WAL, and the storage is bought for a specific duration.
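The register, upload, and certify flow also makes cost estimation mechanical. Every number in this sketch is a hypothetical placeholder: the `Pricing` fields, the encoding overhead, and the assumption of two transactions (one to register, one to certify) are illustrative, not actual Walrus prices or fee rules.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Pricing:
    wal_per_mib_epoch: float   # hypothetical storage price per encoded MiB per epoch
    encoding_overhead: float   # hypothetical ratio of encoded size to raw size
    sui_gas_per_tx: float      # hypothetical flat gas cost per transaction

def estimate_cost(raw_bytes: int, epochs: int, p: Pricing, txs: int = 2) -> dict:
    """Split cost into storage (WAL) and transactions (SUI).

    txs=2 assumes one registration and one certification transaction.
    """
    if epochs <= 0:
        raise ValueError("storage must be bought for at least one epoch")
    encoded_mib = raw_bytes * p.encoding_overhead / (1024 * 1024)
    return {
        "wal": encoded_mib * epochs * p.wal_per_mib_epoch,
        "sui": txs * p.sui_gas_per_tx,
    }
```

Keeping duration as an explicit parameter makes the tradeoff visible: doubling `epochs` doubles the WAL cost, while the SUI side stays flat.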

That alone tells me Walrus is not built for "upload and forget." It is built for "store with rules." Time, cost, and proof are all part of the same process. That is why it feels programmable: I can reason about tradeoffs instead of guessing.

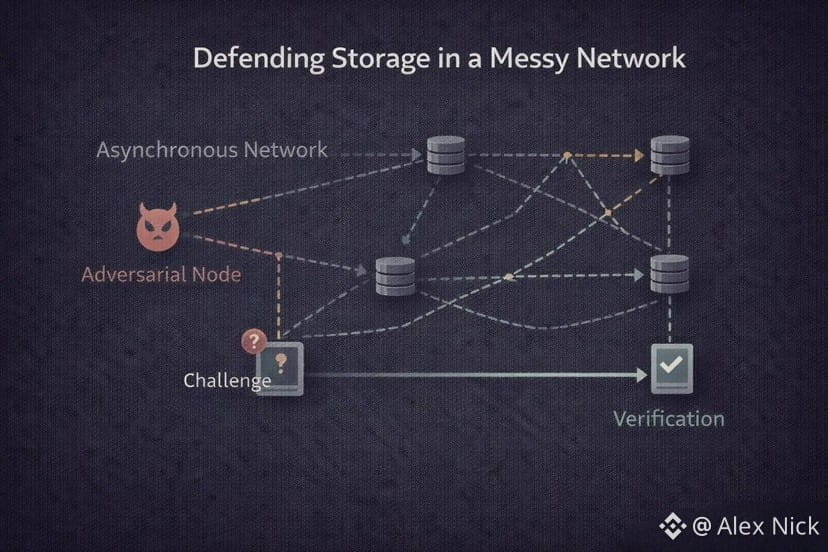

Why real network conditions matter

Most people assume networks behave nicely. Walrus assumes the opposite. The RedStuff encoding described in the Walrus research is designed for asynchronous networks, where messages can be delayed or reordered. These are not edge cases. They are normal conditions on the internet.

Because its storage challenges still work when the network is asynchronous, Walrus blocks a class of attacks in which a node exploits network delays to pass verification without actually storing the data. To me, this shows the team is designing for reality, not for diagrams.

Integrity matters as much as availability

Another detail that stood out is how Walrus treats malicious clients as seriously as malicious storage nodes. The research discusses authenticated data structures that ensure what I retrieve is exactly what was stored.

This matters more than it sounds. Data that is subtly wrong can be worse than data that is missing. If I am training models, running analytics, or serving financial content, silent corruption destroys trust. Walrus clearly prioritizes integrity alongside availability.
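The general idea behind an authenticated data structure can be shown with a plain Merkle tree: commit to all stored pieces with one root hash, then let a reader check any single piece against that root. This is a generic, simplified construction for illustration (SHA-256, duplicated last node on odd-sized levels), not Walrus's actual commitment scheme.

```python
import hashlib

LEAF, NODE = b"\x00", b"\x01"  # domain-separation prefixes for leaves vs. inner nodes

def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def _next_level(level: list[bytes]) -> list[bytes]:
    if len(level) % 2:
        level = level + [level[-1]]  # duplicate the last node on odd-sized levels
    return [_h(NODE + level[i] + level[i + 1]) for i in range(0, len(level), 2)]

def merkle_root(pieces: list[bytes]) -> bytes:
    """Single hash committing to every piece."""
    if not pieces:
        raise ValueError("need at least one piece")
    level = [_h(LEAF + p) for p in pieces]
    while len(level) > 1:
        level = _next_level(level)
    return level[0]

def merkle_proof(pieces: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the bool says whether the sibling is on the right."""
    if not 0 <= index < len(pieces):
        raise IndexError("piece index out of range")
    level = [_h(LEAF + p) for p in pieces]
    proof = []
    while len(level) > 1:
        padded = level + [level[-1]] if len(level) % 2 else level
        sibling = index ^ 1
        proof.append((padded[sibling], sibling > index))
        level = _next_level(level)
        index //= 2
    return proof

def verify_piece(piece: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the path to the root; any altered byte changes the result."""
    node = _h(LEAF + piece)
    for sibling, on_right in proof:
        node = _h(NODE + node + sibling) if on_right else _h(NODE + sibling + node)
    return node == root
```

A reader who trusts only the root can reject a subtly corrupted piece without downloading everything else.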

What this means when I am building something

Because storage has clear states like registered, uploaded, certified, and active per epoch, I can write cleaner logic. I can design interfaces that wait for certification. I can trigger processes only when proofs exist. I can monitor epoch transitions instead of guessing when reliability might slip.
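The states listed above map naturally onto a small state machine. This is a simplified model under stated assumptions: the transition table, the `EXPIRED` state, and the exclusive `end_epoch` are illustrative choices, and operations such as renewal or deletion are left out.

```python
from enum import Enum

class BlobState(Enum):
    REGISTERED = "registered"
    UPLOADED = "uploaded"
    CERTIFIED = "certified"
    EXPIRED = "expired"

# Allowed transitions in this simplified model (an assumption, not the protocol's full rules).
TRANSITIONS = {
    BlobState.REGISTERED: {BlobState.UPLOADED, BlobState.EXPIRED},
    BlobState.UPLOADED: {BlobState.CERTIFIED, BlobState.EXPIRED},
    BlobState.CERTIFIED: {BlobState.EXPIRED},
    BlobState.EXPIRED: set(),
}

class Blob:
    def __init__(self, blob_id: str, end_epoch: int):
        self.blob_id = blob_id
        self.end_epoch = end_epoch  # assumed exclusive end of the paid period
        self.state = BlobState.REGISTERED

    def advance(self, new_state: BlobState) -> None:
        """Move to new_state, rejecting transitions the model does not allow."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state.value} -> {new_state.value}")
        self.state = new_state

    def is_active(self, epoch: int) -> bool:
        """Certified and still within the paid storage period."""
        return self.state is BlobState.CERTIFIED and epoch < self.end_epoch

    def on_epoch_change(self, epoch: int) -> None:
        """Expire the blob once the new epoch reaches its end_epoch."""
        if epoch >= self.end_epoch and self.state is not BlobState.EXPIRED:
            self.advance(BlobState.EXPIRED)
```

Application code can then branch on `is_active(epoch)` instead of on whether an upload call happened to return.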

The documentation even shows that I can verify availability by checking on-chain certification events instead of trusting a gateway response. That is what infrastructure means to me: stable states that software can rely on.
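Checking availability against the public record, rather than against a gateway's response, might look like the following sketch. `CertifiedEvent` and its fields are hypothetical, loosely modeled on a "blob certified" event; they are not the exact on-chain schema. The gateway's answer is deliberately not an input to the decision.

```python
from dataclasses import dataclass
from typing import Iterable

@dataclass(frozen=True)
class CertifiedEvent:
    # Hypothetical fields; not the real on-chain event layout.
    blob_id: str
    certified_epoch: int  # epoch in which certification was recorded
    end_epoch: int        # assumed exclusive end of the guaranteed period

def is_available(events: Iterable[CertifiedEvent], blob_id: str, current_epoch: int) -> bool:
    """True if some certification event for blob_id covers current_epoch."""
    return any(
        e.blob_id == blob_id and e.certified_epoch <= current_epoch < e.end_epoch
        for e in events
    )
```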

A quieter but stronger idea underneath it all

If I had to summarize the deeper idea, I would say Walrus is turning decentralized storage into something that looks like a service contract. Time is explicit. Responsibility is named. Proof has a clear moment. Attacks and failures are assumed, not ignored.

That is why serious builders keep paying attention. It is not about promising forever storage. It is about offering something measurable and verifiable that products can depend on.

Why this approach can last

Infrastructure rarely wins by sounding revolutionary. It wins by being dependable. Walrus pushes decentralized storage into that boring but powerful category by making lifecycle, accountability, and verification explicit.

If this continues, Walrus will not be known for one flashy feature. It will be known for bringing the same clarity we expect from mature systems into decentralized data. Clear ownership. Clear timelines. Clear proof points. Clear responsibility when things change.