I want to start this honestly. When I first looked into decentralized storage, I thought the main problem was speed or cost. I didn’t think much about coordination. But after reading how different storage networks fail in real conditions, one thing became clear to me: data loss usually doesn’t happen because storage is missing. It happens because coordination breaks.

This is the angle from which I understand Walrus.

Walrus is not trying to be “cloud storage on blockchain.” It is trying to answer a more practical question: how do you store large data blobs across many independent nodes while still keeping the system organized, predictable, and recoverable?

At its core, Walrus Protocol is built to store large files, called blobs, across a decentralized network in a way that remains efficient, reliable, and verifiable over time. That sentence sounds technical, but the idea behind it is simple. Walrus assumes that things will go wrong. Nodes will disconnect. Some data will disappear. The system is designed around that reality.

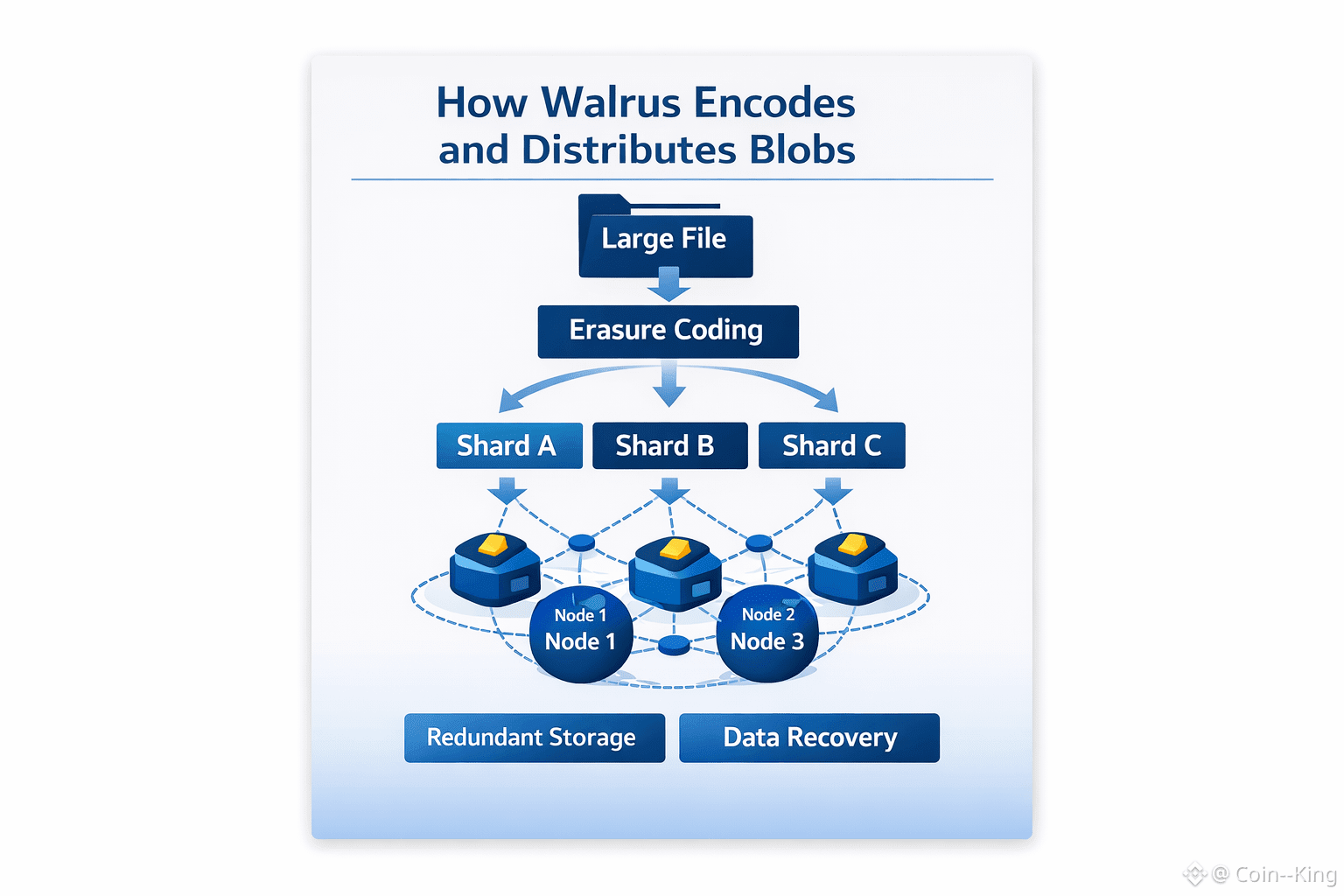

When I explain this to beginners, I usually compare it to shared responsibility. Imagine a group of people storing parts of an important document. Nobody holds the full copy, but enough people together can always rebuild it. This is exactly what erasure coding allows Walrus to do.

Instead of storing full copies of data again and again, Walrus breaks a file into pieces and spreads those pieces across storage nodes. As long as a minimum number of pieces remain available, the original data can be reconstructed. This approach reduces storage waste while keeping data availability strong, which is something decentralized systems often struggle with.
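To make the "minimum number of pieces" idea concrete, here is a small sketch I find helpful. It is not Walrus's actual encoding. It is a toy k-of-n scheme based on polynomial interpolation over a small prime field, and the names `encode`, `decode`, `k`, and `n` are my own assumptions for illustration.

```python
# Toy k-of-n erasure code (illustration only, NOT the encoding Walrus uses).
# Every group of k data bytes defines a polynomial of degree < k over GF(257);
# each piece stores that polynomial's value at one point.

P = 257  # smallest prime above 255, so every byte value is a field element


def _interpolate(points, x):
    """Evaluate at x the unique polynomial of degree < len(points) through points (mod P)."""
    total = 0
    for j, (xj, yj) in enumerate(points):
        num, den = 1, 1
        for m, (xm, _) in enumerate(points):
            if m != j:
                num = num * (x - xm) % P
                den = den * (xj - xm) % P
        # pow(den, -1, P) is the modular inverse (Python 3.8+)
        total = (total + yj * num * pow(den, -1, P)) % P
    return total


def encode(data: bytes, k: int, n: int) -> dict:
    """Split data into n pieces such that any k of them can rebuild it."""
    padded = data + bytes(-len(data) % k)  # zero-pad to a multiple of k
    pieces = {i: [] for i in range(n)}
    for start in range(0, len(padded), k):
        chunk = padded[start:start + k]
        points = list(enumerate(chunk))  # (0, d0), ..., (k-1, d_{k-1})
        for i in range(n):
            # Pieces 0..k-1 hold the data itself; the rest hold extra evaluations.
            pieces[i].append(chunk[i] if i < k else _interpolate(points, i))
    return pieces


def decode(available: dict, k: int, length: int) -> bytes:
    """Rebuild the original bytes from any k surviving pieces."""
    if len(available) < k:
        raise ValueError("not enough pieces to reconstruct")
    chosen = sorted(available.items())[:k]
    out = bytearray()
    for s in range(len(chosen[0][1])):
        points = [(idx, piece[s]) for idx, piece in chosen]
        for x in range(k):  # positions 0..k-1 are the original data bytes
            out.append(_interpolate(points, x))
    return bytes(out[:length])


blob = b"walrus blob example"
pieces = encode(blob, k=3, n=5)
survivors = {i: pieces[i] for i in (1, 2, 4)}  # pieces 0 and 3 were lost
restored = decode(survivors, k=3, length=len(blob))
```

In this toy setup each of the 5 pieces is about a third of the blob's size, so the total stored is roughly 1.67 times the original. Surviving the same two losses with plain replication would take three full copies.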

Where Walrus really becomes interesting is coordination. Storage nodes are not acting randomly. The network follows clear rules about:

- who is responsible for storing which data,
- when data must be available,
- and how availability is checked.

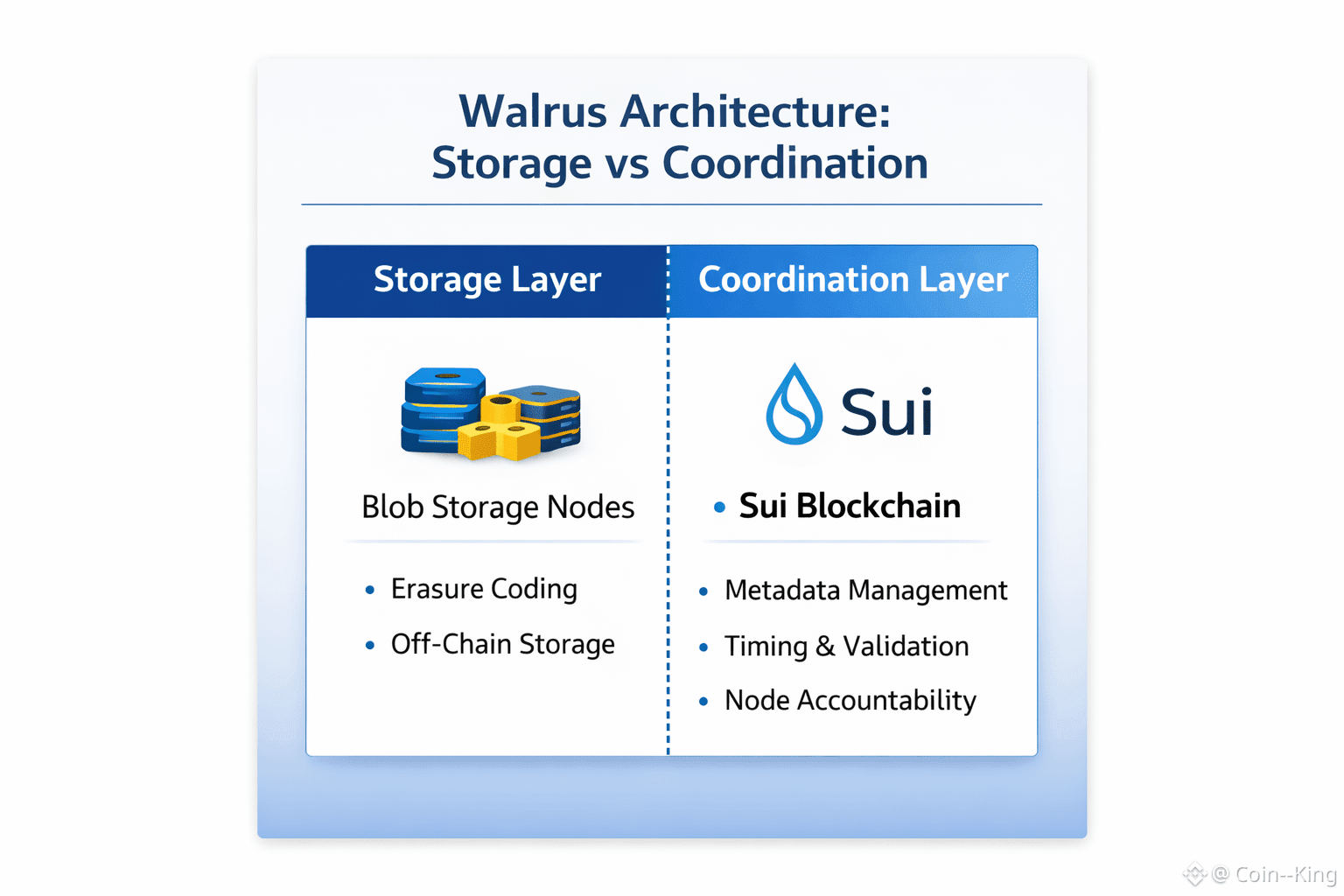

This is where the Sui blockchain comes into play. Walrus uses Sui not to store data, but to manage metadata, timing, and accountability. Large files stay off-chain. Coordination stays on-chain. From an infrastructure point of view, this separation makes sense.
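Here is a rough sketch of how I picture that split. To be clear, this is not the Sui or Walrus API. `BlobRecord`, `Coordinator`, `register`, and `check` are hypothetical names I made up, and a plain Python dictionary stands in for on-chain state. The point is only that the coordinating side keeps small metadata (who holds what, until when, and a hash to check against) and never the blob bytes themselves.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class BlobRecord:
    """Small metadata entry that would live on-chain (hypothetical layout)."""
    blob_id: str        # content hash of the whole blob
    size: int           # blob size in bytes
    expiry_epoch: int   # last epoch in which the blob must stay available
    piece_hashes: dict  # node name -> hash of the piece that node is responsible for


class Coordinator:
    """Stand-in for on-chain state: stores metadata only, never blob bytes."""

    def __init__(self):
        self.records = {}

    def register(self, blob: bytes, pieces_by_node: dict, expiry_epoch: int) -> str:
        blob_id = hashlib.sha256(blob).hexdigest()
        hashes = {node: hashlib.sha256(piece).hexdigest()
                  for node, piece in pieces_by_node.items()}
        self.records[blob_id] = BlobRecord(blob_id, len(blob), expiry_epoch, hashes)
        return blob_id

    def check(self, blob_id: str, node: str, presented_piece, current_epoch: int) -> str:
        """Answer 'is this node doing its job for this blob right now?'"""
        record = self.records[blob_id]
        if current_epoch > record.expiry_epoch:
            return "expired"   # the storage period is over, nothing is owed
        if presented_piece is None:
            return "missing"   # the node could not produce its piece
        digest = hashlib.sha256(presented_piece).hexdigest()
        return "ok" if digest == record.piece_hashes[node] else "corrupt"


blob = b"a large file would go here"
# Off-chain side: each node keeps its own piece (simple slices, for illustration).
off_chain = {"node-a": blob[:9], "node-b": blob[9:18], "node-c": blob[18:]}

chain = Coordinator()
blob_id = chain.register(blob, off_chain, expiry_epoch=10)
status = chain.check(blob_id, "node-a", off_chain["node-a"], current_epoch=3)
```

The record answers the same three questions as the rules above: the `piece_hashes` keys say who is responsible, `expiry_epoch` says until when, and `check` is one simple way availability could be verified.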

Too much on-chain data becomes expensive and slow. Too little coordination becomes chaotic. Walrus sits in the middle.

I’ve watched many Web3 projects fail because they tried to put everything on the blockchain. Walrus doesn’t do that. It uses the blockchain where it adds value and avoids it where it doesn’t. That tells me this project is designed by people who understand systems, not hype cycles.

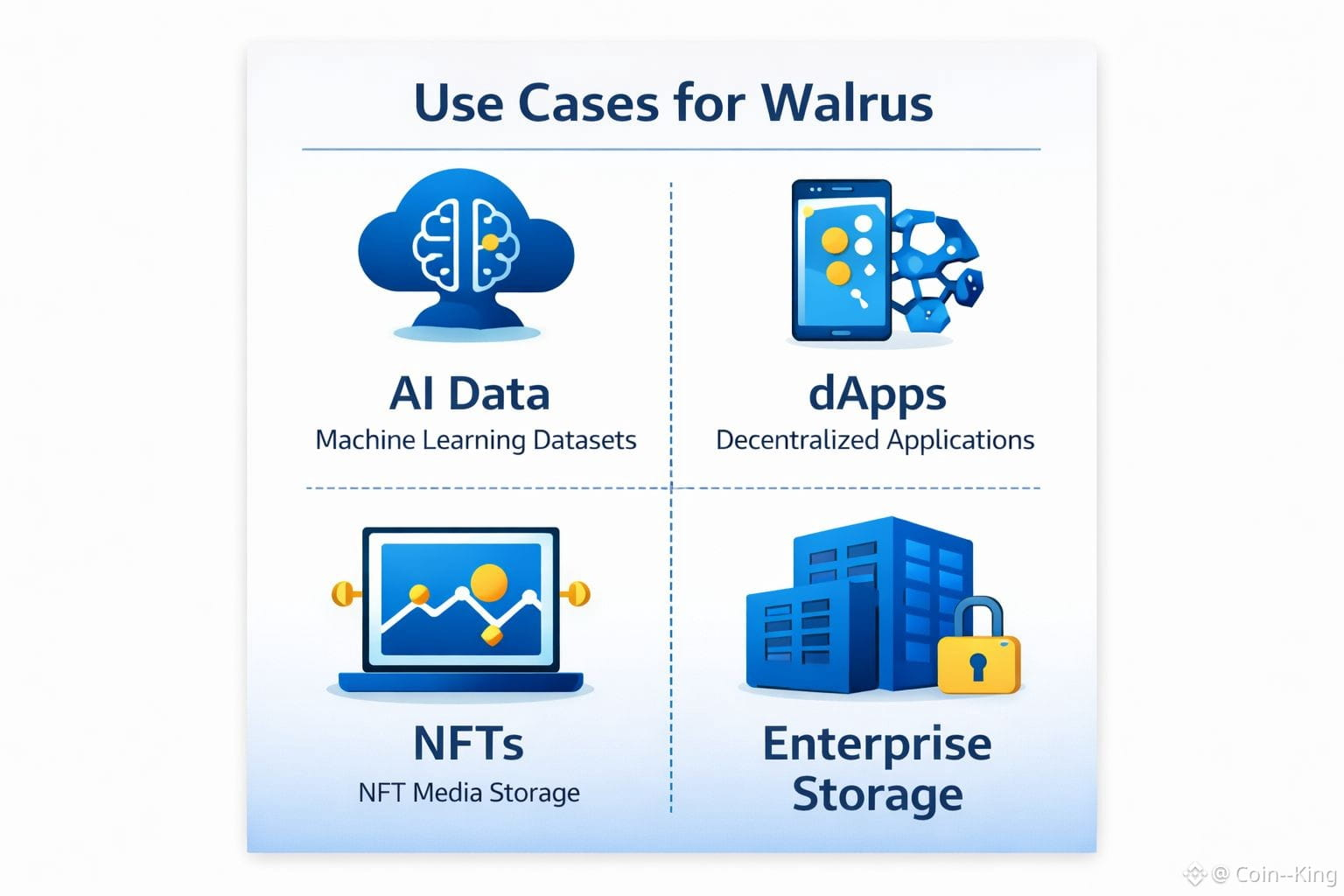

The real value of this design shows up in real use cases. AI datasets are large and expensive to lose. Decentralized applications (dApps) need persistent data. NFT media should remain accessible long after minting. Even enterprises are now looking for storage that cannot be censored or controlled by a single provider. Walrus is clearly built with these scenarios in mind.

What I personally respect about Walrus is that it feels like infrastructure. It doesn’t try to sound exciting. It tries to be correct. It accepts that decentralized networks are messy and builds a system that still works under pressure.

For beginners, Walrus (WAL) is a good example of how decentralized storage is evolving.

It makes one thing clear: decentralization isn’t just about putting data in many places. It’s about deciding who is responsible, making sure data stays available, and keeping everything coordinated as time passes.

Based on my experience studying these systems, this move from basic storage to proper coordination is where decentralized infrastructure is truly heading.