The more time I spend looking at Walrus Protocol, the more it feels like one of those projects that quietly becomes the foundation for everything else while nobody is paying attention. At first you think it is just another storage network, maybe a slightly smarter version of the usual decentralized storage systems. Then you go deeper and realize Walrus is not simply trying to beat them at their own game. It is building something that treats data as a living part of the chain instead of a file thrown onto a distant server and forgotten. It feels like a project that already understands where Web3, AI, gaming, identity, social data, and creator ecosystems are heading, and is preparing the ground for that future.

What really changes your perspective is how Walrus makes data programmable. On most networks, storing anything bigger than a small file means moving it off chain and hoping that some gateway or server stays online. Walrus takes a different approach. The bytes live on Walrus storage nodes, but each blob and the storage it occupies are represented as objects on Sui, the chain Walrus uses for coordination. That makes your data part of your on-chain world: you can version it, verify it, reference it from smart contracts, attach logic to it, and build applications around it. It is the closest thing we have seen to storage that genuinely behaves like state.
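
To make "version it, verify it, reference it" concrete, here is a minimal sketch. It assumes a hypothetical on-chain record, `VersionedAsset`, that stores the ID of each blob version. It also uses plain SHA-256 as a stand-in content-derived ID. Walrus computes its real blob IDs from the encoded data, so `blob_id`, `verify`, and `VersionedAsset` are illustrative names, not the Walrus API. The point is that a reader who fetches the bytes can recompute the ID and detect tampering without trusting whoever served them.

```python
import hashlib
from dataclasses import dataclass, field


def blob_id(data: bytes) -> str:
    """Hypothetical content-derived ID. SHA-256 is a stand-in here; Walrus
    derives its real blob IDs from the encoded data."""
    return hashlib.sha256(data).hexdigest()


def verify(data: bytes, expected_id: str) -> bool:
    """A reader recomputes the ID from the bytes it fetched and compares."""
    return blob_id(data) == expected_id


@dataclass
class VersionedAsset:
    """Hypothetical on-chain record pointing at successive blob versions."""
    name: str
    versions: list[str] = field(default_factory=list)

    def publish(self, data: bytes) -> str:
        """Record the new version's ID and return it (the upload itself is not modeled)."""
        bid = blob_id(data)
        self.versions.append(bid)
        return bid

    def latest(self) -> str:
        return self.versions[-1]


asset = VersionedAsset("game-map")
v1 = asset.publish(b"map v1")
v2 = asset.publish(b"map v2")
print(len(asset.versions), asset.latest() == v2)  # → 2 True
print(verify(b"map v1", v1), verify(b"tampered", v1))  # → True False
```

Because older IDs stay in the record, an application can pin a specific version or always follow `latest()`. Either way, it can verify what it receives.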

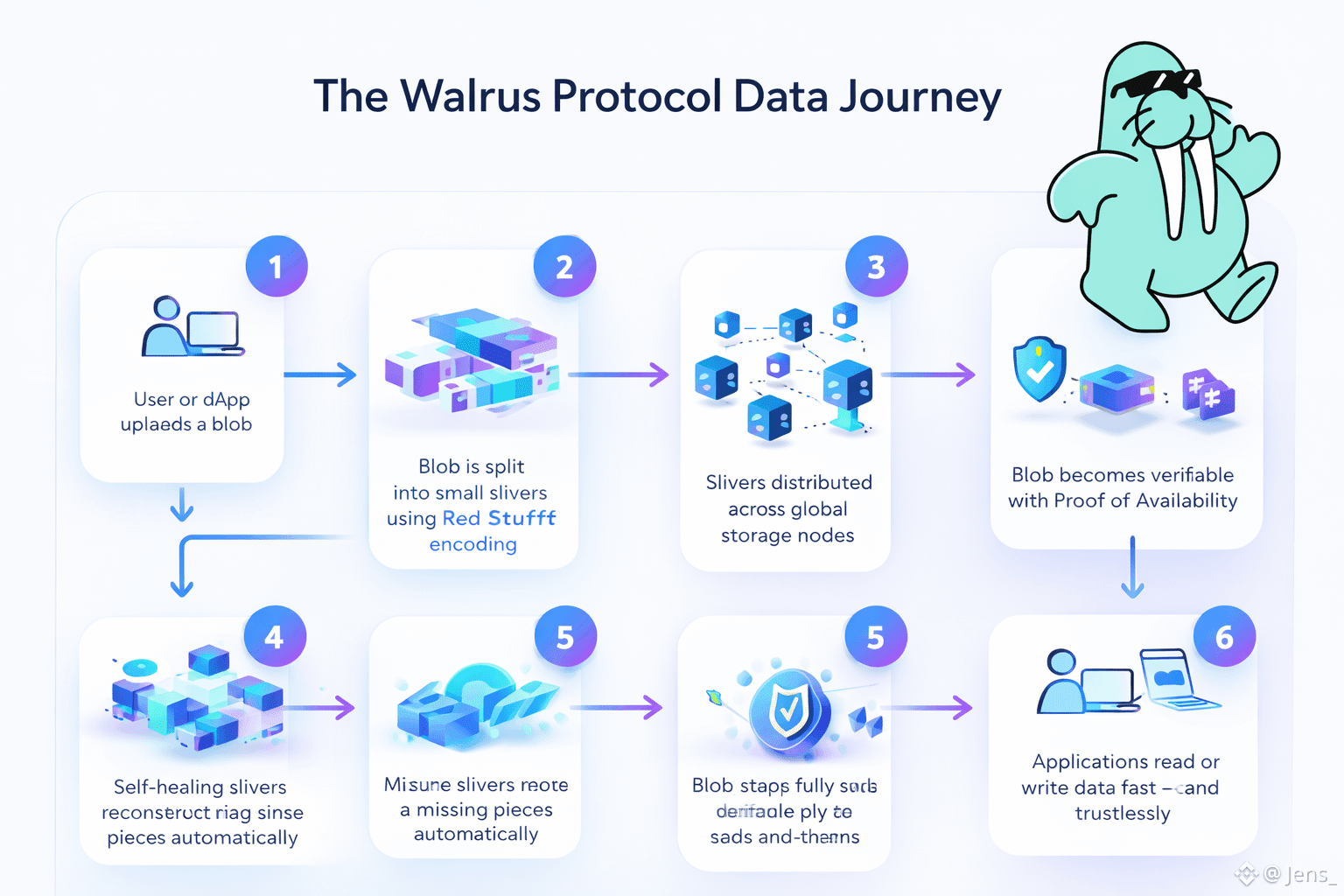

Under the hood, Walrus relies on Red Stuff, its two-dimensional erasure coding scheme. Instead of storing many full copies of each blob, Walrus encodes it into small slivers arranged along two dimensions and spreads them across storage nodes. If some slivers go missing, the network can reconstruct them from the remaining fragments without any node holding a full replica. That lowers overhead, reduces cost, and gives Walrus a form of self-healing: a node that loses its slivers can rebuild them by downloading an amount of data proportional to what it lost, rather than the whole blob. The Walrus paper puts the resulting replication factor at about 4.5x, a fraction of what full replication needs for similar fault tolerance. When you imagine AI models, gaming assets, esports archives, prediction market data, identity proofs, or creator content, you immediately understand why this approach matters.
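
Why does a two-dimensional layout help? A toy example shows the intuition. The sketch below is deliberately simplified and is not Red Stuff. It uses a single XOR parity per row and per column, with small integers standing in for byte chunks. Red Stuff itself uses proper erasure codes along both dimensions and distributes the resulting slivers across many storage nodes. The toy still shows the core property: a lost symbol can be rebuilt along its row, and an entire lost row can be rebuilt along the columns.

```python
from functools import reduce
from operator import xor
from typing import Optional

# Toy 2D layout: each inner list is a row of data symbols (small ints stand in
# for byte chunks). None marks a symbol that has been lost.
Grid = list[list[Optional[int]]]


def encode(grid: Grid) -> tuple[list[int], list[int]]:
    """Compute one XOR parity symbol per row and one per column."""
    row_parity = [reduce(xor, row) for row in grid]
    col_parity = [reduce(xor, col) for col in zip(*grid)]
    return row_parity, col_parity


def recover_from_row(grid: Grid, row_parity: list[int], r: int, c: int) -> int:
    """Rebuild cell (r, c) from the surviving cells in its row plus the row parity."""
    others = [v for j, v in enumerate(grid[r]) if j != c]
    return reduce(xor, others, row_parity[r])


def recover_from_col(grid: Grid, col_parity: list[int], r: int, c: int) -> int:
    """Rebuild cell (r, c) from the surviving cells in its column plus the column parity."""
    others = [grid[i][c] for i in range(len(grid)) if i != r]
    return reduce(xor, others, col_parity[c])


data = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
rows, cols = encode(data)

# Lose a single symbol: its row alone is enough to rebuild it.
damaged = [row[:] for row in data]
damaged[1][2] = None
print(recover_from_row(damaged, rows, 1, 2))  # → 6

# Lose an entire row: every symbol in it is rebuilt along its column instead.
damaged = [row[:] for row in data]
damaged[0] = [None, None, None]
print([recover_from_col(damaged, cols, 0, c) for c in range(3)])  # → [1, 2, 3]
```

Each repair only reads one row or one column, not the whole grid. That is the intuition behind recovery cost scaling with the lost data rather than the full blob.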

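The economic model also feels more mature than what we have seen before. Instead of vague storage promises, Walrus lets you buy clear, time-bound storage resources: a fixed amount of space reserved for a set number of epochs. You register a blob against such a resource and upload its slivers to the storage nodes. Once enough nodes confirm they hold their slivers, the resulting certificate is posted on chain, and that moment is the blob's Point of Availability. From then on, the network is committed to keeping the blob retrievable until the storage resource expires. Whether a blob has reached availability, and until which epoch it stays available, can be checked directly from on-chain state. You never have to trust a centralized interface to tell you whether your data is safe. You see it for yourself.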
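
The lifecycle above can be modeled in a few lines. This is a minimal sketch under stated assumptions. `StorageResource`, `Blob`, `confirm`, and `is_available` are hypothetical names, not Walrus's on-chain types. Epochs are plain integers, and the resource covers a half-open range of epochs. The quorum is assumed to be 2f+1 confirmations out of n = 3f+1 shards. Posting the certificate on chain is folded into the moment the quorum is reached.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class StorageResource:
    """Hypothetical model: capacity reserved for epochs [start_epoch, end_epoch)."""
    size_bytes: int
    start_epoch: int
    end_epoch: int  # exclusive: the resource has expired at this epoch

    def covers(self, epoch: int) -> bool:
        return self.start_epoch <= epoch < self.end_epoch


@dataclass
class Blob:
    """Hypothetical blob registered against a storage resource."""
    size_bytes: int
    resource: StorageResource
    confirmations: int = 0
    poa_epoch: Optional[int] = None  # epoch at which the Point of Availability was reached

    def __post_init__(self) -> None:
        if self.size_bytes > self.resource.size_bytes:
            raise ValueError("storage resource is too small for this blob")

    def confirm(self, epoch: int, n_shards: int) -> None:
        """Record one shard's confirmation; reach PoA once a quorum has confirmed."""
        self.confirmations += 1
        quorum = 2 * n_shards // 3 + 1  # assumed 2f+1 quorum for n = 3f+1 shards
        if self.poa_epoch is None and self.confirmations >= quorum:
            self.poa_epoch = epoch

    def is_available(self, epoch: int) -> bool:
        """Available only after PoA and while the storage resource is still valid."""
        return (
            self.poa_epoch is not None
            and self.poa_epoch <= epoch
            and self.resource.covers(epoch)
        )


res = StorageResource(size_bytes=1_000_000, start_epoch=5, end_epoch=10)
blob = Blob(size_bytes=400_000, resource=res)
print(blob.is_available(5))  # → False: no Point of Availability yet
for _ in range(7):  # 7 of 10 shards confirm, matching 2f+1 with f = 3
    blob.confirm(epoch=5, n_shards=10)
print(blob.poa_epoch, blob.is_available(7), blob.is_available(10))  # → 5 True False
```

Keeping availability as a function of on-chain facts (when PoA happened and when the resource ends) is what lets anyone check a blob's status without asking a gateway.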

As Walrus has moved into production, the usage numbers have become even more interesting: millions of blobs stored, over four and a half million according to recent updates, and tens of thousands of gigabytes actively in use. A newer update from the project itself hit me on a deeper level. They said they have seen things. Blobs you would not believe. More than 332 terabytes of data stored. AI chatbots keeping their memories on its storage nodes. Not taking breaks. Not sleeping. Just verifying data so nothing disappears. And in the middle of the night, even Walrus wonders what it means to be permanent, what it means to be the foundation for companies, creators, teams, or entire networks, and to carry data that might matter fifty years from now. Then it jokes that none of this is that deep, because it is Walrus and its job is simply to keep storing blobs. Somehow this small update captures exactly why people are drawn to this network. It is technical but also strangely human.

And when you look at who relies on Walrus, the story becomes even clearer. AI and data-driven teams like Baselight, Yotta Labs, Gata, FLock, and OpenGradient use Walrus for training data, embeddings, and model checkpoints. Agent frameworks like Talus and elizaOS depend on Walrus for persistent memory, which becomes more important as agents grow smarter and more autonomous. Gaming studios use Walrus to store massive 3D assets; Claynosaurz and Everlyn already do this. Team Liquid uses Walrus to preserve esports archives that must remain safe and accessible long term. Identity and social data platforms like Humanity Protocol and Itheum rely on Walrus to store proofs, biometric data, and social graphs. The common thread is simple: every one of these teams handles data that has to stay available and verifiable for the long term, and that is exactly the workload Walrus was built for.

Performance is another reason people keep choosing it. Walrus is built for high-throughput uploads and fast retrieval, at a scale where datasets are measured in terabytes, not megabytes. Because each node stores only its own slivers rather than full copies, uploads and reads are spread across many nodes in parallel. Traditional DePIN systems often hit bottlenecks under real load, while Walrus behaves more like a modern distributed storage engine. When I look at it, I see a system built for the kind of workloads the next decade will require.

The AI angle deserves its own space because it might be the biggest unlock Walrus enables. Centralized storage works for traditional apps, but the future of AI will depend on neutral storage that can be verified, shared, and accessed without trusting a single corporation. Walrus gives AI models and agents a place to store memory, datasets, traces, and state in a way that is transparent, verifiable, and persistent. Layering payment logic, data rules, and access controls on top through on-chain contracts turns Walrus into a full data layer for AI-native applications.

The confidence the ecosystem has shown in Walrus is also reflected in its funding. A $140 million raise led by Standard Crypto, with participation from a16z crypto, Electric Capital, and Franklin Templeton, does not happen by accident. These firms understand infrastructure at a level beyond hype cycles, and their backing signals how seriously they take Walrus as a foundation layer.

As I look at Walrus now, I see a network that is not chasing narratives. It is anchoring them. It is building the storage foundation for AI agents, gaming worlds, esports content, identity protocols, data markets, creative ecosystems, and prediction engines. It is doing the work most people do not see, the silent background work that makes everything else possible.

Walrus feels like one of those protocols that will be remembered later as the moment decentralized storage finally stepped into maturity. A network that keeps data alive, verifies it, protects it, and treats it like something that will matter long after companies change, apps evolve, and technologies shift. A protocol that does not sleep, does not rest, and does not forget. Because in the end, it is Walrus, and its entire identity is built around one simple promise: your data will not disappear.